Mind-Blowing AI Regulations in the UK: Ethics, Governance, and the Future

Key Takeaways

- The UK is pioneering mind-blowing AI regulations focusing on innovation alongside ethical governance.

- Key principles include fairness, accountability, transparency, and reliability, underpinning responsible AI development.

- The proposed 2025 AI Regulation Bill would introduce mandatory impact assessments and a central AI authority.

- AI transparency laws would give individuals the right to an explanation of AI-driven decisions.

- Emphasis is placed on AI-powered bias detection tools to ensure fairness, especially in high-risk sectors.

- Challenges include coordinating decentralized regulation and balancing compliance burdens with innovation.

- The UK aims to set a global standard with its flexible, principles-based approach to AI governance.

Table of Contents

- Mind-Blowing AI Regulations in the UK: Ethics, Governance, and the Future

- Key Takeaways

- 1. Understanding AI Ethics in the UK

- 2. Breaking Down Mind-Blowing AI Regulations

- 3. Responsible AI Development: From Theory to Practice

- 4. AI Transparency Laws: Building Trust Through Openness

- 5. AI-Powered Bias Detection: Tools and Policies

- 6. Challenges in Implementing AI Governance

- 7. The Future of AI Policy in the UK

- Conclusion

- Frequently Asked Questions (FAQ)

The United Kingdom is rapidly becoming a focal point in the global conversation on artificial intelligence, not just for its technological advancements but for its ambitious approach to regulation. The nation is charting a unique course, developing mind-blowing AI regulations designed to foster innovation while embedding strong ethical principles and robust governance structures. By championing fairness, ensuring accountability, and building public trust, the UK aims to lead the way in responsible AI development, establish clear AI transparency laws, and pioneer the use of AI-powered bias detection. This article delves deep into the UK’s groundbreaking regulatory landscape, exploring how these policies strive to balance the immense potential of AI with necessary societal safeguards, and what implications this holds for businesses, developers, and every citizen interacting with AI systems.

1. Understanding AI Ethics in the UK

At the heart of the UK’s strategy lies a strong commitment to AI ethics. This isn’t just a vague ideal; it’s a framework built on core principles intended to guide the development and deployment of AI systems. The government and regulatory bodies emphasize that ethical AI must be:

- Fair: AI should not produce discriminatory outcomes or perpetuate existing biases.

- Transparent: The decision-making processes of AI systems should be understandable, at least to the extent necessary for accountability.

- Accountable: Clear lines of responsibility must exist for the outcomes of AI systems.

- Safe and Reliable: AI systems must function as intended without causing unintended harm.

These principles aim to ensure that AI aligns with public values and respects fundamental human rights.

Key Ethical Challenges Driving Policy

Several pressing ethical concerns are shaping the UK’s regulatory response:

- Privacy Risks: The appetite of AI for vast datasets creates significant privacy concerns. Technologies like facial recognition, while potentially useful for security, demand careful regulation to prevent misuse and protect individual liberties. The Information Commissioner’s Office (ICO) plays a key role here.

- Accountability Gaps: Determining responsibility when an AI system causes harm—be it denying a loan unfairly or misdiagnosing a patient—is complex. Is it the developer, the deploying company, or the data provider? This lack of clarity is a major hurdle. This challenge resonates beyond traditional tech, impacting creative fields too, as highlighted by discussions around Wizards of the Coast’s stance on AI-generated artwork.

- Transparency Demands: Many advanced AI models operate as “black boxes,” making their internal logic difficult, if not impossible, to fully comprehend. The UK is pushing for explainable AI (XAI), requiring organizations to provide clear justifications for AI-driven decisions, especially those significantly impacting individuals. This is crucial for building trust and enabling effective recourse.

Sources: Read more insights from Diligent, the official UK Government Whitepaper, and Deloitte.

2. Breaking Down Mind-Blowing AI Regulations

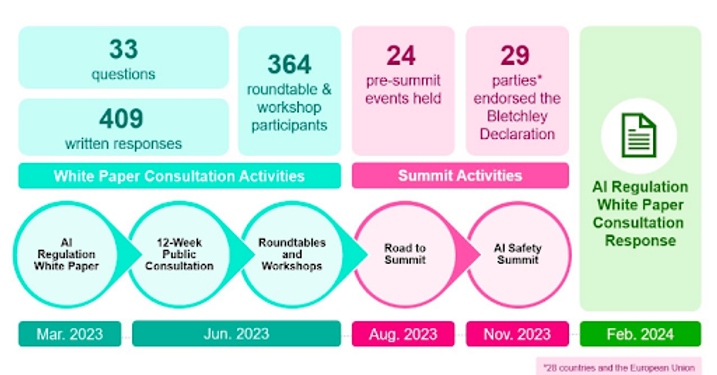

The UK is formalizing its approach through significant legislative efforts, notably the proposed 2025 AI Regulation Bill. While still evolving, this bill signals a move towards more concrete governance, particularly for high-risk AI applications.

Key Features of the Bill

Several radical measures are anticipated:

- Mandatory AI Impact Assessments (AIAs): Before deploying AI systems in critical sectors like healthcare, finance, transport, and justice, organizations will likely need to conduct rigorous assessments. These AIAs would evaluate potential risks, including algorithmic bias, safety failures, security vulnerabilities, and societal impacts.

- Centralized AI Authority: A dedicated regulatory body is expected to be established. This authority would oversee the implementation of AI regulations, monitor compliance across sectors, investigate potential breaches, and have the power to enforce penalties, including substantial fines.

- Sector-Specific Rules: Recognizing that AI risks vary by context, the framework allows existing regulators (like the MHRA for healthcare, FCA for finance) to develop tailored guidelines. For example, AI used in medical diagnosis will need to meet stringent NHS ethics standards and clinical safety requirements.

UK vs. Global Frameworks

The UK’s approach distinguishes itself from the EU’s AI Act, which uses a stricter, risk-based categorization (unacceptable, high, limited, minimal risk). In contrast, the UK favors a *flexible, principles-based, pro-innovation* framework. This involves setting core ethical principles (fairness, accountability, etc.) and empowering existing sectoral regulators to interpret and apply these principles within their domains. The goal is to create rules that can adapt quickly as technology evolves, avoiding rigid regulations that might stifle innovation. This adaptability is seen as essential given the pace of advancement across the field, as covered in “10 Cutting Edge AI Technologies Shaping the Future.”

Sources: Explore details from Kennedys Law and Clyde & Co.

3. Responsible AI Development: From Theory to Practice

The UK regulatory landscape increasingly treats responsible AI development as a requirement, not just an ethical aspiration. Businesses developing or deploying AI systems are expected to integrate ethical considerations throughout the AI lifecycle.

Compliance Steps for Businesses

Organizations operating in the UK need to prepare for:

- Conducting Rigorous Risk Assessments: Systematically identifying, analyzing, and mitigating potential harms associated with their AI systems (e.g., bias, safety, security).

- Establishing Internal Governance: Appointing dedicated personnel, potentially an AI Ethics Officer or committee, to oversee compliance, ethical reviews, and internal policies related to AI.

- Maintaining Thorough Documentation: Keeping detailed records of data sources used for training, model design choices, testing procedures, performance metrics, and decision-making processes to ensure transparency and traceability.

- Implementing Robust Monitoring: Continuously monitoring AI systems post-deployment to detect performance degradation, emerging biases, or unforeseen negative consequences.
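
The documentation and monitoring steps above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed compliance format: the `ModelRecord` fields, the `check_accuracy` helper, the 0.05 tolerance, and the example system name and data file are all hypothetical choices made for this sketch.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one deployed AI system."""
    name: str
    training_data_sources: list[str]
    baseline_accuracy: float      # accuracy measured at sign-off
    tolerance: float = 0.05       # allowed drop before review (assumed value)
    alerts: list[str] = field(default_factory=list)


def check_accuracy(record: ModelRecord, outcomes: list[bool]) -> bool:
    """Return True if live accuracy is within tolerance of the baseline.

    `outcomes` holds one boolean per recent prediction: True if the
    prediction was later confirmed correct, False otherwise.
    """
    if not outcomes:
        raise ValueError("no outcomes to evaluate")
    live_accuracy = mean(1.0 if ok else 0.0 for ok in outcomes)
    drop = record.baseline_accuracy - live_accuracy
    if drop > record.tolerance:
        # Keep a written trace so the degradation is auditable later.
        record.alerts.append(
            f"accuracy fell from {record.baseline_accuracy:.2f} "
            f"to {live_accuracy:.2f}"
        )
        return False
    return True


# Illustrative data only: 8 of 10 recent predictions were correct.
record = ModelRecord(
    name="loan-triage-v1",
    training_data_sources=["applications_2022.csv"],
    baseline_accuracy=0.90,
)
healthy = check_accuracy(record, [True] * 8 + [False] * 2)
```

Here `healthy` comes back `False` and one alert is logged, because live accuracy has dropped by more than the assumed tolerance. A real system would track more than accuracy, but the pattern of a stored baseline, a periodic check, and a written trail is the same.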

Case Study: NHS AI Lab

The NHS AI Lab serves as a prime example of responsible AI in practice. It has established stringent guidelines for AI tools used in healthcare. For instance, algorithms intended for cancer diagnosis must undergo independent audits verifying their accuracy, reliability, and fairness across diverse patient demographics (age, ethnicity, gender). This ensures that the benefits of AI do not come at the cost of exacerbating health inequalities. This level of scrutiny is becoming increasingly relevant across sectors, including consumer technology such as AI in smart home devices.

Sources: Find regulatory outlooks at Osborne Clarke and framework analysis by Deloitte.

4. AI Transparency Laws: Building Trust Through Openness

Central to building public trust are the UK’s burgeoning AI transparency laws. These laws aim to demystify AI decision-making and empower individuals affected by automated systems.

Right to Explanation

A cornerstone of UK transparency efforts is the strengthening of the ‘right to explanation’:

- Individuals significantly impacted by an AI-driven decision (e.g., loan denial, job application rejection, welfare benefit calculation) will have the right to request a clear, understandable explanation of how the decision was reached.

- Organizations deploying such systems must be prepared to provide these explanations. This often involves summarizing the main factors driving the decision and the logic used by the algorithm, potentially requiring investment in explainable AI (XAI) techniques. This mirrors transparency expectations seen with customer-facing technologies like AI chatbots in customer service.
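
As a rough illustration of "summarizing the main factors driving the decision," the sketch below scores an applicant with a simple linear model and ranks each feature by how much it moved the score. The feature names, weights, threshold, and the `explain_decision` function are invented for this example; real credit or hiring models are usually more complex and need dedicated XAI techniques.

```python
def explain_decision(weights, applicant, threshold, top_n=3):
    """Score an applicant with a linear model and list the main factors.

    `weights` and `applicant` map feature names to numbers.
    All names and values used here are illustrative assumptions.
    """
    # Contribution of each feature = weight * feature value.
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = sum(contributions.values())
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return {
        "approved": score >= threshold,
        "score": score,
        "main_factors": ranked[:top_n],
    }


weights = {"income_k": 0.5, "missed_payments": -10.0, "years_at_address": 1.0}
applicant = {"income_k": 40, "missed_payments": 3, "years_at_address": 2}
result = explain_decision(weights, applicant, threshold=0.0, top_n=2)
```

For this applicant the score is negative, so the application is declined, and the top factor returned is `missed_payments`. That ranked list is the raw material an organization could turn into a plain-language explanation.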

Global Alignment and Public Sector Transparency

The UK’s transparency requirements show alignment with international standards, such as the provisions on automated decision-making in Article 22 of the EU’s GDPR. Furthermore, there’s a growing push for transparency in the public sector use of AI, particularly in sensitive areas like law enforcement and justice. Proposals include mandatory public consultations and registers of algorithms used by public bodies.

Sources: Perspectives on AI ethics can be found at the UK Cybersecurity Council and policy updates at Global Policy Watch.

5. AI-Powered Bias Detection: Tools and Policies

Addressing algorithmic bias is a critical component of the UK’s ethical AI framework. The country is actively promoting and, in some cases, mandating the use of AI-powered bias detection tools and techniques to audit algorithms for unfair discrimination.

Innovations in Bias Auditing

The UK is fostering innovation in how bias is identified and mitigated:

- Public-private partnerships, like those involving the NHS, are developing and utilizing sophisticated tools to scan healthcare algorithms for biases related to race, gender, age, or socioeconomic status.

- Financial regulators (like the FCA) are pushing financial institutions to employ AI auditing tools to rigorously check credit-scoring models, fraud detection systems, and other financial algorithms for fairness and discriminatory patterns.

- Research bodies are developing standardized metrics and testing methodologies for assessing algorithmic fairness across different contexts.
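
To make "standardized metrics" concrete, here is a minimal sketch of one widely used fairness check: comparing approval rates between groups. The group labels and decisions are made-up data, and the 0.8 cut-off is the "four-fifths" heuristic from US employment practice, used here only as an assumed threshold. It is not a UK legal standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to its approval rate.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest


# Illustrative data: group A is approved 8 times in 10, group B 5 in 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed review threshold, not a regulatory figure
```

With this data the ratio is 0.625, so the model would be flagged for closer review. A single metric like this cannot prove or rule out discrimination; it is a screening signal that tells auditors where to look.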

Regulatory Mandates

Regulation is moving beyond encouragement towards explicit requirements:

- Companies using AI for recruitment and HR purposes face increasing pressure to demonstrate that their tools do not unfairly disadvantage candidates from specific demographic groups. Proof of bias audits may become mandatory.

- Financial institutions deploying AI for loan approvals or insurance underwriting could face significant fines if their algorithms are found to exhibit systemic bias against protected characteristics, a concern also relevant for AI-driven marketing tools that segment audiences.

Sources: Learn about governance paths from Policy Review and legal insights from Clyde & Co.

6. Challenges in Implementing AI Governance

Despite the UK’s ambitious plans for AI ethics and its mind-blowing AI regulations, significant hurdles remain in translating policy into effective practice.

Key Challenges

- Decentralized Enforcement: The UK’s model relies on multiple existing sectoral regulators (FCA, MHRA, Ofcom, ICO, etc.) to apply AI principles within their domains. While allowing for sector-specific expertise, this creates potential challenges in ensuring consistency, avoiding regulatory gaps, and coordinating enforcement actions, especially for AI systems spanning multiple sectors. Defining the role and powers of the proposed central AI authority relative to these existing bodies is crucial.

- Innovation vs. Compliance Burden: While aiming for a “pro-innovation” approach, the practicalities of compliance—conducting impact assessments, implementing explainability features, undergoing audits—can be resource-intensive. Startups and smaller businesses, vital to the UK’s tech ecosystem, may struggle with the associated costs and complexities, potentially slowing down innovation or creating barriers to entry. Striking the right balance is paramount.

- Pace of Technological Change: AI technology evolves at breakneck speed. Regulators face the constant challenge of keeping policies relevant and effective without becoming quickly outdated or inadvertently hindering beneficial advancements. The principles-based approach is intended to help, but its practical application requires continuous monitoring and adaptation.

- Skills Gap: Effective regulation requires expertise not only in law and policy but also in the technical intricacies of AI. There is a need to build capacity within regulatory bodies and across industries to understand, assess, and govern complex AI systems effectively.

Sources: Discussions on the regulation gap are found at Kennedys Law, and the UK action plan is analyzed by Clifford Chance.

7. The Future of AI Policy in the UK

The UK’s journey with mind-blowing AI regulations is just beginning. The current framework is designed to be dynamic, evolving in response to technological advancements, emerging risks, and societal feedback.

Predicted Trends

Looking ahead, several trends are likely to shape UK AI policy:

- Stricter Enforcement and Penalties: As the framework matures, expect more robust enforcement actions and potentially higher fines for non-compliance, especially for violations involving high-risk AI systems. Fines could mirror GDPR levels, which reach up to 4% of global annual turnover for the most severe breaches.

- Mandatory Certifications and Standards: There may be a move towards formal certification schemes for certain types of AI systems or even for AI developers and auditors, particularly in safety-critical areas. Government-approved standards could become prerequisites for market access.

- Focus on AI Assurance: Expect increased emphasis on techniques and services that provide verifiable assurance of an AI system’s properties (e.g., fairness, robustness, security), with third-party auditing and testing likely to become more common.

- Addressing Frontier AI Risks: As AI capabilities advance towards Artificial General Intelligence (AGI), regulations will need to grapple with more profound, potentially existential risks, requiring international collaboration.

Global Influence

The UK government explicitly aims for its approach to influence global AI governance standards. By demonstrating that a flexible, pro-innovation model can effectively manage risks without stifling economic growth, the UK hopes to offer an attractive alternative to more rigid regulatory regimes. Its success could shape international norms, particularly in fostering ethical AI ecosystems that balance rapid technological progress, seen in fields like quantum computing, with essential safeguards.

Sources: Future directions are discussed by Clyde & Co and outlined in the UK Government Whitepaper.

Conclusion

The UK is embarking on an ambitious project to craft mind-blowing AI regulations that marry technological progress with deep ethical considerations. By weaving principles of fairness, transparency, and accountability into the fabric of its regulatory approach, the nation is making a bold statement: innovation and robust governance *can* and *must* coexist. The emphasis on responsible AI development, the establishment of clear AI transparency laws, and the proactive use of AI-powered bias detection signal a commitment to building an AI future that benefits all of society.

However, the path forward involves navigating significant challenges, from coordinating regulation across diverse sectors to ensuring compliance doesn’t stifle the very innovation it seeks to guide. For businesses, developers, and policymakers, staying informed and agile will be crucial. The UK’s evolving AI policies are not just domestic rules; they represent a significant contribution to the global dialogue on how to govern transformative technologies. In the UK, ethical AI is rapidly transitioning from a guideline to a legal imperative, shaping a future where artificial intelligence serves humanity, responsibly and reliably.

Frequently Asked Questions (FAQ)

1. What is the main goal of the UK’s AI regulations?

The primary goal is to foster innovation and the economic benefits of AI while ensuring these technologies are developed and used safely, ethically, and in a way that builds public trust. The UK aims for a “pro-innovation” approach grounded in principles like fairness, accountability, transparency, and safety, rather than rigid, prescriptive rules.

2. How does the UK’s AI regulation approach differ from the EU’s AI Act?

The key difference lies in the structure. The EU AI Act uses a risk-based classification system (unacceptable, high, limited, minimal risk) with specific, detailed requirements for each category, creating a more centralized and harmonized legal framework. The UK opts for a principles-based, context-specific approach, relying on existing sectoral regulators to interpret and apply core AI ethics principles within their domains, aiming for greater flexibility and adaptability.

3. What is ‘Explainable AI (XAI)’ and why is it important in UK regulations?

Explainable AI (XAI) refers to methods and techniques that make the decision-making process of AI systems understandable to humans. It’s crucial in the UK’s regulatory context because it supports the principles of transparency and accountability. Under UK proposals, individuals affected by significant AI decisions may have a ‘right to explanation,’ requiring organizations to clearly articulate how an AI reached its conclusion. This builds trust and allows for meaningful challenge or recourse.

4. What are AI Impact Assessments (AIAs) under the proposed UK rules?

AI Impact Assessments are structured processes that organizations would need to conduct before deploying certain AI systems, particularly those deemed high-risk (e.g., in healthcare, finance, recruitment). The AIA would involve identifying, assessing, and documenting potential risks associated with the AI, including bias, safety flaws, security vulnerabilities, privacy infringements, and broader societal impacts. Mitigation strategies would also need to be outlined.

5. What responsibilities do businesses have regarding AI bias under UK guidelines?

Businesses are expected to take proactive steps to identify and mitigate unfair bias in their AI systems. This includes carefully selecting and examining training data, using bias detection tools during development and testing, conducting fairness audits (potentially using third parties), and continuously monitoring systems post-deployment. Regulatory mandates, especially in sectors like finance and employment, require demonstrating that AI tools do not result in unlawful discrimination against protected groups.