Navigating the Complexities of AI Integration


Key Takeaways

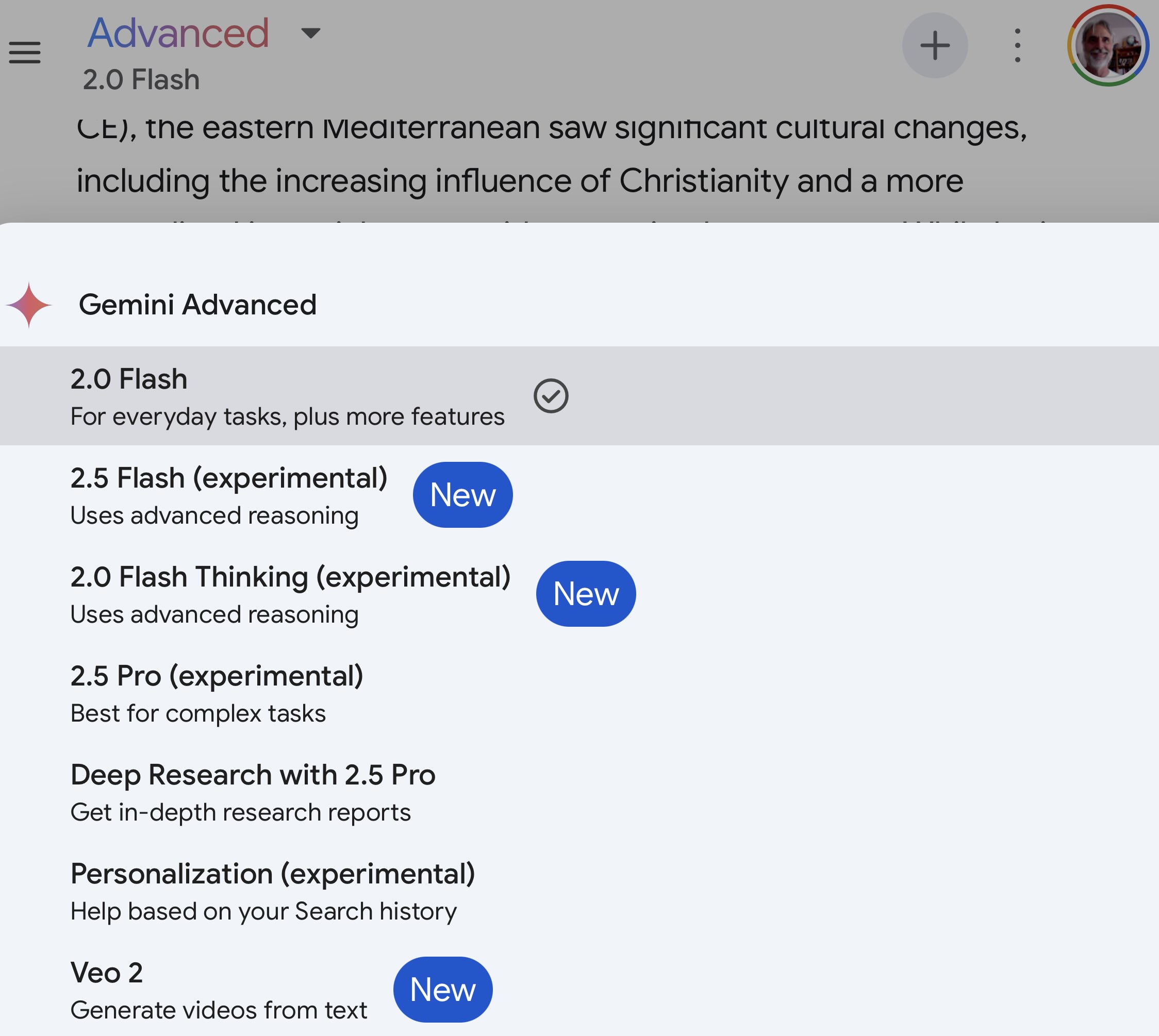

- AI models like Gemini Pro, Perplexity AI, and Azure OpenAI are rapidly being integrated to enhance applications.

- Common challenges include Gemini Pro tool integration errors, Perplexity AI API connection problems, and the Azure OpenAI “cannot assist” error.

- Understanding the root causes of these errors is crucial for effective troubleshooting.

- This guide provides in-depth solutions and best practices for diagnosing and resolving prevalent AI integration issues.

- Proactive strategies and a thorough understanding of AI platforms are key to successful AI integration.

Table of contents

- Introduction: Navigating the Complexities of AI Integration

- Deep Dive into Gemini Pro Tool Integration Errors

- Specific Focus: Resolving Gemini Pro Function Calling Issues

- Diagnosing and Fixing Perplexity AI API Connection Problems

- Resolving the “Azure OpenAI Cannot Assist” Error Fix

- Troubleshooting AI Model Refusals: A Broader Perspective

- General Best Practices for Robust AI Integrations

The rapid advancement and adoption of Artificial Intelligence (AI) are transforming how we build and deploy applications. Powerful AI models, such as Google’s Gemini Pro, Perplexity AI, and Microsoft’s Azure OpenAI service, are now integral components of many software solutions, where they enhance capabilities, automate tasks, and provide intelligent insights. However, this integration is not without its hurdles, and developers frequently encounter issues that impede progress. Among the most common pain points are **Gemini Pro tool integration errors**, which arise from misconfigurations and misunderstandings of how the model interacts with defined tools. Similarly, developers working with Perplexity AI may face **Perplexity AI API connection problems** stemming from network issues, authentication failures, or API usage limits. Users of Azure OpenAI, meanwhile, often grapple with the “Azure OpenAI cannot assist” error, which points to the model’s content filtering or capability limitations.

This guide aims to demystify these challenges and equip you with the knowledge and practical strategies needed to diagnose, troubleshoot, and resolve them, so that your AI-powered applications function as intended.

Deep Dive into Gemini Pro Tool Integration Errors

When integrating tools with Gemini Pro, developers often encounter specific errors that hinder the model’s ability to leverage external functionalities. These **Gemini Pro tool integration errors** typically stem from how developers define, configure, and invoke these tools within the Gemini Pro framework. Understanding the underlying causes is the first step towards a successful resolution.

Several key areas are common sources of these errors:

- Authentication Failures: This is a fundamental but critical issue. It encompasses several potential problems, including the use of incorrect or expired API keys, missing authentication tokens in requests, or improperly configured service account credentials. If Gemini Pro cannot securely authenticate with the tool or its underlying API, it will be unable to execute any actions.

- Schema Mismatches: AI models, including Gemini Pro, rely on structured data formats (schemas) to understand how to interact with tools. A schema mismatch occurs when the expected input or output format of a tool does not align with what Gemini Pro is attempting to send or receive. This can manifest as issues with data types (e.g., sending a string where an integer is expected), missing required fields in an argument, or using incorrect parameter names.

- Tool Definition Issues: The way tools are defined within the Gemini Pro API, particularly concerning the `tools` and `tool_config` parameters, is crucial. Errors in this definition can lead to the model not recognizing the tool, misinterpreting its capabilities, or failing to invoke it correctly. This includes issues with the structure of the tool definition itself, the associated metadata, or how available functions within a tool are described.

Addressing these points systematically will help in pinpointing and resolving the root cause of Gemini Pro tool integration errors.
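To make the schema-mismatch case concrete, here is a minimal sketch of a pre-flight check that compares the arguments of a tool call against a declared parameter schema before the tool runs. The `get_weather`-style schema, the `find_schema_mismatches` helper, and the type mapping are all hypothetical illustrations rather than part of any Gemini SDK, and the check covers only flat, top-level parameters.

```python
from typing import Any

# Hypothetical parameter schema for an illustrative weather tool.
WEATHER_SCHEMA = {
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "days": {"type": "integer"},
    },
    "required": ["city"],
}

# Maps JSON Schema type names to the Python types that satisfy them.
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def find_schema_mismatches(args: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Return human-readable problems; an empty list means the args fit."""
    problems = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required field: {name}")
    for name, value in args.items():
        if name not in properties:
            problems.append(f"unknown parameter: {name}")
            continue
        expected = properties[name].get("type")
        python_type = _JSON_TYPES.get(expected)
        if python_type is None:
            continue  # type not covered by this sketch
        # bool is a subclass of int in Python, so reject it for numeric fields explicitly.
        is_bool_for_number = isinstance(value, bool) and expected in ("integer", "number")
        if is_bool_for_number or not isinstance(value, python_type):
            problems.append(
                f"wrong type for {name}: expected {expected}, got {type(value).__name__}"
            )
    return problems
```

Running a check like this on the arguments the model proposes, and logging the returned list, tends to separate "the model sent the wrong shape" from "the tool itself failed", which are otherwise easy to confuse.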

Specific Focus: Resolving Gemini Pro Function Calling Issues

A significant subset of **Gemini Pro tool integration errors** revolves around function calling. This feature allows Gemini Pro to generate structured JSON objects that describe function calls, enabling developers to trigger specific functions within their applications. When this mechanism fails, it can be particularly challenging to debug. Successfully resolving Gemini Pro function calling issues requires a meticulous approach to how functions are declared and how the model’s output is interpreted.

Here are common pitfalls and their solutions:

- Common Mistakes in Function Declaration: Functions intended for Gemini Pro to call must be declared with extreme precision. Each function requires a clear, descriptive name, a concise explanation of its purpose, and a well-defined parameter schema. This schema should accurately reflect the function’s expected arguments, including their names, data types, and whether they are required. Vague descriptions or incomplete parameter definitions are primary culprits for function calling failures. Ensure that the JSON schema used for parameter definitions is valid and adheres to the expected structure.

- Troubleshooting Unexpected Function Calls or Non-Calls: A frequent problem is when Gemini Pro either calls the wrong function, fails to call a function when one is expected, or invokes a function with incorrect arguments. To debug this, developers must examine the model’s raw output, specifically looking for fields related to tool usage (e.g., `function_call` parts in a Gemini response, or `tool_calls` in OpenAI-style APIs). Analyzing these fields can reveal which function the model intended to call and with what arguments. If the model hallucinates function calls or parameters, it often indicates an issue with the function’s description or the prompt’s clarity.

- Best Practices for Function Definition and Parameter Handling: To mitigate these issues, adopt a disciplined approach to defining functions.

  - Use descriptive and unambiguous names for both functions and their parameters. This helps Gemini Pro understand their purpose.

  - Ensure that the data types specified in the parameter schema precisely match the expected types for your function arguments.

  - Leverage JSON Schema for robust and standardized parameter definitions. This provides a strong contract between the model and your code.

  - Provide clear and informative descriptions for each function and parameter. The model relies heavily on these descriptions to make informed decisions about calling functions.

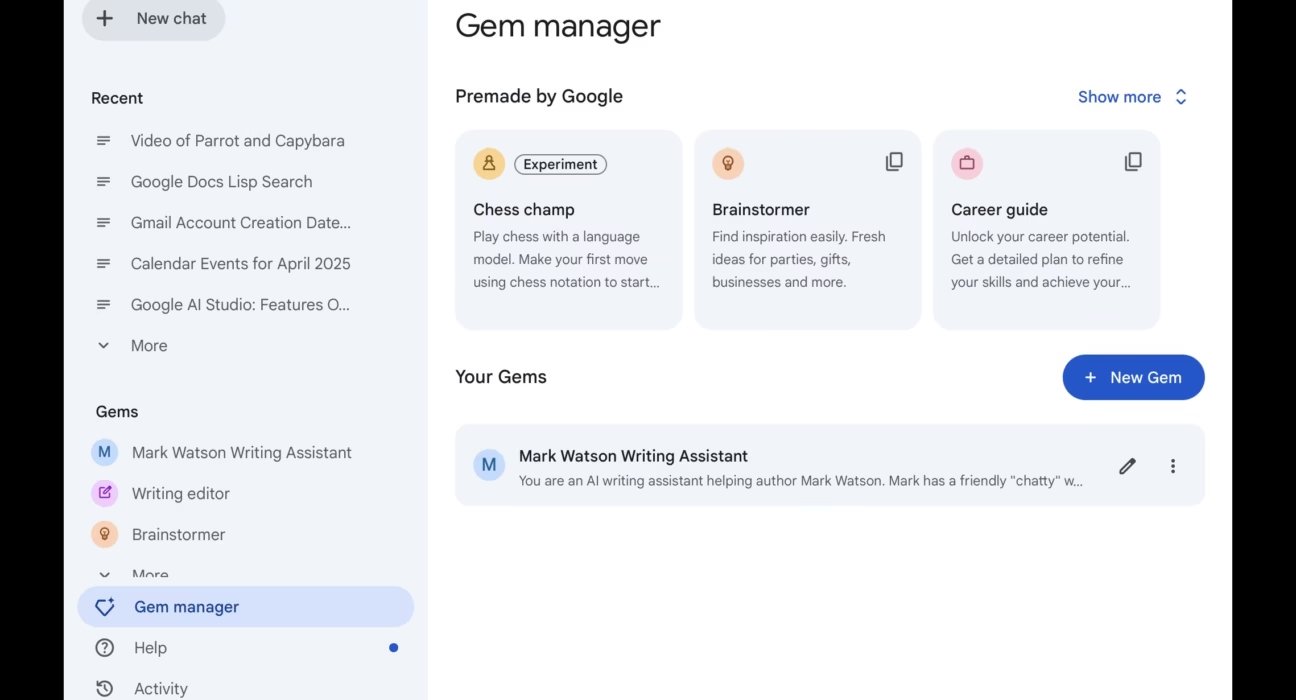

For a more structured approach to defining tools and functions, consider the general best practices in AI development, which often emphasize clarity, precision, and adherence to established formats. Explore how AI technology trends are continually refining these development workflows, offering new insights into effective tool integration.

Diagnosing and Fixing Perplexity AI API Connection Problems

Connecting to and reliably using the Perplexity AI API is essential for developers looking to integrate its powerful search and generation capabilities. However, encountering **Perplexity AI API connection problems** can be a significant roadblock. These issues often fall into predictable categories, making systematic diagnosis possible.

Let’s break down common causes and solutions:

- Network and Connectivity Issues: The most basic, yet often overlooked, causes of API connection problems are network-related. This can include:

  - Firewalls: Corporate or local firewalls might be blocking outgoing requests to Perplexity AI’s API endpoints.

  - Proxy Configurations: If you are behind a proxy server, it needs to be correctly configured to allow API requests.

  - General Internet Instability: Intermittent internet connectivity can lead to dropped connections during API calls.

  Ensure your network environment is stable and that Perplexity AI’s API endpoints are accessible from your server or development machine.

- Rate Limiting and Quotas: Perplexity AI, like most API providers, enforces rate limits and usage quotas to ensure fair usage and service stability. Exceeding these limits will result in connection errors, often indicated by specific HTTP status codes like `429 Too Many Requests`.

  - Monitor Usage: Keep track of your API calls and usage against your allocated quotas.

  - Implement Exponential Backoff: For transient rate limit errors, implement a retry mechanism with exponential backoff. This involves waiting progressively longer periods between retries, reducing the load on the API. Refer to the Perplexity AI SDK error handling guide for specific implementation details.

- Authentication and Authorization Failures: Incorrect credentials are a frequent source of API connection issues.

  - API Keys: Ensure you are using the correct and active API key. Double-check for typos and confirm that the key has not been revoked or expired.

  - Permissions: Verify that the API key or token used has the necessary permissions to access the specific API endpoints you are trying to use.

  Always refer to your Perplexity AI account dashboard to manage and verify your API keys and their associated permissions.

- Debugging API Requests and Responses: When issues arise, inspecting the details of your API requests and the responses you receive is paramount.

  - HTTP Status Codes: Pay close attention to the HTTP status codes returned by the API. Common indicators include:

    - `401 Unauthorized`: Authentication failed.

    - `403 Forbidden`: Authentication succeeded, but you don’t have permission.

    - `429 Too Many Requests`: Rate limit exceeded.

    - `5xx Server Errors`: Issues on Perplexity AI’s side.

  - Response Bodies: The response body often contains detailed error messages that can pinpoint the exact problem.

  - Debugging Tools: Utilize tools like `curl` in your terminal or built-in debugging features in your programming language’s HTTP client library to log and inspect requests and responses. The Perplexity AI SDK error handling guide is an invaluable resource for understanding common error responses and debugging strategies.
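The retry and status-code guidance above can be sketched as a small wrapper that retries transient failures (`429` and common `5xx` codes) with exponential backoff and fails fast on `401` and `403`. This is an illustrative sketch using only the Python standard library, not the Perplexity SDK’s own retry logic. The `send` callable is a hypothetical stand-in for whatever function performs your HTTP request and returns a `(status_code, body)` pair, and the delay values are assumptions to tune against your actual limits.

```python
import random
import time
from typing import Callable

RETRYABLE = {429, 500, 502, 503, 504}  # transient: retry with backoff
FATAL = {401, 403}                     # credentials or permissions: retrying will not help


def backoff_delays(max_retries: int, base: float = 1.0, cap: float = 30.0) -> list[float]:
    """Delays of base * 2**attempt seconds, capped at `cap`."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(max_retries)]


def call_with_backoff(
    send: Callable[[], tuple[int, str]],
    max_retries: int = 4,
    base: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[int, str]:
    """Call `send` until it succeeds, fails fatally, or retries run out."""
    delays = backoff_delays(max_retries, base)
    for attempt in range(max_retries + 1):
        status, body = send()
        if status in FATAL:
            raise PermissionError(
                f"HTTP {status}: check the API key and its permissions: {body}"
            )
        if status not in RETRYABLE or attempt == max_retries:
            return status, body
        # Add up to 10% random jitter so parallel clients do not retry in lockstep.
        delay = delays[attempt]
        sleep(delay + random.uniform(0, delay * 0.1))
    # Only reachable when max_retries is negative and the loop never runs.
    raise RuntimeError("max_retries must be non-negative")
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. If the API returns a `Retry-After` header, honoring it is usually preferable to a computed delay.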


Staying informed about broader AI technology trends shaping the future can also provide context, as advancements in AI infrastructure can influence API behaviors and reliability.

Resolving the “Azure OpenAI Cannot Assist” Error Fix

The “Azure OpenAI cannot assist” error is a common indication that the deployed Azure OpenAI model is unable or unwilling to fulfill a user’s request. This error is often rooted in the service’s safety and content filtering mechanisms, but other factors can also contribute. Fixing it starts with understanding these underlying causes.

Key areas to investigate include:

- Content Filtering Policies: Azure OpenAI is designed with safety in mind, employing content filtering to prevent the generation of harmful, unethical, or inappropriate content. If a user’s prompt or the potential response triggers these filters, the model will refuse to proceed, resulting in the “cannot assist” error.

  - Review Policies: Access your Azure portal and navigate to the content filtering settings for your Azure OpenAI resource.

  - Adjust Settings Cautiously: If your legitimate use case is being flagged, you may need to adjust the sensitivity levels for different content categories (e.g., hate, sexual, violence, self-harm). Make these adjustments carefully and test thoroughly to avoid inadvertently enabling harmful content generation.

  - Prompt Refinement: Often, rephrasing the prompt to be clearer and less ambiguous, and removing unnecessary sensitive wording, keeps a legitimate request from tripping overly strict filters.

- Model Capabilities and Training: The specific Azure OpenAI model you are using has inherent capabilities and limitations based on its training data and fine-tuning.

  - Task Suitability: If you are using a model fine-tuned for creative writing to answer complex factual queries, it might refuse the request because it’s outside its specialized domain. Ensure the deployed model is appropriate for the task.

  - Model Updates: Keep track of model updates and new releases from Azure OpenAI, as newer models may have broader capabilities or improved adherence to instructions.

- Resource Limitations and Deployment Issues: Sometimes, the error can be a symptom of underlying infrastructure problems.

  - Resource Constraints: Ensure that your Azure OpenAI deployment has sufficient quota and that underlying Azure resources (like compute) are not exhausted.

  - Service Health: Check the Azure Service Health dashboard for any ongoing incidents or planned maintenance that might affect your Azure OpenAI deployment.

  - Deployment Errors: Review the deployment history and logs in the Azure portal for any errors that occurred during the model deployment process.

- Strategies for Mitigation:

  - Prompt Engineering: Crafting clear, specific, and well-structured prompts is crucial. Avoid ambiguity and provide sufficient context.

  - User Education: If users are frequently triggering content filters, consider providing guidance on how to phrase their requests appropriately.

  - Configuration Management: Regularly review and update content moderation settings as needed, balancing safety with usability.
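When investigating these causes, it helps to tell a filtered request apart from a quota problem or an ordinary failure. The sketch below classifies an already-parsed response. It assumes the response shape commonly documented for Azure OpenAI: an HTTP 400 with the error code `content_filter` when the prompt is blocked, and a `finish_reason` of `content_filter` when the completion is filtered. Verify both against the current Azure documentation for your API version. The `classify_refusal` helper and its labels are hypothetical.

```python
def classify_refusal(status_code: int, payload: dict) -> str:
    """Label a parsed response so logs show why a request did not succeed."""
    error = payload.get("error") or {}
    # Assumed shape: a blocked prompt returns HTTP 400 with code "content_filter".
    if status_code == 400 and error.get("code") == "content_filter":
        return "prompt_filtered"
    if status_code == 429:
        return "rate_limited"
    # Assumed shape: a filtered completion is marked on the choice itself.
    for choice in payload.get("choices", []):
        if choice.get("finish_reason") == "content_filter":
            return "completion_filtered"
    if status_code >= 400:
        return "other_error"
    return "ok"
```

Note that a refusal written by the model itself (for example, a polite “I cannot assist with that” message) arrives as a normal successful response, so this helper labels it `ok`. Catching those cases requires inspecting the returned text.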

To gain a deeper understanding of AI’s potential and limitations, exploring how AI is changing the world can provide valuable context on why certain models might refuse specific requests.

Troubleshooting AI Model Refusals: A Broader Perspective

Beyond specific error messages like the Azure OpenAI “cannot assist” response, AI models across the board can sometimes refuse to generate a response, fail to complete a task, or provide irrelevant output. Understanding the general principles behind troubleshooting AI model refusals is a critical skill for any developer working with these technologies.

Several common factors contribute to AI models refusing requests:

- Prompt Ambiguity and Lack of Clarity: This is perhaps the most frequent reason for a model’s failure to respond appropriately. Vague, ill-defined, or grammatically incorrect prompts leave the model guessing the user’s intent. Without a clear directive, the model may default to a refusal or provide a nonsensical answer.

- Violating Safety Guidelines and Ethical Boundaries: AI models are intentionally designed with safety guardrails to prevent misuse. Prompts that solicit hate speech, promote illegal activities, contain explicit content, or encourage self-harm will be refused. These refusals are intentional and are a core part of responsible AI deployment. The Perplexity AI SDK error handling guide, while specific, touches upon the importance of adhering to ethical guidelines, which is a universal principle.

- Insufficient Context: While models are powerful, they are not mind-readers. If a prompt lacks the necessary background information or context, the model may be unable to understand the request and therefore refuse to answer. For example, asking “What is the best option?” without specifying the context (e.g., “for a budget laptop under $500”) will likely lead to an unhelpful response.

- Complex or Unsolvable Requests: Some requests might be inherently too complex for the current capabilities of the model, require real-time data beyond its access, or involve tasks that are logically impossible. In such cases, refusal is a sign of the model recognizing its limitations.

Effective prompt engineering techniques are key to overcoming these challenges:

- Rephrasing for Clarity: Break down complex ideas into simpler sentences. Ensure your grammar is correct and your vocabulary is precise.

- Adding Necessary Context: Provide background information, define terms, and specify constraints. Think about what information *you* would need to fulfill the request if you were the AI.

- Breaking Down Complex Requests: If a task is multifaceted, try breaking it into a series of smaller, sequential prompts. This allows the model to process each part of the task more effectively.

- Using Few-Shot Examples: For specific formats or types of responses, providing a few examples within the prompt can significantly guide the model’s output. This is a powerful technique for steering the AI towards the desired outcome. Referencing the Perplexity AI SDK error handling guide can offer insights into structuring interactions for better results.
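As a minimal sketch of the few-shot technique, the helper below assembles a chat-style message list from an instruction, a handful of example pairs, and the real query. The `role`/`content` message shape is an assumption based on the chat format many providers accept, and `build_few_shot_messages` is a hypothetical name. Adapt the structure to whatever your SDK expects.

```python
def build_few_shot_messages(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
) -> list[dict[str, str]]:
    """Build a message list: instruction, example pairs, then the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        # Each example is shown as a completed user/assistant exchange.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages


messages = build_few_shot_messages(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("It broke after a week.", "negative"),
    ],
    query="Setup was quick and painless.",
)
```

Two or three well-chosen examples are often enough to fix the output format. Adding many more mainly increases token usage.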

Mastering prompt engineering is fundamental to unlocking human-like AI conversations, making this area of focus particularly valuable.

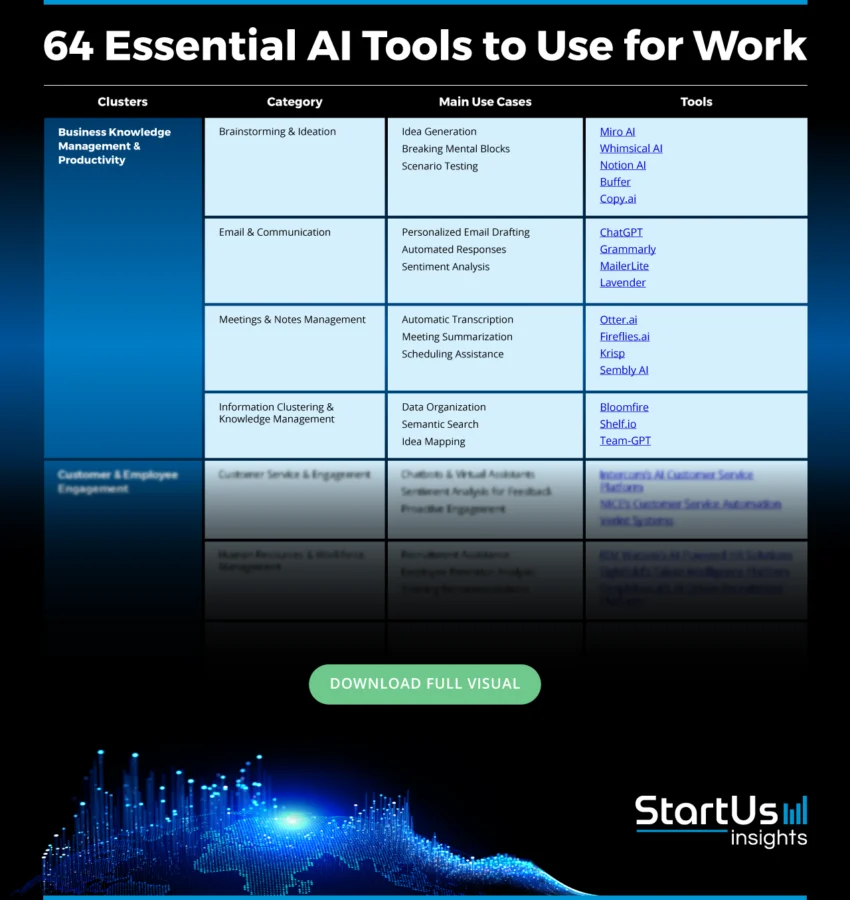

General Best Practices for Robust AI Integrations

Beyond addressing specific errors, adopting a set of general best practices can significantly improve the reliability and robustness of any AI integration. These strategies aim to prevent issues before they arise and streamline the troubleshooting process when problems do occur.

Here are overarching strategies for success:

- Thorough Documentation Review: Before and during integration, make it a priority to thoroughly read and understand the official documentation provided by AI service providers like Gemini Pro, Perplexity AI, and Azure OpenAI. This documentation is your primary resource for understanding APIs, features, limitations, and troubleshooting steps.

- Implement Comprehensive Error Handling and Logging: Build robust error handling directly into your application. Use specific try-catch blocks around API calls to gracefully manage potential exceptions. Crucially, implement detailed logging. Log every request sent to the AI service, the response received (including status codes and bodies), and any errors encountered. This detailed log data is invaluable for quickly diagnosing problems. The guidance on error handling in SDKs is a good starting point.

- Iterative Testing and Validation: Adopt an iterative development approach. Instead of integrating large chunks of functionality at once, build and test individual components and their interactions with the AI models incrementally. This makes it easier to pinpoint where an error originates. Validate that each part of your integration works as expected before moving on to the next.

- Stay Updated: The AI landscape evolves rapidly. Keep abreast of updates, new versions, and deprecation notices from your AI providers. Changes in APIs, model behaviors, or pricing can introduce breaking changes or new error modes if not accounted for.

- Utilize Community and Support Resources: Don’t hesitate to leverage the collective knowledge of the developer community and official support channels.

  - Forums and Discussions: Engage in official forums or community platforms like GitHub discussions (e.g., Open WebUI Discussions) where developers share problems and solutions.

  - Official Support: If you have a support plan or are encountering critical issues, utilize the official support channels provided by the AI service. Perplexity AI offers a Help Center for assistance.

- Security Best Practices: Ensure your API keys and credentials are kept secure. Avoid hardcoding them directly into your codebase; use environment variables or secure secret management systems.
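Two of the practices above, logging around API calls and keeping credentials out of the codebase, can be sketched with the Python standard library alone. The environment variable name, the `ai_integration` logger name, and the helper functions here are hypothetical, so adapt them to your own project.

```python
import logging
import os

logger = logging.getLogger("ai_integration")


def load_api_key(env_var: str) -> str:
    """Read the key from the environment instead of hardcoding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before calling the API")
    return key


def redact(secret: str, visible: int = 4) -> str:
    """Mask all but the last few characters so keys are safe to log."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


def logged_call(name, func, *args, **kwargs):
    """Run `func`, logging the request, the response, and any exception."""
    logger.info("request %s args=%r kwargs=%r", name, args, kwargs)
    try:
        result = func(*args, **kwargs)
    except Exception:
        # logger.exception records the full traceback, then we re-raise.
        logger.exception("error in %s", name)
        raise
    logger.info("response %s result=%r", name, result)
    return result
```

Because `logged_call` logs its arguments verbatim, avoid passing the API key through it directly. Log `redact(key)` instead when you need to confirm which key is in use.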

Embracing AI workflow automation for businesses can also lead to more streamlined and robust integrations by standardizing processes and reducing manual intervention.

Conclusion: Mastering AI Integration Challenges

Successfully integrating AI models into applications requires a proactive and systematic approach to problem-solving. We’ve delved into common issues such as Gemini Pro tool integration errors, which often stem from authentication failures and schema mismatches, and the importance of precise function calling definitions. We’ve also explored Perplexity AI API connection problems, highlighting the need to address network stability, rate limits, and credential management. Finally, we’ve provided strategies for resolving the Azure OpenAI “cannot assist” error, emphasizing the role of content filtering, model suitability, and resource availability.

The challenges associated with troubleshooting AI model refusals, whether specific to a platform or general in nature, underscore the power of effective prompt engineering. By refining prompts for clarity, providing adequate context, and adhering to safety guidelines, developers can significantly improve the likelihood of receiving desired responses.

Adopting the general best practices outlined – thorough documentation review, robust error handling and logging, iterative testing, staying updated, and leveraging community support – forms the foundation for building resilient and reliable AI-powered applications. These challenges, while sometimes frustrating, are invaluable learning opportunities that push the boundaries of our understanding and application of AI technologies.

Call to Action: We encourage you to share your own experiences with AI integration errors and the solutions you’ve found effective in the comments below. Your insights can help fellow developers navigate these complex waters. For further assistance, always refer to the official documentation and support resources provided by your AI service providers. For those looking to deepen their expertise and leverage AI more effectively, exploring how AI is transforming businesses offers a broader perspective on the impact and potential of these powerful tools.

Frequently Asked Questions

Q1: What is the most common cause of Gemini Pro tool integration errors?

A1: The most common causes are incorrect authentication (API keys, credentials) and schema mismatches, where the data format expected by the tool doesn’t align with what Gemini Pro is sending or receiving.

Q2: How can I quickly diagnose Perplexity AI API connection problems?

A2: Start by checking your network connectivity and firewall settings. Then, verify your API key is correct and active. If those are fine, inspect the HTTP status codes and response bodies for specific error messages. Tools like `curl` can be very helpful for this.

Q3: My Azure OpenAI model is refusing requests with “cannot assist.” What should I do?

A3: First, review your prompt for clarity and ensure it doesn’t inadvertently trigger content filters. Then, check the content filtering settings in your Azure portal. If the issue persists, ensure the deployed model is suitable for your task and check Azure service health for any ongoing issues.

Q4: What are some effective prompt engineering techniques to avoid AI model refusals?

A4: Techniques include making prompts clearer and more specific, providing ample context, breaking down complex requests into smaller steps, and using few-shot examples to guide the model’s output. Avoid ambiguous language and sensitive topics that might trigger safety filters.

Q5: Where can I find reliable help when I’m stuck with an AI integration issue?

A5: Official documentation from the AI provider is your first resource. Community forums (like GitHub discussions), developer communities, and official support channels are also excellent places to seek help.