Unlocking Structured Data: A Guide to LLM Output with JSON Schema

Estimated reading time: 12 minutes

Key Takeaways

- Large Language Models (LLMs) often produce unpredictable, inconsistently structured output, which hinders integration into automated systems and downstream applications and makes robust data validation difficult.

- JSON Schema is a powerful specification for defining and enforcing the structure of JSON data.

- Effective **instructions** in prompts are crucial for guiding LLMs to generate JSON that adheres to a specified JSON Schema.

- Clear prompting, explicit rules, and example-driven guidance are essential for achieving reliable and validated LLM data.

- Implementing a structured workflow involving schema definition, prompt engineering, and validation is key to successful LLM integration.

- Advanced strategies include handling complex data types, error handling, and iterative refinement for optimal LLM output.

Table of contents

- Unlocking Structured Data: A Guide to LLM Output with JSON Schema

- Key Takeaways

- The Unpredictability Problem: Why LLM Outputs Need Structure

- Understanding JSON Schema: Your Data’s Blueprint

- The Formatting Hurdle: Bridging LLMs and JSON Schema

- The Art of Instructions: Guiding LLMs for Structured Output

- A Practical Walkthrough: Implementing JSON Schema for LLM Output Validation

- Advanced Strategies and Best Practices

The Unpredictability Problem: Why LLM Outputs Need Structure

Large Language Models (LLMs) are remarkable for their ability to generate human-like text, translate languages, and answer questions conversationally. However, because they are probabilistic models, LLMs can produce different outputs even when given exactly the same input. This lack of consistent structure is a significant hurdle for applications that rely on predictable, organized data. Imagine feeding data into an automated system that expects a specific format, only to receive a slightly different one each time. This variability makes integration with APIs, data pipelines, and AI agents challenging and error-prone.

For instance, an LLM might be asked to extract key details from a document. Without strict guidance, it could return fields with different names, in a different order, or mixed with explanatory text. This unpredictability undermines machine readability and any form of automated processing. Robust data validation addresses the problem: it cannot prove that the extracted values are correct, but it does ensure that whatever reaches downstream applications is consistently formatted and therefore usable and reliable.

The core issue stems from how an LLM works: it computes a probability distribution over the next token, based on patterns learned from its training data, and then selects a token from that distribution, usually by sampling. This excels at creative and varied text generation, but nothing in the process inherently prioritizes output that adheres to a predefined format. This is where explicit control and definition become paramount.
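To make the “next token” idea concrete, here is a toy sketch of temperature-scaled sampling over a handful of candidate tokens. It is not how any particular model is implemented; the vocabulary and the scores (logits) are invented for illustration. With a temperature of 1.0, different random draws can pick different first tokens for the same input; as the temperature approaches zero, the choice collapses onto the highest-scoring token.

```python
import math
import random

# Hypothetical scores (logits) for candidate first tokens after a prompt such as
# "Return the product as JSON:". The values are invented for illustration.
LOGITS = {"{": 2.0, "Here": 1.6, "Sure": 1.2, "```": 0.8}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-scaled probabilities."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # Same "input", different random draws: the first token can vary between runs.
    for seed in range(5):
        print(seed, sample_next_token(LOGITS, temperature=1.0, rng=random.Random(seed)))
    # At a near-zero temperature the top-scoring token "{" is effectively always chosen.
    print(sample_next_token(LOGITS, temperature=0.01, rng=random.Random(0)))
```

A response that starts with “Here” or “Sure” instead of “{” is exactly the kind of drift that breaks a naive JSON parser downstream.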

“LLMs are powerful, but without structure, their outputs can be like a wild river – unpredictable and difficult to channel.”

The need for structured output is not just a matter of convenience; it’s a fundamental requirement for building reliable software systems. Without it, the immense potential of LLMs remains largely untapped for automated workflows that demand precision and consistency. This is precisely why tools like JSON Schema are becoming indispensable allies in the LLM ecosystem.

The challenge is multifaceted: LLMs are trained on vast amounts of unstructured text, and their primary function is to generate text that *sounds* plausible and coherent. Directing this capability towards generating strictly formatted data requires a different approach. It’s akin to asking a freestyle rapper to write a sonnet – they can do it, but they need clear instructions and a framework to follow.

The ability to ensure that LLM outputs adhere to a specific structure is critical for their practical application in business and technology. This is where our journey into understanding and implementing JSON Schema truly begins. Without this structure, the generated data can be difficult to parse, interpret, and utilize in automated systems, leading to integration issues and operational inefficiencies.

Understanding JSON Schema: Your Data’s Blueprint

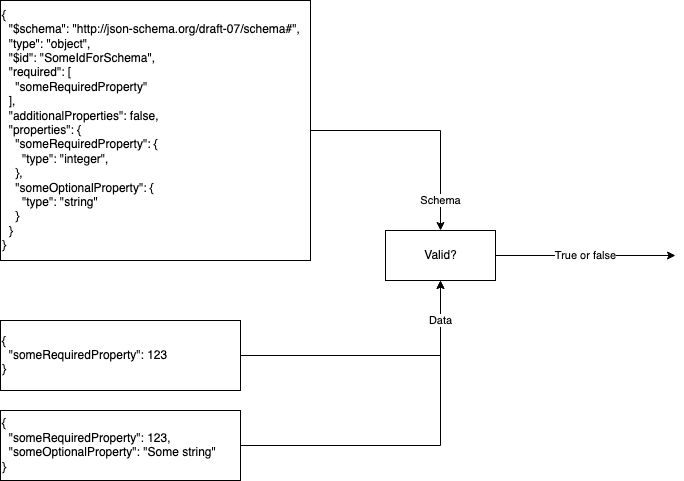

**JSON Schema** is a powerful tool that serves as a formal specification for describing and validating the structure of JSON data. Think of it as a blueprint or a contract for your data. It defines what a valid JSON object should look like, ensuring that all parties involved understand the expected format and content.

The primary purpose of JSON Schema is to meticulously define various aspects of your data:

- Data Types: Specifying whether a value should be a string, number, integer, boolean, array, object, or null.

- Required Fields: Clearly marking which properties must be present in a JSON object.

- Value Constraints: Setting limits on values, such as minimum or maximum numerical values, specific string lengths, or required patterns (using regular expressions).

- Overall Organization: Detailing how JSON objects and arrays should be nested and structured.
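To show what these keywords mean operationally, here is a deliberately tiny validator covering only `type` (single type names), `required`, `properties`, `minimum`/`maximum`, `minLength`/`maxLength`, and `pattern`. It illustrates the semantics and is not a substitute for a full JSON Schema implementation such as the `jsonschema` or `ajv` libraries mentioned in Step 3. The `SKU_SCHEMA` and its field names are hypothetical.

```python
import re
from typing import Any

# Map JSON Schema type names to the Python types that json.loads produces.
_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "array": list,
    "object": dict,
    "null": type(None),
}


def _is_type(value: Any, name: str) -> bool:
    # bool is a subclass of int in Python, so it must not count as number/integer.
    if name in ("number", "integer") and isinstance(value, bool):
        return False
    return isinstance(value, _TYPES[name])


def check(instance: Any, schema: dict, path: str = "$") -> list[str]:
    """Return errors for a small subset of JSON Schema keywords (illustration only)."""
    expected = schema.get("type")  # this sketch supports a single type name only
    if expected is not None and not _is_type(instance, expected):
        return [f"{path}: expected {expected}, got {type(instance).__name__}"]
    errors: list[str] = []
    if isinstance(instance, dict):  # required fields and nested organization
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required field '{name}'")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(check(instance[name], subschema, f"{path}.{name}"))
    if isinstance(instance, (int, float)) and not isinstance(instance, bool):
        if "minimum" in schema and instance < schema["minimum"]:
            errors.append(f"{path}: {instance} is below minimum {schema['minimum']}")
        if "maximum" in schema and instance > schema["maximum"]:
            errors.append(f"{path}: {instance} is above maximum {schema['maximum']}")
    if isinstance(instance, str):
        if len(instance) < schema.get("minLength", 0):
            errors.append(f"{path}: shorter than minLength {schema['minLength']}")
        if "maxLength" in schema and len(instance) > schema["maxLength"]:
            errors.append(f"{path}: longer than maxLength {schema['maxLength']}")
        # JSON Schema patterns are not implicitly anchored, hence re.search.
        if "pattern" in schema and not re.search(schema["pattern"], instance):
            errors.append(f"{path}: does not match pattern {schema['pattern']}")
    return errors


# Hypothetical schema exercising each aspect listed above.
SKU_SCHEMA = {
    "type": "object",
    "required": ["sku", "quantity"],
    "properties": {
        "sku": {"type": "string", "pattern": "^[A-Z]{3}[0-9]{3}$"},
        "quantity": {"type": "integer", "minimum": 1, "maximum": 100},
        "note": {"type": "string", "maxLength": 20},
    },
}

if __name__ == "__main__":
    print(check({"sku": "XYZ123", "quantity": 2}, SKU_SCHEMA))
    print(check({"sku": "xyz", "quantity": 0}, SKU_SCHEMA))
```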

When applied to LLM output, JSON Schema offers several critical benefits:

- Ensures Consistency: Every LLM response that conforms to the schema will have the same structure, regardless of minor variations in the generation process. This consistency is vital for predictable application behavior.

- Enables Seamless Automated Processing: Once an output has passed validation, downstream systems can reliably parse and use it because its structure is known in advance. This removes the need for complex, brittle parsing logic.

- Improves Data Quality: By defining strict rules, JSON Schema helps catch structural errors early, preventing malformed data from entering your systems. It cannot confirm that values are factually correct, but it significantly enhances overall data integrity.

- Serves as the Foundation for Robust Data Validation: It provides the explicit rules against which the generated JSON can be programmatically checked, ensuring it meets all predefined criteria.

Utilizing JSON Schema transforms LLM outputs from potentially chaotic text into structured, machine-readable data. It acts as a crucial intermediary, bridging the gap between the creative, often unconstrained nature of LLMs and the rigid, structured requirements of software applications.

“JSON Schema is the architect’s blueprint for your LLM’s data, ensuring every brick is laid exactly where it should be.”

The power of JSON Schema lies in its expressiveness and its widespread adoption. It’s a standard that can be understood by developers and machines alike. By defining your data’s structure upfront, you set clear expectations for the LLM, which, when guided correctly, can meet those expectations with remarkable accuracy. This declarative approach simplifies the process of working with LLM-generated content, making it far more practical for real-world applications.

The specification is well-defined and allows for intricate descriptions of data, including complex nested structures and conditional requirements. This level of detail ensures that even sophisticated data requirements can be precisely articulated, leaving little room for ambiguity. For anyone looking to integrate LLMs into production systems, understanding and implementing JSON Schema is no longer optional; it’s a necessity.

This schema acts as a universal language for data. Whether you are an LLM developer, a data engineer, or an application architect, a shared JSON Schema ensures everyone is on the same page regarding data expectations. This clarity is the bedrock of efficient collaboration and robust system design.

The Formatting Hurdle: Bridging LLMs and JSON Schema

A common starting point for many developers is to simply ask an LLM to “output JSON.” While this might sometimes yield a usable result, it often leads to frustration. Without explicit guidance, LLMs are not guaranteed to produce well-formed JSON, let alone JSON that adheres strictly to a schema. This is the core of the “formatting hurdle.”

When LLMs generate JSON without strict instructions, several issues frequently arise:

- Extraneous Content: The LLM might include conversational text, explanations, or pleasantries before or after the actual JSON block. For example, it might say, “Here is the information you requested in JSON format:” followed by the JSON, and then “I hope this is helpful!”

- Syntax Errors: The generated JSON might contain subtle syntax errors, such as missing commas, incorrect quotation marks, or unbalanced brackets, making it invalid.

- Missing or Incorrectly Named Fields: The LLM might omit fields that are required by your intended structure or use slightly different names than expected, breaking the schema.

- Incorrect Data Types: Values might be represented in the wrong data type. For instance, a numerical ID might be returned as a string, or a boolean might be represented as a string “true” instead of the boolean `true`.
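One rough way to tell these four failure modes apart in code is sketched below using only Python's standard library. The function name `diagnose`, the hand-written `EXPECTED_FIELDS` map (taken from the product example used throughout this article), and the sample responses are hypothetical; a real pipeline would validate against the full schema rather than this simplified field map.

```python
import json

# Hypothetical expectations mirroring the product example in this article.
EXPECTED_FIELDS = {"product_id": str, "name": str, "price": (int, float), "in_stock": bool}


def diagnose(raw: str) -> list[str]:
    """Label which common formatting problems a raw LLM response exhibits."""
    problems = []
    text = raw.strip()
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        return ["no JSON object found"]
    if start > 0 or end < len(text) - 1:
        problems.append("extraneous content around the JSON")
    try:
        data = json.loads(text[start:end + 1])
    except json.JSONDecodeError as exc:
        return problems + [f"syntax error: {exc.msg}"]
    for field, expected in EXPECTED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif isinstance(data[field], bool) and expected is not bool:
            # JSON true/false parse to bool, which Python also treats as an int.
            problems.append(f"wrong type: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"wrong type: {field}")
    return problems


if __name__ == "__main__":
    reply = 'Here is the information:\n{"product_id": 123, "name": "Super Widget", "in_stock": "true"}'
    print(diagnose(reply))
```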

These issues highlight the critical need to carefully steer the LLM’s output toward the precise requirements of a JSON Schema. Compliant formatting is not an accident; it’s a direct consequence of clear prompting and subsequent validation.

The LLM needs to understand not just *what* data to produce, but *how* to produce it. This involves instructing it to:

- Output *only* the JSON object.

- Ensure all required fields are present.

- Use the exact field names specified.

- Adhere to the specified data types for each field.

- Maintain valid JSON syntax.

Without these explicit directives, the LLM defaults to its primary function: generating natural-sounding text, which often means including surrounding conversational elements or making minor deviations in structure. The challenge, therefore, lies in effectively communicating the strict requirements of the JSON Schema to the LLM through the prompt. This is where prompt engineering, specifically for structured output, becomes an art form.

“The difference between a chaotic LLM response and a structured data asset is often just a few well-placed words in the prompt.”

The act of generating JSON that adheres to a schema is a direct test of how well the LLM understands and follows complex instructions. It’s not just about retrieving information, but about presenting it in a machine-consumable format. This formatting is the bridge that allows LLM-generated data to be seamlessly integrated into existing software systems, enabling a wide range of automated workflows and intelligent applications.

This hurdle can be particularly frustrating for developers who expect LLMs to behave like traditional programming functions that return predictable results. However, LLMs are fundamentally different. They are generative models, and guiding their generation process to be precise requires deliberate effort in prompt design and an understanding of their capabilities and limitations.

Successfully overcoming the formatting hurdle means establishing a reliable pipeline where LLM outputs are consistently structured, valid, and ready for immediate use. This transforms LLMs from interesting text generators into powerful tools for data extraction, processing, and integration.

The Art of Instructions: Guiding LLMs for Structured Output

The behavior of any LLM is profoundly shaped by the **instructions** provided within its prompt. When the goal is to generate structured data, particularly in the form of JSON that adheres to a JSON Schema, the quality and explicitness of these instructions become paramount.

Crafting effective **instructions** involves several key strategies:

- Be Explicit: State the requirement for JSON output directly and unequivocally. Use strong directive language.

  *Example:* “Your output *must* be a valid JSON object that conforms to the following schema.”

- Prohibit Extraneous Content: Clearly instruct the LLM to avoid any text outside the desired JSON structure.

  *Example:* “Do not include any introductory phrases, explanations, or concluding remarks. Output *only* the JSON.”

- Specify Desired Formatting: Remind the LLM of the specific requirements dictated by the schema, such as field names and data types.

  *Example:* “The JSON object must contain a ‘product_name’ field (string), a ‘price’ field (number), and an ‘in_stock’ field (boolean).”

To further enhance compliance, consider these prompt engineering techniques for structured output:

- Include Schema Details: You can embed a summarized version of the **JSON Schema** directly within the prompt. This provides the LLM with the exact structural rules to follow. For very complex schemas, this might be impractical, but key elements can always be included.

- Provide a Clear Example: Often, an LLM learns best from seeing an example of the desired output format. Including a sample JSON object that perfectly matches your schema can guide the LLM more effectively than just textual instructions.

  *Example Prompt Snippet:*

  ```
  Here is an example of the JSON format I need:

  {
    "product_id": "XYZ123",
    "name": "Super Widget",
    "price": 19.99,
    "in_stock": true
  }

  Now, generate the JSON for the following product details: [Product Details Here]
  ```

- Explain the “Why”: Sometimes, explaining the importance of adherence can improve the LLM’s focus.

  *Example:* “This JSON will be used for automated data processing and requires precise **formatting** and data validation.”

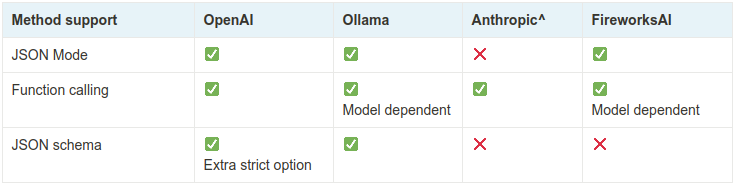

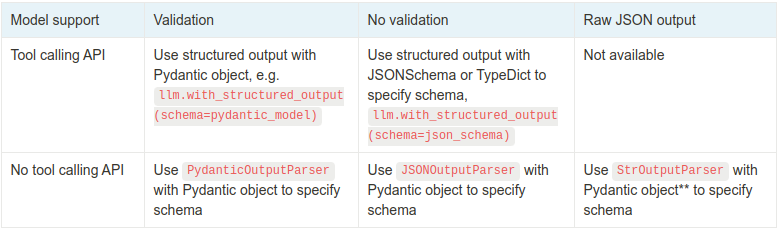

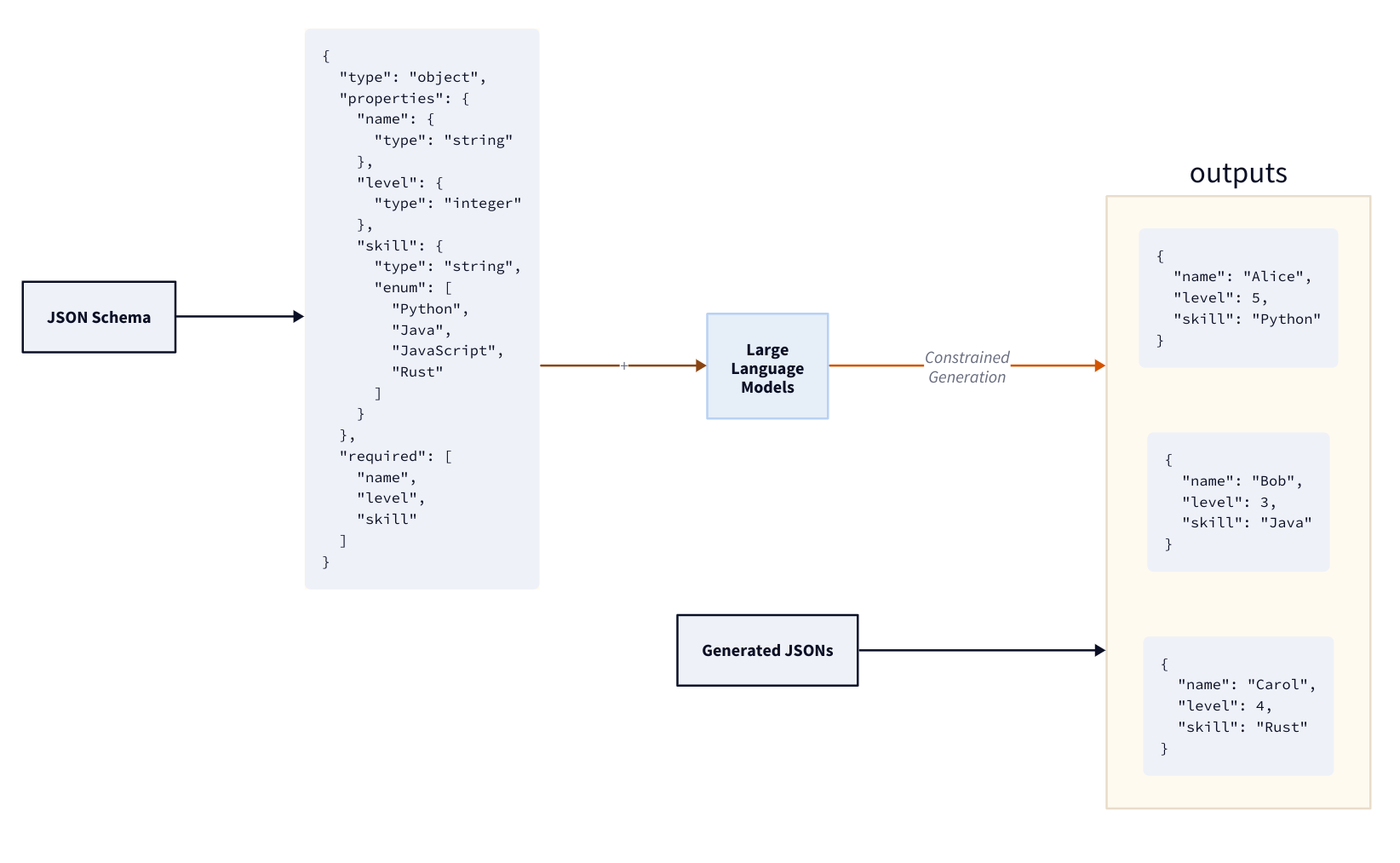

For more advanced LLMs, particularly those designed with function calling or tool-use capabilities, the **JSON Schema** can be supplied more directly. Many LLM APIs accept a JSON Schema as the parameter definition of a function (tool) that the model can choose to call, filling in the arguments according to that schema, and some also offer a structured-output mode that constrains generation so the response conforms to a provided schema.
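As a sketch of the tool-use route, the snippet below only builds a request body; it does not call any API. Its shape is modeled on the function-calling format of OpenAI's Chat Completions API, where a `tools` list carries a JSON Schema under `parameters` and `tool_choice` can force a specific function. Other providers use different field names (Anthropic's Messages API, for example, expects `input_schema`), so treat the exact keys as something to confirm against your provider's documentation. The tool name `record_product` and the model placeholder are hypothetical.

```python
import json

# The product schema from this article, expressed as a Python dict.
PRODUCT_SCHEMA = {
    "type": "object",
    "properties": {
        "product_id": {"type": "string", "description": "Unique identifier for the product"},
        "name": {"type": "string", "description": "Name of the product"},
        "price": {"type": "number", "exclusiveMinimum": 0, "description": "Price of the product"},
        "in_stock": {"type": "boolean", "description": "Whether the product is in stock"},
    },
    "required": ["product_id", "name", "price", "in_stock"],
}


def build_tool_request(product_text: str, model: str = "YOUR_MODEL_NAME") -> dict:
    """Assemble a chat request asking the model to answer by calling one tool."""
    return {
        "model": model,  # placeholder; substitute the model you actually use
        "messages": [
            {"role": "user", "content": f"Extract the product details:\n{product_text}"}
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "record_product",  # hypothetical tool name
                    "description": "Record one product from the catalog.",
                    "parameters": PRODUCT_SCHEMA,  # the JSON Schema goes here
                },
            }
        ],
        # Ask for a call to this specific tool instead of a free-text reply.
        "tool_choice": {"type": "function", "function": {"name": "record_product"}},
    }


if __name__ == "__main__":
    print(json.dumps(build_tool_request("Super Widget, SKU XYZ123, $19.99, in stock"), indent=2))
```

With this style of API the tool-call arguments typically come back as a JSON string, so they should still be parsed and validated before use.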

“Clear, unambiguous instructions are the bedrock of reliable LLM output.”

The art of instruction involves anticipating how the LLM might interpret your request and proactively addressing potential ambiguities. It’s an iterative process where refining the prompt based on observed outputs is key to achieving consistent, structured results. This careful consideration of how to instruct the LLM is what bridges the gap between its generative capabilities and the structured data requirements of applications.

By mastering prompt engineering, developers can significantly increase the likelihood that LLM-generated data will be usable out-of-the-box, reducing the need for post-processing and manual error correction. This direct control over output format is a critical step towards building robust and scalable AI-powered systems.

The principle is simple: the more specific and clear your instructions, the more likely the LLM is to comply. Ambiguity is the enemy of structured output, and a well-crafted prompt leaves no room for misinterpretation.

A Practical Walkthrough: Implementing JSON Schema for LLM Output Validation

Let’s walk through the practical steps of using **JSON Schema** to ensure your LLM outputs are structured correctly and reliably. This process involves defining your schema, crafting effective prompts, and implementing validation.

Step 1: Define Your JSON Schema

The first and most critical step is to create a **JSON Schema** that accurately represents the desired structure of your **LLM output**. This schema acts as the definitive guide for what your data should look like.

Here’s a clear, illustrative example of a JSON Schema for a product description:

```json
{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "title": "Product",
  "description": "A product in the catalog",
  "type": "object",
  "properties": {
    "product_id": {
      "description": "Unique identifier for the product",
      "type": "string"
    },
    "name": {
      "description": "Name of the product",
      "type": "string"
    },
    "price": {
      "description": "Price of the product",
      "type": "number",
      "exclusiveMinimum": 0
    },
    "in_stock": {
      "description": "Whether the product is currently in stock",
      "type": "boolean"
    }
  },
  "required": ["product_id", "name", "price", "in_stock"]
}
```

When defining your schema, start with simpler structures and gradually add complexity as your needs evolve. It’s also highly advisable to maintain version control for your schemas, especially as your application or LLM integration matures. This ensures traceability and makes it easier to manage changes over time.

“Your JSON Schema is the contract; ensure it’s clear, comprehensive, and accurately reflects your data requirements.”

Consider all necessary fields, their expected data types, and any constraints on their values. This upfront work is crucial for setting the stage for reliable LLM output. A well-defined schema minimizes ambiguity for both the LLM and your downstream systems.

Step 2: Craft Your LLM Instructions (Prompt Engineering)

Next, you need to integrate the **JSON Schema** (or its essential components) into your LLM prompt. Explicitly instruct the LLM to generate output that conforms to this structure and specific **formatting**.

Use phrases like “Strictly adhere to this schema” or “Your response must be a JSON object validated against the following schema.” You might also tell the LLM how to handle edge cases or errors, if that is feasible within the prompt itself. For example, if a piece of information might be missing, you could specify a default value or a `null` representation, provided your schema permits it: the example schema above requires every field and does not allow `null`, so it would need to be relaxed first.
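If the prompt tells the model to use `null` for missing information, the schema has to allow it; otherwise a perfectly reasonable `null` fails validation. One common way, sketched below on a Python copy of the product schema, is a type union such as `["number", "null"]`. The field stays in `required`, so the model must still emit the key explicitly. The helper name `allow_null` is hypothetical.

```python
import copy
import json

PRODUCT_SCHEMA = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "type": "object",
    "properties": {
        "product_id": {"type": "string"},
        "name": {"type": "string"},
        "price": {"type": "number", "exclusiveMinimum": 0},
        "in_stock": {"type": "boolean"},
    },
    "required": ["product_id", "name", "price", "in_stock"],
}


def allow_null(schema: dict, field: str) -> dict:
    """Return a copy of `schema` in which `field` may also be JSON null."""
    updated = copy.deepcopy(schema)  # leave the original schema untouched
    prop = updated["properties"][field]
    current = prop["type"]
    types = current if isinstance(current, list) else [current]
    if "null" not in types:
        prop["type"] = types + ["null"]
    # exclusiveMinimum only applies to numbers, so a null price is not affected by it.
    return updated


NULLABLE_PRICE = allow_null(PRODUCT_SCHEMA, "price")

if __name__ == "__main__":
    print(json.dumps(NULLABLE_PRICE["properties"]["price"]))
```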

Example Prompt Snippet:

```
Please extract the product details from the following description and return them as a JSON object.

Your output MUST strictly adhere to the provided JSON schema:

{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "title": "Product",
  "description": "A product in the catalog",
  "type": "object",
  "properties": {
    "product_id": {
      "description": "Unique identifier for the product",
      "type": "string"
    },
    "name": {
      "description": "Name of the product",
      "type": "string"
    },
    "price": {
      "description": "Price of the product",
      "type": "number",
      "exclusiveMinimum": 0
    },
    "in_stock": {
      "description": "Whether the product is currently in stock",
      "type": "boolean"
    }
  },
  "required": ["product_id", "name", "price", "in_stock"]
}

Do NOT include any explanatory text before or after the JSON. Only the JSON object should be returned.

[Your product description goes here]
```
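In code, it is usually easier to keep the schema as data and render it into the prompt than to maintain an escaped copy by hand. Here is a minimal standard-library sketch; the template wording follows the prompt snippet in this step, and the names `PRODUCT_SCHEMA`, `PROMPT_TEMPLATE`, and `build_prompt` are hypothetical.

```python
import json

PRODUCT_SCHEMA = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "title": "Product",
    "description": "A product in the catalog",
    "type": "object",
    "properties": {
        "product_id": {"description": "Unique identifier for the product", "type": "string"},
        "name": {"description": "Name of the product", "type": "string"},
        "price": {"description": "Price of the product", "type": "number", "exclusiveMinimum": 0},
        "in_stock": {"description": "Whether the product is currently in stock", "type": "boolean"},
    },
    "required": ["product_id", "name", "price", "in_stock"],
}

PROMPT_TEMPLATE = """Please extract the product details from the following description and return them as a JSON object.

Your output MUST strictly adhere to the provided JSON schema:

{schema}

Do NOT include any explanatory text before or after the JSON. Only the JSON object should be returned.

{description}"""


def build_prompt(description: str, schema: dict = PRODUCT_SCHEMA) -> str:
    """Render the schema as indented JSON and substitute it into the template."""
    # Braces inside the substituted values are not re-parsed by str.format,
    # so the schema's own { } characters need no escaping.
    return PROMPT_TEMPLATE.format(schema=json.dumps(schema, indent=2), description=description)


if __name__ == "__main__":
    print(build_prompt("The Super Widget (SKU XYZ123) costs $19.99 and ships today."))
```

Because the prompt is generated from the same dict the validator uses, the two cannot silently drift apart.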

Step 3: Generate and Validate LLM Output

Once your prompt is ready, send it to the LLM and capture the generated **LLM output**. The crucial next step is performing **data validation**. This involves programmatically checking the captured output against your defined **JSON Schema**.

Numerous tools and libraries exist to assist with this **data validation**:

- Python: `jsonschema` validates JSON data against a JSON Schema document; `pydantic` validates data against Python model classes and can also generate a JSON Schema from them.

- Node.js: The `ajv` (Another JSON Schema Validator) library is a popular and performant choice.

- Prompt-Testing Frameworks: Tools such as Promptfoo (www.promptfoo.dev/docs/guides/evaluate-json/) are designed to test prompts and evaluate their outputs, including JSON validation.
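For Python, a minimal validation step with the `jsonschema` package (a third-party library, installed separately) might look like the sketch below. It parses the raw text strictly, so extraneous prose is rejected rather than repaired, and it collects every schema violation instead of stopping at the first. The function name `validate_llm_output` and its return shape are illustrative choices, not part of the library.

```python
import json
from typing import Optional

from jsonschema import Draft7Validator

PRODUCT_SCHEMA = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "type": "object",
    "properties": {
        "product_id": {"type": "string"},
        "name": {"type": "string"},
        "price": {"type": "number", "exclusiveMinimum": 0},
        "in_stock": {"type": "boolean"},
    },
    "required": ["product_id", "name", "price", "in_stock"],
}

# Fail fast if the schema itself is malformed, then build the validator once.
Draft7Validator.check_schema(PRODUCT_SCHEMA)
VALIDATOR = Draft7Validator(PRODUCT_SCHEMA)


def validate_llm_output(raw: str) -> tuple[Optional[dict], list[str]]:
    """Return (data, []) on success, or (None, error messages) on failure."""
    try:
        data = json.loads(raw)  # strict: any leading or trailing prose is a parse error
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg} (line {exc.lineno}, column {exc.colno})"]
    # iter_errors yields every violation; prefix each with the offending field path.
    messages = sorted(
        f"{'/'.join(str(p) for p in err.path) or '(root)'}: {err.message}"
        for err in VALIDATOR.iter_errors(data)
    )
    return (None, messages) if messages else (data, [])


if __name__ == "__main__":
    print(validate_llm_output('{"product_id": "XYZ123", "name": "Super Widget", "in_stock": "true"}'))
```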

This validation step is non-negotiable. It’s the final gatekeeper ensuring that the data you receive from the LLM is in the correct format and structure. If the output fails validation, it should ideally be flagged, and potentially a retry mechanism or error handling process should be triggered.

An advanced technique involves feeding validation errors back to the LLM, prompting it to correct its own output. This can create a loop of self-correction, though it requires careful implementation to avoid infinite loops.
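A bounded version of that self-correction loop is sketched below. The model call and the validator are passed in as plain functions: `call_llm` is a hypothetical stand-in for whatever client you use, and `validate` can be any function that returns a list of error strings, for example a wrapper around a JSON Schema validator. The `max_attempts` cap is what rules out an infinite loop.

```python
import json
from typing import Callable, Optional


def generate_with_repair(
    prompt: str,
    call_llm: Callable[[str], str],
    validate: Callable[[object], list[str]],
    max_attempts: int = 3,
) -> tuple[Optional[dict], list[str]]:
    """Request JSON, feed validation errors back to the model, stop after max_attempts."""
    current_prompt = prompt
    errors: list[str] = []
    for _ in range(max_attempts):
        raw = call_llm(current_prompt)
        try:
            data = json.loads(raw)
            errors = validate(data)
        except json.JSONDecodeError as exc:
            data, errors = None, [f"invalid JSON: {exc.msg}"]
        if not errors:
            return data, []
        # Show the model its previous answer and the concrete errors to fix.
        current_prompt = (
            f"{prompt}\n\nYour previous response was:\n{raw}\n\n"
            "It failed validation with these errors:\n- " + "\n- ".join(errors)
            + "\n\nReturn a corrected JSON object only."
        )
    return None, errors  # the caller decides whether to log, alert, or fall back
```

Usage with a fake model that answers with prose first and valid JSON second:

```python
replies = iter(['Sure! {"name": "Widget"}', '{"name": "Widget"}'])
data, errors = generate_with_repair(
    "Give me the product as JSON.",
    call_llm=lambda p: next(replies),
    validate=lambda d: [] if isinstance(d, dict) and "name" in d else ["missing name"],
)
print(data, errors)
```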

“Validation is not a suggestion; it’s the cornerstone of trust in LLM-generated structured data.”

Step 4: Iteration and Refinement

Achieving perfect LLM output that consistently adheres to a schema is often an iterative process. You’ll likely need to analyze validation failures to refine your prompts, adjust your schemas, or improve the LLM’s **instructions**. For example, if the LLM consistently fails to provide a specific field, you might need to make the instruction for that field more explicit or provide a clearer example.

Maintaining clear schema versioning throughout this refinement process is essential. It helps you track changes, understand why a prompt that worked before might not be working now, and manage the evolution of your data structure. This cycle of define, prompt, generate, validate, and refine is key to unlocking reliable structured data from LLMs.

“The journey to perfect structured LLM output is iterative. Embrace refinement.”

By following these steps, you can transform the often unpredictable nature of LLM outputs into a dependable source of structured data, ready for integration into your applications.

Advanced Strategies and Best Practices

Beyond the fundamental steps, several advanced strategies can further improve the reliability of LLM outputs formatted with **JSON Schema** and let you handle more complex data. Understanding these can unlock more sophisticated applications.

Handling Complex Data Types: JSON Schema is incredibly versatile. It can handle complex data types beyond simple strings and numbers:

- Enums: Restrict a value to a fixed list of allowed values (e.g., `"status": {"enum": ["pending", "processing", "completed"]}`).

- Arrays: Define the structure of items within a list, including whether they must all be of the same type or can be mixed.

- Nested Objects: Create hierarchical data structures by embedding objects within objects.

- Custom Validation Rules: Use regular expressions (the `pattern` keyword) for intricate string validation, or define custom formats. You can also specify `minLength`, `maxLength`, `minimum`, `maximum`, `exclusiveMinimum`, `exclusiveMaximum`, and `multipleOf` for precise value constraints.
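The schema below, written as a Python dictionary so it can be serialized with `json.dumps`, combines these keywords for a hypothetical order record: a `pattern` on the order ID, an `enum` status, an array of nested line-item objects, a nested shipping object, and numeric and length constraints. Field names and limits are invented for illustration.

```python
import json

ORDER_SCHEMA = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "type": "object",
    "properties": {
        "order_id": {"type": "string", "pattern": "^ORD-[0-9]{6}$"},  # regex constraint
        "status": {"enum": ["pending", "processing", "completed"]},   # fixed set of values
        "items": {
            "type": "array",
            "minItems": 1,
            "items": {  # every element of the array must match this object shape
                "type": "object",
                "properties": {
                    "product_id": {"type": "string", "minLength": 1},
                    "quantity": {"type": "integer", "minimum": 1},
                    "unit_price": {"type": "number", "exclusiveMinimum": 0},
                },
                "required": ["product_id", "quantity", "unit_price"],
                "additionalProperties": False,
            },
        },
        "shipping": {  # nested object
            "type": "object",
            "properties": {"country": {"type": "string", "minLength": 2, "maxLength": 2}},
            "required": ["country"],
        },
    },
    "required": ["order_id", "status", "items"],
    "additionalProperties": False,
}

if __name__ == "__main__":
    # json.dumps turns Python False into JSON false, ready to embed in a prompt or file.
    print(json.dumps(ORDER_SCHEMA, indent=2))
```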

When instructing the LLM, you may need to be even more explicit about these complex types, perhaps by providing examples of nested structures or using specific terminology that the LLM is likely to understand from its training data.

Robust Data Validation Strategies: To ensure data integrity, consider these:

- Strict Schema Definitions: Define your schema as strictly as possible, using `required` fields, precise type constraints, and `"additionalProperties": false` to reject unexpected fields. Less flexibility for the LLM means more predictability.

- Prioritize Rejection: It’s generally better to reject an output that slightly deviates from the schema than to try and “fix” it automatically with risky parsing recovery. Rejecting allows you to trigger a retry or alert the user.

- Schema Evolution with Care: When updating schemas, ensure backward compatibility if possible or implement robust version management in your validation system.

Effective Error Handling and Fallback Mechanisms: When **LLM output** fails validation, a graceful fallback is crucial:

- Retry Mechanisms: Implement a system to retry the prompt a few times, perhaps with a slightly modified prompt or a different LLM temperature setting.

- Request Corrections: If the LLM supports it, prompt it to identify and correct the specific validation error. This can be very effective.

- Apply Default Values: For non-critical fields that failed validation, you might have predefined default values to use instead.

- Alerting and Logging: Ensure that all validation failures are logged for analysis and that critical failures trigger alerts to developers or operations teams.
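The default-value and logging ideas can be combined into a small post-validation step. This sketch assumes your validator already reports which fields are invalid; the set of non-critical fields and their defaults in `NON_CRITICAL_DEFAULTS` are hypothetical policy choices. In keeping with the preference for rejection above, any invalid critical field causes the whole output to be rejected rather than patched.

```python
import logging
from typing import Optional

logger = logging.getLogger("llm_output")

# Hypothetical policy: only these fields may be replaced by a default value.
NON_CRITICAL_DEFAULTS = {"in_stock": False, "description": ""}


def apply_fallbacks(data: dict, invalid_fields: list[str]) -> Optional[dict]:
    """Patch invalid non-critical fields; return None if any critical field is invalid."""
    critical = [f for f in invalid_fields if f not in NON_CRITICAL_DEFAULTS]
    if critical:
        logger.error("Rejecting LLM output; critical fields invalid: %s", critical)
        return None
    patched = dict(data)  # shallow copy so the caller's dict is not modified
    for field in invalid_fields:
        default = NON_CRITICAL_DEFAULTS[field]
        logger.warning("Replacing invalid field %r with default %r", field, default)
        patched[field] = default
    return patched


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(apply_fallbacks({"name": "Widget", "in_stock": "yes"}, ["in_stock"]))
    print(apply_fallbacks({"name": "Widget", "price": "cheap"}, ["price"]))
```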

Optimizing Prompt Instructions: To maximize compliance:

- Clarity and Conciseness: While being explicit, avoid overly verbose prompts that might confuse the LLM.

- Example-Driven Guidance: As mentioned, providing a well-formed example of the desired JSON output is often the most effective way to guide the LLM.

- Few-Shot Learning: For critical tasks, providing a few examples of input-output pairs that demonstrate correct JSON formatting can significantly improve performance.
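A few-shot prompt can be assembled from input/output pairs, serializing each output with `json.dumps` so every demonstration is guaranteed to be syntactically valid JSON (double quotes, lowercase `true`/`false`). The example products and the function name `build_few_shot_prompt` are hypothetical.

```python
import json

# Hypothetical demonstration pairs: raw description -> expected JSON object.
EXAMPLES = [
    ("Super Widget, SKU XYZ123, $19.99, currently in stock.",
     {"product_id": "XYZ123", "name": "Super Widget", "price": 19.99, "in_stock": True}),
    ("Mega Gadget (ABC999) sells for $5 but is sold out.",
     {"product_id": "ABC999", "name": "Mega Gadget", "price": 5, "in_stock": False}),
]


def build_few_shot_prompt(description: str) -> str:
    """List worked examples first, then leave the final Output slot for the model."""
    parts = ["Convert each product description into a JSON object. Output only the JSON."]
    for text, obj in EXAMPLES:
        # json.dumps guarantees each demonstration output is valid JSON.
        parts.append(f"Input: {text}\nOutput: {json.dumps(obj)}")
    parts.append(f"Input: {description}\nOutput:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_few_shot_prompt("Tiny Thing (TT0001), $2.50, in stock"))
```

Ending the prompt with an empty `Output:` slot nudges the model to continue the established pattern with a bare JSON object.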

“Advanced techniques turn LLMs into precise data machinery, not just text generators.”

By implementing these advanced strategies, you can leverage **JSON Schema** to create highly reliable and sophisticated data pipelines powered by LLMs. This moves beyond basic data extraction to building complex applications where LLM-generated data is a foundational, trusted component.

The key is to treat the LLM less like a magic box and more like a highly capable but literal assistant that requires precise, unambiguous instructions and verification. The combination of a well-defined **JSON Schema**, expert prompt engineering, and diligent **data validation** is what unlocks the true potential of LLMs for structured data generation.