“`html

Navigating Gemini Pro Function Call Tooling Errors: A Comprehensive Guide

Estimated reading time: 15 minutes

Key Takeaways

- Encountering errors with AI tools, especially when they disrupt expected functionality, is a common frustration.

- This post focuses on understanding and resolving prevalent issues related to “Gemini Pro function call tooling errors” and “AI agent not calling tools.”

- Errors can stem from incorrect tool definitions, parameter type mismatches, API issues, unexpected tool responses, and network problems.

- Troubleshooting involves verifying tool definitions, checking parameters, validating API accessibility, and examining execution logs.

- Platform-specific errors, such as Facebook’s “content not available” and general AI “cannot fulfill request” errors, also require targeted solutions.

- Network issues, like ERR_NETWORK_CHANGED, can indirectly lead to AI tool failures by interrupting API calls.

- Adopting best practices like robust tool integration, API maintenance, clear prompting, and network stability monitoring is crucial for error prevention.

- Community sharing and proactive error logging can significantly aid in resolving complex AI tooling issues.

Table of contents

- Navigating Gemini Pro Function Call Tooling Errors: A Comprehensive Guide

- Key Takeaways

- Understanding Gemini Pro Function Call Tooling Errors

- Troubleshooting AI Agent Tooling Issues

- Resolving Common Platform-Specific Errors

- Network-Related Errors and Their Impact

- Best Practices for Avoiding Errors

- Frequently Asked Questions

It’s a moment of frustration familiar to many AI developers and users: you set up an AI agent with specific tools, expecting seamless interaction, only to be met with errors. Perhaps the agent fails to execute a crucial function, or worse, it claims it cannot fulfill a request that seems well within its capabilities. This is particularly common when working with advanced models like Gemini Pro and its function calling capabilities. This post aims to demystify these issues, focusing on “Gemini Pro function call tooling errors” and the related scenario of an AI agent not calling its tools. We will explore the common causes, offer actionable troubleshooting steps, and discuss preventative measures to help your AI agents operate as intended.

Understanding Gemini Pro Function Call Tooling Errors

Before diving into troubleshooting, it’s essential to grasp what function calls are in the context of AI models like Gemini Pro.

Define Function Calls in Gemini Pro:

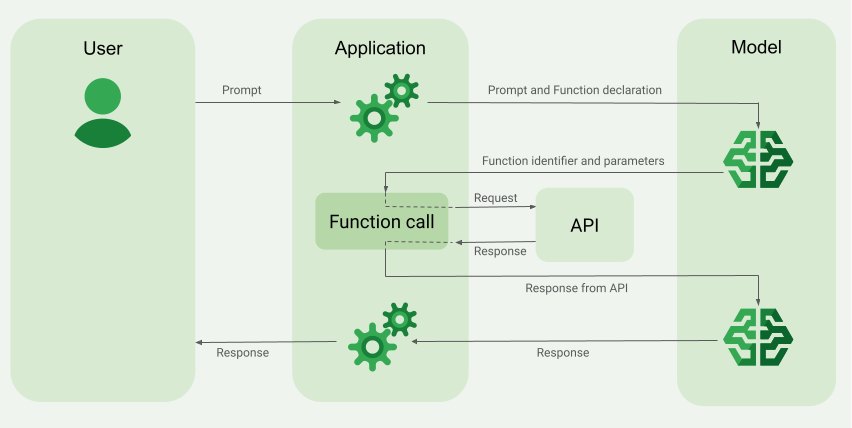

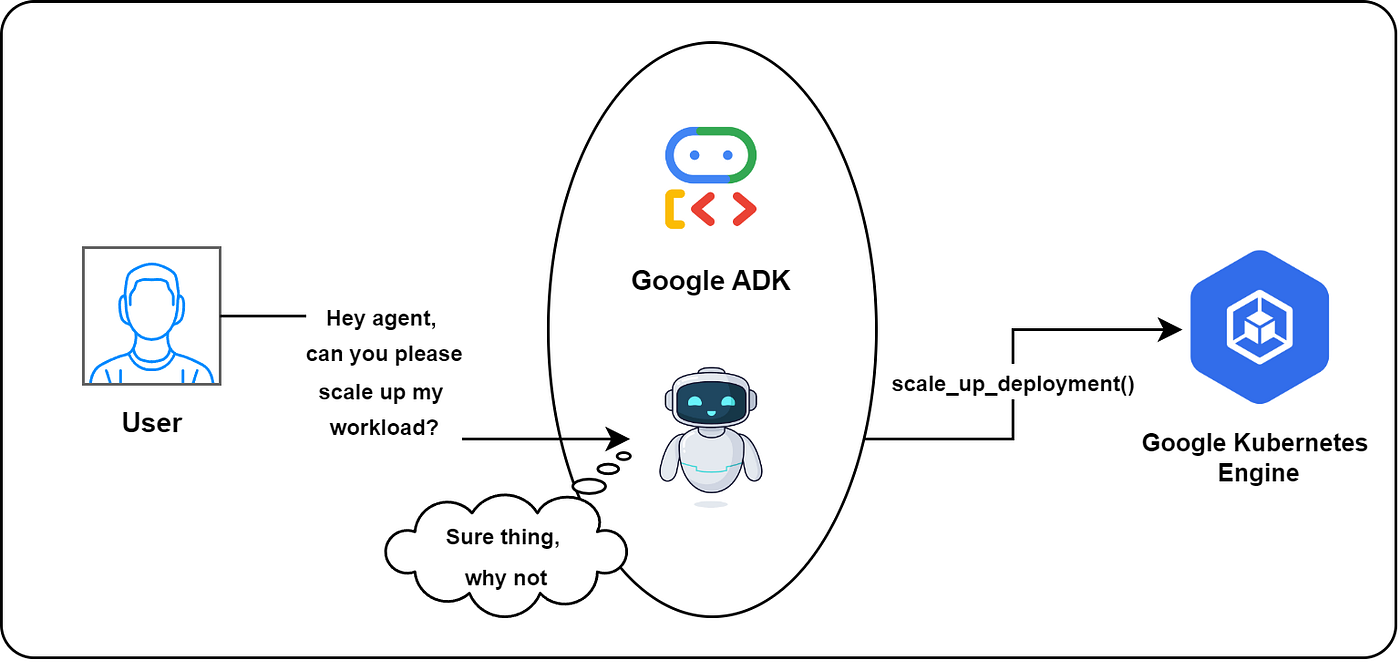

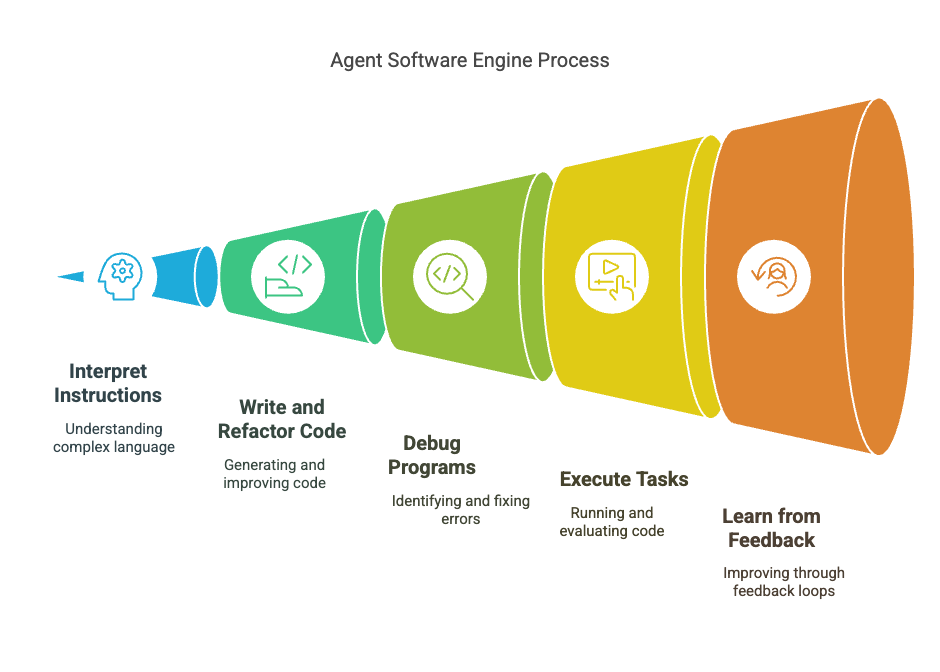

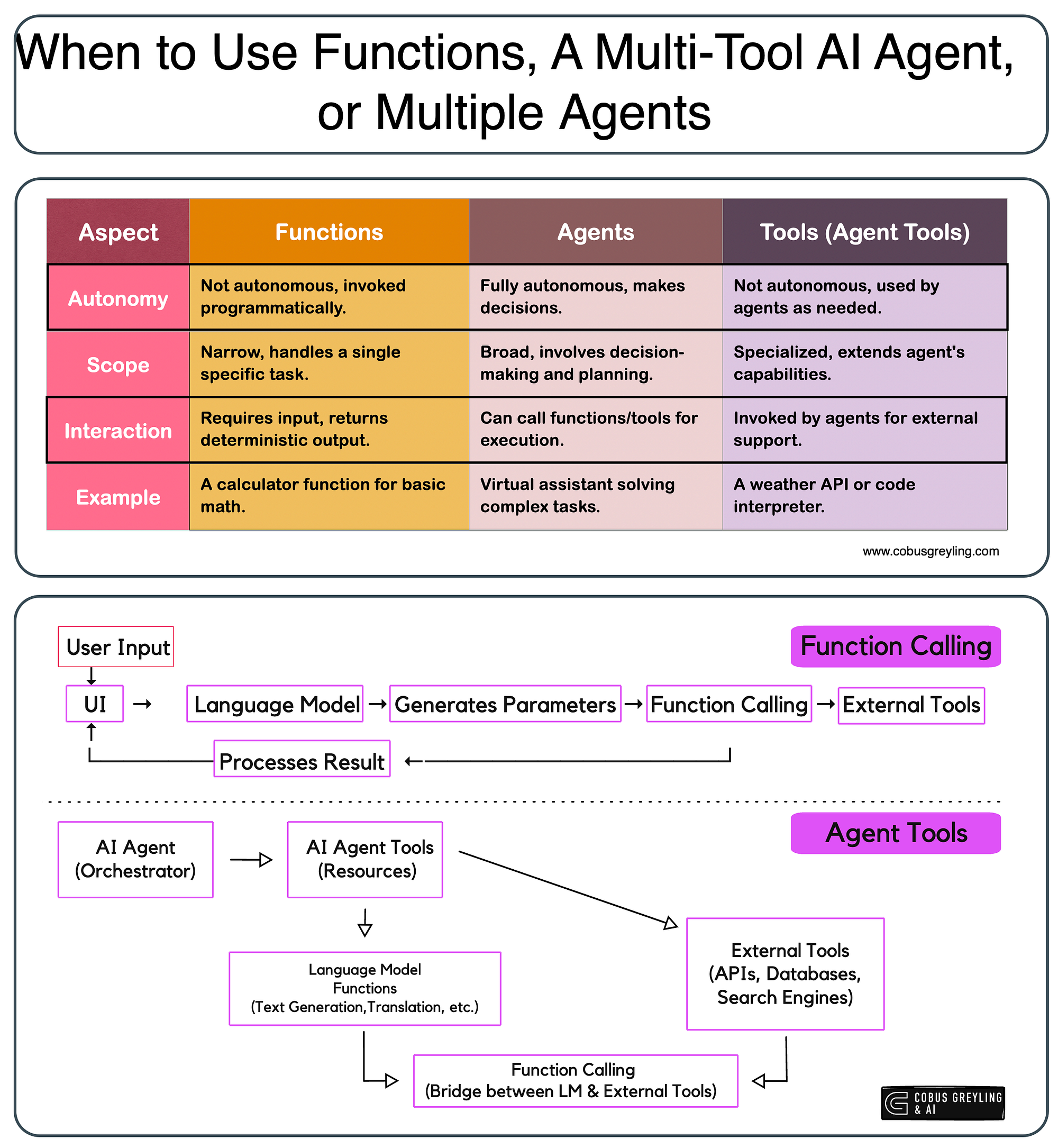

Function calling is a powerful feature that allows large language models (LLMs) to interact with external resources by executing defined functions or APIs. Essentially, it enables the AI to “call out” to other services to perform specific actions, such as editing files, fetching real-time data, running commands, or interacting with a database. This bridges the gap between an AI’s understanding and the ability to take concrete actions in the digital world. This capability is critical for building sophisticated AI agents that can go beyond simple text generation and perform complex tasks. Gemini Pro’s function call tooling is what enables these interactions.
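As a rough illustration of the flow described above, the sketch below shows the application side of a function call: the model emits a structured request (a function name plus arguments), and the application looks up and runs the matching local function. The `get_weather` tool, the `TOOLS` registry, and the dictionary shape of `model_call` are hypothetical stand-ins chosen for illustration, not the Gemini SDK’s actual objects.

```python
from typing import Any, Callable, Dict


def get_weather(city: str) -> Dict[str, Any]:
    """Hypothetical tool; a real one would query a weather API."""
    return {"city": city, "forecast": "sunny"}


# Registry mapping tool names (as declared to the model) to local callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_call: Dict[str, Any]) -> Dict[str, Any]:
    """Run the tool named in a model-issued call and wrap the outcome."""
    name = model_call.get("name")
    args = model_call.get("args", {})
    tool = TOOLS.get(name)
    if tool is None:
        # The model asked for a tool that was never registered.
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": tool(**args)}
    except TypeError as exc:
        # Typically raised when the arguments do not match the signature.
        return {"ok": False, "error": f"bad arguments: {exc}"}


# Assumed shape of a function call emitted by the model.
print(dispatch({"name": "get_weather", "args": {"city": "Paris"}}))
```

Returning a structured error instead of raising gives the application something concrete to send back to the model or to log, which helps with the failure modes discussed in the rest of this post.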

Common Causes of “Gemini Pro Function Call Tooling Errors”:

When these function calls fail, the result can be a cascade of problems, often manifesting as an AI agent that does not call its tools. Several factors can contribute to these errors:

- Incorrect Tool Definition: Errors frequently originate from how the functions or tools are described to the AI. This includes improperly structured function declarations, missing required parameters that the AI expects, or a schema that doesn’t precisely align with the AI’s interpretation of its capabilities. The AI needs a clear, unambiguous blueprint of the tool it can use. If this blueprint is flawed, the AI won’t know how or when to invoke it. Vague or inaccurate tool descriptions cause the same problem.

- Parameter Type Mismatches: Even if the tool is defined correctly, passing data in the wrong format can cause failures. For instance, expecting a JSON object but receiving a JSON string, or not adhering to Vertex AI’s specific data type requirements for parameters, will lead to errors. The AI might correctly identify that a function needs to be called, but if it can’t prepare the arguments correctly, the call will fail. This is a common pitfall, reported both in discussions of parameter mismatches and in broader issues with platform integrations.

- API Issues: The tools often rely on external APIs, and problems with these APIs can directly cause function call failures. These range from unreachable endpoints (due to server downtime or network issues) to invalid authentication credentials that prevent the AI from accessing the service. Unexpected changes in upstream API versions can also break the integration. API accessibility and authentication problems are frequent sources of error, and similar concerns have been raised about Gemini Pro vision and function calling.

- Unexpected Tool Responses: The AI model needs to understand the output from the tools it calls. Issues arise when tools return data in formats the AI model cannot process, or when tool outputs change unexpectedly. If a tool that used to return a simple string now returns a complex JSON object without the AI being updated to handle it, the AI may misinterpret the result and fail to proceed correctly. Users have reported that tool functionality can be unreliable due to unexpected responses.

- Network Problems: Disruptions in network connectivity can interfere with API calls or the availability of underlying services. If the AI cannot reach the API endpoint, or if its own connection to the AI service is unstable, function calls are bound to fail. These issues are often subtle but critical. Users facing “I am sorry, I cannot fulfill this request” errors have traced them to network problems, which also contribute to the general unreliability reported for tool functionality.
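To make the first cause in the list above more concrete, here is a minimal sketch of a pre-flight check for tool declarations. It assumes the common JSON-Schema-style layout (a `name`, a `description`, and a `parameters` object with `properties` and `required`); the `lint_declaration` helper and the `get_user_data` declaration are hypothetical and exist only for illustration.

```python
from typing import Any, Dict, List


def lint_declaration(decl: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a tool declaration (empty if none)."""
    problems: List[str] = []
    if not decl.get("name"):
        problems.append("missing name")
    if not decl.get("description"):
        problems.append("missing description")
    params = decl.get("parameters")
    if params is None:
        return problems  # a tool without parameters is acceptable here
    if params.get("type") != "object":
        problems.append("parameters.type should be 'object'")
    properties = params.get("properties", {})
    for prop, spec in properties.items():
        if "type" not in spec:
            problems.append(f"parameter '{prop}' has no type")
    for required in params.get("required", []):
        if required not in properties:
            problems.append(f"required parameter '{required}' is not defined")
    return problems


# Hypothetical declaration with two deliberate mistakes.
declaration = {
    "name": "get_user_data",
    "description": "Fetch a user's profile by ID.",
    "parameters": {
        "type": "object",
        "properties": {"user_id": {}},      # no type given
        "required": ["user_id", "region"],  # 'region' is never defined
    },
}

for problem in lint_declaration(declaration):
    print(problem)
```

Running a check like this before registering tools catches structural mistakes early, although it cannot tell you whether a description is clear enough for the model to pick the right tool.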

Impact of These Errors:

The direct consequence of these errors is that the AI agent is not calling tools as intended. This can lead to a variety of undesirable outcomes:

- The AI might respond with generic failure messages like, “*I am sorry, I cannot fulfill this request*.”

- There can be significant delays in processing as the AI attempts and fails to execute functions.

- The outputs generated by the AI become unreliable, either incorrect or incomplete.

- Users may receive confusing empty responses, indicating that the AI attempted an action but received no usable output.

These impacts disrupt the user experience and undermine confidence in the AI’s capabilities. Community reports describe how these issues produce the frustrating “I am sorry, I cannot fulfill this request” response, contribute to general unreliability, and break integrations.

Troubleshooting AI Agent Tooling Issues

When your AI agent isn’t behaving as expected and you suspect tool-related errors, a systematic troubleshooting approach is key. Let’s break down the general diagnostic steps for an “AI agent not calling tools.”

General Diagnostic Steps for “AI Agent Not Calling Tools”:

- Verify Tool Definitions: This is the foundational step. Carefully confirm that all your tools are correctly defined according to the expected schema. Pay close attention to accurate names, clear and concise descriptions (which the AI uses to understand when to use a tool), and precise parameter definitions. Ensure there are no typos or structural inconsistencies. Cross-reference developer issue reports and community discussions for common pitfalls.

- Check Tool Parameters and Types: Once the definition is sound, scrutinize the parameters being passed. Ensure that the data types of these parameters precisely match the requirements of the target API or function. A common mistake is passing a JSON object as a string when the API expects a true JSON object, or vice versa. Small discrepancies here can lead to significant failures. Agno issues and Make integration issues contain examples of these problems.

- Confirm Tool Binding and Model Compatibility: Ensure the tool is correctly associated with the specific AI agent or model instance you are using. Sometimes tools are defined globally but not properly bound to the active agent. Additionally, verify that the specific version of the AI model you are using actually supports function calling. Older versions or different model families might have varying capabilities. LangchainJS discussions often cover binding issues, and the Vertex AI documentation is the authoritative source for model support.

- Validate API Accessibility: If your tools interact with external APIs, perform a sanity check on the APIs themselves. Verify that the API endpoints are reachable from your environment. Check that all authentication credentials (API keys, tokens, etc.) are valid, correctly configured, and have the necessary permissions. Problems here are external to the AI model but directly impact its ability to use the tools.

- Examine Execution Logs: Most AI frameworks and platforms provide execution logs, and these are often the quickest way to pinpoint the exact point of failure. Look for detailed error messages and for clues such as required parameters that were not provided, content restrictions that were violated, or format errors that occurred during the execution of a tool.

- Address “Cannot Fulfill Request” Errors: When the AI responds with “*cannot fulfill this request*,” it’s often a sign that it couldn’t find or execute a suitable tool. Check whether the requested functionality is actually available through the configured tools. Investigate any API errors that might have occurred. You may need to refine the tool definitions or the prompt to make the request clearer and more aligned with the AI’s capabilities. Google developer discussions and Cursor forum posts offer insights into this specific error.

- Implement Context Restoration: In complex, multi-turn conversations, the AI’s internal state or context can sometimes become corrupted, leading to errors. If you observe recurring issues after a series of interactions, consider explicitly instructing the AI agent to re-read the relevant context or to restore its tool state. This can prevent a minor glitch from snowballing into a larger problem. Reports in the Cursor forums sometimes touch on these state management issues.
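The parameter check in the list above can be sketched in a few lines. This is an illustrative helper, not part of any SDK: `check_args`, `PY_TYPES`, and the sample schema are hypothetical, and the code assumes JSON-Schema-style type names. It reports missing or mistyped arguments and repairs the common case where a JSON object arrives as a string.

```python
import json
from typing import Any, Dict, List, Tuple

# Map JSON-Schema-style type names to Python types.
PY_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_args(schema: Dict[str, Any], args: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return (possibly repaired) arguments and a list of type errors."""
    fixed = dict(args)
    errors: List[str] = []
    for name in schema.get("required", []):
        if name not in fixed:
            errors.append(f"missing required parameter '{name}'")
    for name, spec in schema.get("properties", {}).items():
        if name not in fixed:
            continue
        value, expected = fixed[name], spec.get("type")
        # Repair the common case of a JSON object or array sent as a string.
        if expected in ("object", "array") and isinstance(value, str):
            try:
                value = fixed[name] = json.loads(value)
            except json.JSONDecodeError:
                pass
        py_type = PY_TYPES.get(expected)
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if py_type and (bool_as_number or not isinstance(value, py_type)):
            errors.append(f"'{name}' should be {expected}, got {type(value).__name__}")
    return fixed, errors


schema = {
    "type": "object",
    "properties": {"filters": {"type": "object"}, "limit": {"type": "integer"}},
    "required": ["filters"],
}
args, errors = check_args(schema, {"filters": '{"active": true}', "limit": "10"})
print(args["filters"])  # the string has been decoded into a dict
print(errors)           # 'limit' is still reported as a type error
```

The helper deliberately repairs only the string-to-object case and reports everything else, since silently coercing values such as `"10"` to `10` can hide real bugs in the calling code.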

Prompting Best Practices for Tool Usage:

The way you prompt the AI significantly influences its ability to use tools effectively.

It’s crucial to phrase your requests carefully so that they are clear, unambiguous, and map directly to the available tool functionality. Where possible, provide step-by-step instructions within the prompt itself, or define fallback mechanisms for error handling. For example, you might prompt the AI to “first try to get the data using the `getUserData` tool, and if that fails, try to fetch it from the public profile API.” Structured prompting of this kind can preempt many potential errors and improve reliability.
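The same fallback order can also be enforced in application code rather than left entirely to the prompt. The sketch below is one possible way to do that, under stated assumptions: `get_user_data` stands in for the `getUserData` tool and is hard-coded to fail, `get_public_profile` stands in for the public profile API, and `first_success` is a hypothetical helper.

```python
from typing import Any, Callable, Dict, Sequence


def get_user_data(user_id: str) -> Dict[str, Any]:
    """Stand-in for the getUserData tool; always fails here to show fallback."""
    raise ConnectionError("primary user service unreachable")


def get_public_profile(user_id: str) -> Dict[str, Any]:
    """Stand-in for the public profile API."""
    return {"user_id": user_id, "source": "public_profile"}


def first_success(
    tools: Sequence[Callable[[str], Dict[str, Any]]], user_id: str
) -> Dict[str, Any]:
    """Try each tool in order and return the first result that succeeds."""
    failures = []
    for tool in tools:
        try:
            return tool(user_id)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            failures.append(f"{tool.__name__}: {exc}")
    raise RuntimeError("all tools failed: " + "; ".join(failures))


print(first_success([get_user_data, get_public_profile], "u-123"))
```

Collecting every failure message before raising keeps the final error informative, which is useful when the fallback itself fails.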

Resolving Common Platform-Specific Errors

Beyond the general function call errors, users sometimes encounter specific issues tied to the platforms they are integrating with or the nature of AI responses.

Facebook Errors: Fixing the “content not available” Error

While not directly related to Gemini Pro function calls, the “content not available” error on Facebook is a common issue that can arise when AI agents attempt to interact with or report on social media content. Understanding its causes and resolutions is important for broader AI integration:

- Explanation of Causes: This error typically occurs for several reasons:

  - Network Connectivity Problems: A weak or unstable internet connection can prevent the successful loading of content.

  - Account Restrictions: If your Facebook account has been temporarily restricted or flagged, you might lose access to certain content.

  - Content Deletion: The most common reason is that the content (post, photo, video) has been deleted by the user or removed by Facebook.

  - Privacy Settings Changes: The owner of the content may have changed their privacy settings, making it inaccessible to you or the AI agent.

  - Platform Issues: Occasionally, Facebook itself experiences temporary glitches or server issues.

- Resolution Steps: To address this, users should systematically:

  - Verify Internet Connection: Ensure you have a stable and strong internet connection.

  - Check Facebook Login Status: Make sure you are properly logged into your Facebook account.

  - Confirm Content Existence: Try to access the content directly through a browser to see if it still exists and is visible to you.

  - Review Privacy Settings: If you own the content or have control over it, check and adjust privacy settings.

  - Wait and Retry: If it appears to be a platform-wide issue, waiting a short period and retrying might resolve the problem.

General AI Errors: Solving the “cannot fulfill request” Error

This is a common catch-all error message that indicates the AI model was unable to process a user’s request. It often overlaps with function call failures but can also occur in simpler interactions.

- Explanation of Causes: This error occurs when the AI model:

  - Lacks the inherent capability or knowledge to perform the requested task.

  - Does not have access to the necessary tools or functions to fulfill the command.

  - Receives an ambiguous or poorly phrased prompt that it cannot interpret.

  - Is asked to perform an action that is outside its defined scope or ethical guidelines.

  - Encounters an internal processing error or a bug.

  This is consistent with the issues seen in Gemini Pro function call errors and the general unreliability mentioned in Cursor forum discussions.

- Resolution Strategies: To resolve this, users should:

  - Rephrase the Request: Try asking the question or giving the command in a different way, providing more specific context or breaking down complex tasks into simpler, sequential steps.

  - Simplify the Prompt: Remove jargon or overly technical terms if the AI might not understand them.

  - Verify Tool and Model Capabilities: Ensure the requested operation aligns with the available tool functions and the general capabilities of the AI model. Consult the model’s documentation or available tools list.

  - Check for Conflicts: If multiple tools are available, the AI might be confused about which one to use. You might need to explicitly guide it.

  - Ensure Compatibility: If the error persists, double-check the compatibility between the AI model version and the tools being integrated, as suggested in LangchainJS discussions.

Network-Related Errors and Their Impact

Network stability is a bedrock requirement for almost all online services, including AI tools that rely on external APIs and cloud infrastructure. Network disruptions can manifest in various ways, impacting AI functionality indirectly but significantly.

Understanding and Troubleshooting ERR_NETWORK_CHANGED in Chrome:

The `ERR_NETWORK_CHANGED` error, commonly seen in Chrome, signifies that the browser or the operating system has detected a change in the network connection while an operation was in progress. The trigger could be switching between Wi-Fi and Ethernet, connecting to a new Wi-Fi network, or even a brief interruption in your internet service. In the context of AI tools, such a change can abruptly interrupt API calls, data transfers, or the communication channel between the user and the AI service, leading to failed operations.

Common Causes:

- Switching between different network types (e.g., Wi-Fi to Ethernet, or to a mobile hotspot).

- Connecting to or disconnecting from a VPN service.

- Manual network resets performed by the user or system.

- Temporary instability with your Internet Service Provider (ISP).

- Router or modem reboots.

Solutions:

- Reconnect to Network: Sometimes, simply reconnecting to your current network can resolve the issue.

- Restart Chrome: Closing and reopening the browser can clear temporary network state issues.

- Avoid Network Switches: During critical online sessions, try to maintain a stable network connection and avoid switching.

- Reset Network Adapters: On your device, you can try resetting the network adapters (e.g., Wi-Fi or Ethernet adapter) through your operating system’s network settings.

- Check Router/Modem: Ensure your network hardware is functioning correctly and is up-to-date.

Indirect Impact on AI Tools:

The connection between `ERR_NETWORK_CHANGED` and AI errors might not be immediately obvious, but it’s critical. When an AI agent makes a function call that requires an API request, and the network connection drops or changes mid-request, the call will fail. This can lead to timeouts, incomplete data transfers, or corrupted responses. The AI or the surrounding application may then report these failures as a “Gemini Pro function call tooling error” or simply return a generic “cannot fulfill request” message. Network stability is therefore a fundamental requirement for reliable AI tools, and reports in Google developer discussions and Cursor forum posts point to network problems as a direct cause of failed function calls.
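One common mitigation for interrupted requests is to retry with exponential backoff. The sketch below is a minimal version under stated assumptions: `call_with_retry` is a hypothetical helper, `flaky_api_call` only simulates a request that fails when the network changes, and the code catches Python’s built-in `ConnectionError` and `TimeoutError`. Real HTTP clients raise their own exception types, which you would add to the `except` clause.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Call fn, retrying on connection-style errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fn()
        except (ConnectionError, TimeoutError):
            if attempt == attempts - 1:
                raise  # out of retries: surface the original error
            # Delay doubles each attempt; jitter avoids synchronized retries.
            time.sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise ValueError("attempts must be at least 1")


calls = {"count": 0}


def flaky_api_call() -> str:
    """Simulated API call that fails twice, as if the network changed mid-request."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("network changed")
    return "ok"


print(call_with_retry(flaky_api_call, base_delay=0.01))
```

Retries are only safe for operations that can be repeated without side effects; a tool that sends a payment or writes a record would need an idempotency strategy as well.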

Best Practices for Avoiding Errors

Proactive measures are always more effective than reactive troubleshooting. Implementing a set of best practices can significantly reduce the occurrence of errors related to AI tooling.

- Robust Tool Integration: Always prioritize using well-defined, validated, and thoroughly tested tool configurations. Ensure they are correctly bound to the AI agent, following the specific guidelines provided in the official documentation for your chosen framework or platform. Referencing established patterns in communities like Agno, LangchainJS, and Google’s own Vertex AI documentation can help.

- API Maintenance: Treat your integrated APIs as critical infrastructure. Regularly test their availability and performance. Keep authentication credentials up-to-date and ensure any changes in API versions are managed proactively to prevent integration failures. Don’t let your tools rely on outdated or insecure endpoints.

- Clear Prompting: Invest time in crafting prompts that are clear, specific, and unambiguous. The more precise your instructions, the better the AI model can understand your intent and select the appropriate tools. This directly reduces the likelihood of errors, a point that recurs in discussions of prompting for reliability and AI agent design.

- Network Stability: For applications that rely heavily on real-time AI interactions or API calls, actively monitor network conditions. In environments known for unstable connections (e.g., mobile networks, shared Wi-Fi), consider implementing retry mechanisms or fallback strategies for critical operations.

- Proactive Error Logging: Implement comprehensive error logging for both AI interactions and tool executions. Regularly analyze these logs to identify recurring issues, patterns, or specific failure points. This data is invaluable for developing targeted solutions and refining your AI system over time.

- Stay Updated: Keep abreast of updates and changes in the AI models, libraries, and platforms you are using. New versions often come with bug fixes and improved functionality that can resolve existing issues. For instance, checking Gemini API troubleshooting guides can provide valuable insights.

- Incremental Development: Build and test your AI agent’s capabilities incrementally. Start with one or two tools, ensure they work flawlessly, and then gradually add more complexity. This makes it easier to isolate the source of any new errors that may arise.
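For the error logging practice in the list above, a decorator is one lightweight way to capture every tool execution. This is a sketch using Python’s standard `logging` module; the `logged_tool` decorator, the `tool_calls` logger name, and the `convert_currency` tool are hypothetical names chosen for illustration.

```python
import functools
import logging
import time
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("tool_calls")


def logged_tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Log duration on success, and arguments plus traceback on failure."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            # logger.exception records the full traceback with the message.
            logger.exception("tool=%s args=%r kwargs=%r failed", fn.__name__, args, kwargs)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("tool=%s ok in %.1f ms", fn.__name__, elapsed_ms)
        return result
    return wrapper


@logged_tool
def convert_currency(amount: float, rate: float) -> float:
    """Hypothetical tool used only to demonstrate the decorator."""
    return round(amount * rate, 2)


print(convert_currency(10.0, rate=1.1))
```

Because the decorator re-raises the exception after logging it, existing error handling keeps working. Be careful about logging arguments verbatim if tools receive credentials or personal data.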

Frequently Asked Questions

Q1: What is the most common reason for an “AI agent not calling tools”?

A1: The most common reasons are incorrect tool definitions (schema mismatches, missing parameters) and issues with parameter types or formats when the AI attempts to call the tool. Double-checking these aspects is usually the first step.

Q2: My Gemini Pro agent keeps saying “I am sorry, I cannot fulfill this request.” What should I check?

A2: This often means the AI couldn’t find a suitable tool for your request or encountered an error while trying to use one. Verify that the request aligns with your defined tools’ capabilities. Check API accessibility and ensure your tool definitions are precise. Review execution logs for specific errors. Community discussions of this error can also offer insights.

Q3: How can network issues like ERR_NETWORK_CHANGED affect my AI function calls?

A3: Network interruptions can break the connection between your AI agent and the APIs it needs to call, or between the user and the AI service itself. This can lead to timeouts, incomplete data, and ultimately, failed function calls. Ensuring a stable network is crucial for reliable AI operations.

Q4: I’m getting parameter type mismatches. What’s the best way to fix this?

A4: Carefully examine the expected data types for each parameter in your tool definitions and compare them against the data types you are providing. Ensure that you are passing JSON objects as actual objects (not strings that look like JSON) and adhering to specific formats like integers, booleans, or arrays as required by the API. Consult platform-specific documentation, such as the documentation for Make integrations, for common pitfalls.

Q5: What are some advanced techniques for preventing AI tooling errors?

A5: Advanced techniques include implementing robust error handling within your tools (e.g., try-catch blocks), adding validation layers for tool inputs and outputs, using AI model versions known for stability in function calling, and employing sophisticated prompt engineering that includes explicit fallback instructions. Proactive logging and analysis of execution traces are also key.

Q6: How do I debug unexpected tool responses?

A6: First, test the tool/API independently of the AI to ensure it behaves as expected. Log the exact output the tool returns. Then, review your AI’s tool definition or prompt to see how it’s designed to interpret that output. If the tool’s output format has changed, you’ll need to update the AI’s understanding of it. User reports on forums highlight the importance of keeping tool responses consistent.
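One way to keep responses consistent is to pass every tool result through a small normalization layer before it reaches the model. The sketch below is an assumption about how such a layer might look, not a prescribed pattern: `normalize_output` and its `ok`/`data`/`error` envelope are hypothetical.

```python
import json
from typing import Any, Dict


def normalize_output(raw: Any) -> Dict[str, Any]:
    """Coerce a tool's raw return value into one predictable shape."""
    if isinstance(raw, dict):
        return {"ok": True, "data": raw}
    if isinstance(raw, (bytes, bytearray)):
        raw = raw.decode("utf-8", errors="replace")
    if isinstance(raw, str):
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            # Plain text: keep it, but under a known key.
            return {"ok": True, "data": {"text": raw}}
        if isinstance(parsed, dict):
            return {"ok": True, "data": parsed}
        return {"ok": True, "data": {"value": parsed}}
    if raw is None:
        return {"ok": False, "error": "tool returned nothing"}
    return {"ok": True, "data": {"value": raw}}


# The same tool returning an old-style string and a new-style JSON payload.
print(normalize_output("sunny"))
print(normalize_output('{"forecast": "sunny", "temp_c": 21}'))
```

With a layer like this, a tool that switches from plain strings to JSON still produces the same outer structure, so the change shows up as a difference inside `data` rather than as a parsing failure.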

“`