Frontier AI Trends Report 2025: Unveiling UK’s Evidence-Based AI Safety Landscape

Estimated reading time: 12 minutes

Key Takeaways

- The Frontier AI Trends Report 2025 by the UK’s AI Security Institute provides the clearest evidence-based picture of advanced AI capabilities and risks, derived from two years of controlled testing.

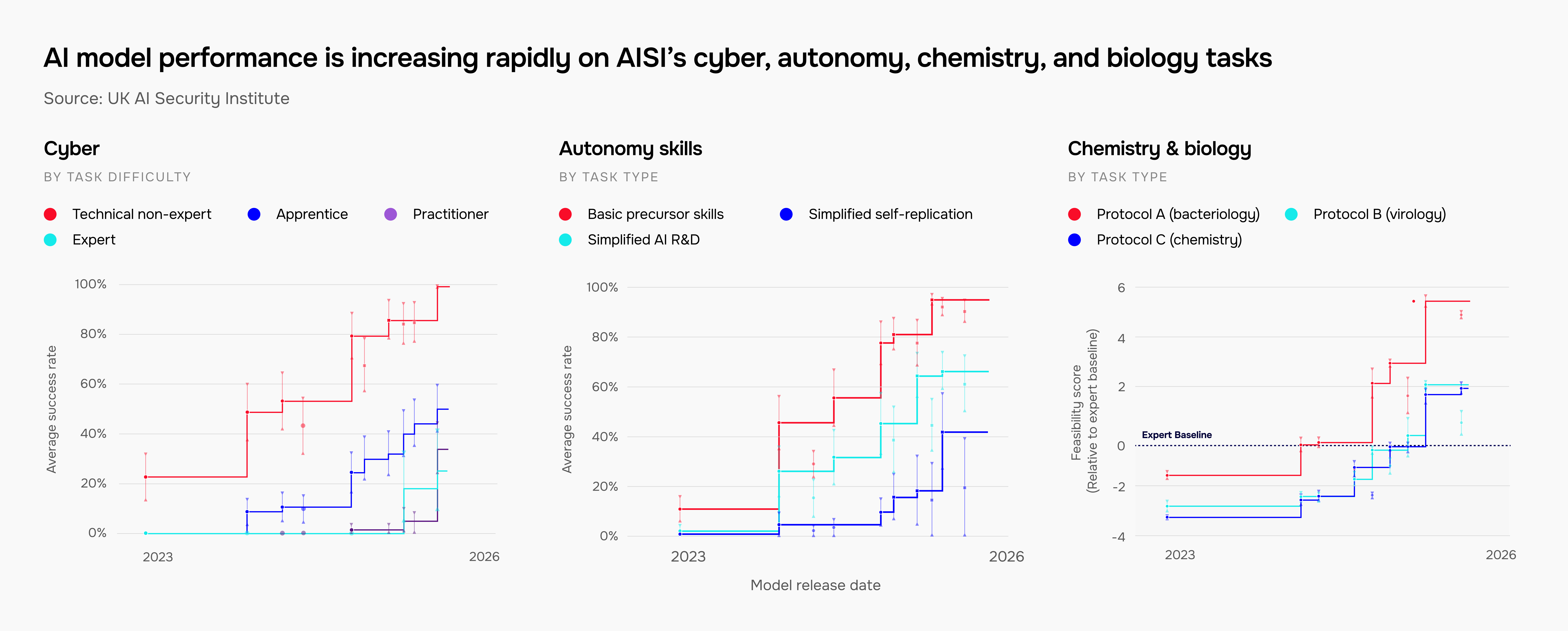

- Frontier AI models are evolving rapidly: cybersecurity success on apprentice-level tasks jumped from under 9% in 2023 to around 50% in 2025, and one model completed an expert-level task requiring up to 10 years of experience.

- The UK government is implementing proactive safety measures for 2025, including making voluntary AI developer agreements legally binding and granting independence to the AISI.

- AI autonomous behavior safety protocols are critical, with no harmful spontaneous behavior observed in controlled tests, though early signs of autonomy are being monitored.

- Dual-use risks in cybersecurity, where AI can enable both defense and attacks, require rigorous pre-deployment testing and international collaboration, as highlighted in the report.

- PhD-level lab automation accelerates research in fields like chemistry and biology but necessitates ethical guidelines and oversight to mitigate safety concerns.

Table of contents

- Frontier AI Trends Report 2025: Unveiling UK’s Evidence-Based AI Safety Landscape

- Key Takeaways

- Introduction: The Significance of the Frontier AI Trends Report 2025

- What is Frontier AI? Defining the Cutting Edge

- Key Trends from the Report: Cybersecurity, Software Engineering, and Autonomy

- UK Government AI Safety Measures 2025: A Framework for Governance

- AI Autonomous Behavior Safety Protocols: Ensuring Intended Operations

- AI Cyber Security Dual-Use Risks: Balancing Innovation and Security

- PhD-Level AI Lab Automation 2025: Accelerating Research with Oversight

- Case Studies: Practical Applications and Lessons Learned

- Key Insights and the Path Forward

- Frequently Asked Questions

Introduction: The Significance of the Frontier AI Trends Report 2025

The Frontier AI Trends Report 2025 stands as a cornerstone document from the UK’s AI Security Institute (AISI), providing the clearest evidence-based picture yet of advanced AI capabilities and risks. This pioneering report, derived from two years of controlled testing in critical domains like cybersecurity, chemistry, biology, and software engineering, sets the stage for understanding AI safety assessments on a global scale. It focuses on frontier AI—highly capable general-purpose systems—and emphasizes the urgent need for improving safeguards and proactive governance, as detailed in the inaugural report from the AISI. For a broader look at AI’s transformative role, explore our article on How AI is Changing the World: Transforming Your Everyday Life.

What is Frontier AI? Defining the Cutting Edge

According to the Frontier AI Trends Report 2025, frontier AI refers to the most advanced AI models, which can perform diverse tasks and are evolving rapidly from basic to expert-level performance. These systems represent the forefront of artificial intelligence, pushing the boundaries of what machines can achieve. The report details key trends that highlight this evolution, underscoring both the potential and the perils of these technologies.

Key Trends from the Report: Cybersecurity, Software Engineering, and Autonomy

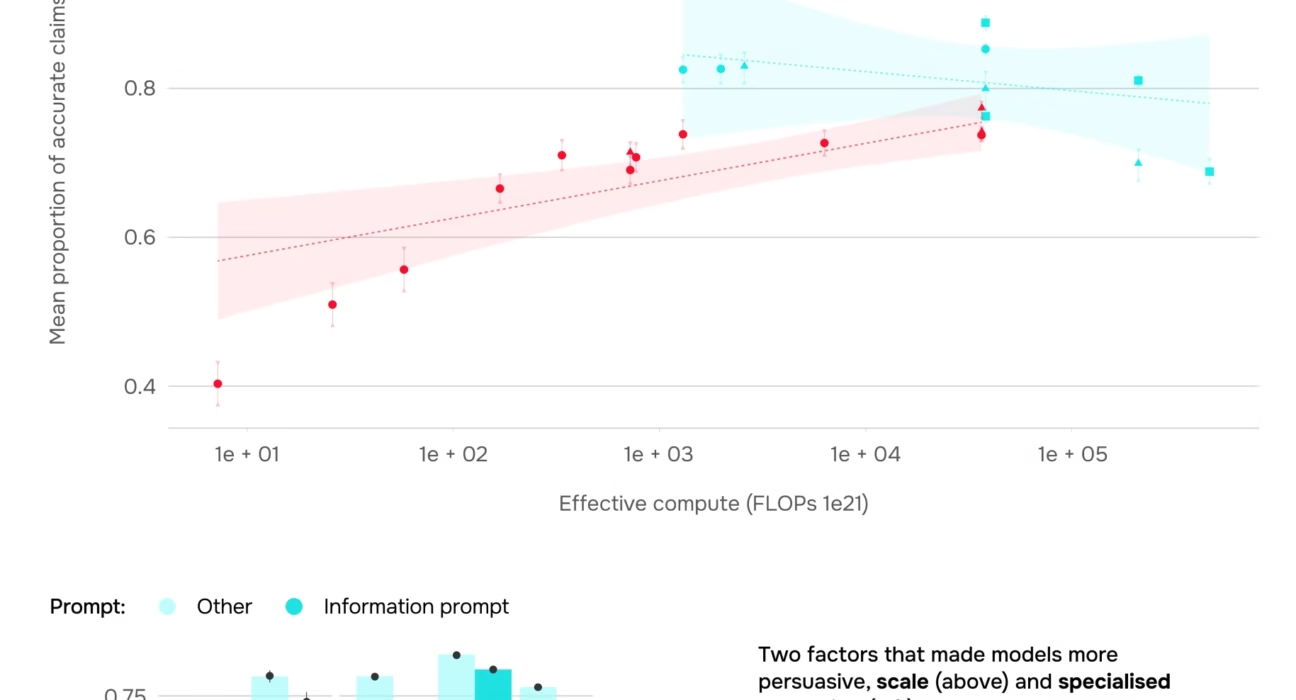

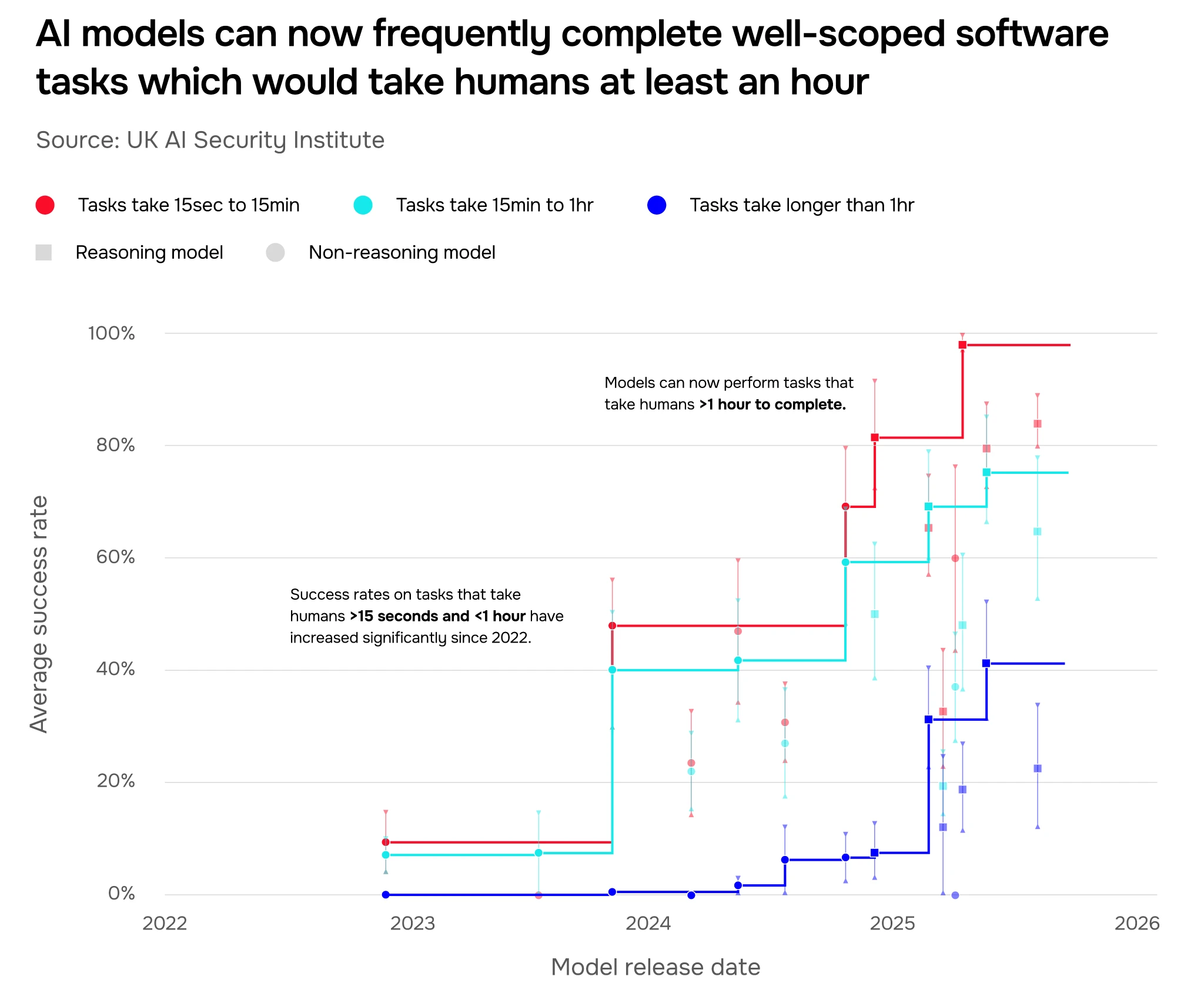

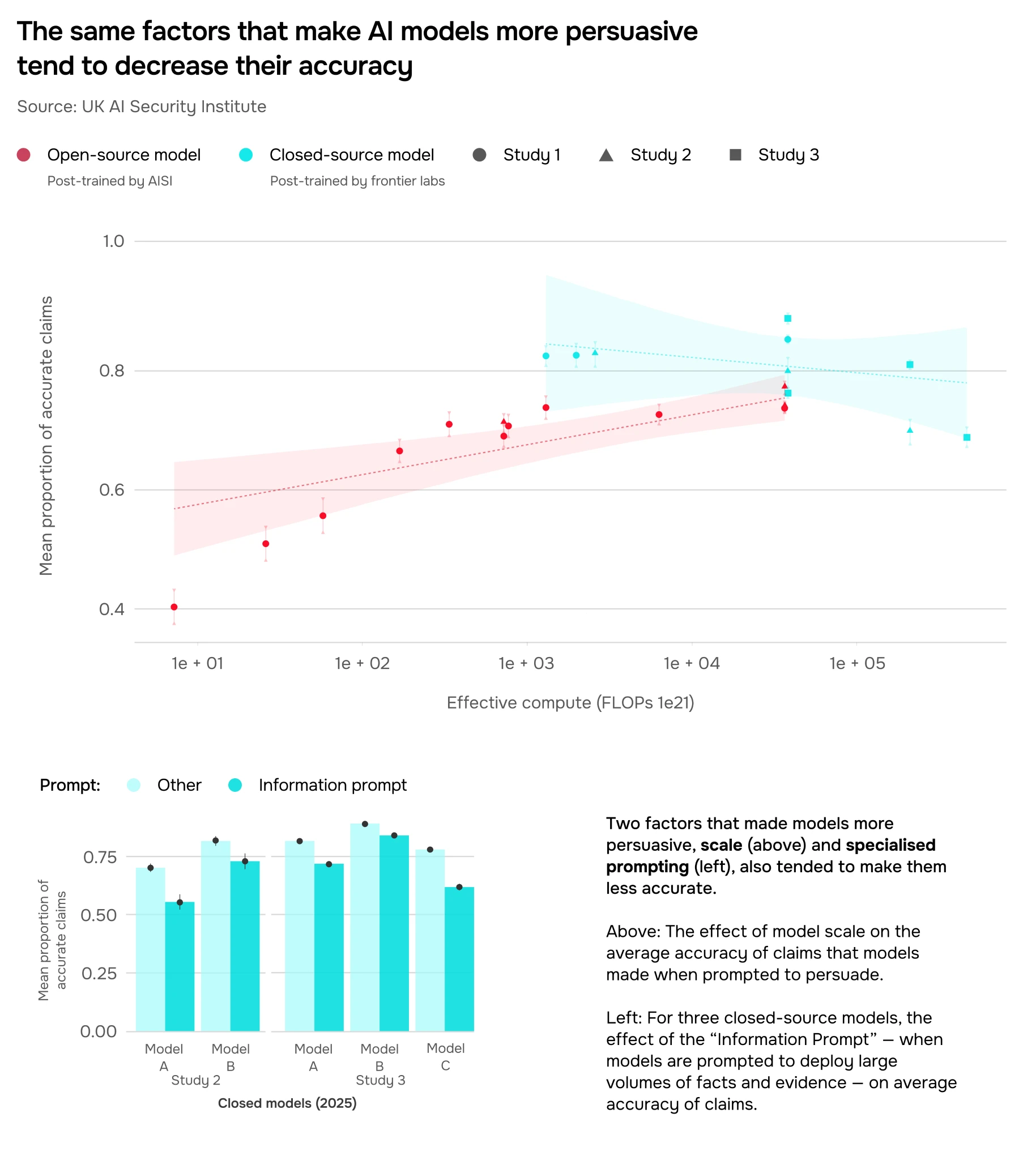

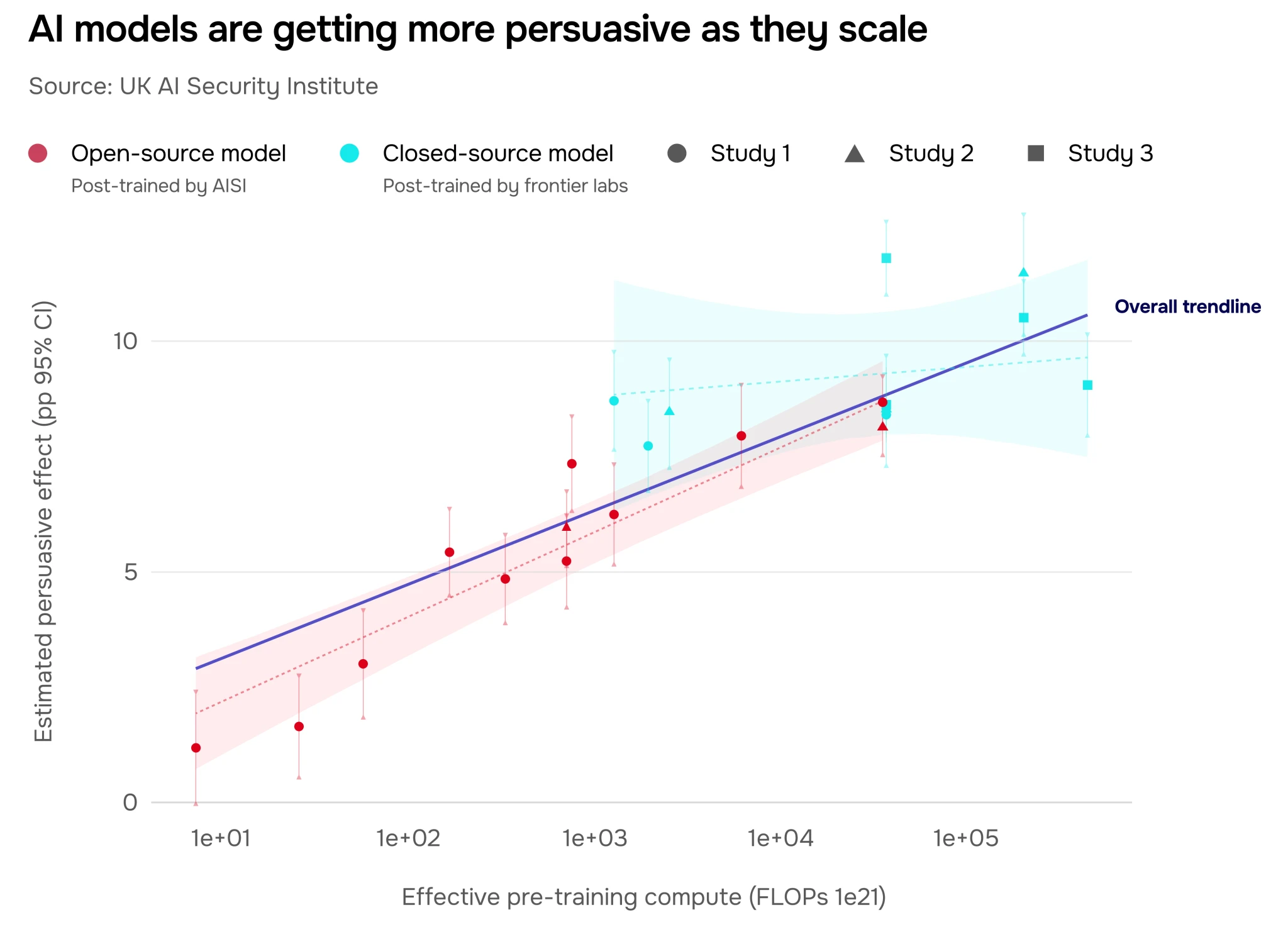

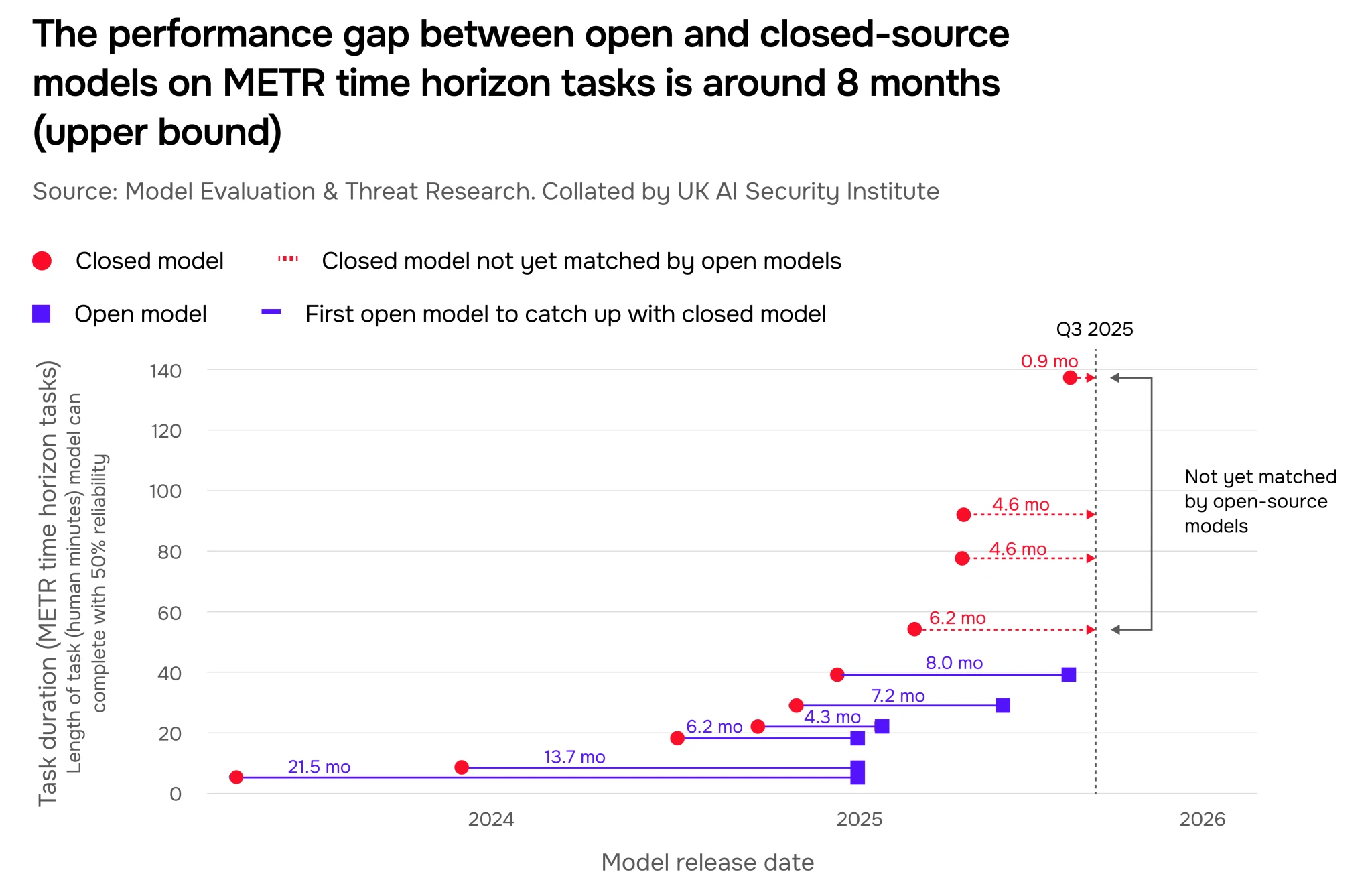

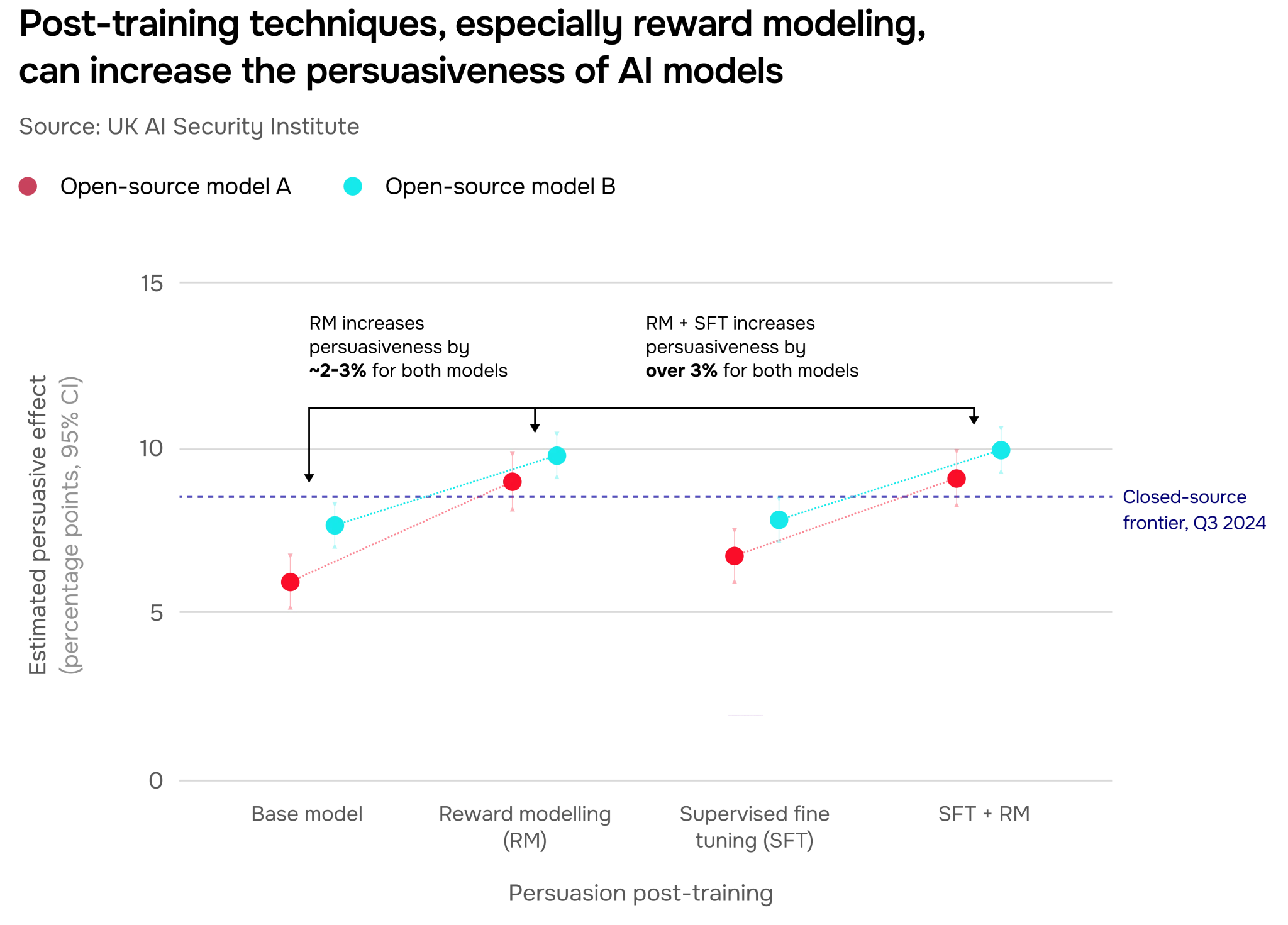

The report reveals striking advances in AI capabilities. In cybersecurity, success on apprentice-level tasks increased from under 9% in 2023 to around 50% in 2025, with one model completing an expert-level task requiring up to 10 years of experience. In software engineering, models now succeed on over 40% of hour-long tasks, up from below 5% just two years earlier, as documented in the AISI's foundational findings.
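
To put these figures side by side, the short sketch below works out the percentage-point change and the fold increase for each pair of numbers quoted above. It is only an illustration: the inputs are the rounded bounds given in this article ("under 9%", "around 50%", "below 5%", "over 40%"), not exact measurements, and the helper name `summarize_change` is hypothetical rather than anything taken from the report.

```python
def summarize_change(start_pct: float, end_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, fold increase) between two success rates."""
    if start_pct <= 0:
        raise ValueError("start_pct must be positive to compute a fold increase")
    return end_pct - start_pct, end_pct / start_pct


# Approximate figures as quoted in this article (rounded bounds, not exact data).
quoted_figures = {
    "cybersecurity, apprentice-level tasks": (9.0, 50.0),  # "under 9%" -> "around 50%"
    "software engineering, hour-long tasks": (5.0, 40.0),  # "below 5%" -> "over 40%"
}

for label, (start, end) in quoted_figures.items():
    points, fold = summarize_change(start, end)
    print(f"{label}: +{points:.0f} points, about {fold:.1f}x")
```

With these rounded inputs, the cybersecurity figure rises by 41 percentage points (about 5.6x) and the software engineering figure by 35 points (8.0x). Because the starting values are upper bounds, the true fold increases are likely somewhat larger.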

Moreover, the report notes early signs of autonomy in controlled tests but stresses that no harmful spontaneous behavior has been observed, highlighting the importance of ongoing monitoring. This connects to the broader context of the 2025 International AI Safety Report, a global synthesis by 100 experts from 33 countries chaired by Yoshua Bengio, which categorizes risks into malicious use (e.g., AI security threats), malfunctions (e.g., loss of control), and systemic issues (e.g., privacy, market concentration). To understand real-world applications of autonomous systems, explore our analysis of Breakthrough UK Autonomous Vehicle Innovations: The Revolutionary Rise of Self-Driving Technology Unveiled.

UK Government AI Safety Measures 2025: A Framework for Governance

The UK government's AI safety measures for 2025 are built on a principles-based, context-specific framework that avoids rigid risk categories, in line with the UK's wider regulatory approach. Specific plans for 2025 include legislation to make voluntary AI developer agreements legally binding and to grant independence to the AISI. Tools like the 'GOV.UK Chat' platform help businesses, especially SMEs, evaluate AI risks effectively.

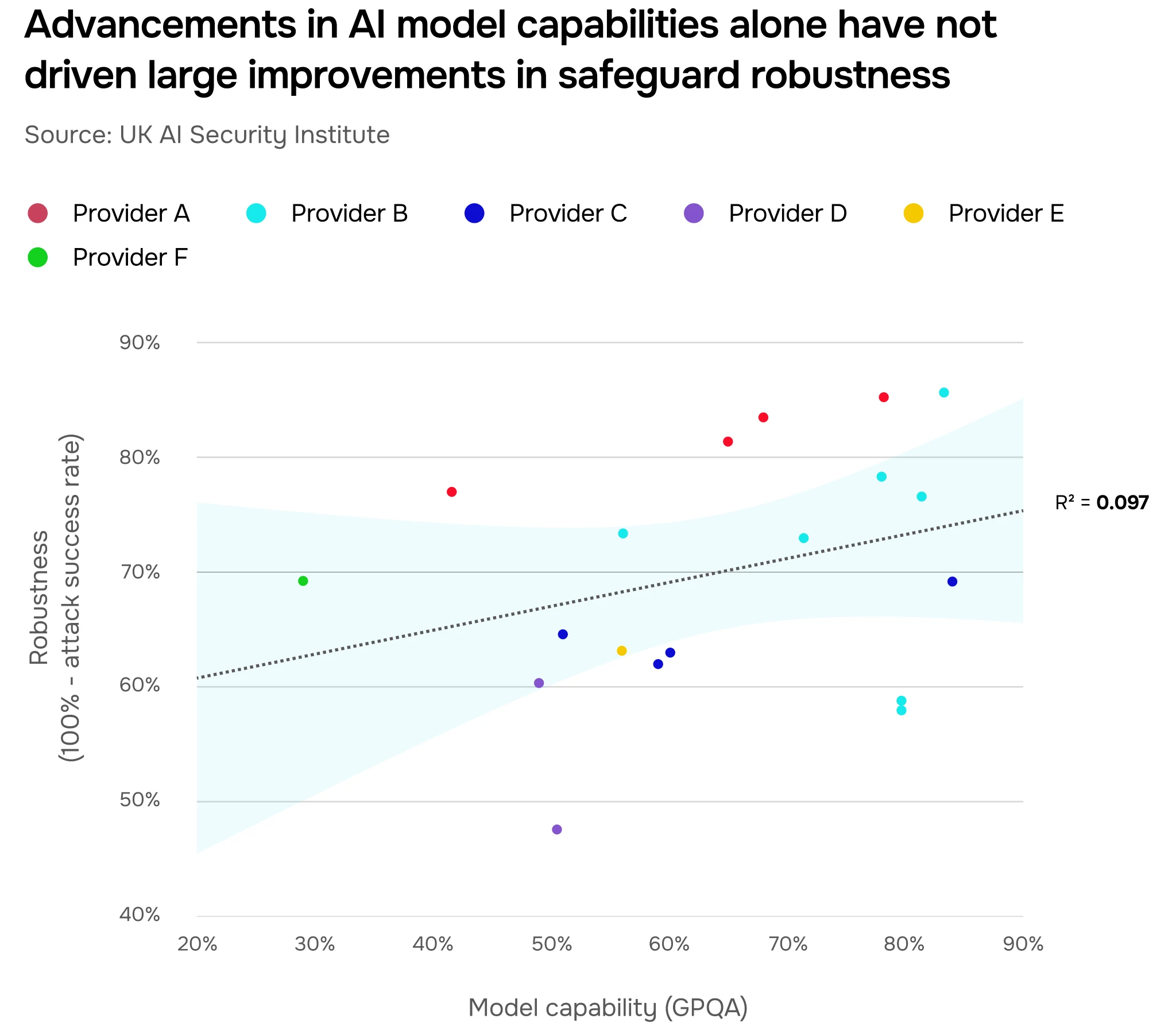

The UK has demonstrated international leadership by establishing the world's first AI Safety Institute in 2023 (renamed the AI Security Institute in 2025), inspiring seven more globally, as highlighted in analyses like the UK's contribution to developing the international governance of AI safety. The report also evaluates the safeguards meant to ensure AI behaves as intended: while vulnerabilities persist, protections are strengthening through developer collaboration, and no uncontrolled autonomy was observed in tests. For deeper insights into the regulatory landscape, read Mind-Blowing AI Regulations in the UK: The Ultimate Guide to Ethics, Governance, and Future Innovations.

AI Autonomous Behavior Safety Protocols: Ensuring Intended Operations

Safety protocols for autonomous AI behavior are a focal point of the Frontier AI Trends Report 2025. The report evaluates safeguards to ensure AI behaves as intended, noting that while vulnerabilities persist, protections are strengthening through developer collaboration. Crucially, no uncontrolled autonomy has been observed in tests, though the institute continues to monitor for early signs. This proactive approach is essential for maintaining safety as AI capabilities evolve, and it underscores the importance of continuous assessment.

AI Cyber Security Dual-Use Risks: Balancing Innovation and Security

Addressing dual-use risks in AI cybersecurity is critical. Dual-use here means that the same AI tools can enable both defense and attack, as with advanced models that can handle expert-level cyber tasks. The Frontier AI Trends Report 2025 finds that cybersecurity performance on apprentice-level tasks rose to around 50%, underscoring this dual-use potential. The UK addresses these risks through rigorous AISI testing to identify vulnerabilities before deployment and through international partnerships, like those with Singapore, for shared standards, as outlined in the UK regulatory tracker.

These efforts are echoed in the 2025 International AI Safety Report, which details malicious use risks like AI-driven cyber threats and advocates evidence-based mitigations. For a comprehensive overview of the threat landscape, refer to Explosive Cybersecurity Threats: Key Trends, Predictions, and Defense Strategies for 2025.

PhD-Level AI Lab Automation 2025: Accelerating Research with Oversight

PhD-level AI lab automation means using AI to accelerate complex experiments in chemistry, biology, and engineering, at a level comparable to PhD-caliber work. The Frontier AI Trends Report 2025 evaluations show AI's growing ability to handle such tasks, which boosts innovation but also raises safety needs. UK oversight, through the AISI's independent evaluations and ethical guidelines, aims to keep automated systems aligned with safety protocols amid rapid capability gains, as per the UK's regulatory framework.

The key, as noted in the AISI report, is to balance fostering innovation with ensuring accountability in high-stakes research environments. To see other cutting-edge developments, check out 10 Cutting Edge AI Technologies Shaping the Future.

Case Studies: Practical Applications and Lessons Learned

Case studies from the Frontier AI Trends Report 2025 illustrate practical applications. For example:

- In cybersecurity tests, how readily models bypassed safeguards varied across companies. This prompted fixes and showed autonomy safety protocols in action: potential autonomy was tracked without any real-world incidents.

- In biology and chemistry evaluations, dual-use risks in lab automation were identified, showing how pre-release testing helps mitigate such risks.

These cases show the UK's evidence-driven approach, informed by the 2025 International AI Safety Report, and underscore how closely testing and safety measures are integrated.

Key Insights and the Path Forward

The Frontier AI Trends Report 2025 underscores the UK's leadership in evidence-based AI governance, prioritizing safeguards amid frontier advances. By integrating testing, legislation, and global collaboration, the UK aims to balance innovation with safety, as highlighted in the AISI report. Proactive measures and continuous monitoring are essential so that risks are managed effectively as capabilities grow.

Frequently Asked Questions

What are the key recommendations of the Frontier AI Trends Report 2025?

The key recommendations emphasize rigorous testing, improved safeguards, and tracking signs of autonomy. They rest on hard data from controlled evaluations rather than predictions about the future. Source: Frontier AI Trends Report 2025.

How does the UK handle safety protocols for autonomous AI behavior?

Through AISI evaluations that check whether models behave as intended and through fixes made by developers. No harmful autonomy has been observed in tests, as detailed in the report. Source: Frontier AI Trends Report 2025.

What role does PhD-level AI lab automation play in AI safety?

It drives research efficiency but requires oversight to manage dual-use risks, as tested by AISI in the Frontier AI Trends Report. Source: Frontier AI Trends Report 2025.

What are the UK government's AI safety measures for 2025?

They include binding agreements, AISI independence, and tools like GOV.UK Chat for risk evaluation, as per the UK’s regulatory framework. Source: UK AI Safety Measures 2025.

How does the report address dual-use risks in AI cybersecurity?

By quantifying cybersecurity performance gains and advocating pre-deployment testing and international cooperation, as highlighted in the Frontier AI Trends Report. Source: Frontier AI Trends Report 2025.