UK AI Security Trends 2024: Key Government Strategies and Policies for AI Safety

Estimated reading time: 15 minutes

Key Takeaways

- The UK’s approach to AI security in 2024 is defined by a pro-innovation, sector-based regulatory framework that is shifting from voluntary guidance to binding obligations for the most powerful models.

- Central to the strategy is frontier AI national testing, led by the new AI Safety Institute (AISI), which evaluates dangerous capabilities and informs government safeguards.

- Collaboration is non-negotiable; the government’s AI security collaboration strategies involve deep partnerships with AI labs, international bodies, and the cybersecurity community.

- A structured government AI risk assessment process is being implemented, moving from anticipation and prevention to preparation and response for national security threats.

- The evolving AI safeguarding policy 2024 sets expectations for transparency, accountability, security, and fairness, particularly for high-risk and public-sector AI applications.

Table of contents

- UK AI Security Trends 2024: Key Government Strategies and Policies for AI Safety

- Key Takeaways

- Introduction: The UK’s Evolving AI Security Landscape

- What Are the UK AI Security Trends 2024?

- Frontier AI National Testing: The UK’s Proactive Defence

- AI Security Collaboration Strategies: Strength in Unity

- Government AI Risk Assessment: A Structured Approach

- AI Safeguarding Policy 2024: From Principles to Practice

- Case Studies: UK AI Security in Action

- Challenges and Future Outlook for UK AI Security

- Frequently Asked Questions

Introduction: The UK’s Evolving AI Security Landscape

The rapid rollout of generative and frontier AI across the United Kingdom is not just an economic opportunity; it is a profound shift that brings significant risks to critical infrastructure, data privacy, and national security. In this context, AI security has become paramount. It can be defined as protecting AI systems from misuse and malicious cyber threats, and managing their potentially dangerous capabilities, so that they are deployed safely and responsibly.

Against this backdrop, the UK AI security trends of 2024 describe the government's comprehensive, multi-layered response. That response centres on a pro-innovation regulatory framework, the newly established AI Safety Institute (AISI), plans for AI legislation signalled in the July 2024 King's Speech, and a clear strategic shift from voluntary to binding rules for the most powerful systems. This agenda, also detailed in analyses from industry experts and global regulatory trackers, rests on three core pillars: rigorous government AI risk assessment, a forward-looking AI safeguarding policy 2024, and pioneering frontier AI national testing.

What Are the UK AI Security Trends 2024?

The UK’s strategy, as outlined in the Artificial Intelligence Sector Study 2024, is characterised by a “pro-innovation, sector-based” model of regulation. Existing regulators (such as the ICO, CMA, and Ofcom) oversee AI within their own domains, while a more structured regime is emerging for high-risk uses. The trend is a clear move away from purely voluntary guidance, as noted in reviews of UK AI regulation, towards binding obligations for developers of the most powerful frontier models, with legislation expected in 2025.

These trends are intrinsically linked to the priorities of the AI safeguarding policy 2024, which emphasises transparency, accountability, and robust data protection. Furthermore, a defining feature of the current landscape is the growing role of frontier AI national testing. Led by the AISI, this initiative represents a hands-on government effort to understand and mitigate risks at the cutting edge of AI capability, as evidenced by its inaugural trends report and welcomed by industry groups.

Frontier AI National Testing: The UK’s Proactive Defence

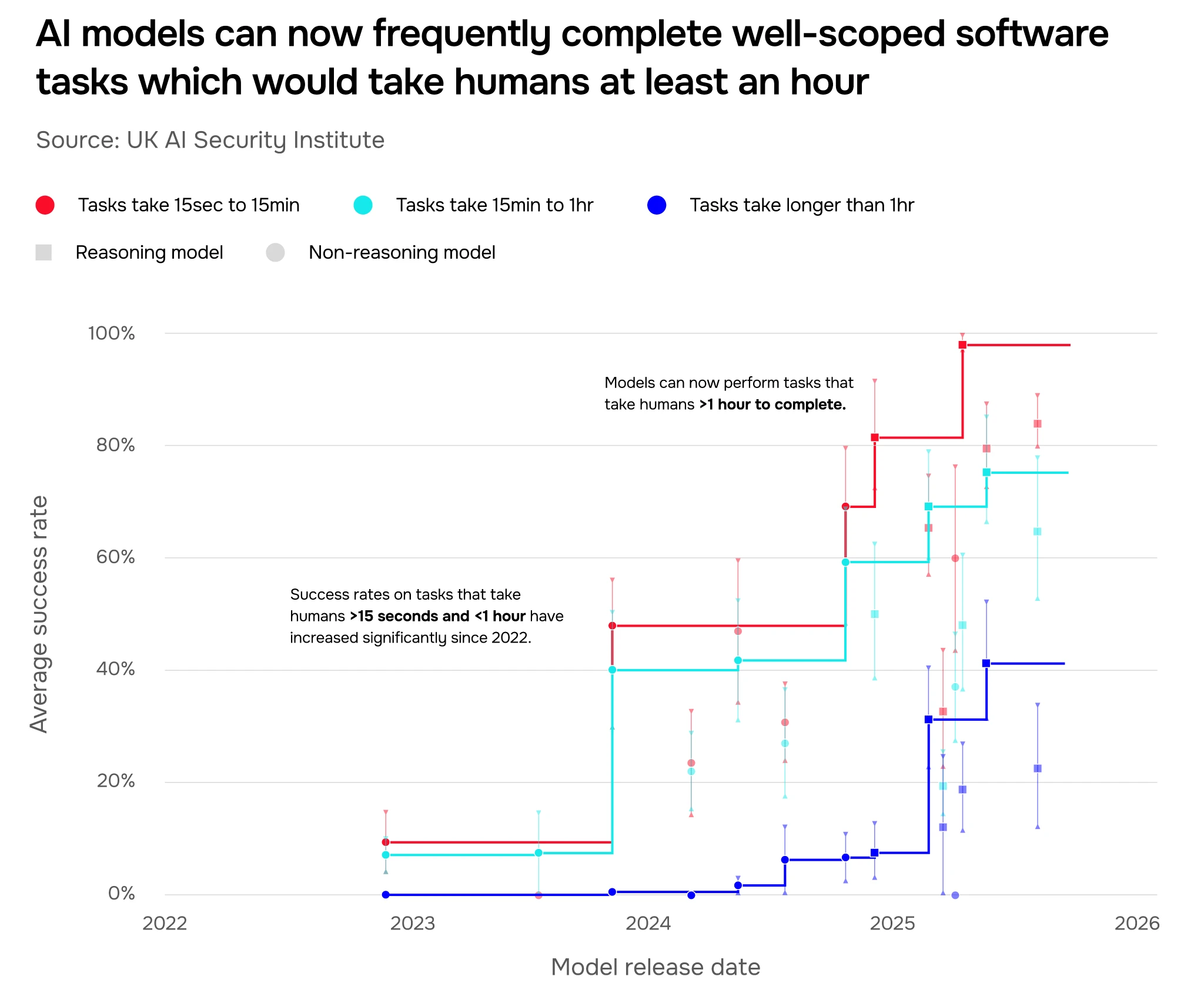

Frontier AI national testing is the UK government’s centralised technical evaluation of the most advanced and capable AI models. Its purpose is to probe for dangerous capabilities, such as assisting cyber-attacks, enabling chemical or biological misuse, or planning and acting autonomously, and to assess reliability and misuse risks. This process is not academic; it is a critical component of national security preparedness.

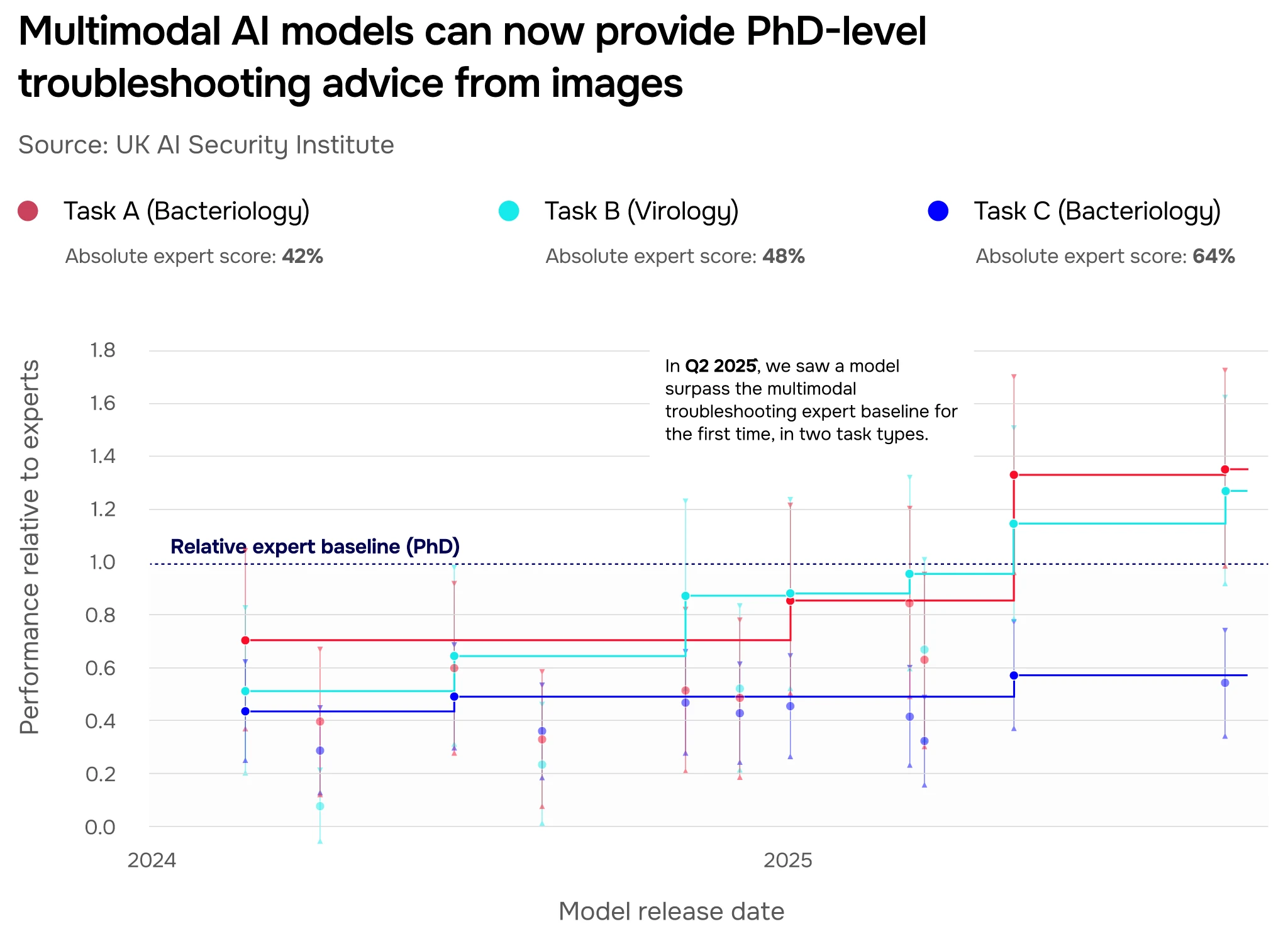

The AI Safety Institute (AISI) is at the helm of this effort. Its mandate includes gaining pre-deployment access to frontier models from leading labs, conducting evaluations, and publishing findings such as the Frontier AI Trends Report. As highlighted by techUK, the AISI’s work focuses squarely on the security implications of these technologies. This testing function is the practical engine of government AI risk assessment: its results directly inform risk thresholds, the design of necessary safeguards, and the development of future regulation, giving the state a concrete, evidence-based method of oversight.

AI Security Collaboration Strategies: Strength in Unity

A core tenet of the UK’s approach is that no single entity—government, private lab, or academic institution—can manage the systemic risks of frontier AI alone. Therefore, sophisticated AI security collaboration strategies are essential, a point strongly made in analyses from policy researchers.

The UK model fosters collaboration through several key channels:

- Public-Private Partnerships with AI Labs: The AISI has established partnerships with major AI companies to secure access to their frontier models for evaluation. This enables joint testing and the co-development of safety standards, as seen in its collaborative work following the AI Safety Summit.

- International Engagement: The UK is actively shaping global norms through the AI Safety Summit process and engagement with standards bodies like ISO and IEC. This aligns with goals noted in the Sector Study to promote interoperability and shared security principles.

- Cross-Sector Security Ties: Recognising AI’s role in cybersecurity, the government is fostering links between the AISI, the National Cyber Security Centre (NCSC), and private cybersecurity firms. This is crucial for developing AI threat intelligence and securing critical national infrastructure, a priority explored in depth by the Tony Blair Institute for Global Change.

These AI security collaboration strategies translate into actionable outcomes: joint research programmes, shared testing methodologies, and co-developed security guidance that strengthens the resilience of the entire ecosystem.

Government AI Risk Assessment: A Structured Approach

The UK is formalising its government AI risk assessment into a staged, continuous process. This framework, advocated by groups such as the Centre for Long-Term Resilience, moves beyond one-off checks to an enduring governance cycle:

- Anticipation: This involves horizon-scanning for emerging AI capabilities, intelligence gathering, and scenario analysis for national security threats. Resources like the Central Digital and Data Office (CDDO) guidance support this forward-looking phase.

- Prevention: Here, the focus shifts to incentivising and mandating robust risk governance by AI developers. This includes securing AI development infrastructure and enforcing strong data practices, as emphasised in cross-government AI security guidance.

- Preparation: The government and industry jointly develop incident response plans and conduct stress-testing exercises to ensure readiness for AI-related failures or attacks.

- Response: Should a high-risk incident occur, the government envisions using a toolkit of response levers, which could include mandating model changes, imposing access restrictions, or other regulatory interventions.

This structured assessment is supported by practical tools such as risk matrices, detailed guidance from sector regulators, and the use of regulatory sandboxes to test innovative AI applications in controlled environments.
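The article cites risk matrices as a practical tool but does not say how they score risks. The sketch below assumes the common convention of multiplying likelihood by impact, each on a 1 to 5 scale. The scale labels, the rating thresholds, and the example risks are hypothetical illustrations, not taken from UK government or regulator guidance.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scales; the article does not specify how UK risk matrices score risks.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost_certain": 5}
IMPACT = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}

# Hypothetical rating bands as (inclusive lower bound, label), checked highest first.
BANDS = [(15, "high"), (8, "medium"), (1, "low")]


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: str
    impact: str

    def score(self) -> int:
        """Risk-matrix score: likelihood multiplied by impact (range 1-25)."""
        return LIKELIHOOD[self.likelihood] * IMPACT[self.impact]

    def rating(self) -> str:
        """Return the label of the first band whose threshold the score meets."""
        s = self.score()
        for threshold, label in BANDS:
            if s >= threshold:
                return label
        raise ValueError(f"score {s} is below every band")


def rank(risks: list[Risk]) -> list[tuple[str, int, str]]:
    """Return (name, score, rating) tuples, highest score first."""
    return sorted(
        ((r.name, r.score(), r.rating()) for r in risks),
        key=lambda t: t[1],
        reverse=True,
    )


if __name__ == "__main__":
    # Hypothetical example register for a public-sector AI deployment.
    register = [
        Risk("model weights exfiltrated", "possible", "severe"),
        Risk("biased eligibility decision", "likely", "moderate"),
        Risk("chatbot gives outdated guidance", "likely", "minor"),
    ]
    for name, score, rating in rank(register):
        print(f"{rating:<6} {score:>2}  {name}")
```

Running the script prints the example register sorted by score, with the weights-exfiltration risk (score 15) rated "high" at the top. Real frameworks often weight impact more heavily than likelihood or use asymmetric bands, so the thresholds here are best treated as placeholders to be replaced by a regulator's or organisation's own criteria.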

AI Safeguarding Policy 2024: From Principles to Practice

The UK’s AI safeguarding policy 2024 translates high-level principles into concrete expectations for organisations developing and deploying AI. The key objectives are:

- Transparency & Explainability: Particularly for high-risk or public-facing AI, there must be clarity on how systems reach decisions. This is a recurring theme in both the Sector Study and regulator expectations.

- Accountability & Governance: Clear lines of responsibility for AI outcomes must be established, with human oversight maintained for critical decisions.

- Security & Robustness: AI systems must be resilient against adversarial attacks and data breaches, and must fail safely. This is a core pillar of the security-focused guidance from the CDDO and NCSC.

- Fairness & Bias Mitigation: AI must be developed and audited to avoid discriminatory outcomes, aligning with the UK’s existing equality and data protection duties.

This represents a significant evolution from the UK’s earlier light-touch approach. The policy now has an explicit focus on frontier AI and is moving towards binding requirements, as signalled by plans for an AI bill. Enforcement is expected to be bolstered through detailed regulator guidance and, for frontier models, potentially mandatory testing and reporting obligations to the AISI.

Case Studies: UK AI Security in Action

Case Study 1: Frontier AI Testing in Practice

The AISI’s Frontier AI Trends Report provides a tangible example of frontier AI national testing. The report details evaluations where advanced models demonstrated capabilities, such as sophisticated troubleshooting, that approached or surpassed human-level performance in specific domains. The insights from this testing directly feed into the government’s understanding of where to apply specific safeguards and control measures.

Case Study 2: Cross-Sector Collaboration for Standards

Initiatives stemming from the UK-hosted AI Safety Summit exemplify AI security collaboration strategies. These gatherings of government, industry, and academia have produced frameworks for shared safety standards and protocols for threat information sharing, creating a foundation for global cooperative security as envisioned in the UK’s international strategy.

Case Study 3: Public-Sector AI Deployment

When AI is deployed in public digital services or critical infrastructure, the government AI risk assessment framework is applied. This involves rigorous pre-deployment checks, implementing “human-in-the-loop” controls for sensitive decisions, and continuous monitoring, ensuring alignment with the broader AI safeguarding policy 2024. This practical application is guided by resources from the CDDO and strategies for sovereign AI infrastructure.

Challenges and Future Outlook for UK AI Security

The path forward for UK AI security is not without significant challenges. These include:

- Keeping Pace with Technology: The speed of advancement in frontier models, as tracked in AISI reports, poses a constant challenge for regulators and risk assessors.

- Balancing Innovation and Regulation: The UK aims to foster innovation while managing risk, a delicate balance that distinguishes its approach from the more compliance-focused EU AI Act, as noted in comparative analyses.

- Ensuring Consistency: Achieving uniform security standards across different economic sectors and for organisations of all sizes remains a complex undertaking.

- International Coordination: While the UK is a leader, effective security ultimately depends on global cooperation to prevent regulatory arbitrage and ensure widespread safety standards.

The outlook points towards several key developments: the introduction of an AI bill with binding obligations for frontier model developers; the expansion of the AISI’s capabilities and its international partnerships; and the deeper integration of government AI risk assessment into the security planning for critical national infrastructure.

Frequently Asked Questions

What is the AI Safety Institute (AISI)?

The AISI is a UK government body established to conduct and advance the science of AI safety. Its core functions include performing frontier AI national testing, researching AI safety evaluation methods, and facilitating national and international collaboration on AI security standards.

How will the UK AI Bill change the regulatory landscape?

The proposed AI Bill, expected in 2025, would formalise the shift from voluntary to mandatory measures for the most powerful AI models. It is likely to place legal duties on frontier AI developers, potentially including mandatory risk assessments, reporting to the AISI, and adherence to specific safety and transparency requirements outlined in the AI safeguarding policy 2024.

What should a UK business do to align with these trends?

Organisations should proactively monitor guidance from the AISI and relevant sector regulators. They should conduct internal audits of their AI use against the principles of the AI safeguarding policy 2024 (transparency, accountability, security, fairness) and review their risk management practices in line with the structured government AI risk assessment approach.

How does the UK approach differ from the EU AI Act?

The UK favours a flexible, sector-led approach guided by existing regulators, while the EU AI Act is a horizontal, cross-cutting piece of legislation with well-defined risk categories and compliance requirements. The UK strategy, as analysed by White & Case, emphasises supporting innovation and is particularly focused on the risks from frontier AI, whereas the EU Act takes a broader, more product-safety-oriented view.