Apple Vision Pro Latest News and Updates: Neural Engine Breakthroughs, Vision Pro 2 Rumors, and the Future of Smart Glasses


Key Takeaways

- The October 2025 refresh brings a powerful M5 chip with a 16-core Neural Engine, elevating performance and AI capabilities.

- Apple Neural Engine technology is the secret sauce, enabling real-time eye/hand tracking, spatial media creation, and on-device AI.

- The headset’s latest neural interface advancements mean intuitive, controller-free interaction using your eyes, hands, and voice.

- Vision Pro 2 release date rumors remain speculation, since Apple has announced nothing official; the current M5 model is Apple’s definitive spatial computing statement.

- Vision Pro is a blueprint for the future of smart glasses with neural engine capabilities, blending the digital and physical worlds.

Table of contents

- Apple Vision Pro Latest News and Updates

- Key Takeaways

- Quick Overview: What Apple Vision Pro Is and Why the M5 Refresh Matters

- Hardware at a Glance: Displays, Chips, Sensors, and Battery

- Apple Neural Engine Technology: What It Is and How It Powers Vision Pro

- Latest Advancements in Neural Interface Inside Vision Pro

- Vision Pro 2 Release Date Rumors – What We Know (and What We Don’t)

- Smart Glasses with Neural Engine Capabilities: Why Vision Pro Matters for the Whole Category

- Competitors vs Apple Vision Pro: How Neural Engine and Displays Set It Apart

- Future Prospects: What to Expect from the Next Generation

- Frequently Asked Questions

If you’re searching for the latest Apple Vision Pro news and updates, you’ve landed in the right place. The landscape shifted notably in October 2025, when Apple unveiled a significant upgrade to its flagship mixed-reality headset, now powered by the M5 chip. This isn’t just a spec bump; it’s a step forward in how we interact with digital content, thanks to deeper integration of Apple Neural Engine technology.

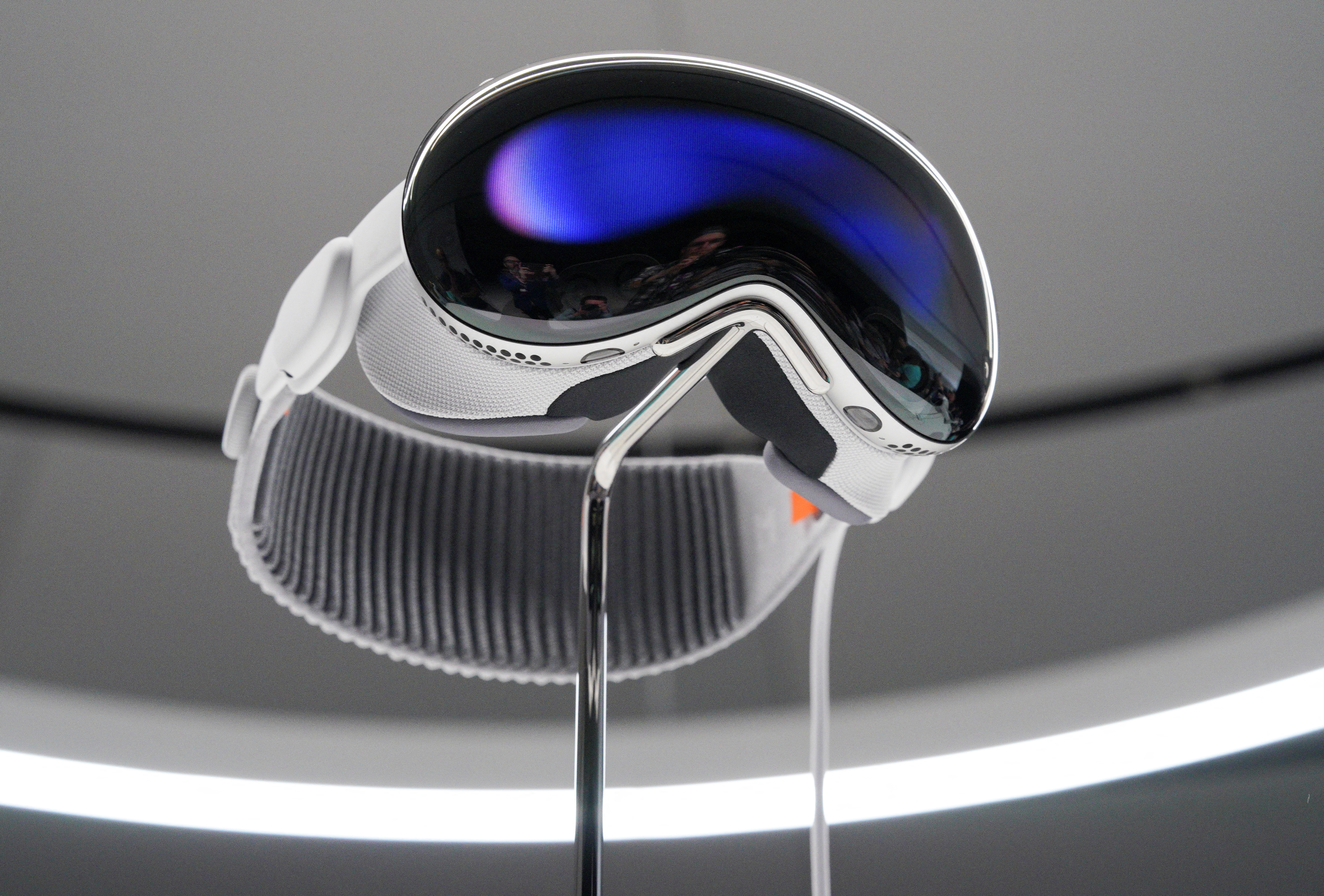

The Apple Vision Pro is designed to overlay digital content onto your physical world and to transport you to fully immersive virtual environments. The M5 upgrade brings tangible improvements in display quality, system responsiveness, and battery life, addressing some early critiques while doubling down on Apple’s spatial computing vision. Reviews acknowledge it as a “flawed wonder,” a powerful glimpse into a future that’s still being refined.

Our goal here is to cut through the noise: deliver clear updates on the M5 upgrade, demystify the headset’s neural interface, separate fact from fiction on Vision Pro 2 release date rumors, and explore how this technology paves the way for true smart glasses with neural engine capabilities. We’ll break down the tech in a way that’s informative without being overwhelming, giving you a comprehensive look at where Apple’s vision stands today.

Quick Overview: What Apple Vision Pro Is and Why the M5 Refresh Matters

At its core, the Apple Vision Pro is a high-end mixed-reality headset. It masterfully blends two modes: Augmented Reality (AR), where digital objects and interfaces appear to exist in your room, and Virtual Reality (VR), where you’re fully immersed in a digital environment. This is the essence of “spatial computing”—the idea that apps and content live in the 3D space around you, not confined to a flat screen.

The headline Apple Vision Pro news is the October 2025 refresh. Rather than launching a “Vision Pro 2,” Apple enhanced the existing model with its next-generation silicon. The headset now runs on the M5 chip, a move that brings several immediate, user-facing benefits:

- Snappier Performance: Everyday apps, complex 3D environments, and multitasking feel more responsive with less lag.

- More Immersive Visuals: Upgraded displays and graphics capabilities make virtual worlds and AR overlays more convincing and detailed.

- Improved Comfort & Battery: Alongside the new chip, an updated Dual Knit Band offers better weight distribution, and efficiency gains support up to 2.5 hours of general use on a charge.

Critically, this upgrade houses a new 16-core Apple Neural Engine within the M5. This dedicated AI processor is the key to unlocking more intuitive and powerful experiences, accelerating everything from environment understanding to real-time persona animation. For a deeper dive into how AI integrates into this experience, check out our detailed review of Apple Vision Pro AI Integration.

Hardware at a Glance: Displays, Chips, Sensors, and Battery

To understand why Vision Pro stands out, let’s look under the hood. This hardware snapshot reveals the foundation of its capabilities and where the Apple Neural Engine fits in.

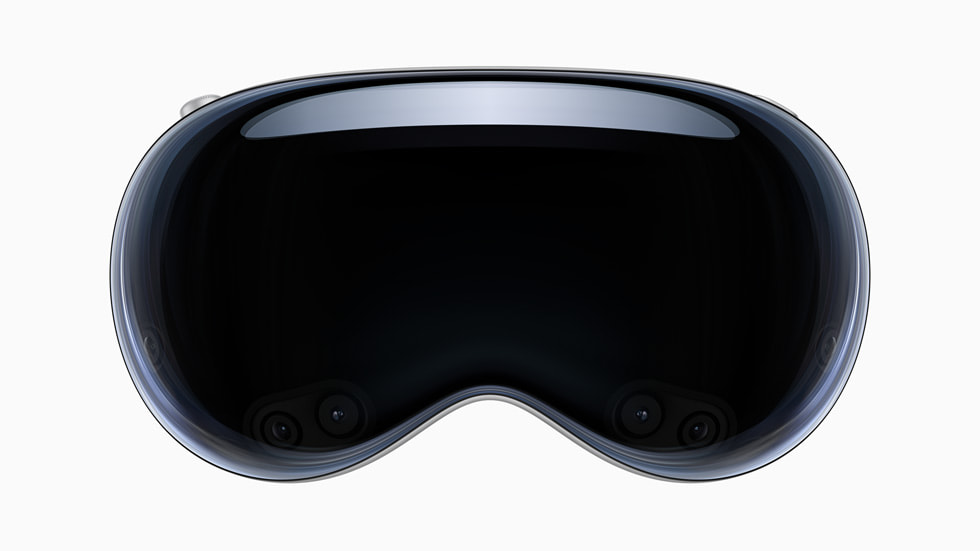

Displays: Vision Pro uses dual micro-OLED displays with a combined 23 million pixels, which works out to more pixels for each eye than a 4K TV. With refresh rates up to 120Hz, motion appears smooth, with less blur and sharper text during movement.
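The pixel comparison can be checked with quick arithmetic. The sketch below assumes the 23 million figure is split evenly across the two displays and that “4K TV” means the common 3840 x 2160 UHD resolution; both are assumptions made for illustration, not published per-eye specifications.

```python
"""Back-of-the-envelope check of the per-eye pixel comparison."""

TOTAL_PIXELS = 23_000_000        # combined figure quoted in the article
UHD_4K_PIXELS = 3840 * 2160      # assumed meaning of "4K TV"


def pixels_per_eye(total_pixels: int, eyes: int = 2) -> float:
    """Split a combined pixel count evenly across the displays (assumption)."""
    return total_pixels / eyes


def ratio_to_4k(total_pixels: int) -> float:
    """Per-eye pixel count expressed as a multiple of one 4K UHD panel."""
    return pixels_per_eye(total_pixels) / UHD_4K_PIXELS


if __name__ == "__main__":
    print(f"Per-eye pixels: {pixels_per_eye(TOTAL_PIXELS):,.0f}")
    print(f"4K UHD pixels:  {UHD_4K_PIXELS:,}")
    print(f"Ratio:          {ratio_to_4k(TOTAL_PIXELS):.2f}x")
```

Under these assumptions each eye gets about 11.5 million pixels against roughly 8.3 million for a 4K panel, or a little under 1.4 times as many.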

Processing Chips (The Brains):

- The M5 Chip: This system-on-a-chip includes a 10-core CPU (4 performance, 6 efficiency cores), a 10-core GPU with hardware-accelerated ray tracing for realistic lighting, and the pivotal 16-core Apple Neural Engine. According to Apple, this Neural Engine enables AI tasks to run up to 50% faster system-wide versus the prior generation.

- The R1 Chip: This dedicated sensor processor is a marvel of real-time data handling. It processes input from 12 cameras, 5 sensors, and 6 microphones to achieve a photon-to-photon latency of just 12 milliseconds. This near-instantaneous processing is why digital objects feel locked in place in the real world.

Cameras & Sensors (The Senses):

- Stereoscopic 3D Cameras: Capture depth for immersive spatial photos and videos.

- LiDAR Scanner: Measures distance with light to map your room’s geometry for precise object placement.

- Eye-Tracking Cameras: Enable gaze-based selection and foveated rendering (saving power by rendering full detail only where you look).

- Optic ID: A secure biometric authentication system that uses iris recognition.
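Foveated rendering, mentioned in the eye-tracking item above, can be illustrated with a small sketch. The function names, angle thresholds, and minimum scale here are hypothetical values chosen for illustration; they are not Apple’s parameters, and this is not how visionOS is documented to implement it.

```python
"""Illustrative foveated-rendering sketch (all thresholds are assumptions)."""


def shading_scale(angle_from_gaze_deg: float,
                  fovea_deg: float = 10.0,
                  periphery_deg: float = 40.0,
                  min_scale: float = 0.25) -> float:
    """Return a resolution scale (1.0 = full detail) for a screen region.

    Regions within `fovea_deg` of the gaze point keep full detail, regions
    beyond `periphery_deg` drop to `min_scale`, and the scale falls off
    linearly in between.
    """
    if angle_from_gaze_deg <= fovea_deg:
        return 1.0
    if angle_from_gaze_deg >= periphery_deg:
        return min_scale
    t = (angle_from_gaze_deg - fovea_deg) / (periphery_deg - fovea_deg)
    return 1.0 + t * (min_scale - 1.0)


def relative_cost(tile_angles_deg: list[float]) -> float:
    """Estimate shading cost relative to rendering every tile at full detail.

    Cost per tile is modelled as scale squared, because the scale applies
    to both the width and the height of the tile.
    """
    full_cost = len(tile_angles_deg)
    foveated_cost = sum(shading_scale(a) ** 2 for a in tile_angles_deg)
    return foveated_cost / full_cost


if __name__ == "__main__":
    # Hypothetical tiles at increasing angular distance from the gaze point.
    tiles = [0.0, 5.0, 15.0, 25.0, 35.0, 45.0]
    print(f"Relative shading cost: {relative_cost(tiles):.2f}")
```

The point of the sketch is the shape of the trade-off: tiles far from the gaze point contribute only a fraction of the full-detail cost, which is where the power saving comes from.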

Battery & Comfort: The external battery pack delivers up to 2.5 hours of general use. The 2025 refresh’s Dual Knit Band improves comfort for longer sessions.

Software: All this hardware runs visionOS 26, which introduces spatial widgets, enhanced Personas, and new on-device Apple Intelligence features for a smarter, more personalized experience.

Apple Neural Engine Technology: What It Is and How It Powers Vision Pro

So, what exactly is Apple Neural Engine technology? In simple terms, it’s a specialized part of Apple’s chips, like the M5, built from the ground up to accelerate machine learning and AI tasks. Think of it as a co-processor that excels at pattern recognition, image transformation, and content generation. In the Vision Pro’s M5, this Neural Engine has 16 cores, allowing it to handle massive amounts of AI calculations simultaneously and efficiently.

The performance leap is concrete. Apple states the new ANE provides up to 50% faster machine-learning performance system-wide, and some third-party apps can see AI tasks run up to 2x faster. This power translates directly into your experience:

- Spatial Photo & Video Processing: The ANE accelerates the complex task of turning your standard 2D photos into immersive spatial memories with a convincing sense of depth.

- Persona Creation & Animation: When you create your digital Persona for FaceTime calls, the Neural Engine analyzes facial features and expressions to build and animate a strikingly realistic avatar in real time.

- Real-Time Sensor Fusion: It helps process the constant flood of data from cameras and sensors (eye gaze, hand position, head movement) to maintain a stable, responsive, and comfortable interface.

For developers, this opens a new frontier. They can build apps with real-time object recognition, apply AI effects to spatial content, and integrate Apple Intelligence for language tasks—all processed on-device for speed and privacy. The Neural Engine is what makes Vision Pro feel intelligent and anticipatory, moving beyond a simple display into an aware spatial computer.

Latest Advancements in Neural Interface Inside Vision Pro

When we talk about a neural interface in the context of Vision Pro, we’re not referring to brain implants. Instead, we mean the sophisticated, AI-enhanced system that interprets your natural inputs (eyes, hands, voice, and head movements) as commands. This creates an interaction model that feels intuitive and almost thought-driven.

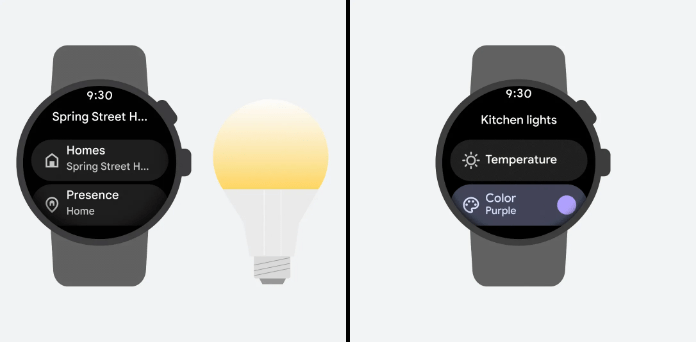

Eye & Hand Tracking as Core Interfaces: This is the star of the show. Cameras track your eyes with precision, enabling “look-to-select” where you simply glance at a button and tap your fingers together to click. Outward-facing cameras track your hands in 3D space, allowing for pinch, swipe, grab, and rotate gestures in mid-air. The Apple Neural Engine and R1 chip work in tandem to interpret these signals at ultra-low latency, making it feel seamless.
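The “look-to-select” flow described above can be sketched as a gaze ray test combined with a pinch check. Everything in this example is hypothetical: the `Button` type, the bounding-sphere hit test, and the 1.5 cm pinch threshold are illustrative assumptions, not the visionOS API or Apple’s actual tracking logic.

```python
"""Minimal look-to-select sketch: gaze picks the target, a pinch confirms it."""
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Button:
    name: str
    center: Vec3      # position in metres, in the same frame as the gaze ray
    radius: float     # radius of a bounding sphere around the button


def gaze_target(origin: Vec3, direction: Vec3,
                buttons: Sequence[Button]) -> Optional[Button]:
    """Return the nearest button whose bounding sphere the gaze ray hits."""
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        return None
    d = tuple(c / length for c in direction)

    best: Optional[Button] = None
    best_t = math.inf
    for button in buttons:
        oc = tuple(b - o for b, o in zip(button.center, origin))
        t = sum(a * b for a, b in zip(oc, d))       # distance along the ray
        if t < 0.0:
            continue                                # button is behind the viewer
        miss_sq = sum(c * c for c in oc) - t * t    # squared ray-to-centre distance
        if miss_sq <= button.radius ** 2 and t < best_t:
            best, best_t = button, t
    return best


def is_pinching(thumb_tip: Vec3, index_tip: Vec3,
                threshold_m: float = 0.015) -> bool:
    """Treat fingertips closer than the (assumed) threshold as a pinch."""
    return math.dist(thumb_tip, index_tip) <= threshold_m


def select(origin: Vec3, direction: Vec3, buttons: Sequence[Button],
           thumb_tip: Vec3, index_tip: Vec3) -> Optional[str]:
    """Activate the gazed-at button only while the fingers are pinched."""
    target = gaze_target(origin, direction, buttons)
    if target is not None and is_pinching(thumb_tip, index_tip):
        return target.name
    return None


if __name__ == "__main__":
    ui = [Button("Play", (0.0, 0.0, -1.0), 0.1),
          Button("Stop", (0.5, 0.0, -1.0), 0.1)]
    print(select((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), ui,
                 (0.0, 0.0, -0.3), (0.01, 0.0, -0.3)))
```

Running the example selects the “Play” button, because the gaze ray passes through its sphere and the fingertips are 1 cm apart. Separating the two checks mirrors the article’s description: the eyes choose, the hands confirm.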

Spatial Audio & Head Tracking: Sound is a crucial part of the interface. Vision Pro uses dynamic head-tracked spatial audio so that a movie playing on a virtual screen sounds like it’s coming from that fixed point in your room, even if you turn your head. This auditory precision, processed rapidly, is key to maintaining immersion and preventing disorientation.
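To see why head tracking keeps a sound anchored in the room, consider this simplified sketch. It computes where a fixed source sits relative to the direction the listener is facing and derives basic left/right gains. The coordinate convention and the constant-power panning are assumptions made for illustration; real spatial audio relies on far richer processing than a stereo pan.

```python
"""Simplified head-tracked audio sketch: a room-fixed source, a turning head."""
import math
from typing import Tuple


def relative_azimuth_deg(source_xz: Tuple[float, float],
                         listener_xz: Tuple[float, float],
                         head_yaw_deg: float) -> float:
    """Angle of the source relative to the listener's facing direction.

    Convention (an assumption for this sketch): +z is "forward" in the room,
    +x is to the right, and yaw increases as the head turns right.
    The result is in [-180, 180); negative means the source is to the left.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    bearing = math.degrees(math.atan2(dx, dz))
    return (bearing - head_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(azimuth_deg: float) -> Tuple[float, float]:
    """Constant-power (left, right) gains for a given relative azimuth."""
    pan = math.sin(math.radians(azimuth_deg))   # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0         # maps pan to 0 .. pi/2
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    screen = (0.0, 2.0)       # virtual screen two metres straight ahead
    listener = (0.0, 0.0)
    for yaw in (0.0, 45.0, 90.0):
        az = relative_azimuth_deg(screen, listener, yaw)
        left, right = stereo_gains(az)
        print(f"yaw {yaw:5.1f} -> azimuth {az:6.1f}, L={left:.2f}, R={right:.2f}")
```

As the head turns right, the computed azimuth swings to the left and the left gain rises, so the screen’s audio stays put in the room rather than following the head.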

The Magic of Low Latency: The 12-millisecond photon-to-photon latency is the unsung hero. If there were a delay between your movement and the display updating, your brain would perceive a mismatch, causing discomfort and breaking the illusion. This speed is fundamental to making the neural interface feel “natural.”
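A quick calculation puts the 12-millisecond figure in context by comparing it with how long a single display frame lasts. The 120Hz rate comes from the hardware section of this article; the 90Hz row is included only as an illustrative comparison point.

```python
"""Compare the quoted photon-to-photon latency with per-frame display time."""


def frame_time_ms(refresh_hz: float) -> float:
    """Time available to draw one frame, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def frames_spanned(latency_ms: float, refresh_hz: float) -> float:
    """How many display frames a given end-to-end latency covers."""
    return latency_ms / frame_time_ms(refresh_hz)


if __name__ == "__main__":
    PHOTON_TO_PHOTON_MS = 12.0       # figure quoted in the article
    for hz in (90.0, 120.0):         # 90 Hz is an illustrative comparison
        print(f"{hz:5.0f} Hz: frame = {frame_time_ms(hz):.2f} ms, "
              f"12 ms spans {frames_spanned(PHOTON_TO_PHOTON_MS, hz):.2f} frames")
```

At 120Hz a frame lasts about 8.3 ms, so 12 ms covers roughly 1.4 frames. In other words, the view of the real world trails reality by only a frame or two.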

These advancements enable breathtaking use cases. You can explore an immersive Jupiter simulation by just looking and moving your head. Professional creators use eye and hand tracking for precise control in spatial editing apps, interacting with 3D timelines and virtual canvases. To understand how VR and AR are evolving beyond Apple, explore our analysis of The Future of Virtual Reality.

Vision Pro 2 Release Date Rumors – What We Know (and What We Don’t)

Let’s address the elephant in the room: Vision Pro 2 release date rumors. As of the latest official information, Apple has not announced a release date for a “Vision Pro 2.” The company’s most recent move was the substantial October 2025 M5 upgrade to the existing Vision Pro model.

Rumors persist for understandable reasons. The pace of Apple silicon evolution (M-series chips typically advance every 1-2 years) leads to natural speculation. Furthermore, the jump from the original M2-based Vision Pro to the M5-based model is so significant that some view it as a generational leap in itself. However, analyst predictions and enthusiast chatter are not official announcements.

The smart approach for interested buyers and tech watchers is to focus on the reality of what’s shipping today: the current M5 Vision Pro’s features, the official visionOS roadmap, and the expansion of Apple Intelligence. Until Apple makes an announcement via its Newsroom or a keynote event, treat any specific Vision Pro 2 release date rumors as speculative. For now, the M5 Vision Pro remains the definitive reference point for evaluating Apple Vision Pro news and the state of neural interface technology.

Smart Glasses with Neural Engine Capabilities: Why Vision Pro Matters for the Whole Category

Vision Pro is more than a product; it’s a manifesto for the future of smart glasses with neural engine capabilities. This category refers to head-worn devices that overlay digital content on the real world and use dedicated neural processing hardware (like Apple’s Neural Engine) to power intelligent, context-aware experiences.

Vision Pro is Apple’s first-generation entry into this space. It demonstrates how a powerful 16-core Apple Neural Engine can enable real-time understanding of your environment, gaze, and gestures, all while running advanced Apple Intelligence features on-device. Its impact is poised to ripple across multiple domains:

- Work & Productivity: Imagine a spatial desktop with unlimited floating screens for coding, design, or research, or collaborative meetings where teammates’ Personas share a virtual 3D whiteboard.

- Entertainment: A personal theater with a screen that feels 100 feet wide, or immersive games that respond to your eye gaze and full-body movement. For more on this transformation, see How VR is Changing Gaming in 2025.

- Creative Industries: Directors could scout virtual sets, while 3D artists sculpt and animate in mid-air with intuitive hand gestures.

For everyday life, this technology points toward a future where navigating a city with AR directions, getting real-time translations overlaid on a street sign, or having personalized media follow you around your home becomes effortless. Smart glasses with neural engine capabilities aim to integrate computing into your field of view and interactions, making technology an invisible assistant rather than a distracting slab of glass.

Competitors vs Apple Vision Pro: How Neural Engine and Displays Set It Apart

While many capable VR headsets and smart glasses exist, Vision Pro carves out a unique position, largely due to its display technology and neural processing prowess.

Versus General Smart Glasses/VR Headsets: Many competitors focus on standalone VR gaming or basic AR overlays, often with lower-resolution displays and less advanced AI hardware. Vision Pro differentiates itself with its 23-million-pixel micro-OLED displays, high-quality video pass-through, and a sophisticated, controller-free interface powered by its custom chips.

Versus the Prior Vision Pro (M2-based): The M5 upgrade is a clear step up. It renders approximately 10% more pixels, enables up to 50% faster AI performance via the upgraded Neural Engine, and introduces advanced GPU features like hardware-accelerated ray tracing for more realistic visuals.
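It helps to translate “up to 50% faster” into time saved, since the two are easy to confuse. The sketch below assumes “N% faster” means throughput rises by N%, so a task takes 1 / (1 + N/100) of its previous time. The 300 ms baseline is a made-up number for illustration, not a measured Vision Pro figure.

```python
"""Convert a quoted "percent faster" figure into task time."""


def time_after_speedup(baseline_ms: float, percent_faster: float) -> float:
    """Task time after a speed-up, assuming throughput rises by the given percent."""
    if percent_faster <= -100.0:
        raise ValueError("percent_faster must be greater than -100")
    return baseline_ms / (1.0 + percent_faster / 100.0)


def time_saved_percent(percent_faster: float) -> float:
    """Share of the original time that the speed-up removes."""
    return 100.0 * (1.0 - 1.0 / (1.0 + percent_faster / 100.0))


if __name__ == "__main__":
    BASELINE_MS = 300.0   # hypothetical task time on the prior generation
    for label, pct in (("50% faster", 50.0), ("2x faster", 100.0)):
        print(f"{label}: {time_after_speedup(BASELINE_MS, pct):.0f} ms "
              f"({time_saved_percent(pct):.0f}% less time)")
```

Under that reading, a 50% speed-up cuts task time by about a third rather than by half, while a 2x speed-up halves it. Both vendor figures are “up to” ceilings, so typical gains may be smaller.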

The Neural Engine as a Key Differentiator: Even when competitors match on CPU or GPU specs, Vision Pro’s deep integration of the 16-core Apple Neural Engine with its sensor array and visionOS software is a formidable advantage. It enables more accurate, lower-latency eye and hand tracking, supports richer on-device AI features (like Apple Intelligence), and provides developers with a powerful toolkit for creating intelligent spatial experiences. A key competitor worth noting is the Meta Quest 3, which offers a different, more accessible approach to mixed reality. For a broader comparison, check out our guide to the Best VR Headsets for Gaming in 2024.

Future Prospects: What to Expect from the Next Generation

Looking ahead, the trajectory for devices like Vision Pro is clear. Hardware will continue evolving toward more efficient chips, lighter and slimmer form factors (eventually approaching everyday glasses), and even higher-fidelity displays. The inclusion of features like hardware ray tracing in the M5 signals Apple’s commitment to photorealistic spatial graphics.

Neural interface development will focus on refinement and expansion. We can expect eye and hand tracking to become so precise and low-latency that the interface feels invisible. Context-aware AI will deepen, with the system anticipating your needs based on your environment and activity. Apple Intelligence integration will expand, offering more robust multilingual and assistant features directly within your spatial workspace.

Apple Neural Engine technology will be the driving force behind this evolution. It could help apps feel more responsive, let NPCs in games react intelligently to your behavior, and power professional tools for real-time spatial video editing and automatic scene analysis. This positions future iterations of Vision Pro and similar smart glasses with neural engine capabilities to fundamentally redefine our relationship with technology, shifting from a device we look at to an intelligent space we live and work within.

Frequently Asked Questions

What is the most significant update to Apple Vision Pro?

The most significant update is the integration of the M5 chip, which includes a more powerful 16-core Apple Neural Engine. This upgrade is central to the current Apple Vision Pro news, delivering up to 50% faster AI performance, improved graphics with ray tracing, and snappier responsiveness across spatial computing tasks.

How does the Neural Engine improve the Vision Pro experience?

The Neural Engine works behind the scenes to make interactions feel intelligent. It powers the accuracy and speed of eye tracking (for selection), hand tracking (for gestures), and the real-time creation and animation of your digital Persona. It also accelerates the conversion of your photos into spatial memories and helps the device understand your physical environment quickly, making digital objects feel stable and real.

Is there an official Vision Pro 2 release date?

As of now, there is no official announcement from Apple regarding a “Vision Pro 2.” The company’s latest move was the substantial M5-chip upgrade to the existing model in October 2025. While Vision Pro 2 release date rumors will always circulate, the currently available M5 Vision Pro represents Apple’s cutting-edge technology and should be the benchmark for anyone evaluating a purchase today.

Can you use Vision Pro without controllers?

Absolutely. Vision Pro is designed for controller-free interaction, which is a prime example of its neural interface at work. You primarily use your eyes to look at items, your hands to make pinching or tapping gestures for selection, and your voice for commands. This creates a remarkably intuitive and natural way to navigate and interact with digital content.

How does Vision Pro relate to the future of smart glasses?

Vision Pro is a proving ground for the technologies needed for practical, powerful smart glasses with neural engine capabilities. It demonstrates how miniaturized high-resolution displays, powerful on-device AI processing via a Neural Engine, and advanced sensors for eye/hand tracking can work together. As these components become smaller and more efficient, the form factor can shrink from a headset to glasses, while retaining the ability to understand and enhance your world intelligently.