Atlas Robot AI Integration: The Humanoid Blueprint for an Autonomous Future

Estimated reading time: 8 minutes

Key Takeaways

- The new, all-electric Atlas robot marks a major step forward in AI integration, moving from pre-programmed routines to real-time, adaptive behavior.

- Powered by a partnership with Google DeepMind, its perception systems and neural networks enable unprecedented autonomy in complex, unmapped industrial environments.

- With 56 degrees of freedom and the ability to lift 50kg (110lbs), Atlas is engineered as the ultimate industrial task execution robot for logistics, assembly, and hazardous work.

- Its deployment in Hyundai factories by 2026 signals a tangible shift towards a hybrid automation workforce future, augmenting human labor rather than simply replacing it.

- While powerful, current limitations like battery life and cost highlight that this is the beginning of a rapid evolution in humanoid robotics.

Introduction: From Scripted Machines to AI Partners

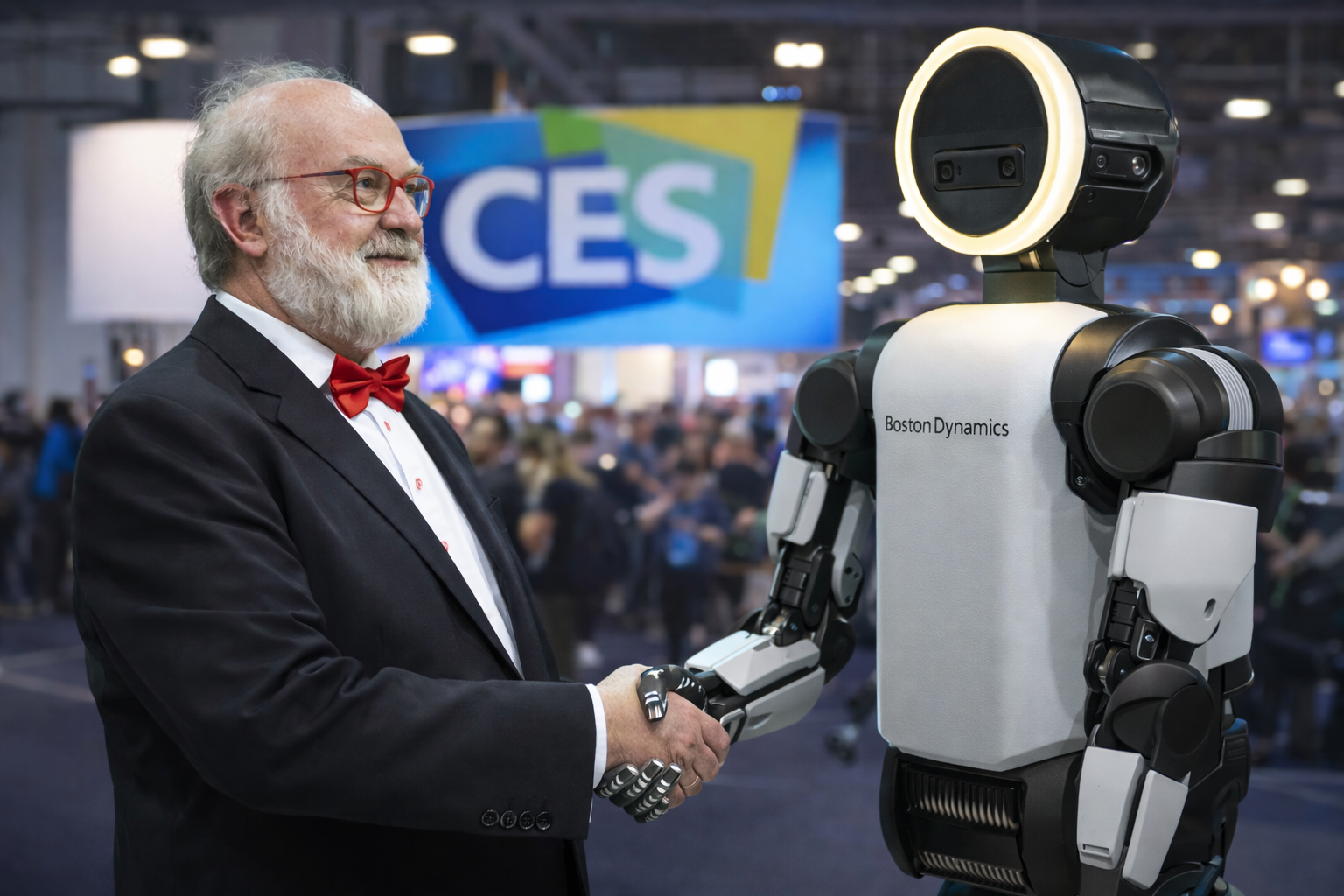

For decades, the public image of robots was one of rigidity: powerful but pre-programmed machines confined to cages and repetitive tasks. That is now changing. The evolution has accelerated from hydraulic actuators to quiet, all-electric motors, and from choreographed dance routines to adaptive intelligence. This shift is epitomized by Atlas’s AI integration, which redefines what humanoids can achieve.

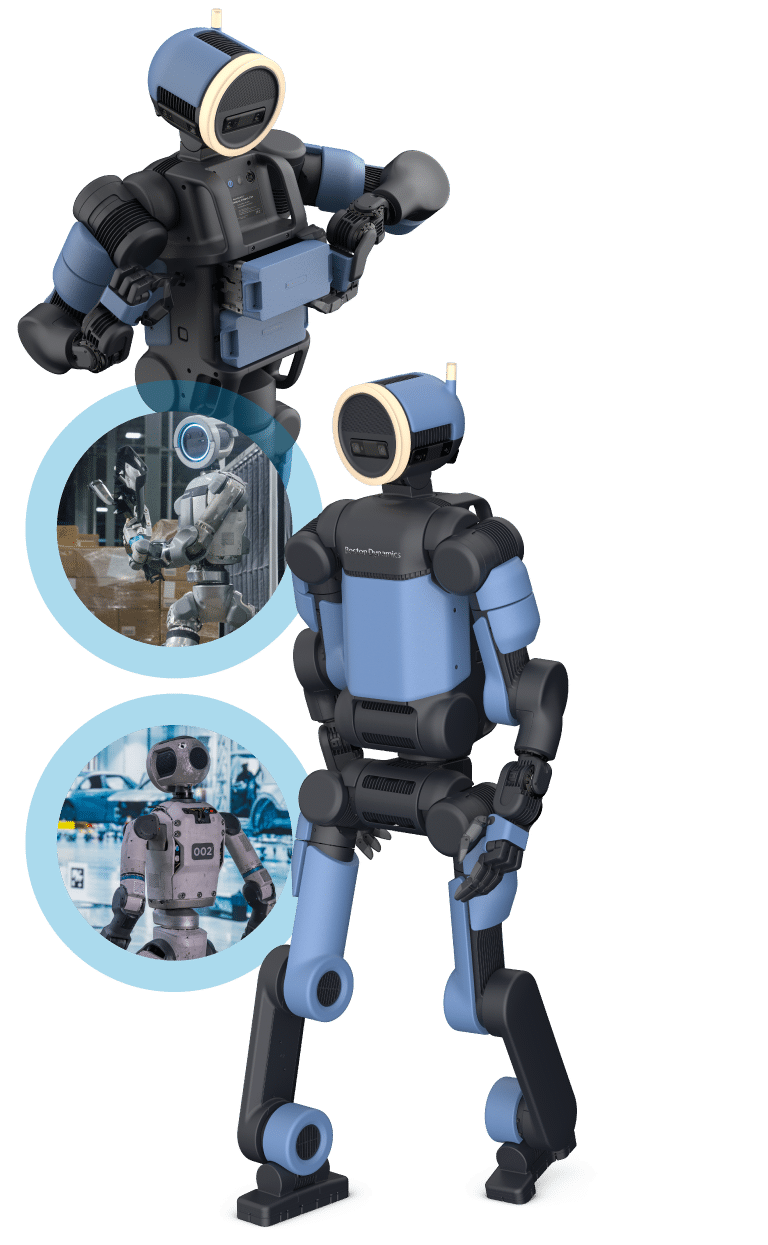

Boston Dynamics’ commercial Atlas robot, unveiled for 2026, is the physical manifestation of this new era. It’s a fully electric humanoid, standing 1.9 meters tall and weighing 90kg, but its specs tell a story of capability, not just form. With 56 degrees of freedom (DoF), it has a range of motion rivaling a human’s. Its strength is equally impressive: it can lift up to 50kg (110lbs) at peak and 30kg on a sustained basis, a capacity designed explicitly for demanding industrial tasks.

This AI integration is the core differentiator. It represents the fusion of Boston Dynamics’ legendary mobility with Google DeepMind’s advanced AI, creating a machine that can learn new tasks in a day and replicate them across an entire fleet. Gone are the loud hydraulic systems; in their place are 360° perception, self-swapping 4-hour batteries, and a quiet efficiency built for continuous operation alongside humans.

This post is for robotics enthusiasts, engineers, and futurists eager to understand how this integration works. We will deconstruct how Atlas’s AI integration advances humanoid capabilities through DeepMind’s language and perception research, enables a new class of industrial task-execution robots, and shapes the future of the automated workforce.

Atlas AI Integration Deconstructed: The Hardware & Software Symphony

The magic of the new Atlas isn’t a single feature, but a symphony of hardware and software designed for autonomy. At its core, Atlas’s AI integration relies on neural networks developed through the DeepMind partnership. These networks manage real-time balance, dynamic obstacle avoidance, and complex manipulation, transforming raw sensor data into fluid, purposeful action.

This intelligence is channeled through a revolutionary physical form:

- Tactile Dexterity: Each hand has three tactile-sensing fingers, and the robot’s reach extends to 2.3 meters, allowing for everything from precise assembly to gripping irregular engine parts.

- All-Electric Agility: The shift from hydraulic to full electric actuation is monumental. It’s quieter, more energy-efficient, and enables the smooth, powerful movements that allow Atlas to perform parkour-like maneuvers or carefully place heavy objects.

- Industrial-Strength Frame: Built from titanium and aluminum, it’s rugged enough for factory floors and hazardous environments, with an IP67 rating protecting its critical sensors from dust and water.

The demonstrations are no longer mere choreography. We’ve seen Atlas perform material handling with 50kg automotive parts, execute complex component assembly, and, critically, collaborate safely with humans. Its sensor suite allows it to detect an approaching human and adjust its behavior in real time, a non-negotiable feature for shared workspaces.
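One common way to "adjust behavior" when a person approaches is to scale the robot's commanded speed by the distance to the nearest human. The sketch below illustrates that idea only; it is not Boston Dynamics' implementation, and the function name and both distance thresholds are hypothetical values chosen for the example.

```python
# Hypothetical thresholds, chosen only for illustration.
STOP_DISTANCE_M = 0.5   # halt completely when a person is this close
SLOW_DISTANCE_M = 2.0   # start slowing down inside this radius


def speed_scale(human_distance_m: float) -> float:
    """Return a multiplier in [0.0, 1.0] to apply to the commanded speed."""
    if human_distance_m <= STOP_DISTANCE_M:
        return 0.0
    if human_distance_m >= SLOW_DISTANCE_M:
        return 1.0
    # Linear ramp between the stop radius and the slow radius.
    return (human_distance_m - STOP_DISTANCE_M) / (SLOW_DISTANCE_M - STOP_DISTANCE_M)
```

With these assumed thresholds, a person 1.25 meters away would halve the robot's speed, and anyone within half a meter would bring it to a stop.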

The contrast with the past is stark. The pre-2024 Atlas was a research platform, famous for dazzling but scripted parkour videos. The new Atlas, integrated with Orbit software for Manufacturing Execution Systems (MES) and Warehouse Management Systems (WMS), is an industrial asset. It’s slated for autonomous pilot programs in Hyundai factories by 2026, moving from the lab to the production line.

This evolution from demo bot to co-worker is powered by AI that enables task adaptation on the fly. But what fuels this cognitive ability? It starts with the most advanced perception systems ever deployed on a humanoid.

The DeepMind Brain: Language, Perception, and Real-World Cognition

If the robot’s body is a masterpiece of engineering, its mind is a product of cutting-edge AI research. DeepMind’s robotics work on language and perception is what equips Atlas with a form of situational awareness. Through 360° camera arrays and advanced vision models, the robot can map its environment, detect objects, estimate poses, and adhere to safety protocols without needing every inch of a factory pre-mapped.

This perception is the foundation for higher-order cognition. While specific model names like RT-2 aren’t confirmed for Atlas, the principle is clear: pairing vision with action models allows the robot to interpret natural commands. Imagine controlling Atlas via a tablet or even voice, giving an instruction like, “Retrieve the battery pack from the charging station and deliver it to workstation three.” The AI’s job is to break that down into perceiving the objects, planning a path, and executing the physical motions—all while avoiding dynamic obstacles.
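To make the perceive, plan, execute breakdown concrete, here is a minimal sketch of how a fetch-and-deliver request might expand into ordered steps. Everything in it (the `Step` class, the `decompose` function, and the step wording) is hypothetical and stands in for what a vision-language-action model would produce; it does not describe Atlas's actual software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    phase: str   # "perceive", "plan", or "execute"
    detail: str


def decompose(item: str, source: str, destination: str) -> list[Step]:
    """Expand a fetch-and-deliver request into ordered steps."""
    return [
        Step("perceive", f"locate {item} at {source}"),
        Step("plan", f"plan path to {source}"),
        Step("execute", f"walk to {source} and grasp {item}"),
        Step("perceive", f"locate {destination}"),
        Step("plan", f"plan path to {destination}"),
        Step("execute", f"walk to {destination} and release {item}"),
    ]


steps = decompose("battery pack", "charging station", "workstation three")
for number, step in enumerate(steps, start=1):
    print(f"{number}. [{step.phase}] {step.detail}")
```

In a real system the arguments would come from interpreting the spoken or typed command, and obstacle avoidance would run continuously during every execute step rather than appearing as a separate entry.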

The capabilities this unlocks are transformative:

- From Pre-Mapped to AI-Augmented: Robots no longer operate solely in rigid, digitally-twinned environments. They can adapt to changes—a pallet left in the aisle, a new piece of equipment—using their own perception.

- Fleet-Wide Learning: A task learned by one Atlas robot can be deployed across an entire fleet almost instantly, enabling rapid scaling of complex operations.

- The Language Frontier: The groundwork is laid for full large language model (LLM) integration, where a worker could give a complex, multi-step instruction in plain English. This progression is a key part of understanding how AI is fundamentally changing our world.

Atlas’s AI integration, accelerated by DeepMind, is what carries this technology from lab research to real-world Hyundai pilots in 2026. It shifts the robot from single-task execution toward generalized task execution. This is not just an incremental improvement; it’s one of the AI-driven emerging tech innovations shaping our immediate future. With this brain in place, Atlas is ready for the factory floor.

Redefining Industrial Task Execution

The promise of humanoid robots has always been to navigate spaces built for humans while performing superhuman tasks. Atlas defines this new category of industrial task execution robots. Unlike fixed robotic arms or even mobile manipulators on wheels, a bipedal humanoid with a 2.3-meter reach and full-body mobility can operate in the cluttered, unstructured environments of existing factories and warehouses.

Its applications prioritize areas where flexibility and strength are paramount:

- Logistics and Transport: Moving heavy, irregular parts (like engine blocks or tires) between stations at a walking speed of 9km/h.

- Precision Assembly: Using its tactile hands and AI-guided perception to assemble components that require dexterity and visual alignment.

- Hazardous Material Handling: Operating in environments that are dangerous for humans, leveraging its rugged build and remote operability.

The advantages over traditional automation are clear:

- High Adaptability: It can be redeployed for different tasks without massive re-engineering of the workspace.

- Safe Human Coexistence: Integrated sensors and behavioral AI ensure it can work alongside people, a feature emphasized for Hyundai factory deployments.

- Fleet Autonomy: The AI integration means a single pilot program’s learnings can be replicated across a global network of robots.

However, a transparent view requires acknowledging current limitations. The estimated cost is high (around $120k per unit), and the 4-hour battery life, while mitigated by auto-swapping, requires infrastructure. Its design prioritizes heavy-duty strength and reach over the ultra-fine dexterity some competitors target, and full LLM-based natural language control is a future goal, not a current feature.
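The battery trade-off can be put into rough numbers. The sketch below takes the 4-hour runtime from above and adds two assumptions that are not published figures: a 5-minute self-swap and a 2-hour recharge per pack. The function names are illustrative.

```python
import math

RUNTIME_H = 4.0   # from the article: roughly 4 hours per battery
SWAP_MIN = 5.0    # assumption: minutes lost per self-swap
CHARGE_H = 2.0    # assumption: hours to recharge a depleted pack


def swaps_per_day(runtime_h: float, swap_min: float) -> int:
    """Full work-plus-swap cycles that fit into 24 hours."""
    cycle_h = runtime_h + swap_min / 60.0
    return math.floor(24.0 / cycle_h)


def uptime_fraction(runtime_h: float, swap_min: float) -> float:
    """Share of each cycle spent working rather than swapping."""
    return runtime_h / (runtime_h + swap_min / 60.0)


def packs_per_robot(runtime_h: float, charge_h: float) -> int:
    """Packs needed so a charged one is always ready at swap time."""
    return 1 + math.ceil(charge_h / runtime_h)


print(swaps_per_day(RUNTIME_H, SWAP_MIN))
print(round(uptime_fraction(RUNTIME_H, SWAP_MIN), 3))
print(packs_per_robot(RUNTIME_H, CHARGE_H))
```

Under these assumed values, each robot spends about 98% of its time working and needs two packs in rotation. The infrastructure cost comes from the charging stations and spare packs, which scale with fleet size.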

Even so, Atlas’s AI integration is what makes these industrial demos possible. The AI enables not just the 50kg lift, but the intelligent decision of *where* and *how* to lift it, integrated into factory operations via the Orbit software platform. This is a clear example of the impact of artificial intelligence on industries in 2025 and beyond. And the impact extends beyond factories to society itself.

Charting the Automation Workforce Future

The arrival of capable humanoids like Atlas inevitably sparks discussions about job displacement. A more nuanced view reveals a path toward a hybrid automation workforce future. The design philosophy isn’t to replace humans but to augment them by handling the dull, dirty, and dangerous tasks (the “3 D’s”), along with the heavy lifting.

This creates a new operational model:

- Human-Robot Collaboration: Atlas takes on the physically taxing role of moving 50kg loads or performing repetitive overhead assembly. Human workers are freed for higher-value tasks: complex problem-solving, quality oversight, creative design, and strategic management of the robotic fleet itself.

- New Job Creation: The deployment of such systems generates demand for new skills: fleet managers, AI model tuners for specific factory tasks, Orbit software integration specialists, and maintenance engineers for advanced mechatronics.

- Safety-First Design: The IP67-rated sensor suite and behavioral protocols are designed to keep collaboration safe, building trust on the shop floor.

Addressing displacement concerns is crucial. While some roles will evolve, the history of automation shows it also creates new categories of employment. The rise of AI-powered humanoids will demand skills in programming adaptive behaviors, robotics-as-a-service (RaaS) management, and human-robot interaction safety. Understanding this complex shift is key to navigating the broader AI impact on business operations.

The Hyundai factory pilots starting in 2026 are the first concrete step in this reshaping of manufacturing labor. The goal is a net-positive outcome: elevating the human role in industry. The trajectory connects all our core themes: Atlas’s AI integration, powered by DeepMind’s language and perception research, creates advanced industrial task-execution robots, which in turn define a more collaborative automation workforce future.

This hybrid model isn’t about replacement—it’s about elevation. It envisions a future where human ingenuity is amplified by robotic strength and AI-assisted precision.

Frequently Asked Questions

Q: How is the 2026 Atlas different from the previous hydraulic Atlas robot?

A: The differences are foundational. The new Atlas is fully electric, making it quieter, more efficient, and suitable for prolonged indoor work alongside humans. It’s a commercial product designed for real industrial tasks, featuring 56 degrees of freedom (vs. 28 in the older model), a streamlined design, and, most importantly, integrated AI for autonomous task learning and adaptation, moving far beyond the scripted demos of its predecessor.

Q: What does “AI integration” actually mean for Atlas? Can it think for itself?

A: “AI integration” means Atlas uses machine learning models, particularly from DeepMind, to process sensor data and make real-time decisions. It doesn’t “think” like a human. Instead, it perceives its environment, balances dynamically, navigates obstacles it hasn’t seen before, and manipulates objects with adaptive force. It learns task paradigms (like “place this part here”) that can be generalized to new, similar situations, which is a form of embodied AI.

Q: When and where will Atlas robots be deployed?

A: Boston Dynamics, in partnership with Hyundai, plans to start pilot programs in Hyundai manufacturing facilities in 2026. These initial deployments will focus on specific, high-value industrial tasks like material handling and component assembly to validate the robot’s utility and safety in a real-world setting before broader commercial availability.

Q: How does Atlas’s AI compare to other humanoid robots like Tesla Optimus?

A: Atlas currently emphasizes high-performance mobility, high strength (50kg lift), and AI for dynamic balance and navigation in complex environments. Its partnership with Google DeepMind is a significant AI advantage. Tesla Optimus appears focused on a different scale: higher volume and lower cost, with an emphasis on fine manipulation and on Tesla’s expertise in AI and battery tech. The approaches are complementary, exploring different paths toward practical humanoid robots.

Q: What is the biggest challenge facing humanoid robots like Atlas?

A: Beyond technical hurdles like battery life and cost reduction, the biggest challenge is achieving generalized intelligence and reliability in unpredictable real-world settings. A robot must perform safely and effectively 99.9% of the time in a chaotic factory, not just in a controlled lab. The integration of robust perception, fail-safe mechanics, and adaptable AI is key to overcoming this. Deploying humanoids successfully at scale will be a critical part of the evolving AI-powered transportation and logistics landscape.
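To see why 99.9% is a demanding bar rather than a comfortable one, consider a rough calculation. It rests on two assumptions that are not from any published Atlas data: the figure is read as a per-task success rate, and tasks fail independently of one another. The workload of 500 tasks is likewise a hypothetical number.

```python
def expected_failures(success_rate: float, tasks: int) -> float:
    """Average number of failed tasks, assuming independent tasks."""
    return (1.0 - success_rate) * tasks


def prob_no_failure(success_rate: float, tasks: int) -> float:
    """Chance that every task succeeds, assuming independent tasks."""
    return success_rate ** tasks


TASKS = 500  # hypothetical workload for one robot
print(expected_failures(0.999, TASKS))
print(round(prob_no_failure(0.999, TASKS), 3))
```

Under these assumptions, a robot that succeeds on 99.9% of tasks still has roughly a 39% chance of at least one failure over 500 tasks, which is why fail-safe mechanics matter as much as raw success rate.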