Critical 2026 Forecast AI Regulation Edge Shift: Navigating the EU AI Act, On-Device Compliance, and Real-Time Governance

Estimated reading time: 12 minutes

Key Takeaways

- The critical 2026 forecast AI regulation edge shift is the convergence of stringent new AI laws and the mass migration of AI processing to the edge.

- By August 2026, the core obligations of the EU AI Act will be in full force, creating a hard compliance deadline for high-risk and general-purpose AI systems.

- On-device AI processing offers performance and privacy benefits but introduces profound new challenges for regulatory compliance and governance.

- Organizations must build real-time AI governance frameworks to manage compliance across thousands of distributed devices, because manual oversight cannot scale to fleets of that size.

- A proactive, compliance-by-design strategy for edge AI is no longer optional—it is a critical competitive advantage for 2026 and beyond.

Table of contents

- Introduction: Setting the Stage for the 2026 Shift

- Section 1: The EU AI Act’s Direct Impact on Edge Computing in 2026

- Section 2: On-Device AI Processing: A Double-Edged Sword of Benefits and Compliance Challenges

- Section 3: The Imperative for Real-Time AI Governance Frameworks by 2026

- Section 4: Building a 2026 AI Compliance Strategy for On-Device Models

- Section 5: The Broader Horizon: Industry Implications and Global Ripple Effects

- Frequently Asked Questions

Introduction: Setting the Stage for the 2026 Shift

We stand at the precipice of a fundamental restructuring of how artificial intelligence is built, deployed, and governed. The term “critical 2026 forecast AI regulation edge shift” describes the pivotal convergence of two tectonic forces reshaping the technology landscape.

On one side, a wave of comprehensive, risk-based AI regulation, led by the pioneering EU AI Act, is establishing a new global standard for responsible AI. On the other, a powerful architectural shift is moving AI processing from centralized cloud data centers to distributed, intelligent edge and on-device environments. By 2026, these trends will collide, creating a new paradigm with non-negotiable rules.

The timeline is concrete. According to analysis from Technology’s Legal Edge, by August 2026, the majority of obligations for high-risk and general-purpose AI systems under the EU AI Act will be in full force. This isn’t a distant future scenario; it’s a hard deadline on the corporate calendar.

Simultaneously, on-device AI processing is accelerating, driven by the demand for low-latency, reliable, and private applications. From autonomous vehicles making split-second decisions to medical devices diagnosing in real time, the edge is where AI meets the physical world. As noted by STL Partners, this shift isn’t just about performance; it presents a “unique surface for regulatory enforcement.” The very architecture chosen for efficiency becomes the frontline for compliance.

This blog provides an expert analysis of this impending shift. We will dissect the specific regulatory pressures, unravel the complex compliance challenges of distributed AI, and outline actionable strategies to not just survive but thrive in the post-2026 landscape.

Section 1: The EU AI Act’s Direct Impact on Edge Computing in 2026

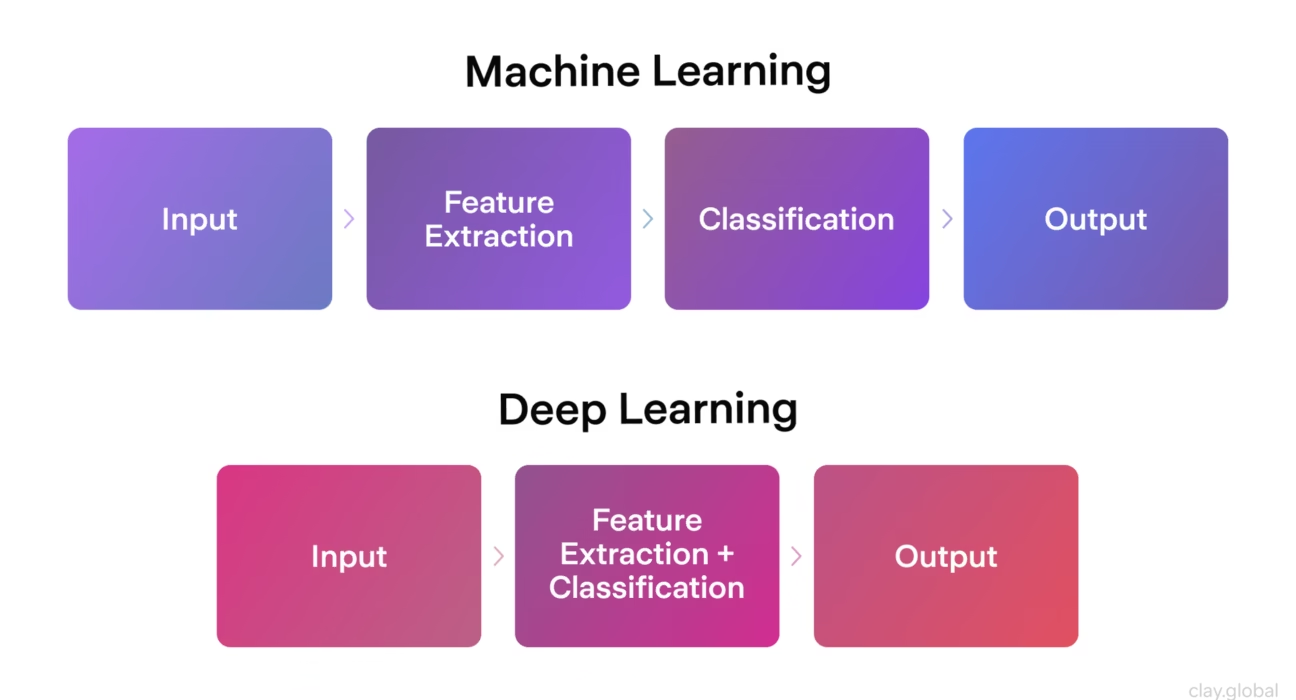

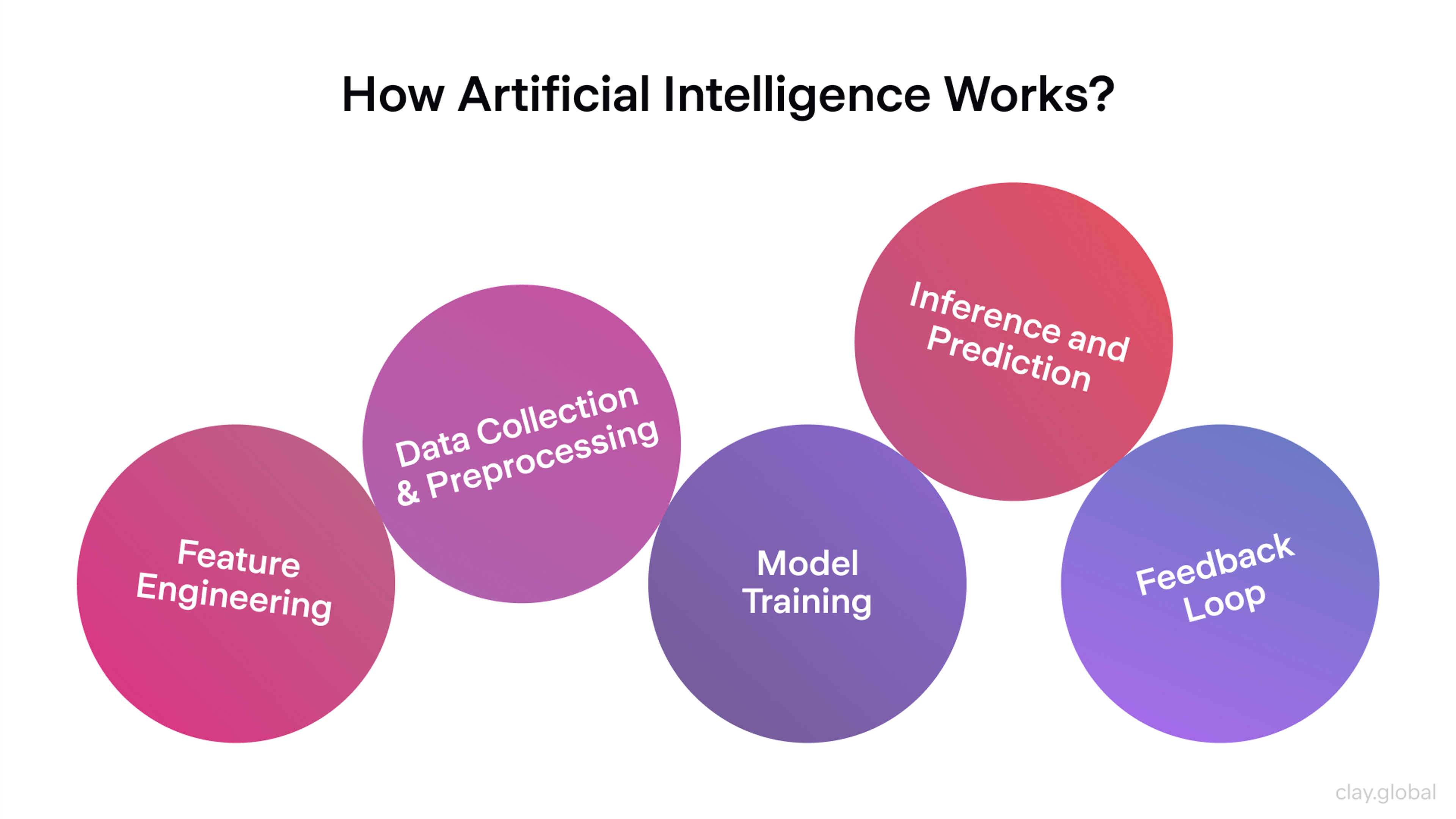

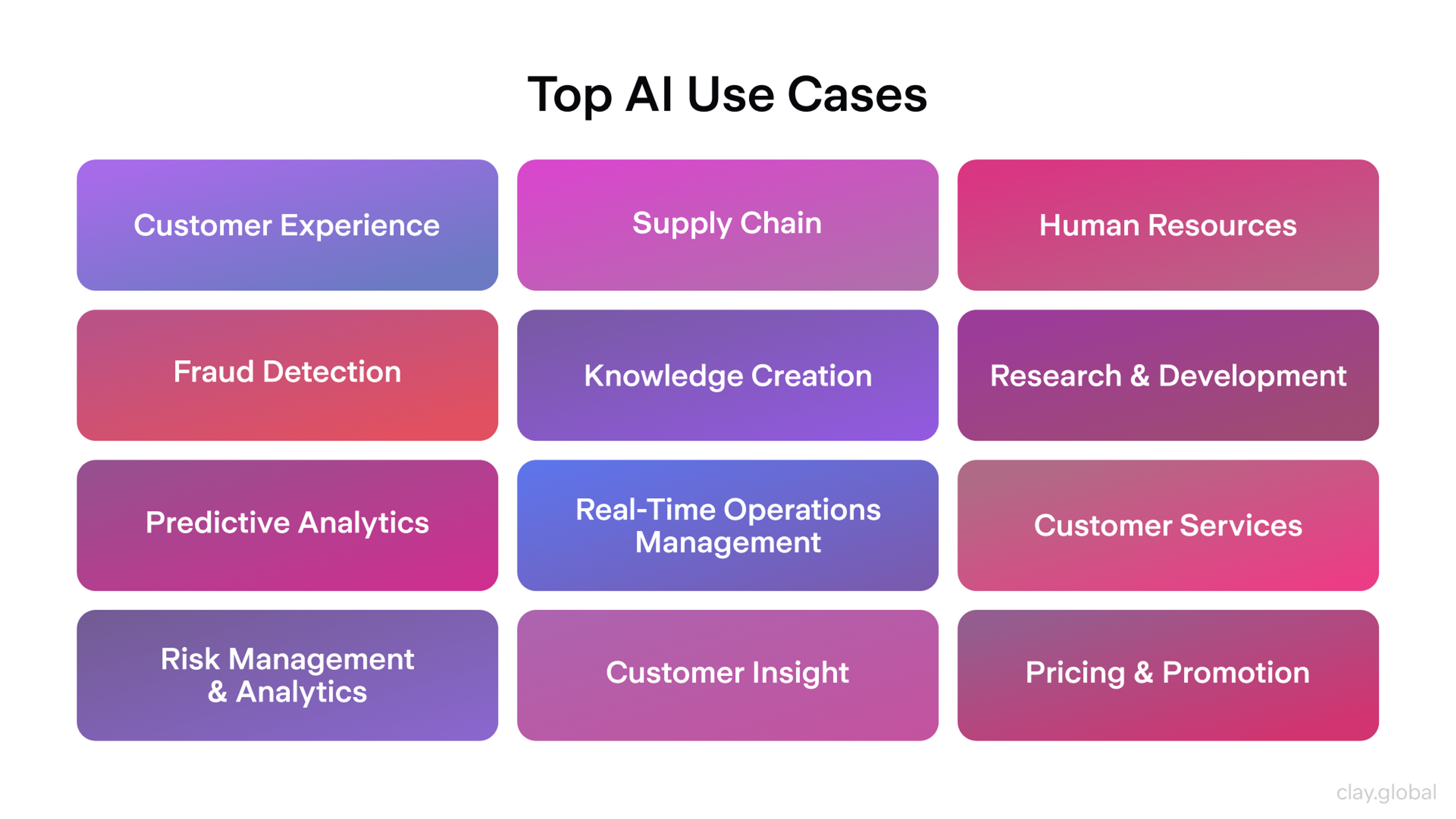

The EU AI Act operates on a risk-based classification system. Systems deemed “high-risk” are subject to the strictest requirements, encompassing rigorous conformity assessments, robust risk management, and extensive transparency obligations. Herein lies the first major impact: a vast number of compelling edge AI applications fall squarely into this “high-risk” category.

Consider the use cases: AI in medical devices performing diagnostics, AI systems managing critical infrastructure like power grids, and biometric identification in public spaces. As STL Partners’ analysis highlights, these are classic edge computing applications due to their need for real-time response and operational resilience, and they generally fall within the Act’s high-risk categories. Real-time remote biometric identification in publicly accessible spaces for law enforcement goes further still: it is prohibited except in narrowly defined cases.

The impact of the EU AI Act on edge computing in 2026 will be felt through several specific and challenging obligations:

- Enhanced Documentation & Logging: Providers of high-risk AI systems must maintain comprehensive technical documentation and ensure systems are designed to automatically log events over their lifetime. Translating this to a fleet of 10,000 smart cameras or 50,000 industrial robots is a monumental task. How do you ensure consistent, secure, and verifiable logging across heterogeneous, resource-constrained, and potentially offline devices? As noted by legal experts, this logging is not optional; it’s evidence for post-market surveillance and regulatory audits.

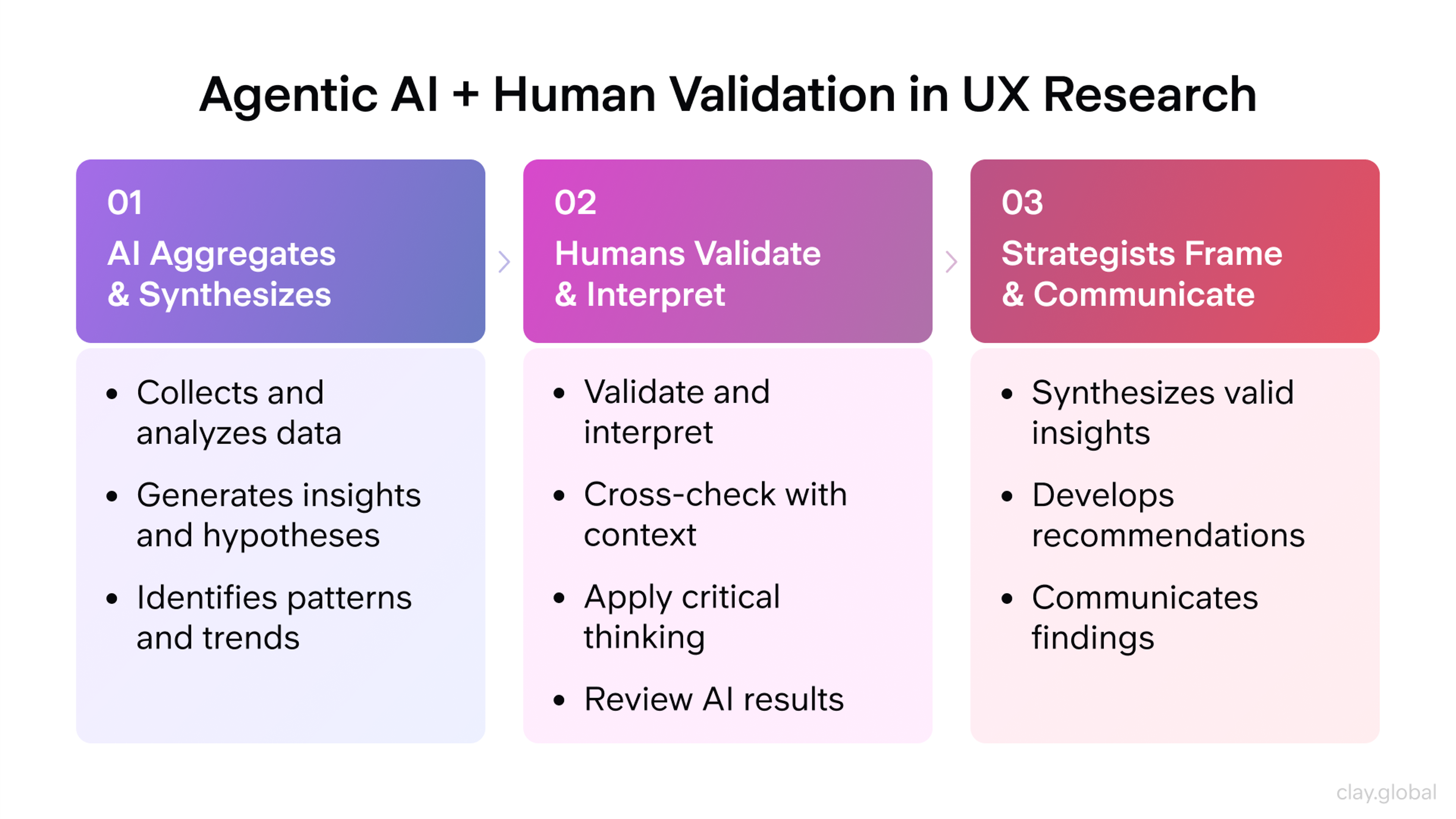

- Human Oversight: High-risk AI systems must be designed to allow for effective human oversight. This could mean the ability for a human to intervene, override a decision, or halt the system. Building this into an on-device loop, where the model acts in milliseconds but a remote human must still be alerted promptly and be able to override or stop the device, requires a fundamental rethink of system design. STL Partners emphasizes that this oversight must be “architecturally integrated,” not bolted on.

- Conformity Assessment & Risk Management: Before deployment, high-risk systems require a conformity assessment. For edge AI, this means proving the model performs safely and as intended not just in a lab, but in the chaotic, variable conditions of the real world. Post-deployment, a continuous risk management system is mandatory. This creates a dual challenge: managing the risk of the AI model itself and the operational risks of the distributed hardware it runs on. Analysts at Control Risks frame this as part of the broader “AI compute contest,” where control over the infrastructure layer becomes a key strategic and compliance imperative.
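To make the logging obligation more concrete, here is a minimal sketch of one common way to make on-device logs tamper-evident: a hash chain, in which each entry stores the SHA-256 hash of the previous entry. The class name `HashChainedLog`, the entry fields, and the sample events are hypothetical; the Act does not prescribe this format. A production system would also sign entries with a device key and regularly anchor the latest hash off-device, because truncating the newest entries cannot be detected from the chain alone.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical "previous hash" value for the first entry in a log.
GENESIS = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, no spaces) so the same entry always hashes the same.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class HashChainedLog:
    """Append-only event log for one device (hypothetical schema, not mandated by the Act).

    Each entry commits to the previous entry's hash, so editing, reordering,
    or removing any entry except the newest breaks the chain and verify()
    returns False.
    """
    device_id: str
    entries: List[dict] = field(default_factory=list)

    def append(self, event: dict, ts: Optional[float] = None) -> dict:
        body = {
            "device_id": self.device_id,
            "seq": len(self.entries),
            "ts": time.time() if ts is None else ts,
            "event": event,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS
        for i, entry in enumerate(self.entries):
            body = {k: v for k, v in entry.items() if k != "hash"}
            # Sequence numbers and back-links must be continuous.
            if body["seq"] != i or body["prev_hash"] != prev_hash:
                return False
            # The stored hash must match the entry's current contents.
            if _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


# Usage: log an inference and an operator override, then simulate tampering.
log = HashChainedLog("cam-0042")
log.append({"type": "inference", "model_version": "1.4.2", "decision": "alert"}, ts=1.0)
log.append({"type": "override", "operator": "op-7"}, ts=2.0)
print(log.verify())  # True
log.entries[0]["event"]["decision"] = "no_alert"
print(log.verify())  # False
```

The chain does not stop anyone from altering a log; it makes alteration detectable, which is what an auditor reviewing post-market surveillance evidence needs to rely on.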

Furthermore, the EU AI Act does not exist in a vacuum. It interacts powerfully with existing data regulations like the GDPR and the new Data Act. On-device processing can be a powerful tool for data minimization—processing data locally without unnecessary transmission. However, this creates a new governance complexity: balancing the mandate to minimize data collection with the regulatory requirement to retain certain logs and records for auditing and safety. Navigating this interplay is a core component of the 2026 challenge.
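One way to reconcile data minimization with record-keeping is tiered retention: keep personal data (for example, a raw camera crop) only for a short investigation window, and keep the decision metadata an auditor needs for longer. The sketch below assumes a hypothetical `LogRecord` schema and placeholder retention windows; real periods must come from legal review of the AI Act, the GDPR, and sector rules, not from code.

```python
from dataclasses import dataclass, replace
from typing import List, Optional

# Placeholder retention windows (hypothetical; set these from legal review).
RAW_INPUT_TTL_S = 72 * 3600          # raw personal data: short investigation window
METADATA_TTL_S = 365 * 24 * 3600     # decision metadata: longer audit window


@dataclass(frozen=True)
class LogRecord:
    ts: float                    # decision time, epoch seconds
    model_version: str
    decision: str
    confidence: float
    raw_input: Optional[bytes]   # e.g. a cropped frame; treated as personal data


def apply_retention(records: List[LogRecord], now: float) -> List[LogRecord]:
    """Strip raw inputs older than RAW_INPUT_TTL_S; drop records older than METADATA_TTL_S."""
    kept = []
    for r in records:
        age = now - r.ts
        if age > METADATA_TTL_S:
            continue  # outside the audit window: delete the whole record
        if age > RAW_INPUT_TTL_S and r.raw_input is not None:
            r = replace(r, raw_input=None)  # keep the metadata, discard the personal data
        kept.append(r)
    return kept
```

Run periodically on the device, a job like this preserves what is needed to reconstruct which model made which decision, while shortening the lifetime of the data that carries the privacy risk.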

Section 2: On-Device AI Processing: A Double-Edged Sword of Benefits and Compliance Challenges

To navigate the 2026 shift, organizations must have a clear-eyed view of both the powerful advantages and the formidable new hurdles introduced by on-device AI. Let’s systematically break down the on-device AI processing benefits and compliance challenges.

The Compelling Benefits

- Unmatched Performance: For applications where milliseconds matter, on-device inference eliminates network latency. An autonomous vehicle cannot wait for a round trip to a cloud server to identify a pedestrian. Local processing also keeps the system functional when connectivity is poor or absent, improving overall reliability and user experience. As highlighted by Zabala Innovation, this performance drive is also fueling European initiatives for sovereign edge AI chips.

- Enhanced Privacy & Data Sovereignty: This is perhaps the most significant synergy with regulation. By processing data locally and only sending essential insights (or nothing at all) to the cloud, companies can adhere to the core GDPR principle of data minimization. It reduces the “attack surface” for large-scale data breaches. Furthermore, it aligns perfectly with growing global demands for data sovereignty—keeping data within geographic borders. STL Partners and analyses of initiatives like the proposed EU Cloud and AI Development Act show a clear political drive to create a trustworthy, sovereign European data and AI ecosystem where on-device processing plays a key role.
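The “send only essential insights” pattern can be as simple as reducing raw detections to counts on the device. In this sketch, the `(label, confidence)` input format and the thresholds `MIN_CONFIDENCE` and `MIN_COUNT` are assumptions for illustration. Suppressing small counts is one common disclosure-control technique, not a guarantee of anonymity.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

MIN_CONFIDENCE = 0.5  # hypothetical: ignore low-confidence detections
MIN_COUNT = 5         # hypothetical: suppress small counts that could single people out


def summarize_locally(detections: Iterable[Tuple[str, float]]) -> Dict[str, int]:
    """Reduce on-device (label, confidence) detections to per-label counts for upload.

    Raw frames and per-event timestamps never enter the summary; labels seen
    fewer than MIN_COUNT times are dropped from it.
    """
    counts = Counter(label for label, conf in detections if conf >= MIN_CONFIDENCE)
    return {label: n for label, n in counts.items() if n >= MIN_COUNT}


detections = [("person", 0.9)] * 6 + [("bicycle", 0.8)] * 2 + [("person", 0.3)] * 10
print(summarize_locally(detections))  # {'person': 6}
```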

The Critical Compliance Challenges

- The Fragmented Governance Nightmare: In the cloud, you have one environment to monitor, update, and secure. At the edge, you have thousands or millions. Ensuring every device runs the correct, compliant version of an AI model, has the latest security patches, and is configured according to policy multiplies the operational burden with every device added to the fleet. A vulnerability or non-compliant model on a single device can become a regulatory incident.

- The Auditability Black Box: How do you prove to a regulator that your 100,000 deployed edge devices have been operating within the approved parameters for the last year? You need a verifiable chain of evidence: which model version was deployed when, what data influenced key decisions, and how the system performed. Generating and collecting this “explainability metadata” from resource-constrained devices without compromising performance or privacy is a profound technical hurdle.

- The Data Minimization Paradox: Regulations encourage you to collect and store less data. Yet, for safety, accountability, and compliance, you may be required to log certain decisions, incidents, or performance metrics. Striking the right balance—keeping enough data to demonstrate compliance but not so much as to violate privacy principles—requires careful legal and technical design from the ground up.
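A first step toward closing the auditability gap is a routine fleet check that compares what each device reports against a registry of approved model builds. The sketch below assumes each device periodically reports its model version and a SHA-256 hash of the model file it is actually running; the `DeviceReport` fields, the registry contents, and the 24-hour staleness window are hypothetical. Self-reported hashes are only as trustworthy as the device itself, so production fleets typically back them with hardware-based attestation (for example, a TPM or a trusted execution environment).

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

MAX_REPORT_AGE_S = 24 * 3600  # hypothetical: devices silent for longer are flagged


@dataclass(frozen=True)
class DeviceReport:
    device_id: str
    model_version: str
    model_sha256: str   # hash of the model file as measured on the device
    reported_at: float  # epoch seconds


def audit_fleet(
    reports: Iterable[DeviceReport], approved: Dict[str, str], now: float
) -> Dict[str, List[str]]:
    """Classify devices against a registry mapping approved version -> expected SHA-256."""
    findings: Dict[str, List[str]] = {"unapproved": [], "hash_mismatch": [], "stale": []}
    for r in reports:
        expected = approved.get(r.model_version)
        if expected is None:
            findings["unapproved"].append(r.device_id)     # version never approved
        elif expected != r.model_sha256:
            findings["hash_mismatch"].append(r.device_id)  # approved name, different bits
        if now - r.reported_at > MAX_REPORT_AGE_S:
            findings["stale"].append(r.device_id)          # no recent evidence either way
    return findings
```

Storing each run’s findings with a timestamp builds up the “which model version was deployed when” record that an audit asks for.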

“The edge is where AI meets the physical world—and also where it meets the regulator. The distributed nature that gives it strength also makes traditional, centralized compliance approaches utterly obsolete.”

Section 3: The Imperative for Real-Time AI Governance Frameworks by 2026

Given the challenges above, it becomes clear that legacy governance models—periodic audits, manual reporting, static policies—will collapse under the weight of distributed AI. The only viable answer is the development and deployment of real-time AI governance frameworks by 2026.
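In practice, real-time governance often takes the form of policy-as-code: rules are expressed as data that a central team can version and push to devices, and every inference is checked against them as it happens. The minimal sketch below assumes a hypothetical `Policy` with an approved-model allow-list and a confidence floor. Events below the floor are escalated to a human, which is one way to wire in the oversight requirement discussed in Section 1.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Action(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # route to a human reviewer before acting
    BLOCK = "block"        # halt: the model itself is outside policy


@dataclass(frozen=True)
class Policy:
    # Hypothetical policy fields, pushed to devices as data rather than code.
    approved_versions: FrozenSet[str]
    min_confidence: float  # below this, a human must confirm the decision


@dataclass(frozen=True)
class InferenceEvent:
    device_id: str
    model_version: str
    confidence: float


def evaluate(event: InferenceEvent, policy: Policy) -> Action:
    """Check one inference against the current policy, most severe rule first."""
    if event.model_version not in policy.approved_versions:
        return Action.BLOCK
    if event.confidence < policy.min_confidence:
        return Action.ESCALATE
    return Action.ALLOW


policy = Policy(approved_versions=frozenset({"1.4.2"}), min_confidence=0.8)
print(evaluate(InferenceEvent("cam-1", "1.4.2", 0.60), policy))  # Action.ESCALATE
```

Keeping the policy as plain data means a rule change (a revoked model version, a stricter threshold) can be audited and rolled out fleet-wide without redeploying the model itself.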