The Essential 2026 Edge AI Implementation Guide: A Strategic Roadmap

Estimated reading time: 12 minutes

Key Takeaways

- The essential 2026 edge AI implementation guide is a strategic framework for deploying AI directly on devices, enabling real-time processing and reducing cloud dependency.

- Adopting edge AI by 2026 is a commercial imperative, with potential to reduce operational downtime by up to 40% in sectors like manufacturing (source).

- Success hinges on an AI-native infrastructure roadmap for 2026 and on preparing for the future of multi-agent automation in 2026.

- Mastering hybrid cloud for AI inference is critical for scalable, efficient architectures that split workloads between edge and cloud.

- Securing distributed systems requires verified digital identity for enterprise communications, so that every device and AI agent can be authenticated.

Table of contents

- The Essential 2026 Edge AI Implementation Guide: A Strategic Roadmap

- Key Takeaways

- Introduction: Setting the Stage for 2026

- Section 1: The Foundational Shift to Edge AI and Hybrid Cloud

- Section 2: The Step-by-Step Implementation Roadmap

- Section 3: Enabling the Autonomous Enterprise with Multi-Agent Automation

- Section 4: Securing Trust in a Distributed Ecosystem

- Section 5: Navigating Pitfalls and Ensuring Long-Term Success

- Frequently Asked Questions

Introduction: Setting the Stage for 2026

We are on the cusp of a fundamental transformation in how enterprises leverage artificial intelligence. The essential 2026 edge AI implementation guide is not merely a technical manual; it is a strategic, step-by-step framework for deploying AI directly on devices (from factory sensors to medical equipment) to enable real-time processing, slash latency, and dramatically reduce dependency on centralized cloud infrastructure.

The commercial urgency is undeniable. For businesses aiming to secure a competitive advantage, adopting edge AI by 2026 is critical. Consider the potential impact: in sectors like manufacturing, edge AI implementations can reduce operational downtime by up to 40% (source). This is not a future possibility; it is a present-day imperative for resilience and efficiency.

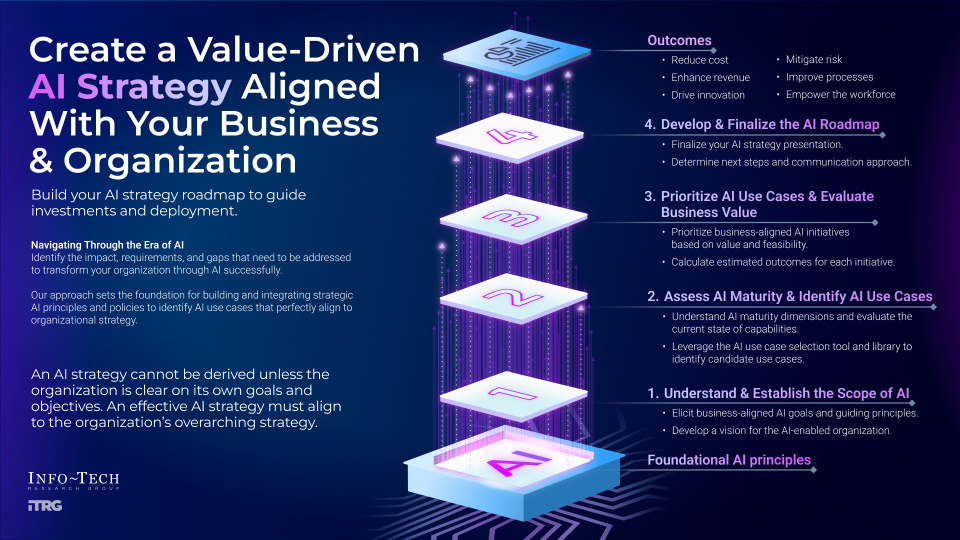

This guide investigates three interconnected themes that define the enterprise AI landscape of 2026:

- The need for an AI-native infrastructure roadmap for 2026: 71% of organizations are actively modernizing their core systems specifically for AI scalability and performance (source).

- The transformative future of multi-agent automation in 2026, in which collaborative AI systems will redefine operational workflows (source).

- The foundational role of hybrid cloud for AI inference, and the critical role of verified digital identity in securing enterprise communications within this new paradigm.

Our goal is to move beyond theory and provide a commercial analysis and an actionable roadmap that integrates these key technologies into a coherent, executable strategy for your enterprise.

Section 1: The Foundational Shift to Edge AI and Hybrid Cloud

At its core, Edge AI represents a shift from centralized, cloud-dependent processing to decentralized, intelligent computation at the source of data generation. It involves running optimized AI models directly on local devices like cameras, sensors, and industrial controllers. This is made possible by techniques like quantization (storing weights as 8-bit or 4-bit integers instead of 32-bit floats, which shrinks models by 4-8x) and pruning (removing unnecessary neural network connections), which allow sophisticated algorithms to run on resource-constrained hardware (source).
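To make the 4x figure concrete, here is a minimal sketch of magnitude pruning followed by symmetric per-tensor int8 quantization on a toy weight list, using only the Python standard library. The weight values and the 25% sparsity level are arbitrary assumptions, and the function names are illustrative; real toolchains (for example PyTorch or TensorFlow Lite) quantize whole models, often with calibration data. Note that pruning alone only produces zeros: the storage savings below come from quantization, and pruned weights pay off only with sparse storage or hardware that skips zeros.

```python
from array import array


def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to [-127, 127] so the range stays symmetric.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


# Toy float32 tensor standing in for one layer's weights (values are arbitrary).
weights = array("f", [0.42, -1.37, 0.05, 0.91, -0.66, 1.12, -0.03, 0.78])

pruned = magnitude_prune(weights, sparsity=0.25)  # zeroes the 2 smallest of 8
q, scale = quantize_int8(pruned)

# float32 stores 4 bytes per weight; int8 stores 1.
compression = (weights.itemsize * len(weights)) / (q.itemsize * len(q))
max_error = max(abs(a - b) for a, b in zip(pruned, dequantize_int8(q, scale)))
print(f"zeros after pruning: {pruned.count(0.0)}")
print(f"compression: {compression:.0f}x, max quantization error: {max_error:.4f}")
```

Going from 8-bit to 4-bit integers would halve storage again, which is where the upper end of the 4-8x range comes from, at the cost of larger rounding error.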

However, Edge AI does not operate in a vacuum. It is complemented by a second discipline: mastering hybrid cloud for AI inference. This approach relies on split inference, in which initial data processing and immediate decision-making happen on the edge device for speed and privacy, while heavier, less time-sensitive analysis is offloaded to the cloud, which also hosts model training.
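One simple way to divide inference work between edge and cloud is confidence-based escalation: the edge model acts on confident predictions immediately and forwards only compact features for uncertain cases. The sketch below assumes a hypothetical `EdgeResult` produced by an on-device model, an arbitrarily chosen 0.85 threshold, and a plain list standing in for the cloud uplink. (Split inference can also mean partitioning a single model's layers between device and cloud; that variant is not shown.)

```python
from dataclasses import dataclass, field


@dataclass
class EdgeResult:
    """Output of a hypothetical on-device model."""
    label: str
    confidence: float


@dataclass
class HybridRouter:
    """Decides on the device when the edge model is confident; escalates otherwise."""
    threshold: float = 0.85
    cloud_queue: list = field(default_factory=list)  # stand-in for a real uplink

    def handle(self, frame_id: str, features: list, result: EdgeResult) -> str:
        if result.confidence >= self.threshold:
            # Fast path: act locally with no network round-trip.
            return f"edge:{result.label}"
        # Slow path: send compact features (not raw sensor data) for heavier cloud analysis.
        self.cloud_queue.append({"frame_id": frame_id, "features": features})
        return "escalated"


router = HybridRouter()
print(router.handle("frame-1", [0.12, 0.70], EdgeResult("person", 0.97)))  # edge:person
print(router.handle("frame-2", [0.40, 0.21], EdgeResult("person", 0.55)))  # escalated
print(len(router.cloud_queue))  # 1
```

Raising the threshold sends more traffic to the cloud in exchange for fewer local mistakes; the right value depends on the cost of a wrong on-device decision.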

Imagine a retail security camera that locally identifies potential shoplifting behavior in milliseconds, alerting staff instantly, while only sending anonymized metadata to the cloud for long-term pattern analysis. Or a bedside patient monitor in a hospital that processes vital signs locally to trigger immediate alerts, while securely transmitting summarized trends to central records.
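The patient-monitor example can be sketched as a function that runs on the device, returning out-of-range readings for an immediate local alert and a windowed summary that is the only data sent upstream. The heart-rate bounds are hypothetical placeholders, not clinical guidance, and `process_window` is an illustrative name.

```python
from statistics import mean

# Hypothetical alert bounds for illustration only; not clinical guidance.
HEART_RATE_BOUNDS = (40, 130)  # beats per minute


def process_window(readings, bounds=HEART_RATE_BOUNDS):
    """Runs on the device: returns out-of-range readings for an immediate local
    alert, plus a compact summary that is the only thing sent upstream."""
    low, high = bounds
    alerts = [r for r in readings if r < low or r > high]
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 1),
        "alerts": len(alerts),
    }
    return alerts, summary


alerts, summary = process_window([72, 75, 71, 138, 74, 73])
for r in alerts:
    print(f"LOCAL ALERT: heart rate {r} bpm")  # raised on the device, no cloud needed
print(summary)  # uploaded instead of the raw stream
```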

This hybrid model is the backbone of scalable AI. Furthermore, Edge AI is the critical enabler for the future of multi-agent automation in 2026. It facilitates federated learning, in which AI models on different edge devices learn collaboratively by training on local data and sharing only model updates, so raw sensitive data is never centralized. This optimizes distributed workflows, from a fleet of delivery vehicles learning route efficiencies to networked robots on a factory floor improving coordination (source) (source).
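Federated averaging (FedAvg) is the best-known aggregation scheme for federated learning. The minimal sketch below trains a one-variable linear model across three simulated devices: each device runs a few gradient-descent steps on its own data, and the server averages the resulting parameters weighted by local sample counts. The data, learning rate, and round counts are assumptions chosen for illustration; real deployments add secure aggregation and must cope with clients that drop out.

```python
def local_update(w, b, data, lr=0.01, epochs=20):
    """A few epochs of full-batch gradient descent on y = w*x + b, on one device."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates):
    """FedAvg aggregation: average client parameters weighted by local sample count."""
    total = sum(n for _, _, n in updates)
    w = sum(wi * n for wi, _, n in updates) / total
    b = sum(bi * n for _, bi, n in updates) / total
    return w, b


# Simulated private datasets, roughly following y = 2x + 1; they never leave each device.
devices = [
    [(0.0, 1.0), (1.0, 3.1), (2.0, 4.9)],
    [(3.0, 7.0), (4.0, 9.2)],
    [(1.5, 4.0), (2.5, 6.1), (3.5, 7.9), (0.5, 2.0)],
]

w, b = 0.0, 0.0  # global model held by the aggregation server
for _ in range(100):  # communication rounds
    updates = []
    for data in devices:
        local_w, local_b = local_update(w, b, data)
        updates.append((local_w, local_b, len(data)))  # only parameters are shared
    w, b = federated_average(updates)

print(f"global model: y = {w:.2f}x + {b:.2f}")
```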

Section 2: The Step-by-Step Implementation Roadmap

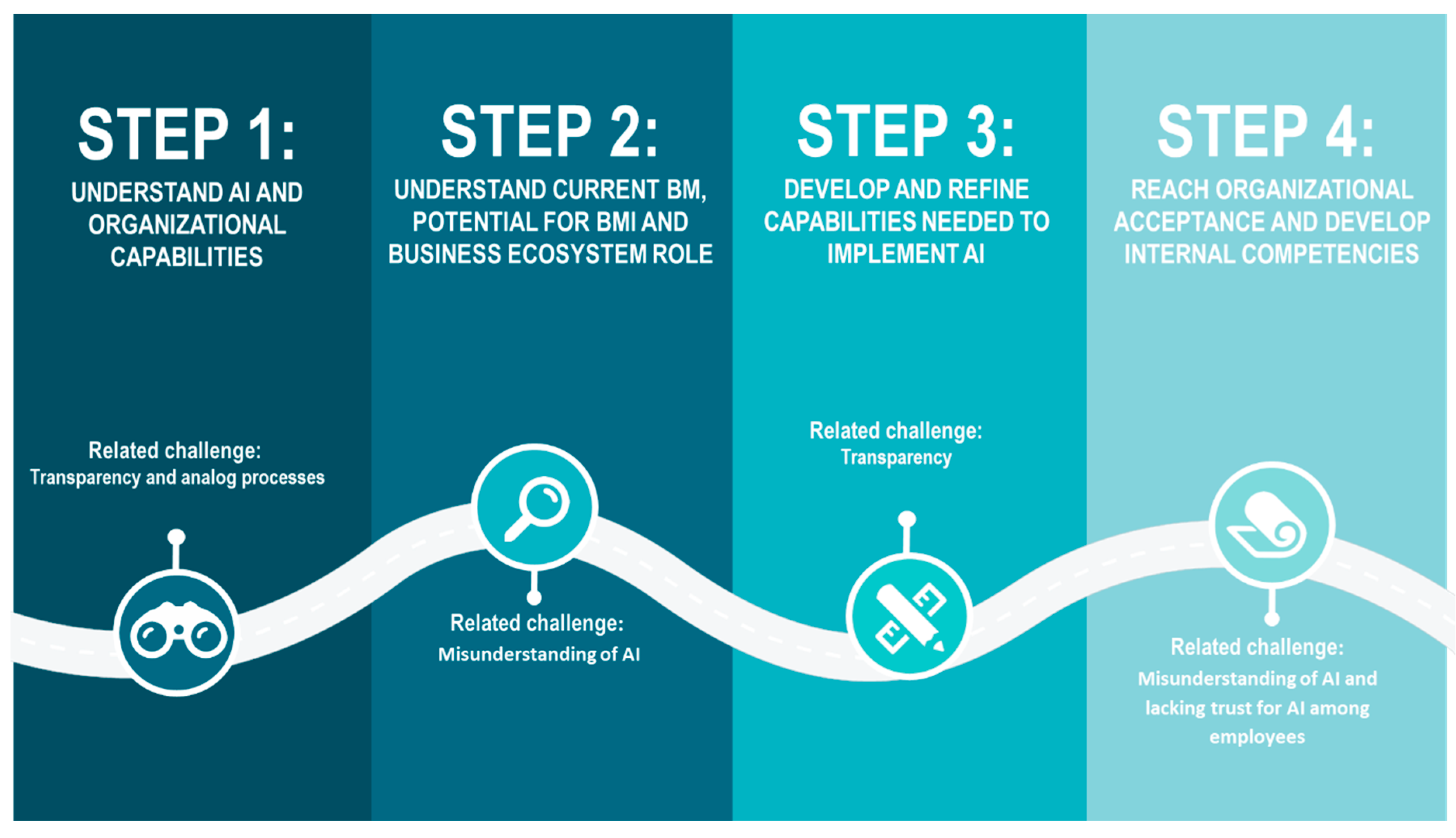

This section is the core of the essential 2026 edge AI implementation guide. Moving from concept to production requires a disciplined, phased approach.

Phase 1: Assess Readiness and Prioritize Use Cases

Begin with a clear-eyed assessment of your organization’s data quality, existing IT/OT infrastructure maturity, and AI literacy across teams. Utilize established frameworks, like those from Gartner, to benchmark your position. The key is to prioritize edge-compatible, high-ROI workloads. Focus on areas where latency, bandwidth costs, or data privacy are paramount. Prime candidates include:

- Predictive Maintenance: Analyzing vibration and thermal data from machinery to forecast failures.

- Real-Time Quality Inspection: Using computer vision on production lines to detect defects at high speed.

- Personalized Customer Experiences: In-store analytics that adapt displays or offers based on local foot traffic.

Selecting the right starting point is crucial for building momentum and demonstrating value (source) (source).
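A lightweight way to rank candidate use cases is a weighted scoring matrix. In this sketch the criteria mirror the ones above (latency, bandwidth, privacy, ROI), but the weights and the 1-5 ratings are illustrative assumptions, not measurements; replace them with your own assessment.

```python
# Hypothetical criterion weights (summing to 1.0); calibrate them with stakeholders.
WEIGHTS = {"latency": 0.3, "bandwidth": 0.2, "privacy": 0.2, "roi": 0.3}


def edge_fit_score(ratings):
    """Weighted sum of 1-5 ratings for how strongly each criterion favors the edge."""
    return sum(WEIGHTS[k] * ratings[k] for k in WEIGHTS)


# Illustrative ratings only, not benchmark data.
candidates = {
    "Predictive maintenance": {"latency": 4, "bandwidth": 5, "privacy": 2, "roi": 5},
    "Real-time quality inspection": {"latency": 5, "bandwidth": 4, "privacy": 2, "roi": 4},
    "Personalized in-store experiences": {"latency": 3, "bandwidth": 3, "privacy": 4, "roi": 3},
}

ranked = sorted(candidates, key=lambda name: edge_fit_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{edge_fit_score(candidates[name]):.2f}  {name}")
```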

Phase 2: Architect for Scale and Inference

This phase is where mastering hybrid cloud for AI inference becomes an architectural discipline. Design systems that leverage Multi-access Edge Computing (MEC) and 5G networks for ultra-low-latency data distribution between edge nodes and cloud cores. For more on the foundational role of connectivity, see our analysis of unstoppable 5G connectivity.

Your architecture must explicitly define which parts of the AI inference pipeline run where. Simpler, time-critical models reside on the edge. Larger, more complex models and the training pipeline reside in the cloud. This hybrid design is not optional; it is the foundation for scalable, efficient, and resilient AI operations (source) (source).
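Making placement explicit can be as simple as a rule per pipeline stage. This sketch assumes a hypothetical 80 ms cloud round-trip and a 512 MB edge memory budget, and the stage names and sizes are invented: a stage goes to the edge when its deadline is shorter than the round-trip, and the function flags stages that are too urgent for the cloud but too large for the device (a sign they need compression first).

```python
from dataclasses import dataclass

# Hypothetical figures for illustration; measure your own network and hardware.
CLOUD_ROUND_TRIP_MS = 80
EDGE_MEMORY_MB = 512


@dataclass
class Stage:
    name: str
    latency_budget_ms: float  # deadline for this stage's result
    model_size_mb: float      # memory the model needs at inference time


def place(stage: Stage) -> str:
    """Put a stage on the edge if it cannot wait for the cloud; otherwise use the cloud."""
    fits_on_device = stage.model_size_mb <= EDGE_MEMORY_MB
    needs_local = stage.latency_budget_ms < CLOUD_ROUND_TRIP_MS
    if needs_local and not fits_on_device:
        raise ValueError(f"{stage.name}: too urgent for the cloud but too large for the edge")
    return "edge" if needs_local else "cloud"


pipeline = [
    Stage("defect-detector", latency_budget_ms=20, model_size_mb=45),
    Stage("root-cause-analysis", latency_budget_ms=5_000, model_size_mb=4_000),
    Stage("retraining", latency_budget_ms=86_400_000, model_size_mb=4_000),
]
for s in pipeline:
    print(f"{s.name}: {place(s)}")
```

A real policy would also weigh privacy and bandwidth cost, which can push a stage to the edge even when its latency budget would tolerate the cloud.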

Phase 3: Embed Security and Trust from the Start

In a distributed AI ecosystem, security cannot be bolted on; it must be baked in. This is where understanding the impact of verified digital identity on enterprise comms is non-negotiable. Every device, sensor, and AI agent in your network must have a cryptographically verifiable identity.

Implement robust Identity and Access Management (IAM) and end-to-end encryption to authenticate all communications between edge devices and central systems. This prevents spoofing and man-in-the-middle attacks, and ensures that only authorized agents can act on or share data. In sectors like healthcare and finance, this is the bedrock of compliance and trust (source) (source).
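As a simplified illustration of per-device authentication, the sketch below tags each message with an HMAC-SHA256 code computed from a key unique to that device, then rejects unknown devices, tampered payloads, and stale timestamps. This is a stand-in under stated assumptions: production systems typically use per-device X.509 certificates with mutual TLS and hardware-protected keys, and HMAC provides integrity and authenticity but not encryption. `DEVICE_KEYS`, `sign`, and `verify` are hypothetical names.

```python
import hashlib
import hmac
import json
import secrets
import time

MAX_AGE_S = 30  # reject stale messages to narrow the replay window

# Hypothetical registry: each device receives its own secret key at provisioning time.
DEVICE_KEYS = {"sensor-017": secrets.token_bytes(32)}


def _digest(key: bytes, body: dict) -> str:
    # Canonical JSON (sorted keys) so signer and verifier hash identical bytes.
    msg = json.dumps(body, sort_keys=True).encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def sign(device_id: str, payload: dict, key: bytes) -> dict:
    """Run on the device: attach a timestamp and an HMAC-SHA256 tag."""
    body = {"device_id": device_id, "ts": int(time.time()), "payload": payload}
    return {**body, "sig": _digest(key, body)}


def verify(message: dict) -> bool:
    """Run centrally: accept only fresh, untampered messages from known devices."""
    key = DEVICE_KEYS.get(message.get("device_id"))
    if key is None:
        return False  # unknown identity: reject
    body = {k: v for k, v in message.items() if k != "sig"}
    fresh = abs(time.time() - body.get("ts", 0)) <= MAX_AGE_S
    # compare_digest avoids leaking information through timing differences.
    return fresh and hmac.compare_digest(_digest(key, body), message.get("sig", ""))


msg = sign("sensor-017", {"temp_c": 71.5}, DEVICE_KEYS["sensor-017"])
print(verify(msg))                                   # True
print(verify({**msg, "payload": {"temp_c": 20.0}}))  # False: tampered payload
print(verify({**msg, "device_id": "rogue-9"}))       # False: unknown device
```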

Phase 4: Operationalize with an AI-Native Mindset

This final implementation phase aligns directly with your AI-native infrastructure roadmap for 2026. It involves implementing MLOps (Machine Learning Operations) practices to manage the full lifecycle of your edge AI models, from development and testing to deployment, monitoring, and continuous retraining.

An AI-native infrastructure is automated, scalable, and observable. It uses containers and orchestration (like Kubernetes) to deploy models consistently across thousands of edge devices. It monitors for model drift (where a model’s performance degrades as real-world data changes) and automatically triggers retraining pipelines. This operational maturity is what enables scaling from a successful pilot project to full enterprise-wide production (source) (source).
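Drift monitoring often starts by comparing live input distributions against the training-time distribution, because labels that reveal true performance can arrive late. The sketch below computes the Population Stability Index (PSI) for one feature and calls a hypothetical `retrain` hook when the score exceeds 0.2, a commonly cited rule of thumb rather than a universal threshold. The bin count and sample data are assumptions.

```python
import math

DRIFT_THRESHOLD = 0.2  # a common rule of thumb, not a universal standard


def psi(reference, current, bins=5):
    """Population Stability Index of one feature: training-time vs live sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample):
        counts = [0] * bins
        for v in sample:
            # Clamp so values outside the reference range fall into the end bins.
            idx = min(bins - 1, max(0, int((v - lo) / width)))
            counts[idx] += 1
        # Floor empty bins at a small value to avoid log(0) and division by zero.
        return [max(c / len(sample), 1e-4) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def check_drift(reference, current, retrain):
    """Compute PSI and call the (hypothetical) retraining hook if it exceeds the threshold."""
    score = psi(reference, current)
    if score > DRIFT_THRESHOLD:
        retrain(score)
    return score


reference = [20 + (i % 10) for i in range(200)]  # e.g. temperatures seen during training
current = [24 + (i % 10) for i in range(200)]    # same sensor after conditions shifted
triggered = []
score = check_drift(reference, current, retrain=triggered.append)
print(f"PSI = {score:.2f}, retraining triggered: {bool(triggered)}")
```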

Section 3: Enabling the Autonomous Enterprise with Multi-Agent Automation

The future of multi-agent automation in 2026 lies beyond single AI tasks, in systems of collaborative, semi-autonomous agents. Agentic AI refers to intelligent software agents that can perceive their environment, make decisions, and take actions to achieve specific goals.

Edge AI is the force that makes this future practical. It provides the local processing power these agents need to make real-time decisions without round-trips to the cloud. In a manufacturing context, an agent on a piece of machinery can detect an anomaly in vibration patterns, diagnose a potential bearing failure, and automatically trigger a work order in the maintenance system, contributing to reported downtime reductions of 25% or more (source) (source).
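The manufacturing agent described above can be sketched as a small perceive, decide, act loop. This minimal version flags a vibration reading whose z-score against a rolling baseline exceeds a limit and then calls a hypothetical `create_work_order` hook. The window size, z-limit, machine name, and readings are assumptions, and real bearing diagnostics usually rely on spectral analysis rather than a single RMS value.

```python
from collections import deque
from statistics import mean, stdev


class VibrationAgent:
    """Perceive, decide, act loop for one machine, running on its edge device."""

    def __init__(self, machine_id, create_work_order, window=50, z_limit=4.0):
        self.machine_id = machine_id
        self.create_work_order = create_work_order  # hypothetical maintenance-system hook
        self.baseline = deque(maxlen=window)        # recent vibration RMS readings (mm/s)
        self.z_limit = z_limit
        self.order_open = False

    def observe(self, rms_mm_s):
        """Perceive one reading; returns True if it triggered a work order."""
        if len(self.baseline) >= 10 and not self.order_open:
            mu, sigma = mean(self.baseline), stdev(self.baseline)
            # Decide: is this reading far above the recent baseline?
            if sigma > 0 and (rms_mm_s - mu) / sigma > self.z_limit:
                # Act: open a work order locally, with no cloud round-trip.
                self.create_work_order(
                    machine=self.machine_id,
                    reason=f"vibration {rms_mm_s:.1f} mm/s vs baseline {mu:.1f}; inspect bearings",
                )
                self.order_open = True
                return True  # keep the outlier out of the baseline
        self.baseline.append(rms_mm_s)
        return False


orders = []
agent = VibrationAgent("press-04", create_work_order=lambda **wo: orders.append(wo))
readings = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 1.9, 2.0, 2.1, 2.0, 2.0, 6.5]
flags = [agent.observe(r) for r in readings]
print(flags[-1], orders[0]["reason"])
```

The `order_open` flag stops the agent from filing duplicate work orders while a technician is already dispatched; a fuller version would reset it when the maintenance system closes the order.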

Let’s examine concrete commercial case studies:

- Logistics & Supply Chain: Edge-based agents monitor engine health, tire pressure, and driver behavior across a fleet of vehicles. They predict maintenance needs, optimize routes in real-time based on traffic and weather, and enhance fuel efficiency.

- Healthcare: Diagnostic agents on portable ultrasound or ECG devices provide immediate, preliminary analysis to clinicians in the field, flagging critical conditions for urgent review while securely syncing data with electronic health records.

- Financial Services: Fraud