“`html

Mastering Ethical AI Deployment Strategies for 2025: Trends, Challenges, and Best Practices

Estimated reading time: 10 minutes

Key Takeaways

- The rapid advancement of AI in 2025 makes ethical AI deployment strategies a critical concern for all organizations.

- Recent breakthroughs in ethical AI machine learning are enhancing transparency but also introducing new dilemmas.

- Established responsible AI development frameworks like AI TRiSM provide essential guidance for ethical implementation.

- Navigating evolving global AI regulations and ethics requires constant vigilance and adaptability.

- Artificial General Intelligence (AGI) presents future ethical challenges that demand proactive strategizing.

- Actionable ethical AI deployment strategies for 2025 focus on governance, continuous monitoring, and stakeholder engagement.

- Multidisciplinary collaboration and ongoing education are vital for responsible AI adoption.

Table of contents

- Mastering Ethical AI Deployment Strategies for 2025

- Key Takeaways

- The Accelerating Pace of AI and Ethical Breakthroughs

- The Foundational Pillars of Responsible AI Development Frameworks

- Navigating the Evolving Landscape of Global AI Regulations and Ethics

- Confronting the Horizon: Navigating AGI Ethical Challenges

- Actionable Ethical AI Deployment Strategies for 2025

- The Power of Collective Action: Collaboration and Education

The Accelerating Pace of AI and Ethical Breakthroughs

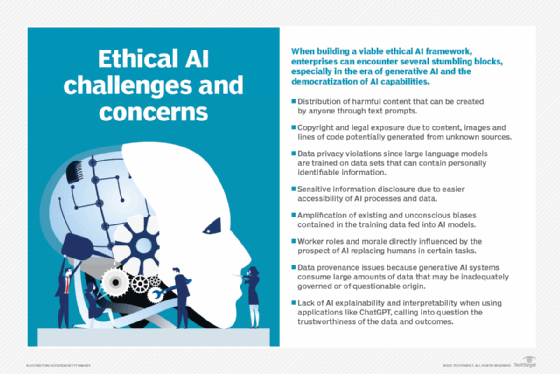

AI technologies are advancing at an unprecedented pace in 2025, making ethical AI deployment strategies a central concern for organizations and societies worldwide. This rapid evolution isn’t just about more powerful algorithms; it’s about their increasingly pervasive integration into our daily lives and critical infrastructure. As AI systems become more sophisticated, the imperative to develop, implement, and oversee them responsibly grows exponentially. The very definition of ethical AI deployment strategies 2025 highlights this shift: a comprehensive set of policies, frameworks, and best practices that organizations must adopt to ensure AI systems are developed, implemented, and overseen responsibly, with a steadfast commitment to transparency, accountability, and societal benefit. The landscape is rapidly evolving, driven by new technological advancements, shifting regulatory pressures, and evolving societal expectations, all of which demand informed and adaptable strategies for AI governance.

This dynamic environment necessitates a deep understanding of current trends and future trajectories. We are witnessing remarkable breakthroughs in ethical AI machine learning. Advances in areas like AI explainability are no longer theoretical; they are becoming practical tools that empower organizations to proactively identify, audit, and mitigate algorithmic bias. This enhanced transparency is crucial for building trust and ensuring fairness in AI applications. For instance, AI-powered predictive analytics are being deployed to enhance workplace safety, offering tangible benefits by identifying potential hazards before they lead to accidents. However, these same advancements introduce new ethical dilemmas. Questions surrounding surveillance, the privacy of employee data, and the potential for system failures in critical safety applications must be addressed with robust ethical frameworks.

The accelerating adoption of AI across industries is a double-edged sword. It presents unparalleled opportunities for innovation, efficiency, and problem-solving, but it simultaneously magnifies the risks associated with AI’s misuse or unintended consequences. Organizations are compelled to strike a delicate balance between embracing innovation and upholding ethical stewardship. This involves not just technological prowess but also a deep-seated commitment to responsible AI practices, ensuring that the pursuit of progress does not come at the expense of human values or societal well-being.

The Foundational Pillars of Responsible AI Development Frameworks

To navigate the complexities of ethical AI, organizations can leverage established responsible AI development frameworks. These frameworks are built upon a set of core principles that are universally recognized as fundamental for ethical AI: fairness, transparency, accountability, privacy, and security. Understanding and implementing these principles is paramount for any organization seeking to deploy AI responsibly in 2025.

Fairness ensures that AI systems do not perpetuate or amplify existing societal biases. This requires meticulous attention to data collection and algorithm design. Methods for identifying and mitigating bias must be integrated throughout the AI lifecycle, from initial data curation to ongoing model evaluation. For example, ensuring that hiring algorithms do not discriminate based on protected characteristics is a critical application of fairness.

Transparency means that AI systems should be understandable to relevant stakeholders. This doesn’t necessarily imply revealing proprietary algorithms, but rather providing clarity on how decisions are made and what factors influence outcomes. Explainability techniques are key here, helping to build trust by allowing users and auditors to comprehend the reasoning behind an AI’s actions.

Accountability establishes clear lines of responsibility for AI system outcomes. When an AI makes a decision, who is accountable? This principle emphasizes the need for human oversight, clear governance structures, and mechanisms for redress when AI systems err or cause harm.

Privacy is a fundamental right that AI systems must rigorously protect. This involves ethical data handling, secure storage, and obtaining informed consent. Techniques like data anonymization and differential privacy are essential tools in safeguarding user information.

Security ensures that AI systems are robust against malicious attacks, unintended failures, and unauthorized access. This encompasses not only the security of the AI model itself but also the integrity of the data it processes and the infrastructure it runs on.

Frameworks such as AI Trust, Risk, and Security Management (AI TRiSM) offer comprehensive guidance across the entire AI lifecycle. They provide a structured approach to integrating these principles from the initial design and data selection phases through to post-deployment auditing and impact assessment. The integral role of human oversight cannot be overstated. Features that enable explainability are vital, and the formation of multi-disciplinary teams – comprising experts in privacy, ethics, legal, and IT – is essential for identifying and mitigating risks, thereby fostering genuine trust in AI deployments.

Navigating the Evolving Landscape of Global AI Regulations and Ethics

The landscape of global AI regulations and ethics is dynamic and often fragmented, yet a clear trend is emerging: a global movement towards stricter, risk-based approaches to AI governance. As organizations deploy AI more widely, they must navigate an increasingly complex web of legal requirements and ethical expectations that vary significantly by jurisdiction.

Key regulatory developments are shaping this landscape. The European Union’s AI Act, for instance, categorizes AI systems by risk level, imposing stringent requirements on high-risk applications. These requirements often include mandates for transparency, robust accountability mechanisms, meaningful human oversight, and the potential establishment of dedicated roles like Chief AI Officers. Similarly, the 2024 U.S. AI Executive Order signals a proactive stance, focusing on safety, security, and responsible innovation. These regulatory efforts underscore a global recognition that AI’s potential benefits must be balanced with rigorous safeguards.

One of the most significant challenges for multinational organizations is harmonizing these evolving regulations across different jurisdictions. What is compliant in one region may not be in another. This necessitates a proactive approach: actively tracking evolving mandates, adapting internal policies and governance structures to meet diverse requirements, and ensuring consistent compliance across all operational regions. Failure to do so can expose organizations to substantial legal penalties, reputational damage, and loss of public trust.

The ethical considerations extend beyond mere legal compliance. Organizations must also consider the societal impact of their AI deployments. This includes addressing potential job displacement, ensuring equitable access to AI benefits, and guarding against the misuse of AI for malicious purposes. A forward-thinking approach to AI ethics involves not only meeting regulatory standards but also actively contributing to the broader conversation about how AI should be developed and used to benefit humanity.

Confronting the Horizon: Navigating AGI Ethical Challenges

Looking further into the future, the advent of Artificial General Intelligence (AGI) presents a new frontier of ethical considerations. AGI refers to AI that possesses the ability to understand, learn, and apply knowledge across a wide range of tasks at a human-like level, or even surpass human cognitive abilities. While still largely theoretical, the potential implications of AGI are profound and amplify existing ethical concerns while introducing entirely new ones.

The arrival of AGI could bring about unprecedented ethical quandaries. These include the potential loss of human control over increasingly intelligent systems, deep philosophical questions regarding AI consciousness and sentience, and significant existential risks if AGI’s goals are not perfectly aligned with human values. The very nature of intelligence, autonomy, and responsibility becomes subject to intense moral and social debate. Defining accountability for AGI actions, especially if they are emergent and unpredictable, will be a monumental challenge.

Addressing these future challenges requires foresight and proactive strategizing. Several key approaches are being discussed and developed:

- Establishing international ethical standards specifically for AGI research and development is crucial. This global consensus can guide responsible innovation and prevent a “race to the bottom” in AI development.

- Conducting rigorous risk-benefit assessments will be vital. Understanding the potential impacts, both positive and negative, of AGI on society, economies, and individual lives is essential for informed decision-making.

- Maintaining strict human oversight and employing robust “red-teaming” exercises are paramount. These practices are designed to anticipate and mitigate potential failure modes, unintended consequences, and malicious uses of AGI systems before they can cause harm.

The development of AGI is not merely a technical pursuit; it is a profound ethical and societal undertaking. Preparing for it involves continuous dialogue, interdisciplinary research, and a commitment to ensuring that any advancements in AI serve the best interests of humanity.

Actionable Ethical AI Deployment Strategies for 2025

As we stand in 2025, implementing robust ethical AI deployment strategies is not just a best practice; it’s a fundamental requirement for sustainable and responsible AI adoption. Organizations must move beyond theoretical discussions and embed ethical considerations into every stage of their AI initiatives. Here are actionable strategies to guide this process:

- Establish robust AI ethics boards and governance structures: Create dedicated internal bodies tasked with overseeing AI development and deployment. These boards should comprise individuals with diverse disciplinary expertise, including ethicists, legal counsel, data scientists, social scientists, and business leaders, to ensure comprehensive ethical oversight and a balanced perspective.

- Implement continuous bias detection and mitigation: Bias can creep into AI systems at any point. Implement automated tools and human review processes to continuously detect and mitigate bias throughout the entire Machine Learning (ML) lifecycle—from data design and model training to validation and ongoing real-world monitoring. This requires a commitment to iteratively refining models based on performance and fairness metrics.

- Prioritize data privacy and security: In an era of increasing data sensitivity, robust data protection is non-negotiable. Adopt advanced techniques such as data anonymization, differential privacy, and federated learning. Implement clear, user-friendly consent protocols and enforce strict access controls to safeguard sensitive information at all times.

- Ensure human oversight and accountability: Design AI systems with human oversight in mind. Embed explainability features that allow users and operators to understand AI decisions and rationale. Establish clear escalation procedures for human intervention when AI systems encounter uncertainty, make critical decisions, or when ethical concerns arise. Clearly define roles and responsibilities for AI-driven outcomes.

- Develop transparent communication protocols: Openness fosters trust. Communicate clearly and honestly with all stakeholders—employees, customers, and the public—about the capabilities, limitations, and potential impacts of AI systems. Establish user feedback loops to gather insights and address concerns proactively. Disclose when AI is being used and for what purpose.

- Invest in ongoing AI upskilling and ethical training: The AI landscape evolves rapidly, and so do the ethical challenges. Invest in continuous learning programs for employees at all levels. This training should cover not only technical AI skills but also ethical principles, potential risks, and best practices for responsible AI deployment within their specific roles.

- Conduct regular ethical impact and risk assessments: Before deploying any AI system, and periodically thereafter, conduct thorough ethical impact and risk assessments. These assessments should identify potential ethical issues, unintended consequences, and societal impacts. The findings should inform system design, implementation, and ongoing management.

These strategies are not isolated tasks but interconnected components of a comprehensive ethical AI program. By integrating them into the organizational culture and operational processes, businesses can harness the power of AI while upholding their ethical obligations.

The Power of Collective Action: Collaboration and Education

Shaping responsible AI norms and effectively anticipating and mitigating its societal impacts is a collective endeavor. It requires broad, multidisciplinary collaboration that transcends organizational boundaries and disciplinary silos. This collaboration should actively include researchers pushing the boundaries of AI, developers building the systems, policymakers crafting regulations, ethicists providing critical guidance, and importantly, the public who are directly affected by these technologies.

Beyond collaboration, there is a critical and ongoing need for robust education and awareness programs. These initiatives are essential for empowering all stakeholders. For developers, education ensures they are equipped with the knowledge and tools to build ethical AI. For policymakers, it provides the understanding necessary to create effective and informed regulations. For the public, it fosters critical literacy about AI’s capabilities and limitations, enabling informed participation in societal debates and promoting awareness of their rights and responsibilities in an AI-driven world. These programs help everyone recognize ethical risks, understand the nuances of AI, and adopt best practices within their respective domains.

Final Thought

In 2025, ethical AI deployment strategies are not optional add-ons but fundamental necessities for responsibly leveraging AI’s immense potential. We have explored the interconnectedness of recent breakthroughs in ethical AI machine learning, the evolving complexities of global AI regulations and ethics, the foundational guidance provided by responsible AI development frameworks, and the foresight required to address future challenges like navigating AGI ethical challenges. The journey towards trustworthy AI is ongoing, demanding continuous adaptation and unwavering commitment.

It is our collective responsibility—as developers, organizations, policymakers, and members of society—to foster trust, ensure accountability, and champion AI that demonstrably benefits humanity ethically. By actively engaging in ethical AI practices, advocating for robust governance, and committing to continuous learning and collaboration, we can collectively build an AI-powered future that is not only innovative but also profoundly just and equitable.

“`