Explosive AI Fairness & Ethics: Understanding Bias and Building Accountability

Estimated reading time: 7 minutes

Key Takeaways

- AI Fairness & Ethics are Crucial: Ensuring AI systems treat individuals equitably and align with societal values is non-negotiable, especially in high-stakes sectors like healthcare and finance.

- Bias is Pervasive: AI bias stems from flawed data, design oversights, and unchecked deployment, risking the amplification of historical inequalities.

- Actionable Solutions Exist: Mitigating bias involves using representative data, conducting transparency audits, fostering diverse teams, and leveraging specialized fairness tools.

- Accountability is Key: Adhering to responsible AI guidelines and regulations (like the EU AI Act), performing impact assessments, and establishing ethical oversight are vital for building trust.

- Ongoing Challenge: The field faces evolving risks (e.g., deepfakes) and a lack of universal standards, requiring continuous vigilance and collaboration.

Table of Contents

- 1. What Is AI Fairness & Ethics?

- 1.1 Why It Matters Now

- 2. AI Bias Explained: How Discrimination Creeps Into Algorithms

- 2.1 Root Causes of Bias

- 2.2 Real-World Examples

- 3. AI Discrimination Solutions: Strategies to Mitigate Harm

- 3.1 Three Key Strategies

- 3.2 Case Studies

- 4. AI-Powered Fairness Tools: Technology to the Rescue

- 4.1 Top Frameworks

- 4.2 Auditing Best Practices

- 5. Responsible AI Guidelines: Building Ethical Systems

- 5.1 Key Ethical Standards

- 5.2 Steps for Organizations

- 6. Challenges and the Future of Ethical AI

- 6.1 Unresolved Issues

- 6.2 The Path Forward

- 7. Conclusion: The Imperative of Explosive AI Fairness & Ethics

- 8. Frequently Asked Questions

Artificial intelligence (AI) systems are no longer confined to research labs. They actively shape critical decisions in healthcare, employment, finance, and even criminal justice, and in these domains fairness, equity, and ethics cannot be afterthoughts. AI's explosive spread into these areas makes fairness and ethics urgent. Inherent biases must be addressed, discrimination prevented, and the technology aligned with societal values. This post examines the multifaceted nature of AI bias and explores practical solutions to counteract it. It also outlines the tools and guidelines needed to establish robust accountability frameworks.

1. What Is AI Fairness & Ethics?

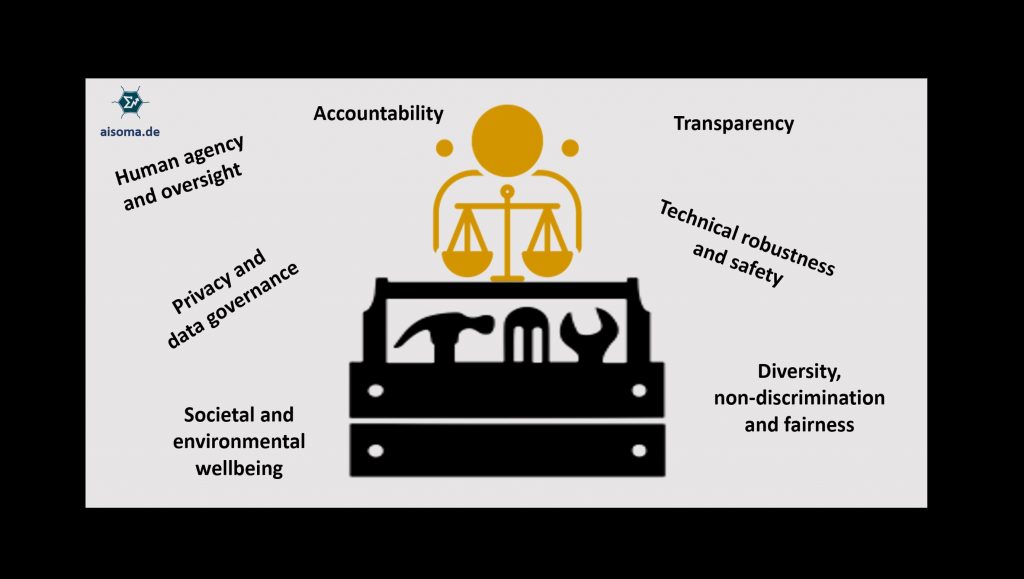

At its core, AI fairness is the commitment to designing and deploying algorithms that treat all individuals and groups equitably, without prejudice based on sensitive attributes like race, gender, age, sexual orientation, or socioeconomic status. It aims to prevent AI systems from perpetuating or even amplifying existing societal biases.

Complementing this, AI ethics provides a broader framework. It encompasses the principles and values governing the development and use of AI, ensuring these systems respect human rights, autonomy, privacy, transparency, and accountability. It’s about building AI that is not just *technically proficient* but also *morally sound* and *socially responsible*.

1.1 Why It Matters Now

- AI impacts high-stakes decisions: Algorithms are increasingly influencing life-altering outcomes, including medical diagnoses, treatment plans, loan application approvals or denials, hiring selections, and even parole recommendations.

- Risk of Amplifying Inequality: Without rigorous fairness checks and ethical oversight, these systems can inadvertently absorb and scale historical inequalities present in the data they are trained on, leading to discriminatory outcomes.

Consider the potential consequences: a biased hiring algorithm could systematically overlook highly qualified candidates from underrepresented backgrounds, hindering diversity and equal opportunity. Similarly, flawed criminal risk assessment tools, often trained on historically biased policing data, might unfairly assign higher risk scores to individuals from minority communities, perpetuating cycles of injustice. The potential for harm underscores the critical importance of embedding fairness and ethics into the AI lifecycle from the outset. *(Sources: Lumenova, Lumenalta)*

2. AI Bias Explained: How Discrimination Creeps Into Algorithms

AI bias manifests when algorithms consistently produce outcomes that are skewed, unfair, or discriminatory against certain groups. It’s crucial to understand that bias isn’t always intentional; it often emerges subtly from the data, design choices, or deployment context.

2.1 Root Causes of Bias

- Flawed Data: This is perhaps the most common source. Training datasets might overrepresent majority groups while underrepresenting minorities. They can also reflect historical societal inequities (e.g., fewer women in historical engineering roles leading an AI to deprioritize female candidates for technical jobs).

- Example: Facial recognition systems trained predominantly on datasets of lighter-skinned individuals have notoriously shown significantly lower accuracy rates when identifying darker-skinned individuals, leading to potential misidentification and harm.

- Design Gaps & Lack of Diversity: Development teams that lack diversity (in terms of gender, ethnicity, background, expertise) may inadvertently overlook specific use cases, cultural nuances, or potential harms affecting marginalized groups. Their assumptions and worldviews can unconsciously shape the algorithm’s behavior. *(Ethical oversight during design is critical, as seen in debates like the one surrounding alleged AI use in art: Wizards of the Coast refutes using AI for creating Magic: The Gathering artwork.)*

- Deployment Risks & Feedback Loops: An AI model might perform fairly in testing but exhibit bias when deployed in the real world due to unforeseen interactions or changing conditions. Furthermore, if biased predictions influence future data collection (e.g., biased policing leading to more arrests in certain areas, which then trains future policing AI), it can create harmful feedback loops that worsen bias over time if not carefully monitored and audited.
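A deliberately extreme toy simulation shows how a feedback loop can manufacture bias on its own. This is a hypothetical sketch, not a model of any real policing system, and it works under three assumptions:

- Two districts have the same true incident rate.
- Each day's patrols all go to whichever district has more *recorded* incidents.
- Incidents are only recorded where officers are present.

The function `simulate_feedback_loop` and all numbers are illustrative assumptions.

```python
def simulate_feedback_loop(true_rates, initial_records, days, patrols_per_day=10):
    """Hypothetical toy model of a runaway feedback loop (illustration only).

    true_rates: incidents found per patrol in each district (the ground truth).
    initial_records: incidents already on record per district.
    Each day every patrol goes to the district with the most recorded incidents,
    and new incidents are recorded only where patrols are present.
    """
    records = list(initial_records)
    for _ in range(days):
        # The allocation rule looks only at recorded history, never at true_rates.
        target = max(range(len(records)), key=lambda i: records[i])
        # Only the patrolled district produces new records, widening its lead.
        records[target] += true_rates[target] * patrols_per_day
    return records


# Both districts have the SAME true rate; district 0 starts with one extra record.
records = simulate_feedback_loop(true_rates=[0.5, 0.5], initial_records=[6, 5], days=30)
print(records)  # [156.0, 5]
```

The true rates are identical, yet district 0 ends up with about 97% of the records. A model retrained on that history would appear to “confirm” the original skew. Real allocation rules are rarely this winner-take-all, but the same reinforcing dynamic is why post-deployment auditing matters.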

2.2 Real-World Examples

- Gender Bias in Hiring: A well-known instance involved Amazon’s experimental recruiting tool, which penalized resumes containing words associated with women (e.g., “women’s chess club captain”) because it learned from historical hiring data dominated by male applicants.

- Racial Bias in Healthcare: Certain healthcare algorithms, designed to predict health needs based on past healthcare spending, inadvertently allocated fewer resources to Black patients. This occurred because historical spending patterns reflected systemic access barriers for Black patients, not their actual health needs. The algorithm mistook lower spending for lower need.

These examples highlight how easily bias can infiltrate AI systems with significant real-world consequences. *(Sources: Built In, Chapman University, TechTarget)*
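The healthcare case turns on the choice of training label: past spending was used as a stand-in for health need. A small, hypothetical sketch shows the mechanism. It makes these assumptions:

- Two groups have identical need levels.
- Group B's spending is halved to mimic an access barrier. The 0.5 factor is invented.
- Ten care-program slots are filled by ranking either on spending or on need.

The helper `enrollment_by_group` is illustrative only.

```python
def enrollment_by_group(patients, k, score):
    """Enroll the top-k patients ranked by `score`; return enrollments per group."""
    ranked = sorted(patients, key=score, reverse=True)
    counts = {}
    for patient in ranked[:k]:
        counts[patient["group"]] = counts.get(patient["group"], 0) + 1
    return counts


# Hypothetical data: both groups contain the same true need levels 1..10,
# but group B's recorded spending is halved by an assumed access barrier.
ACCESS_FACTOR = {"A": 1.0, "B": 0.5}
patients = [
    {"group": g, "need": need, "spending": need * ACCESS_FACTOR[g]}
    for g in ("A", "B")
    for need in range(1, 11)
]

# Ten program slots, allocated by the proxy label (spending) vs. by true need.
by_spending = enrollment_by_group(patients, k=10, score=lambda p: p["spending"])
by_need = enrollment_by_group(patients, k=10, score=lambda p: p["need"])
print(by_spending)  # {'A': 7, 'B': 3}
print(by_need)      # {'A': 5, 'B': 5}
```

Group membership is never used to rank anyone, yet ranking on spending gives group B only 3 of the 10 slots. The bias enters entirely through the label.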

3. AI Discrimination Solutions: Strategies to Mitigate Harm

Addressing AI bias and discrimination requires a proactive, multi-pronged approach integrated throughout the AI development and deployment lifecycle. Simply acknowledging the problem isn’t enough; concrete actions are necessary.

3.1 Three Key Strategies

- Curate and Use Representative Data:

- Actively collect and curate training datasets that accurately reflect the diversity of the population the AI will affect, considering factors like age, gender, race, ethnicity, geographic location, and socioeconomic status.

- Employ techniques like data augmentation or synthetic data generation to supplement data for underrepresented groups, ensuring the model learns patterns across all demographics.

- Example: Intentionally including comprehensive medical data from diverse ethnic groups can improve the accuracy and fairness of diagnostic AI and help prevent disparities in care quality. *(Explore more on AI’s impact in healthcare: Revolutionary AI Medical Breakthroughs: Transforming Healthcare.)*

- Conduct Rigorous Transparency Audits:

- Regularly evaluate algorithms using established fairness metrics (e.g., demographic parity, equal opportunity, equalized odds, predictive parity) to quantify potential biases across different subgroups.

- Analyze model performance beyond overall accuracy, specifically looking at error rates (like false positives and false negatives) for different demographic groups to identify disparities.

- Utilize explainability techniques (like SHAP or LIME) to understand *why* a model makes certain predictions, potentially revealing reliance on biased features.

- Build Diverse and Interdisciplinary Teams:

- Assemble teams composed not just of AI engineers and data scientists, but also ethicists, social scientists, domain experts, legal professionals, and representatives from affected communities.

- Diverse perspectives help identify potential blind spots, challenge assumptions, anticipate unintended consequences, and ensure the AI aligns with broader societal values and contexts.
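The audit step can be sketched in a few lines of plain Python. This is a minimal illustration, not any toolkit's API. It breaks three rates down by group: selection rate, true positive rate (TPR) and false positive rate (FPR). It then reports the largest between-group gap for each, which corresponds to common fairness definitions:

- Demographic parity: equal selection rates.
- Equal opportunity: equal TPR.
- Equalized odds: equal TPR and FPR.

The helper names and toy data are hypothetical.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate (TPR) and false positive rate (FPR)."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += p
        if t == 1:
            c["pos"] += 1
            c["tp"] += p  # predicted positive and actually positive
        else:
            c["neg"] += 1
            c["fp"] += p  # predicted positive but actually negative
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],
            # NaN marks a group with no positives (or negatives) in this sample.
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
        for g, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest between-group difference in `metric` (assumes no NaN values)."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical labels (1 = qualified) and model decisions (1 = approved) for two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
demographic_parity_gap = max_gap(rates, "selection_rate")
equal_opportunity_gap = max_gap(rates, "tpr")
# Equalized odds needs both TPR and FPR to match, so report the larger gap.
equalized_odds_gap = max(equal_opportunity_gap, max_gap(rates, "fpr"))
print(demographic_parity_gap, equal_opportunity_gap, equalized_odds_gap)  # 0.0 0.5 0.5
```

In this toy data both groups are approved at the same rate, so demographic parity holds. Yet qualified members of group b are approved twice as often as those of group a. Looking beyond a single metric is meant to catch exactly this kind of disparity. Fairlearn's `MetricFrame` offers a more complete version of this disaggregated view.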

3.2 Case Studies

- Healthcare Success: By implementing fairness-aware machine learning techniques and using more representative data, developers of a diagnostic algorithm significantly reduced prediction errors for Black patients, leading to more equitable health outcome predictions.

- Fairer Finance: Financial institutions adopting bias-mitigation strategies and fairness auditing in their loan application AI models have reported increased approval rates for qualified applicants from low-income or minority backgrounds, fostering greater financial inclusion. *(AI is transforming finance ethically in other ways too: Unstoppable AI Fraud Detection is Revolutionizing Finance.)*

These examples demonstrate that conscious effort and the right strategies can lead to measurably fairer AI systems. *(Sources: Lumenova, ATP Connect)*
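Fairness-aware techniques often start with the data. One widely used pre-processing method is reweighing (Kamiran and Calders), which AIF360 provides as its `Reweighing` algorithm. The case studies above do not say which technique was used; the sketch below simply illustrates the idea in plain Python on invented data. Each (group, label) pair gets weight P(group) * P(label) / P(group, label). Combinations that are rarer than independence would predict therefore count more during training. The helper names are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-sample weights w(g, y) = P(g) * P(y) / P(g, y), i.e. n_g * n_y / (n * n_gy).

    (group, label) combinations that are rarer than independence would predict
    get weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of a group's total weight carried by samples with label 1."""
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return positive / total


# Hypothetical training data: group "b" rarely carries the favourable label 1.
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for g in ("a", "b"):
    # Unweighted positive rates are 4/6 and 1/4; after reweighing both are 0.5.
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

After reweighing, both groups have the same weighted positive rate, matching the overall base rate of 0.5. Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`.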

4. AI-Powered Fairness Tools: Technology to the Rescue

Fortunately, the growing awareness of AI bias has spurred the development of specialized tools and frameworks designed specifically to help developers detect, measure, and mitigate fairness issues in machine learning models.

4.1 Top Frameworks

- IBM AI Fairness 360 (AIF360): An extensible open-source toolkit providing a comprehensive suite of over 70 fairness metrics and 10+ bias mitigation algorithms. It helps data scientists examine, report, and mitigate discrimination and bias in models throughout the AI application lifecycle.

- Google’s What-If Tool (WIT): An interactive visual interface integrated into TensorBoard and Jupyter/Colab notebooks. It allows developers to probe the behavior of trained models by exploring hypothetical scenarios, visualizing model predictions across different data points and subgroups, and analyzing fairness metrics.

- Fairlearn: Another popular open-source Python package (originally developed by Microsoft Research) that enables users to assess the fairness of AI systems (quantifying harms like disparities in allocation or quality of service) and apply mitigation algorithms. It integrates well with common machine learning libraries like scikit-learn.

These tools empower developers to move beyond intuition and apply quantitative methods to address fairness concerns systematically. *(Discover more AI innovations: 10 Cutting Edge AI Technologies Shaping the Future.)*

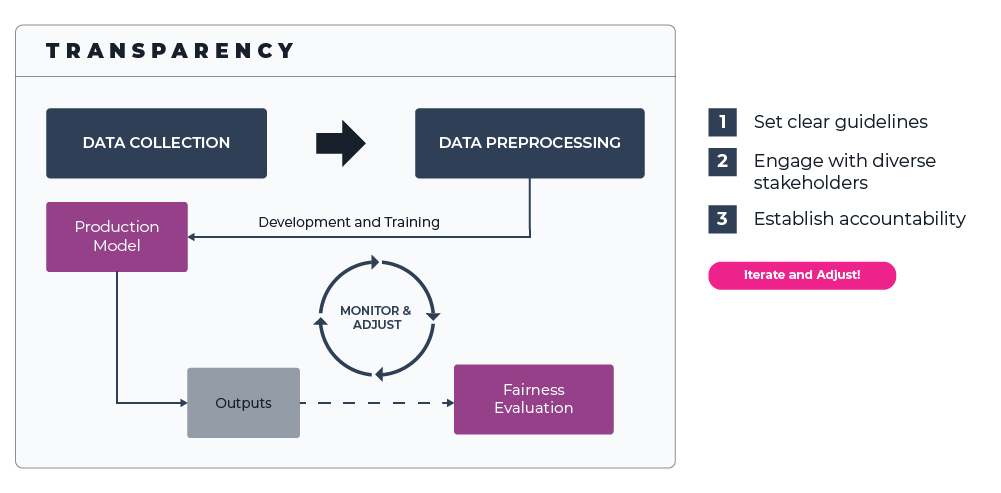

4.2 Auditing Best Practices

- Continuous Monitoring Post-Deployment: Fairness isn’t a one-time check. Monitor models continually after deployment for “drift” – changes in data distributions or user behavior that could introduce new biases or worsen existing ones over time. Regularly re-audit using fairness metrics.

- Adversarial Testing: Intentionally test the model with challenging or atypical data points designed to expose potential vulnerabilities and hidden biases that might not surface during standard testing on the main dataset.

- Red Teaming: Employ independent teams (internal or external) to actively try to “break” the model’s fairness – finding inputs or scenarios where it produces discriminatory outcomes.

- Documentation and Transparency: Thoroughly document the data used, model architecture, fairness metrics evaluated, mitigation steps taken, and known limitations. Transparency is key for accountability.

*(Sources: Lumenalta, Chapman University)*
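Adversarial testing and red teaming can start with something as simple as a counterfactual flip test. Change only the sensitive attribute of each record and count how often the prediction changes. The sketch below assumes the model is any Python callable that takes a dict. `counterfactual_flip_rate`, both models, and the applicant records are hypothetical. A zero flip rate is necessary but not sufficient, because the test cannot detect bias carried by proxies such as postcode. It complements the group metrics rather than replacing them.

```python
def counterfactual_flip_rate(model, records, attribute, values):
    """Share of records whose prediction changes when only `attribute` is swapped.

    `model` is any callable that maps a record (a dict) to a prediction.
    """
    flips = 0
    for record in records:
        original = model(record)
        for value in values:
            if value == record[attribute]:
                continue
            # Copy the record, changing nothing except the sensitive attribute.
            probe = {**record, attribute: value}
            if model(probe) != original:
                flips += 1
                break
    return flips / len(records)


# Hypothetical models: one applies a different threshold by gender, one does not.
def biased_model(applicant):
    threshold = 600 if applicant["gender"] == "m" else 650
    return int(applicant["credit_score"] >= threshold)


def neutral_model(applicant):
    return int(applicant["credit_score"] >= 620)


applicants = [
    {"credit_score": 580, "gender": "m"},
    {"credit_score": 620, "gender": "f"},
    {"credit_score": 640, "gender": "m"},
    {"credit_score": 700, "gender": "f"},
]
print(counterfactual_flip_rate(biased_model, applicants, "gender", ["m", "f"]))   # 0.5
print(counterfactual_flip_rate(neutral_model, applicants, "gender", ["m", "f"]))  # 0.0
```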

5. Responsible AI Guidelines: Building Ethical Systems

Alongside technical tools, establishing clear ethical guidelines and governance frameworks is essential for fostering responsible AI development and deployment. Several international bodies and jurisdictions are developing standards and regulations.

5.1 Key Ethical Standards

- EU AI Act: A landmark regulation, adopted in 2024, that categorizes AI systems based on risk. It imposes strict requirements on high-risk applications (e.g., in critical infrastructure, employment, law enforcement) concerning data quality, transparency, human oversight, and robustness. It outright bans certain AI uses deemed to pose unacceptable risk. Examples are social scoring and, with narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement.

- OECD AI Principles: Adopted by numerous countries, these principles promote AI that is innovative and trustworthy. They emphasize inclusive growth, sustainable development, human-centred values, fairness, transparency and explainability, robustness, security, and accountability.

- NIST AI Risk Management Framework (US): Provides voluntary guidance to help organizations manage risks associated with AI systems, focusing on principles like fairness, accountability, transparency, reliability, and privacy.

*(For insights into specific national approaches, see Mind-Blowing AI Regulations in the UK: The Ultimate Guide to Ethics.)*

5.2 Steps for Organizations

- Conduct Bias Impact Assessments: Before and during development, systematically assess potential fairness risks. Audit the data sources, model design, intended use case, and potential outcomes for disparities across different demographic groups. Document findings and mitigation plans.

- Establish Ethical Review Boards or Councils: Create internal oversight committees with diverse expertise (technical, legal, ethical, domain-specific) to review and approve AI projects, particularly high-risk ones. These boards ensure alignment with organizational values and external regulations.

- Promote Public Reporting and Transparency: Where appropriate (respecting privacy and proprietary information), publicly share information about AI systems, their intended uses, fairness assessments, and performance metrics. This transparency builds public trust and accountability.

- Invest in Training and Awareness: Educate developers, product managers, and leadership about AI ethics, bias, fairness metrics, and responsible AI practices.

*(Sources: Vation Ventures, Lumenalta)*
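In hiring contexts, a common first screen within a bias impact assessment is the “four-fifths rule” from the US Uniform Guidelines on Employee Selection Procedures. If a group's selection rate is below 80% of the highest group's rate, the process is flagged for possible adverse impact. It is a rule of thumb, not a legal finding. The sketch below only shows the arithmetic; the helper names and counts are hypothetical.

```python
def adverse_impact_ratios(selected, applicants):
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected[g] / applicants[g] for g in applicants}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


def flag_adverse_impact(selected, applicants, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths (0.8) screening threshold."""
    ratios = adverse_impact_ratios(selected, applicants)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical outcomes from an AI-assisted screening step.
applicants = {"group_x": 200, "group_y": 150}
selected = {"group_x": 60, "group_y": 30}

print(adverse_impact_ratios(selected, applicants))
print(flag_adverse_impact(selected, applicants))  # ['group_y']
```

Here group_y's selection rate (20%) is about two-thirds of group_x's (30%). It is therefore flagged, which would warrant a closer look at the data and model.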

6. Challenges and the Future of Ethical AI

Despite significant progress in awareness, tools, and guidelines, the path towards truly fair and ethical AI is ongoing and faces substantial challenges.

6.1 Unresolved Issues

- Lack of Universal Definitions and Standards: The very definition of “fairness” can be complex and context-dependent. Different fairness metrics sometimes conflict, meaning optimizing for one might worsen another. Achieving global consensus on standards remains difficult due to cultural and legal variations.

- Technical Complexity: Mitigating bias without sacrificing model accuracy or utility can be technically challenging. Explainability methods for complex models like deep neural networks are still evolving.

- Evolving Risks: Rapid advancements, particularly in areas like generative AI (e.g., deepfakes, large language models), introduce new ethical dilemmas concerning misinformation, authenticity, consent, and potential misuse faster than regulations can adapt.

- Accountability Gaps: Determining liability when an autonomous AI system causes harm can be legally ambiguous. Establishing clear lines of responsibility across developers, deployers, and users is still a work in progress.
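Fairness metrics can conflict even in a trivial case. Assume, hypothetically, two groups whose base rates of the positive outcome differ (50% and 20%), and a perfect classifier that predicts every label correctly. Its TPR and FPR are identical across groups, so equalized odds holds. Its selection rates, however, equal the base rates, so demographic parity fails. The data and the helper `group_metrics` are illustrative.

```python
def group_metrics(y_true, y_pred):
    """Selection rate, TPR and FPR for a single group."""
    on_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    on_negatives = [p for t, p in zip(y_true, y_pred) if t == 0]
    return {
        "selection_rate": sum(y_pred) / len(y_pred),
        "tpr": sum(on_positives) / len(on_positives),
        "fpr": sum(on_negatives) / len(on_negatives),
    }


# Hypothetical groups with different base rates of the positive outcome.
labels_a = [1] * 5 + [0] * 5  # 50% positive
labels_b = [1] * 2 + [0] * 8  # 20% positive

# A perfect classifier: every prediction equals the true label.
a = group_metrics(labels_a, labels_a)
b = group_metrics(labels_b, labels_b)

equalized_odds_holds = a["tpr"] == b["tpr"] and a["fpr"] == b["fpr"]
demographic_parity_gap = a["selection_rate"] - b["selection_rate"]
print(equalized_odds_holds, round(demographic_parity_gap, 2))  # True 0.3
```

Closing the 0.3 gap would require approving some negatives in group B or rejecting some positives in group A, which breaks equalized odds and lowers accuracy. Which metric to prioritize is therefore a context-dependent, partly normative choice.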

6.2 The Path Forward

- Advocate for Agile and Adaptable Regulations: Policymakers must strive for regulations that are robust enough to prevent harm but flexible enough to adapt to rapid technological advancements without stifling innovation.

- Foster Multistakeholder Collaboration: Continuous dialogue and collaboration between governments, technology companies, academia, civil society organizations, and affected communities are essential to developing balanced and effective solutions.

- Prioritize Research and Education: Continued investment in research on fairness metrics, bias mitigation techniques, explainability, and the societal impacts of AI is crucial. Education and awareness programs are needed at all levels.

- Embed Ethics by Design: Shift towards proactively integrating ethical considerations and fairness checks throughout the entire AI lifecycle, rather than treating them as an afterthought or compliance checkbox.

*(Sources: 101 Blockchains, YouTube)*

7. Conclusion: The Imperative of Explosive AI Fairness & Ethics

Building fair and ethical AI systems is not merely a technical challenge or a compliance hurdle; it is a fundamental societal necessity. As AI continues its explosive growth and integration into the fabric of our lives, ensuring it operates equitably and aligns with human values is paramount. By actively implementing AI discrimination solutions, leveraging sophisticated AI-powered fairness tools, adhering to robust responsible AI guidelines, and fostering a culture of transparency and accountability, we can strive to create technology that benefits everyone, not just a select few.

The future impact of AI—whether it exacerbates inequality or promotes equity—depends heavily on the choices we make today. Collaboration, continuous learning, rigorous auditing, and unwavering commitment to ethical principles are the cornerstones of responsible innovation.

Act Now: Take concrete steps within your organization. Audit your AI models for bias. Diversify your development teams and include ethical expertise. Align your practices with emerging global ethics standards and regulations. The time for proactive engagement with explosive AI fairness & ethics is unequivocally now.

*(Sources: Lumenova, ATP Connect)*

8. Frequently Asked Questions

- Q1: What’s the difference between AI Fairness and AI Ethics?

AI Fairness specifically focuses on ensuring algorithms do not produce discriminatory outcomes based on sensitive characteristics (like race, gender, etc.). AI Ethics is broader, encompassing fairness but also including principles like transparency, accountability, privacy, security, human autonomy, and societal well-being in the design and use of AI.

- Q2: Can AI bias ever be completely eliminated?

Completely eliminating all forms of bias is likely impossible, as data often reflects complex societal realities and different fairness definitions can conflict. However, the goal is to identify, measure, understand, and significantly *mitigate* harmful biases through careful data curation, algorithmic design, auditing, and adherence to ethical guidelines. It’s an ongoing process of improvement, not a one-time fix.

- Q3: Who is responsible when a biased AI causes harm?

Determining responsibility is complex and evolving legally. It could potentially involve multiple parties, including the developers who created the algorithm, the organization that deployed it, the providers of the training data, or even users, depending on the specifics of the situation and the applicable legal framework. Establishing clear accountability frameworks is a key goal of responsible AI governance.

- Q4: Are AI fairness tools difficult for non-experts to use?

While some tools require data science expertise (like Fairlearn or AIF360), others, like Google’s What-If Tool, offer more intuitive visual interfaces designed for broader accessibility. The trend is towards creating more user-friendly tools, but a foundational understanding of fairness concepts and metrics is still generally needed for effective interpretation and application.

- Q5: How can small businesses or startups implement AI ethics practices?

Even without large resources, small organizations can adopt ethical practices. Start by fostering awareness of bias and fairness issues within the team. Carefully scrutinize data sources for potential biases. Utilize open-source fairness toolkits. Document decision-making processes. Prioritize transparency where possible. Focus on low-risk AI implementations initially and conduct thorough testing before deploying systems that impact people significantly. Building diverse teams, even small ones, is also beneficial.