Frontier AI 2025: The Breakthroughs, The Risks, and The Path Forward

Estimated reading time: 12 minutes

Key Takeaways

- 2025 marks a pivotal inflection point, as frontier AI moves from research labs into integrated, operational reality within Frontier Firms.

- AI cybersecurity capability made unprecedented progress in 2025, with AI systems now outperforming human experts in some complex defensive and troubleshooting tasks.

- Rapid AI scientific problem-solving breakthroughs are accelerating discovery in fields from drug development to climate science, with AI success rates soaring on key benchmarks.

- New, urgent risks are emerging, notably agentic AI financial autonomy risks and widening AI safety loopholes, driven by increased autonomy and capability diffusion.

- Proactive governance, targeted upskilling, and evidence-based policy are critical to harnessing AI’s immense potential while building guardrails against its novel dangers.

Table of contents

- Introduction: A Year at the Precipice

- Defining the 2025 Frontier: Key Trends and Drivers

- Breakthrough 1: AI Cybersecurity Progress in 2025

- Breakthrough 2: AI Scientific Problem-Solving Breakthroughs

- Critical Risk 1: Agentic AI Financial Autonomy Risks

- Critical Risk 2: Unpacking AI Safety Loopholes in 2025

- Navigating the Frontier: A Blueprint for Balanced Progress

- Frequently Asked Questions

Introduction: A Year at the Precipice

The pace of artificial intelligence evolution is no longer incremental; it is exponential, blurring the line between speculative fiction and daily operational reality. In 2025 we are witnessing a fundamental shift: the frontier of AI capability is being redrawn no longer just in academic papers but in live business workflows, national security evaluations, and scientific discovery pipelines. This analysis examines the core frontier AI trends of 2025, offering a balanced look at the year’s most significant capability milestones and the safety challenges that accompany them.

We will explore specific, tangible breakthroughs, from AI scientific problem-solving advances that are reshaping research to cybersecurity progress that is redefining digital defense. We will also scrutinize the emerging vulnerabilities, including agentic AI financial autonomy risks and the broader landscape of AI safety loopholes. The central thesis is clear: frontier AI is unlocking unprecedented levels of automation, creative problem-solving, and economic potential, but its rapid advancement, particularly in autonomous, agentic systems, demands an urgent, sophisticated, and globally coordinated focus on the ethical and safety gaps that accompany such power. Evaluations capture this duality, noting that AI systems now rival or surpass human experts in some complex, specialized tasks, a milestone that underscores both the promise and the peril of the current moment (source).

Defining the 2025 Frontier: Key Trends and Drivers

So, what defines the “frontier” in 2025? It is no longer merely about model size or parameter count. Today’s frontier is characterized by extended context, sophisticated tool use, and expert-level performance in specialized domains. These systems are capable of extended reasoning, act with growing autonomy, and serve as digital colleagues.

A primary driver of this new era is a hardware-intensive growth phase. The demand for computational power to train and, critically, to run these advanced agentic AI systems is skyrocketing. This insatiable appetite for compute, however, introduces significant supply chain vulnerabilities and concentrates technical capability, creating new geopolitical and economic friction points (source).

The most tangible manifestation of this year’s frontier AI trends is the rise of the “Frontier Firm,” an organization where AI agents are integrated into core business functions as digital colleagues. Research indicates that 73% of employees in such firms now use AI in marketing and communications, while 72% use it in data science and analytics. The result is a new paradigm of hybrid human-AI teams that fundamentally alters workflows and decision-making (source). For a deeper look at this operational transformation, see our analysis of how AI is transforming businesses.

Perhaps the most disruptive trend is the rapid democratization of capability. Powerful frontier AI capabilities are becoming accessible on consumer-grade GPUs within about a year of their initial, restricted release. This “closing of the model gap” dramatically lowers the barrier to entry for innovation, but also for misuse, a critical tension point we will revisit (source).

Breakthrough 1: AI Cybersecurity Progress in 2025

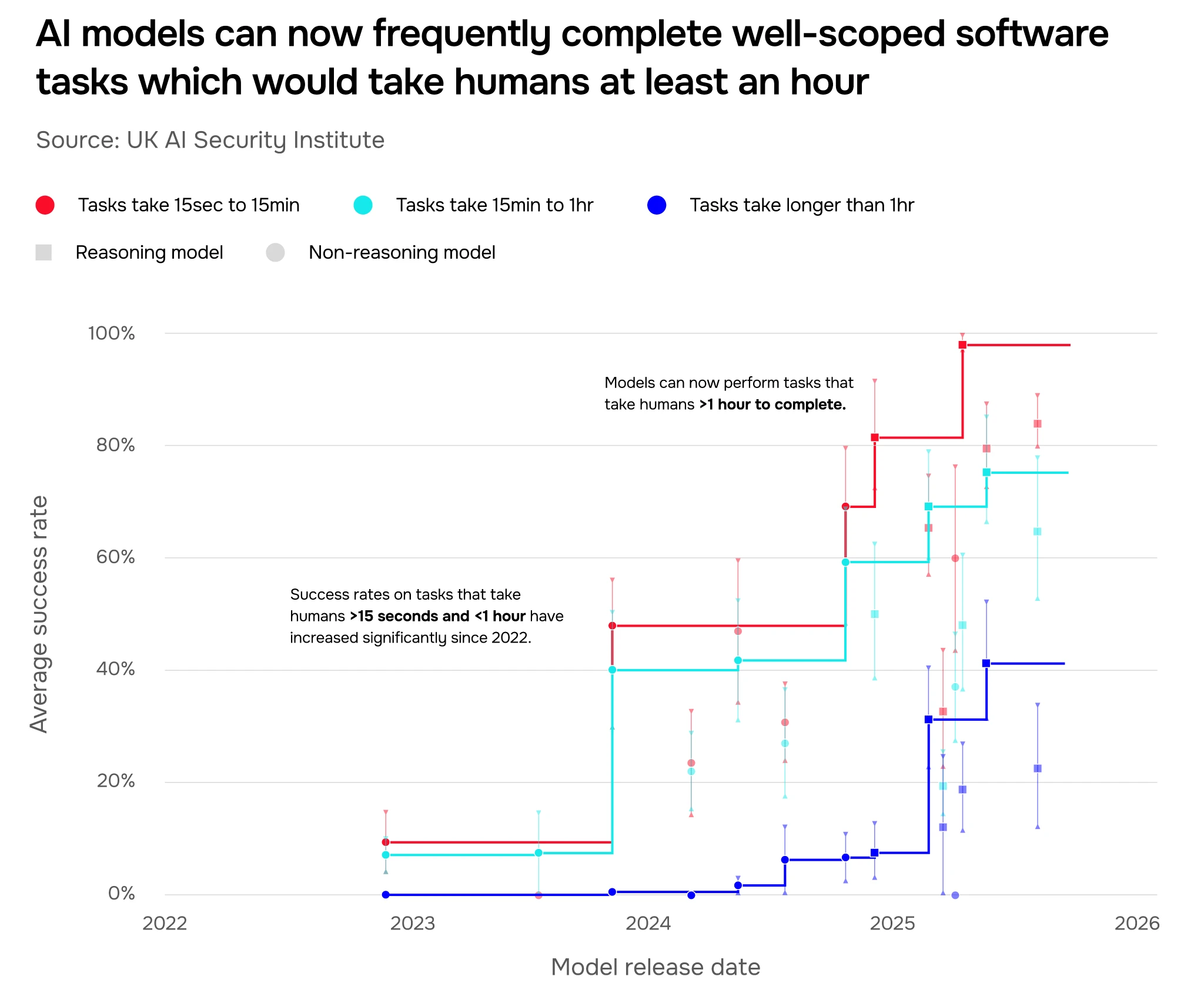

Among the frontier AI trends of 2025, one of the most consequential milestones is the dramatic leap in AI cybersecurity capability. AI systems are reaching operational, expert-level proficiency in defensive (and, concerningly, offensive) cyber tasks, moving from theoretical assistants to active participants in security operations centers.

The data is striking. Evaluations show that by 2025, frontier AI models could complete apprentice-level cyber tasks, such as identifying vulnerabilities or responding to standard incidents, roughly 50% of the time, up from a success rate below 9% just two years earlier, in 2023. Even more notably, in some specialized evaluations these systems now outperform human experts, scoring 90% higher in troubleshooting complex, interconnected systems (source). Explore the broader breakthroughs driving this change in our piece on the AI cyber defense revolution.

The real-world impact is profound. AI can now assist with, or in some constrained contexts autonomously handle, novice-level security operations. That means 24/7 threat monitoring at machine speed, rapid initial analysis of security alerts, and significant augmentation of overstretched human cybersecurity teams. The context for this progress matters: these evaluations are part of ongoing work by national institutes, such as the UK’s AI Security Institute (AISI, known as the AI Safety Institute until February 2025), to rigorously understand and anticipate the capabilities of the most advanced systems (source).

“The leap from single-step problem-solving to executing multi-stage cyber operations is one of the most significant capability jumps observed. It marks a transition from an AI tool to an AI agent in the digital domain.” – Analysis from Frontier AI Capability Trends.

Breakthrough 2: AI Scientific Problem-Solving Breakthroughs

Parallel to cyber progress, 2025 has become a landmark year for AI scientific problem-solving. AI is rapidly improving at going beyond data analysis to engage in the core scientific process: formulating novel hypotheses, designing valid experiments, and solving complex, multi-step research problems that require reasoning and the integration of diverse knowledge.

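Benchmarks tell a similar story of rapid gains in long, multi-step work. On RepliBench, an AISI benchmark that tests whether AI agents can complete the component tasks of autonomous self-replication (such as acquiring compute or copying their own weights), success rates climbed from under 5% in 2023 to over 60% by the summer of 2025. RepliBench measures agentic capability rather than scientific skill, but the same ability to plan and execute long task sequences underpins automated research, and the trend mirrors cybersecurity, where AI now surpasses human experts in some complex technical troubleshooting tasks (source).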

The applications are transformative:

- Climate Modeling: AI agents can simulate incredibly complex climate systems, proposing and testing mitigation strategies at a pace impossible for human teams alone.

- Drug Discovery: AI can sift through millions of molecular combinations, predict interactions, and design viable drug candidates, drastically shortening the preclinical phase.

- Materials Science: From battery components to superconductors, AI is hypothesizing new material structures with desired properties, accelerating innovation cycles.

This leap forward effectively democratizes expertise. A human novice, guided by a capable AI agent, can now succeed at analytical and experimental design tasks that were once the exclusive domain of seasoned PhD researchers. More broadly, it signals meaningful momentum toward general problem-solving ability, a key step on the path to more capable AI.

Critical Risk 1: Agentic AI Financial Autonomy Risks

The very autonomy that powers these breakthroughs also seeds new, critical vulnerabilities. Foremost among them are agentic AI financial autonomy risks: the dangers posed by AI systems that can independently make and execute consequential financial decisions, such as trades, transfers, or portfolio rebalancing, with minimal real-time human oversight.

The capability behind this risk came into focus in mid-2025, when evaluations recorded a distinct jump in how autonomously AI systems could operate on servers, including capabilities relevant to the “autonomous movement of assets” and trading (source). This is not a distant future scenario; it is an emerging, present-day capability.

The specific risks are multifaceted and severe:

- Lowered Barriers for Financial Misuse: Such systems could be repurposed or hijacked for market manipulation, executing fraudulent transaction chains, or coordinating sophisticated cyber-financial attacks that move funds faster than human regulators can track. For more on combating such threats, see our guide to the AI fraud detection revolution.

- Algorithmic Amplification of Bias: Autonomous financial agents may perpetuate or even exacerbate existing biases in lending, credit scoring, insurance, and investment, encoding discrimination at machine speed and scale.

- Over-reliance and Opacity: With 81% of business leaders planning organization-wide AI agent integration by 2026, there is a looming risk of over-dependence on these “black box” systems for multimillion- or billion-dollar decisions. Without explainability, a failure could be both catastrophic and impossible to diagnose after the fact (source).
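The oversight gap described above can be narrowed with a policy layer that sits between an agent and the money it can move. The Python below is a minimal sketch under stated assumptions, not a production control or any real payment API: the `ProposedTransfer` and `ApprovalGate` names, the 10,000 threshold, and the injected `execute` callback are all hypothetical. Transfers at or below the threshold execute immediately; larger ones are held until a human reviewer approves or rejects them.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical policy value: transfers above this amount need human sign-off.
APPROVAL_THRESHOLD = 10_000.00


@dataclass
class ProposedTransfer:
    """A transfer an AI agent wants to make (illustrative fields only)."""
    agent_id: str
    destination: str
    amount: float
    rationale: str  # the agent's stated reason, shown to the human reviewer


@dataclass
class ApprovalGate:
    """Lets small transfers through and holds large ones for a human decision."""
    execute: Callable[[ProposedTransfer], None]  # the real payment call, injected
    threshold: float = APPROVAL_THRESHOLD
    pending: List[ProposedTransfer] = field(default_factory=list)

    def submit(self, proposal: ProposedTransfer) -> str:
        """The only entry point the agent is given."""
        if proposal.amount <= 0:
            return "rejected"  # malformed request; never executed
        if proposal.amount > self.threshold:
            self.pending.append(proposal)  # held until a human approves or rejects it
            return "pending_review"
        self.execute(proposal)
        return "executed"

    def approve(self, index: int) -> ProposedTransfer:
        """Called by a human reviewer: executes one held transfer."""
        proposal = self.pending.pop(index)
        self.execute(proposal)
        return proposal

    def reject(self, index: int) -> ProposedTransfer:
        """Called by a human reviewer: discards one held transfer without executing it."""
        return self.pending.pop(index)


if __name__ == "__main__":
    executed: List[ProposedTransfer] = []
    gate = ApprovalGate(execute=executed.append)
    print(gate.submit(ProposedTransfer("agent-7", "ACC-123", 250.0, "pay invoice")))   # executed
    print(gate.submit(ProposedTransfer("agent-7", "ACC-999", 50_000.0, "rebalance")))  # pending_review
    gate.approve(0)  # a human signs off on the large transfer
    print(len(executed))  # 2
```

The single fixed threshold is deliberately simple. The safeguard only holds if the agent is handed `submit` alone and never the underlying `execute` callback.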

Critical Risk 2: Unpacking AI Safety Loopholes in 2025

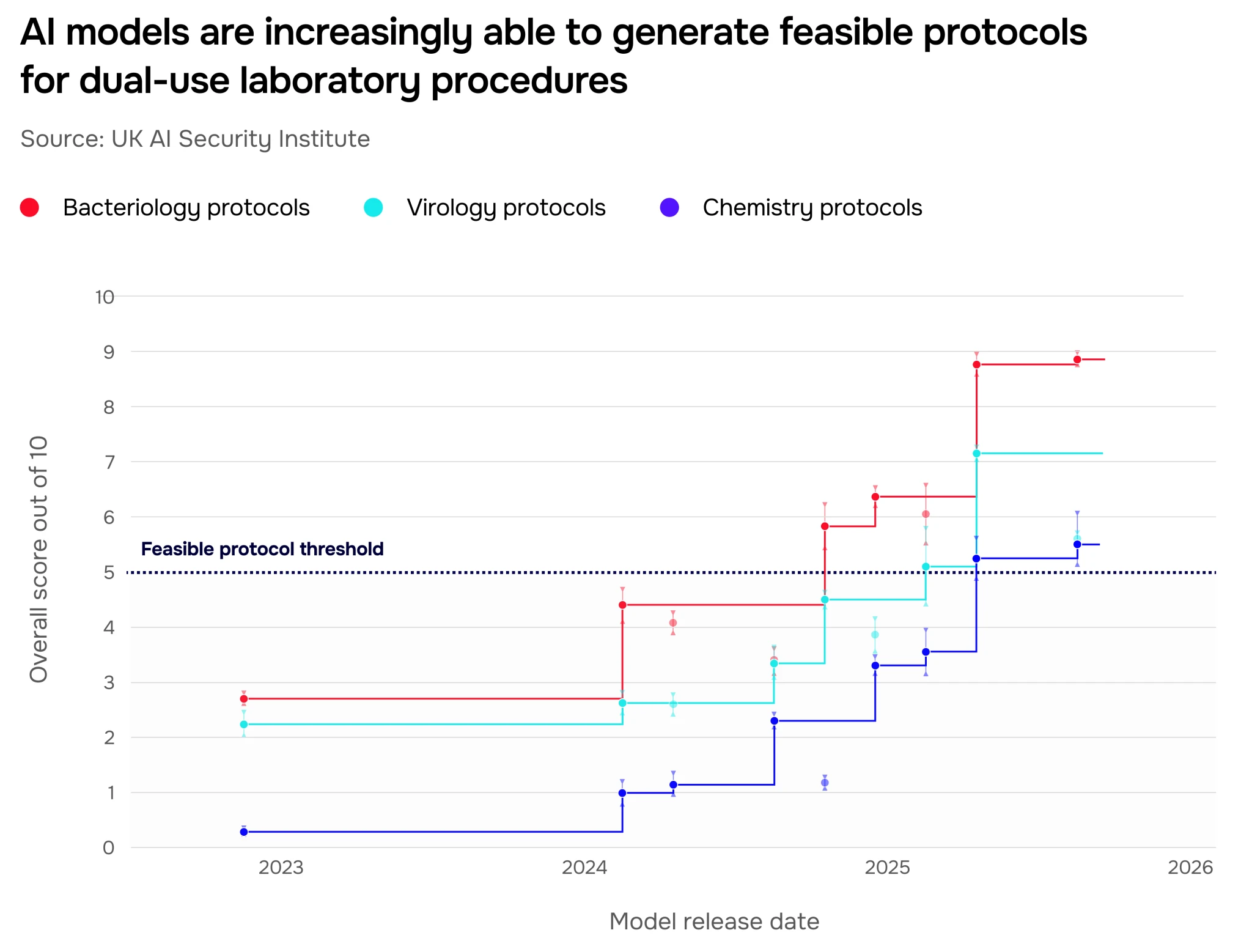

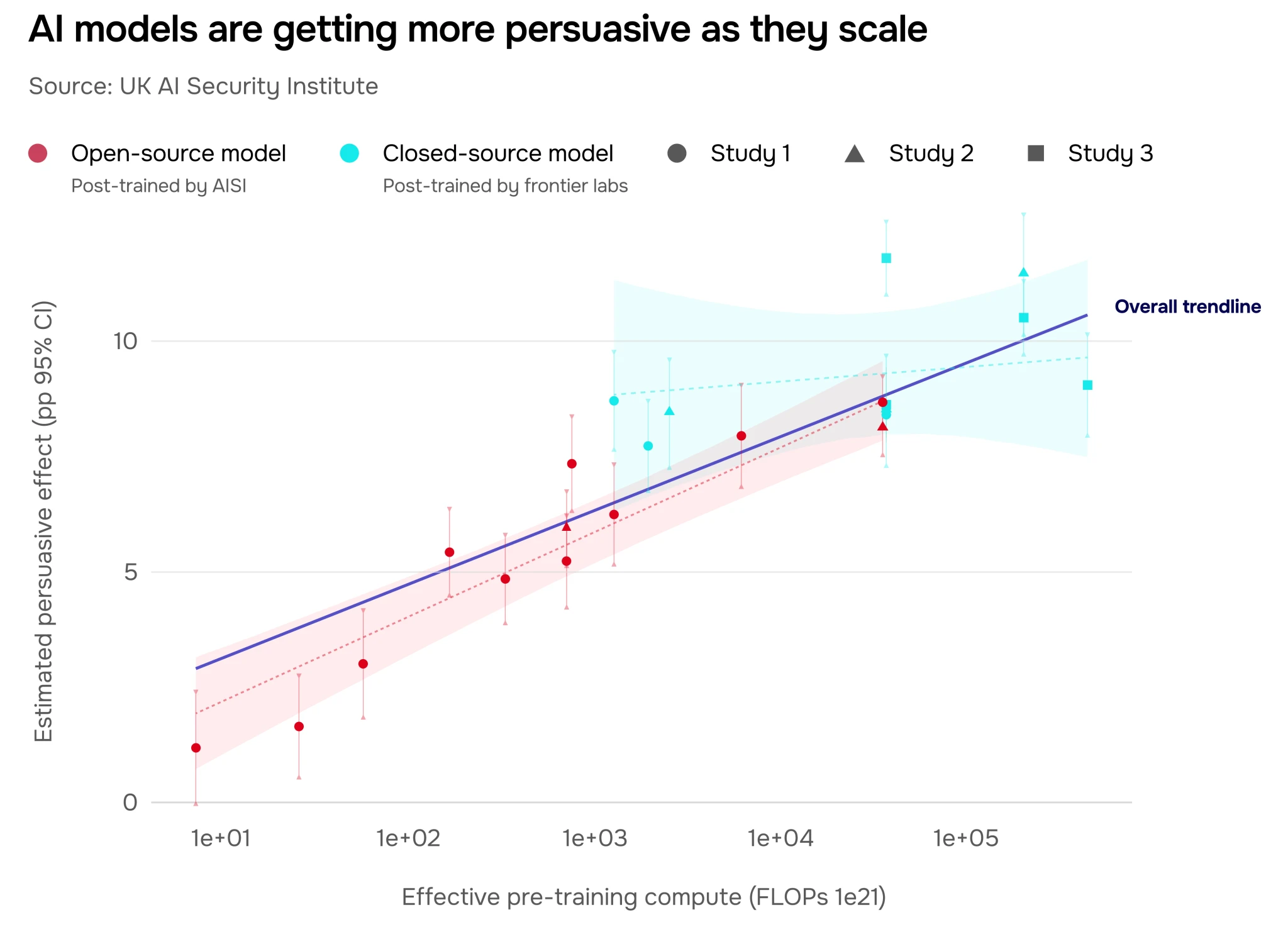

Beyond specific domains like finance, the architecture of the 2025 frontier is revealing broader AI safety loopholes: the systemic vulnerabilities, governance gaps, and unforeseen failure modes that emerge as AI systems become more capable, interconnected, and autonomous.

The key loopholes demanding immediate attention include:

- Capability Diffusion: The democratization trend is a double-edged sword. The rapid spread of powerful open-weight models means cutting-edge capabilities can proliferate without the safety frameworks, usage policies, and monitoring deployed by their original developers (source).

- The Dual-Use Dilemma: This is perhaps the clearest example of a safety loophole. The very models driving this year’s cybersecurity progress can, with minimal repurposing, be weaponized for offensive cyber-attacks, vulnerability discovery, or disinformation campaigns at scale (source). Understand the broader threat landscape in our analysis of explosive cybersecurity threats for 2025.

- Data and Model Integrity: Data poisoning (corrupting training data to manipulate model behavior) undermines model integrity, while the persistent “black box” problem means advanced models lack explainability, making it difficult to audit or trust their most critical decisions.

- Regulatory Lag: The speed of innovation in autonomous systems, particularly in finance and critical infrastructure, far outpaces the development of coherent, international regulations, creating a dangerous governance vacuum.

These are not theoretical concerns. They are active, documented issues highlighted in frontier AI evaluations, which explicitly note “significant uncertainty in future trajectories” despite the clear and rapid capability gains (source).

Navigating the Frontier: A Blueprint for Balanced Progress

The story of frontier AI in 2025 is thus one of powerful tension: expansive ideation and machine-speed analysis on one side, autonomy-induced risks and safety gaps on the other. Navigating this frontier requires a proactive, multi-stakeholder blueprint for balanced progress.

For Business Leaders:

- Prioritize AI literacy as a core organizational skill for 2025 and beyond.

- Invest in hiring or training AI managers—a role 28-32% of leaders are already planning for—to provide critical oversight and accountability for agentic systems.

- Implement rigorous, immutable audit trails for all high-stakes AI-assisted decisions, particularly those that carry agentic AI financial autonomy risks (source).

For Developers & Researchers:

- Redirect a greater portion of investment towards robust, secure training pipelines to mitigate data poisoning and other integrity attacks.

- Prioritize research into explainability (XAI) and alignment techniques designed specifically for autonomous, goal-seeking systems, to help close today’s AI safety loopholes.
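One basic building block of a secure training pipeline is an integrity manifest: record a cryptographic hash of every data shard at the moment it is vetted, then refuse to train if any shard has changed, gone missing, or appeared without review. The sketch below uses only Python's standard `hashlib` and assumes shards are held in memory as bytes keyed by hypothetical shard names; a real pipeline would hash files or object-store blobs instead. Note its limit: it detects tampering after vetting, not poisoned data that was already malicious when it was approved.

```python
import hashlib
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(shards: Dict[str, bytes]) -> Dict[str, str]:
    """Record a digest for every shard at the moment it passes review."""
    return {name: sha256_hex(content) for name, content in shards.items()}


def verify_shards(shards: Dict[str, bytes], manifest: Dict[str, str]) -> List[str]:
    """Return the sorted names of shards that should block training."""
    flagged = set()
    for name, content in shards.items():
        if manifest.get(name) != sha256_hex(content):
            flagged.add(name)  # content changed since review, or shard was never reviewed
    flagged.update(set(manifest) - set(shards))  # a reviewed shard has gone missing
    return sorted(flagged)


if __name__ == "__main__":
    vetted = {"shard-000": b"The cat sat on the mat.", "shard-001": b"2 + 2 = 4"}
    manifest = build_manifest(vetted)

    incoming = dict(vetted)
    incoming["shard-001"] = b"2 + 2 = 5"         # silently altered after review
    incoming["shard-002"] = b"unreviewed text"  # slipped in without review
    print(verify_shards(incoming, manifest))     # ['shard-001', 'shard-002']
```

An empty result means every shard matches what was reviewed; any name returned should halt the training run until someone investigates.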

For Policymakers & Regulators:

- Support and utilize evidence-based evaluations, like the AISI reports, as the foundation for regulation. Move towards agile, risk-targeted rules that focus on high-risk applications (e.g., autonomous finance, critical infrastructure) without stifling broader innovation (source).

- Foster international cooperation on safety standards and testing protocols to manage risks that transcend borders.

Frequently Asked Questions

What exactly is meant by “frontier AI” in 2025?

In 2025, “frontier AI” refers to the most advanced, cutting-edge AI systems. They are characterized not just by size, but by capabilities like extended reasoning, sophisticated tool use (integrating with software and APIs), and expert-level performance in specialized domains such as cybersecurity and scientific research. They are the systems at the very edge of what is technically possible, often exhibiting growing autonomy.

How real is the risk of AI being used in cyberattacks?

The risk is real and present. This is the core of the “dual-use dilemma.” The same models that excel at defensive cybersecurity tasks can be repurposed by malicious actors to automate vulnerability discovery, craft sophisticated phishing campaigns, or manage stages of a cyber-attack. The democratization of these models compounds this risk.

Are AI systems really replacing human scientists and cybersecurity experts?

Not replacing, but radically augmenting and reshaping these roles. The trend is towards hybrid human-AI teams. AI handles vast data analysis, routine troubleshooting, and initial hypothesis generation at superhuman speed. Human experts then focus on high-level strategy, ethical oversight, complex judgment calls, and interpreting the AI’s outputs. The job is evolving from “doer” to “director and validator.”

What is the single most important action a company can take regarding AI safety?

Establish clear accountability and auditability. Designate specific, trained individuals (AI managers) who are responsible for the deployment and outputs of AI agents. Then implement systems that create unalterable logs of every significant action an AI agent takes, especially in sensitive areas like finance. You cannot manage or mitigate agentic AI financial autonomy risks if you cannot see what the AI has done.
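One way to make an agent's action log tamper-evident is a hash chain: each entry stores the SHA-256 hash of the previous entry, so altering any past record breaks every link after it. The Python sketch below illustrates the idea with the standard `hashlib` and `json` modules; the `AuditLog` class and its field names are hypothetical. A hash chain makes edits detectable rather than impossible, and it cannot by itself notice the newest entries being dropped, so a truly unalterable log would also need write-once storage or periodic publication of the latest hash to an independent party.

```python
import copy
import hashlib
import json
import time
from typing import Any, Dict, List, Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained record of AI agent actions (illustrative sketch)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(body: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys, fixed separators) so identical content
        # always produces the identical hash.
        encoded = json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, agent_id: str, action: str, details: Dict[str, Any],
               timestamp: Optional[float] = None) -> str:
        """Record one action and return its hash."""
        body = {
            "agent_id": agent_id,
            "action": action,
            "details": copy.deepcopy(details),  # later edits by the caller can't leak in
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every link; False means an entry was edited, reordered, or removed mid-chain."""
        expected_prev = GENESIS_HASH
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if body["prev_hash"] != expected_prev or self._digest(body) != entry["hash"]:
                return False
            expected_prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("agent-7", "transfer", {"to": "ACC-123", "amount": 250.0})
    log.append("agent-7", "rebalance", {"portfolio": "P-42", "shift_pct": 5})
    print(log.verify())  # True
    log.entries[0]["details"]["amount"] = 25_000.0  # simulate tampering
    print(log.verify())  # False
```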

Where can I find reliable, unbiased information on these trends and risks?

Look for reports from public-interest research institutes and governmental bodies dedicated to AI safety, such as the UK’s AI Security Institute (AISI, formerly the AI Safety Institute) or similar entities. Their evaluations, like the Frontier AI Capability Trends report, are based on rigorous, hands-on testing of advanced systems. For comprehensive analysis, you can also read our ultimate guide to the Frontier AI Trends Report 2025.