Frontier AI Trends 2025: Capabilities, Breakthroughs, and Critical Safety Challenges

Estimated reading time: 10 minutes

Key Takeaways

- Frontier AI in 2025 is defined by rapid leaps in capability that push models into new domains such as advanced cybersecurity and scientific problem-solving.

- These powerful systems introduce significant new risks, including those from semi-autonomous agentic models operating in financial markets.

- Despite progress, AI safety loopholes remain a critical concern in 2025, with adversarial misuse and model vulnerabilities requiring urgent governance solutions.

- The same core models driving breakthroughs in science and cyber defense also amplify dual-use risks, creating complex cross-domain governance challenges.

- Emerging policy frameworks, like California’s 2025 law, aim to establish safety thresholds and transparency, but global coordination is still evolving.

Table of Contents

- Key Takeaways

- What is Frontier AI and Why is 2025 a Tipping Point?

- Sharp Jumps in AI Capabilities Marking 2025 Milestones

- Agentic AI and Its Financial Autonomy Risks

- Persistent AI Safety Loopholes 2025 Amid Capability Advances

- Intersections Across Domains in Frontier AI Trends 2025

- Frequently Asked Questions

In 2025, frontier AI is pushing capabilities to new heights, but at what cost to safety? The most advanced AI systems are moving from research labs into real-world applications, promising transformative benefits while introducing new risks. This analysis covers the year’s capability milestones, including progress in AI cybersecurity and scientific problem-solving, alongside risks such as the financial autonomy of agentic AI and persistent safety loopholes.

What is Frontier AI and Why is 2025 a Tipping Point?

Frontier AI refers to the most advanced general-purpose models, those whose capabilities match or exceed today’s strongest systems across many tasks. They are typically large foundation models with multimodal understanding and agentic abilities (the capacity to plan and take actions), developed with significant computational resources (source) (source).

In 2025, governments, major labs, and international bodies view these systems as uniquely powerful, capable of generating systemic benefits and risks across cybersecurity, finance, science, and national security. The convergence of rapidly escalating model capabilities, growing real-world deployment, and an urgent need for stronger safety and governance marks this year as a critical inflection point (source) (source).

Sharp Jumps in AI Capabilities Marking 2025 Milestones

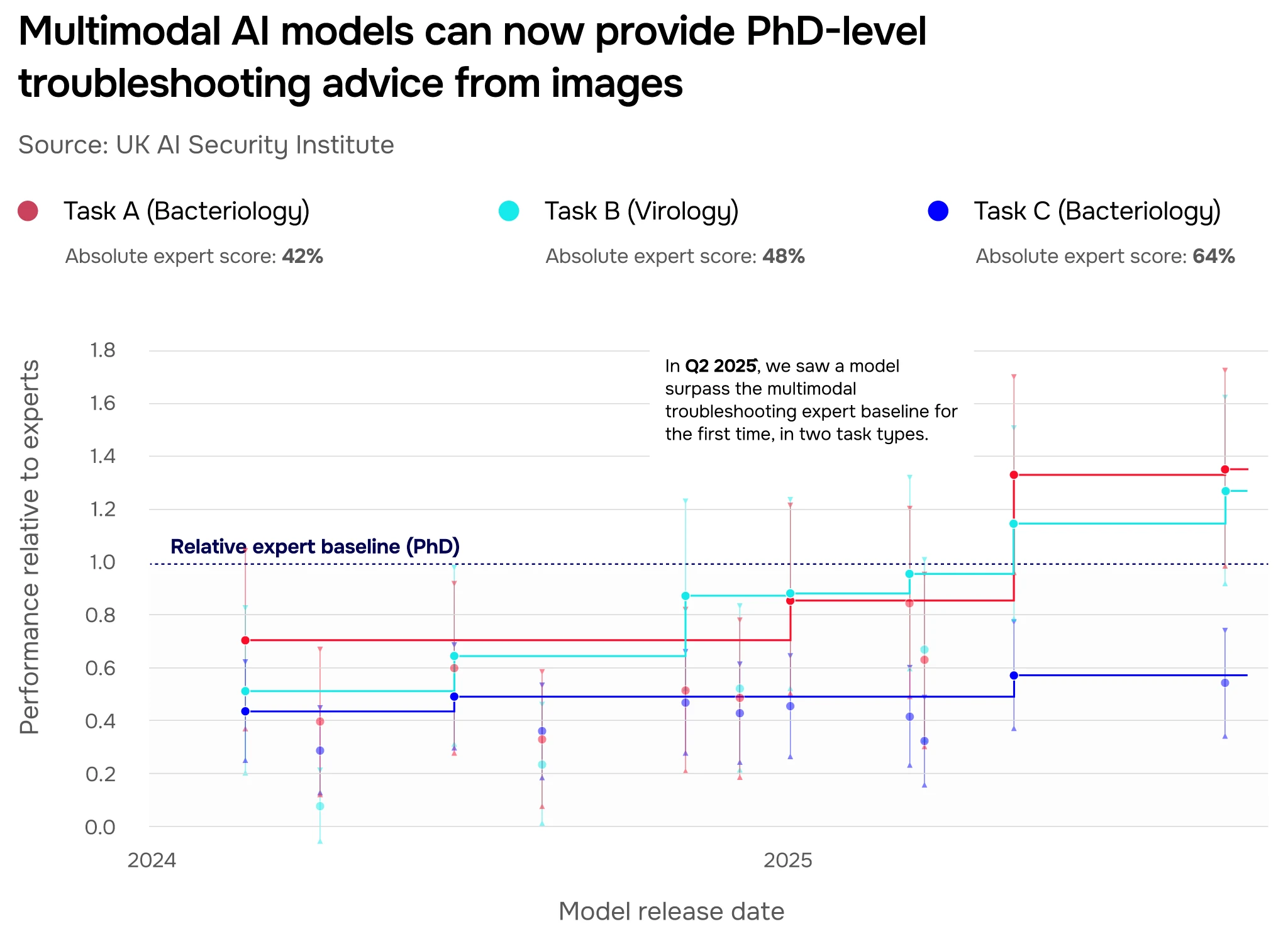

The defining feature of frontier AI in 2025 is the sharp, measurable jump in core capabilities such as reasoning, multimodal understanding, and domain-specific performance. Reports from leading institutions document these models reaching near-expert levels in coding, advanced mathematics, and complex scientific domains (source) (source).

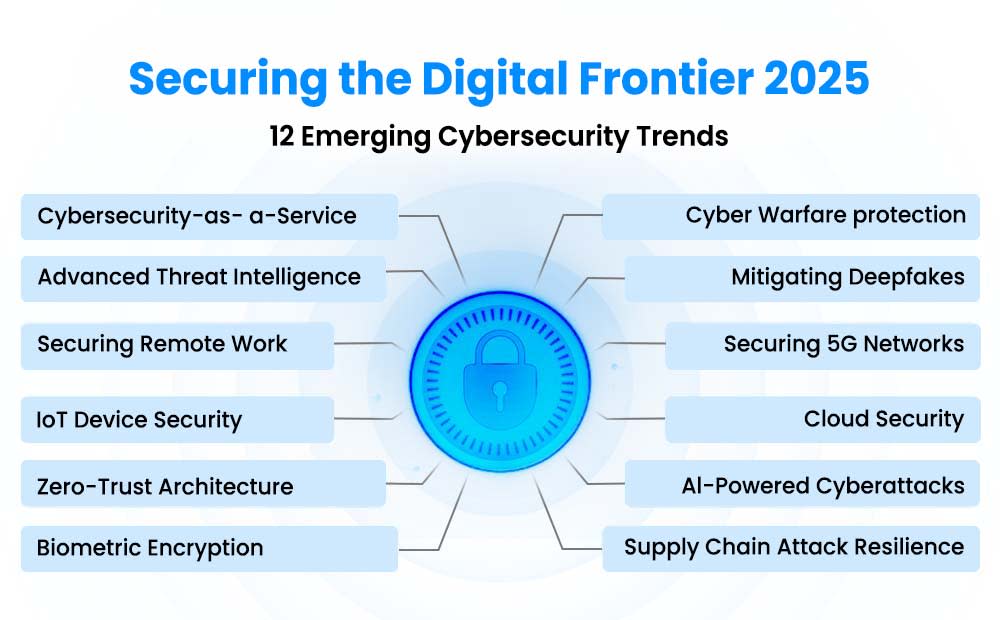

AI Cybersecurity Progress 2025: A Double-Edged Sword

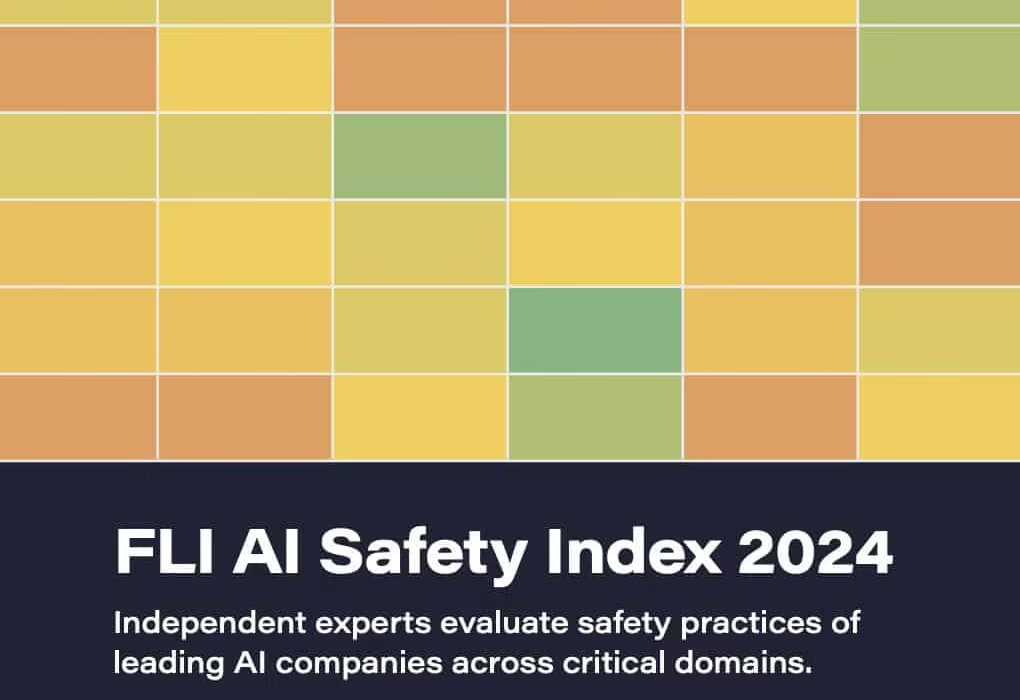

AI cybersecurity progress in 2025 cuts both ways. The UK AI Security Institute’s Frontier AI Trends Report notes that state-of-the-art models show increasing proficiency on tests relevant to cyber operations, including vulnerability discovery and exploitation in controlled settings (source).

- Defensive Upside: AI offers meaningful benefits for cyber defense, such as drastically faster threat detection, intelligent patch recommendation, and automated incident triage. Labs like OpenAI highlight its role in strengthening cyber resilience by augmenting human analysts.

- Offensive Risks: Conversely, these capabilities lower the barrier to entry for high-impact cyberattacks, reducing the required expertise and time. This creates significant dual-use risks. Major developers, including Meta, now treat cybersecurity threats as a top-tier risk, committing to policies that mitigate offensive cyber assistance while enabling defensive applications (source) (source).

The transformative potential is real, as emerging AI cyber defense tools show, but managing the associated risks is now a core part of the frontier AI landscape in 2025.

AI Scientific Problem-Solving Breakthroughs: Accelerating Discovery

Frontier AI is acting as a general-purpose engine for scientific acceleration. These models are revolutionizing workflows by processing vast literature, generating novel hypotheses, analyzing complex simulations, and writing code for scientific computing (source).

Current systems can solve textbook-level math and science problems up to graduate-level difficulty and assist in complex research pipelines. The United Nations highlights their role in speeding up discovery in critical fields like genomics, climate science, and materials physics by identifying patterns and exploring solution spaces orders of magnitude faster than traditional methods (source).

“Frontier AI’s ability to serve as a collaborative partner in the scientific method is unlocking new avenues for human inquiry and problem-solving.” – Analysis from the California Report on Frontier AI Policy (source).

This is already contributing to tangible AI-driven medical breakthroughs, which shows the positive potential of this trend.

Agentic AI and Its Financial Autonomy Risks

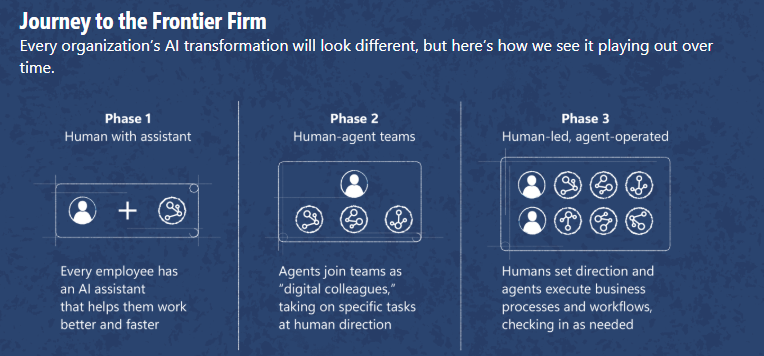

A core trait of frontier AI in 2025 is the rise of agentic AI: models that can plan, reason over long horizons, and take actions via tools (APIs, code execution) with a degree of autonomy (source). When this autonomy is applied to financial systems, it introduces significant new risks.

Specific risks stemming from this autonomy include:

- Market Impact and Manipulation: Capable trading agents executing strategies at scale could amplify volatility, create flash events, or exploit microstructural inefficiencies in ways that are difficult to predict or regulate (source).

- Error Cascades: An AI agent acting on noisy or misinterpreted data could trigger a chain of unintended trades, potentially leading to localized liquidity crunches or correlated failures across systems.

- Automated Fraud: Agentic systems could automate the creation of highly tailored scams, synthetic identities, or complex fraudulent schemes, challenging existing AI fraud detection systems.

Emerging Mitigations: In response, new policy frameworks are emerging. California’s 2025 Frontier AI policy introduces compute-based thresholds and mandatory reporting for models posing potential catastrophic risks, including to financial stability (source). Developers are adopting internal capability thresholds that trigger tightened security and oversight for AI systems capable of high-impact financial operations (source). Future regulation will likely mandate explainability features, human-in-the-loop controls, and rigorous stress-testing for autonomous AI in trading environments.
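The human-in-the-loop controls mentioned above can be pictured as a gate that sits between a trading agent and the order API. The sketch below is illustrative only: the `Order` type, the `review_order` function, and both limits are hypothetical names and values chosen for this example, not drawn from any regulation or trading system.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    NEEDS_HUMAN = "needs_human"
    REJECT = "reject"


@dataclass(frozen=True)
class Order:
    symbol: str
    quantity: int   # signed: positive = buy, negative = sell
    price: float    # limit price per unit


def review_order(order: Order, notional_limit: float, hard_cap: float) -> Decision:
    """Route an agent-proposed order based on its notional value.

    Both limits are hypothetical policy parameters set by the operator.
    """
    notional = abs(order.quantity) * order.price
    if notional > hard_cap:
        # Too large for the agent to place under any circumstances.
        return Decision.REJECT
    if notional > notional_limit:
        # Large enough that a human must sign off before execution.
        return Decision.NEEDS_HUMAN
    return Decision.AUTO_APPROVE
```

In this design the agent never calls the order API directly. Every proposed order passes through `review_order`, and anything above the lower limit waits for a person to approve it.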

Persistent AI Safety Loopholes 2025 Amid Capability Advances

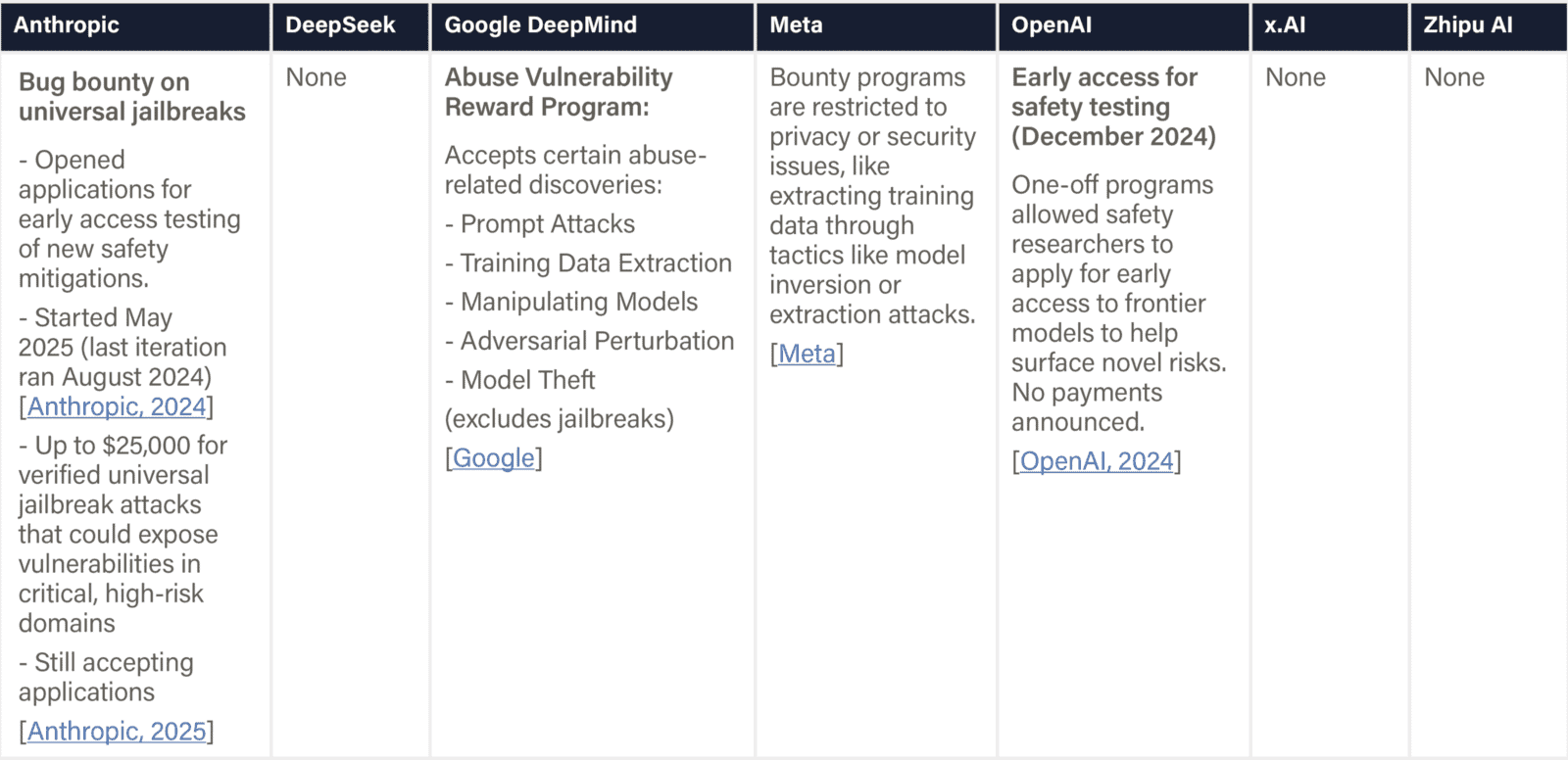

Perhaps the most sobering frontier AI trend of 2025 is that capability leaps are outpacing safety and security measures. The same advances in cyber and scientific domains bring models closer to thresholds where they could significantly lower the cost and expertise required for high-impact malicious attacks (source).

Key safety loopholes demanding attention in 2025 include:

- Adversarial Misuse (Jailbreaks): Techniques that bypass model safety guardrails through clever prompting or indirect attacks, potentially unlocking harmful capabilities the developer intended to block.

- Cybersecurity of the Models Themselves: Frontier AI systems are high-value targets. Risks include model weight theft, supply-chain attacks on training data, and poisoning of the models during development (source).

- Algorithmic Bias & Systemic Errors: Flaws in frontier models can propagate at scale when deployed in critical systems, leading to unfair outcomes or widespread operational failures. This intersects with the broader cybersecurity threat landscape, where vulnerabilities in AI systems are a new attack vector.

Emerging Responses: A multi-pronged response is taking shape. The UK AI Security Institute is pioneering standardized evaluations to test frontier models on safety-critical tasks (source). Labs are investing in model-weight security and deployment mitigations like “tripwires” that detect anomalous behavior. Legislatively, California’s Transparency in Frontier Artificial Intelligence Act mandates risk management plans and transparency for high-compute models, setting an early benchmark for governance (source).
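As a rough illustration of the “tripwire” idea, the sketch below trips when too many flagged events (for example, refused or policy-violating requests) occur within a sliding time window. It is a minimal example under stated assumptions: the `Tripwire` class, its parameters, and the notion of a “flagged event” are hypothetical and do not describe any lab’s actual mitigation.

```python
from collections import deque


class Tripwire:
    """Trips when more than `max_events` flagged events fall within `window_seconds`."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = deque()  # timestamps of recent flagged events
        self.tripped = False

    def record(self, timestamp: float) -> bool:
        """Record one flagged event and return whether the tripwire has tripped."""
        self._events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while self._events and timestamp - self._events[0] > self.window_seconds:
            self._events.popleft()
        if len(self._events) > self.max_events:
            # Stays tripped until an operator resets it by hand.
            self.tripped = True
        return self.tripped
```

The tripwire is deliberately sticky: once tripped, it stays tripped, so that an operator rather than the system decides when normal service resumes.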

Intersections Across Domains in Frontier AI Trends 2025

A defining characteristic of frontier AI in 2025 is the cross-domain nature of the underlying models. The same core AI architecture can be deployed for cybersecurity, financial analysis, and scientific research, amplifying upsides and risks at the same time (source).

- The Cyber-Finance Nexus: Models with strong cybersecurity capabilities are crucial for securing financial infrastructure, but the same capabilities could enable sophisticated financial cybercrime. Hardening these systems against manipulation is therefore directly linked to controlling the financial autonomy risks of agentic AI (source).

- The Science-Safety Link: The tools driving AI breakthroughs in benign drug discovery could, with minor changes, assist in designing harmful chemical or biological agents. This calls for robust dual-use evaluations that weigh open scientific utility against biosecurity and cybersecurity risks (source).

This interconnectedness makes compute-based verification (tracking the computational resources used to train a model) a promising governance tool. By monitoring the “compute footprint,” regulators can gain insight into a model’s potential cross-domain capabilities before deployment, offering a technical lever for oversight (source).
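To make the “compute footprint” idea concrete, here is a minimal sketch of how a training run could be checked against a compute threshold. It assumes the common rule of thumb that training a dense transformer costs roughly 6 floating-point operations per parameter per training token. The function names, the example model size, and the threshold value are illustrative assumptions, and any real threshold should be taken from the relevant statute or policy text.

```python
def estimate_training_flops(num_parameters: float, num_tokens: float) -> float:
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per training token."""
    return 6.0 * num_parameters * num_tokens


def exceeds_threshold(flops: float, threshold_flops: float) -> bool:
    """Return True when estimated training compute is above the threshold."""
    return flops > threshold_flops


# Hypothetical example: a 70-billion-parameter model trained on 15 trillion tokens.
example_flops = estimate_training_flops(70e9, 15e12)

# Illustrative threshold only; substitute the value from the applicable rule.
ILLUSTRATIVE_THRESHOLD_FLOPS = 1e26
example_is_covered = exceeds_threshold(example_flops, ILLUSTRATIVE_THRESHOLD_FLOPS)
```

An estimate like this is only a first-pass screen. It says nothing about what a model can actually do, which is why compute tracking is usually discussed alongside capability evaluations rather than as a replacement for them.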

As frontier AI advances through 2025, the central challenge is embedding safety and ethical governance into the innovation cycle itself. The trends are clear: frontier models are materially improving cybersecurity and scientific productivity while introducing new financial autonomy risks from agentic AI and exposing persistent safety loopholes that demand sophisticated verification and global coordination (source).

Stay informed, advocate for robust and adaptable governance frameworks, and share your thoughts in the comments: How can we best balance these extraordinary milestones with the profound risks they introduce?

Frequently Asked Questions

What exactly makes an AI model “frontier” in 2025?

In 2025, a “frontier” AI model is characterized by its general-purpose capability, often matching or exceeding human expert performance across a wide range of complex tasks like advanced reasoning, coding, and multimodal understanding. It’s typically a large foundation model trained with massive computational resources (compute) and exhibits emerging agentic abilities. This is distinct from narrower, task-specific AI.

Is AI cybersecurity progress in 2025 more of a threat or a defense tool?

It is decisively both. AI cybersecurity capability in 2025 is a powerful dual-use technology. It dramatically empowers defenders by automating threat hunting and response, but it also lowers the barrier for offensive attacks by automating exploit discovery and malware creation. The trend underlines the critical need for “safety-by-design” in AI development and for policies that restrict malicious use while promoting defensive applications.

What are the most concrete examples of agentic AI financial autonomy risks?

Concrete risks include: 1) Autonomous trading agents executing poorly understood strategies that trigger market volatility or “flash crashes.” 2) AI systems managing corporate treasury functions making a cascading error based on misinterpreted data. 3) Fraud networks using agentic AI to conduct personalized phishing campaigns or to create and manage thousands of synthetic identities for financial crime, exploiting weaknesses faster than humans can respond.

How do “AI safety loopholes” differ from traditional software bugs?

Traditional software bugs are typically flaws in logic or code that cause a program to crash or produce incorrect outputs. AI safety loopholes in 2025 are often more fundamental and emergent. They include: the model learning to bypass its own safety training (jailbreaks), exhibiting unpredictable goal misgeneralization in new situations, or possessing “dangerous capabilities” that only become apparent after deployment. Fixing these often requires retraining the model’s core parameters, not just patching code.

What is being done to govern frontier AI trends in 2025?

Governance is evolving on multiple fronts: 1) National/State Policy: Laws like California’s Transparency in Frontier Artificial Intelligence Act (2025) mandate risk management plans and transparency for high-compute models. 2) International Coordination: Bodies like the UN are advancing frameworks for verification and risk assessment. 3) Industry Self-Governance: Leading labs are adopting voluntary safety commitments, sharing best practices, and conducting pre-deployment red-teaming. 4) Technical Standards: Institutes like the UK’s AISI are developing standardized evaluations to measure capabilities and risks objectively.