
Navigating AI Errors: A Guide to Gemini Pro Function Call Tooling Issues


Key Takeaways

- The increasing integration of AI tools across industries means **AI errors** are becoming more common.

- Understanding and resolving these errors is vital for reliable AI system performance.

- **Gemini Pro function call tooling errors** often stem from issues with function signatures, data mismatches, or API configurations.

- Common error messages can range from unhelpful statements to specific `INVALID_ARGUMENT` codes.

- Systematic troubleshooting, including verifying function declarations and message order, is key to resolving these errors.

- Broader AI tool errors, like those seen with Copilot or Photoshop Generative Fill, also require specific diagnostic approaches.

- General strategies like clear prompting, understanding model limitations, and leveraging community support are universally applicable.

Table of contents

- Navigating AI Errors: A Guide to Gemini Pro Function Call Tooling Issues

- Key Takeaways

- Introduction to AI Errors and the Importance of Troubleshooting

- Deep Dive into Gemini Pro Function Call Tooling Errors

- Exploring Other Common AI Tool Errors

- General Strategies for Troubleshooting AI Errors

- Conclusion and Future Outlook

- Frequently Asked Questions

Introduction to AI Errors and the Importance of Troubleshooting

In today’s rapidly evolving technological landscape, artificial intelligence (AI) has moved from a futuristic concept to an integral part of our daily lives and a cornerstone of countless industries. From sophisticated virtual assistants and advanced medical diagnostics to personalized content recommendations and complex data analysis, AI tools are everywhere. As these systems, such as the powerful **Gemini Pro**, become increasingly complex and capable, it’s only natural that they, like any other sophisticated technology, will encounter issues. Experiencing **AI errors** is not a sign of failure, but rather an inherent part of the development and deployment lifecycle of these advanced systems.

Understanding and effectively resolving these **AI errors** is not merely a technicality; it’s a critical necessity. For developers, it ensures the integrity and reliability of the AI models they build and deploy. For users, it guarantees a smooth, predictable, and efficient experience. Without robust troubleshooting capabilities, the potential of AI cannot be fully realized, and its integration into critical applications could be jeopardized. This post will delve into the specifics of these challenges, focusing particularly on the nuanced issues surrounding **Gemini Pro function call tooling errors**, providing insights and actionable steps for resolution.

Deep Dive into Gemini Pro Function Call Tooling Errors

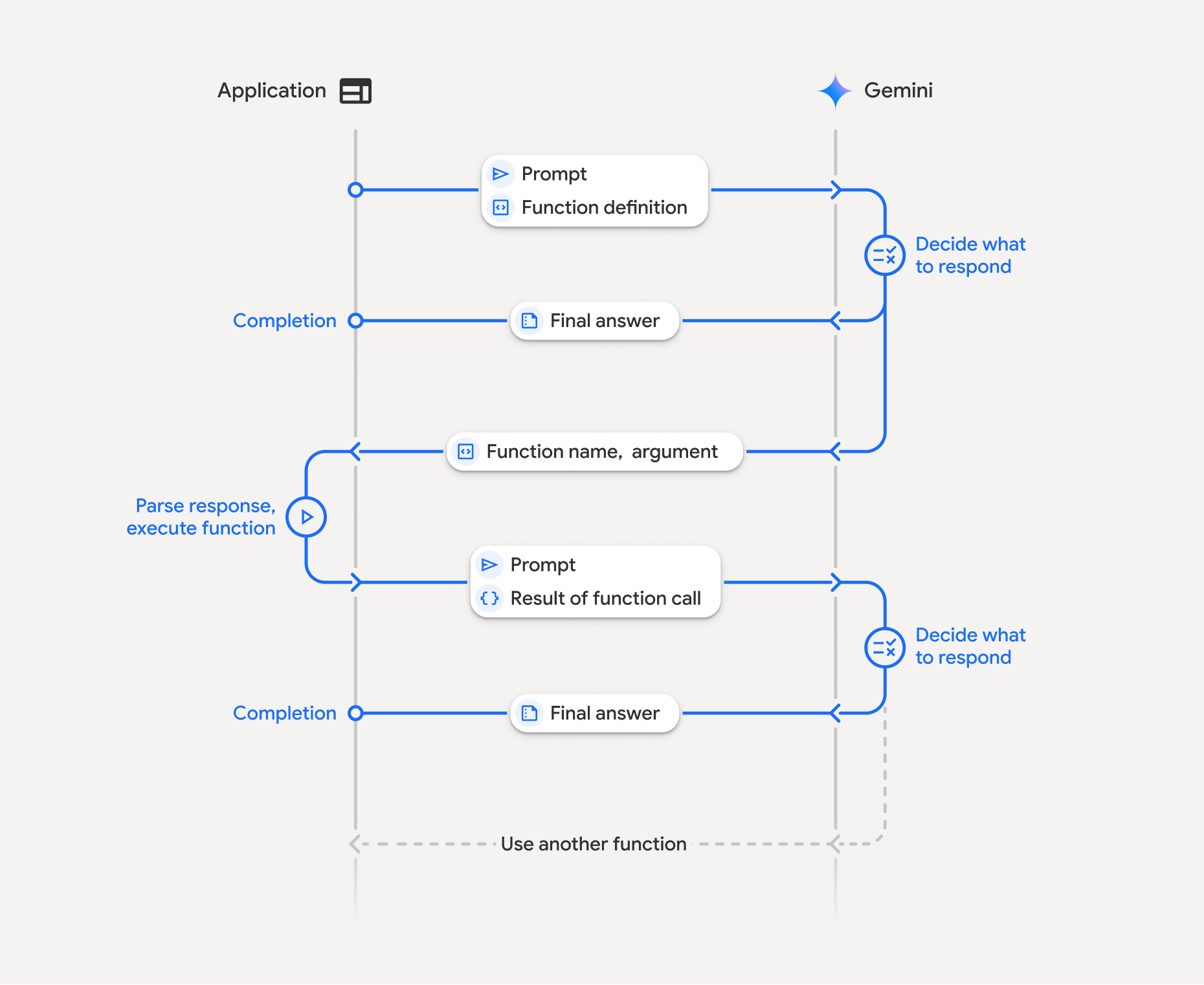

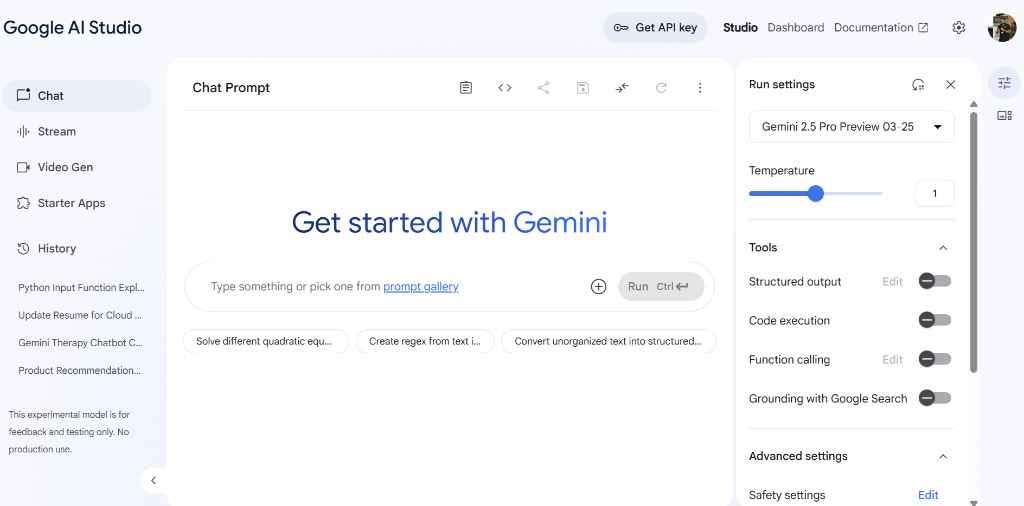

One of the most powerful features of advanced language models like **Gemini Pro** is their ability to interact with the outside world through function calling. This capability unlocks a new level of automation and intelligence, allowing the AI to perform actions beyond just generating text. However, this complexity also introduces a specific set of potential errors.

What Is Function Calling in Gemini Pro?

Function calling in **Gemini Pro** lets developers describe external functions or tools that the model may request in response to user prompts. When a user asks for something that requires external action (retrieving real-time data, sending an email, or updating a database), the model identifies the appropriate function, extracts the necessary parameters from the prompt, and returns a structured function call. The model does not run the function itself: your application executes it and sends the result back so the model can compose its final answer. This mechanism is fundamental for building AI applications that automate multi-step tasks and ground their answers in live data. You can learn more about this feature here: Gemini Pro Function Calling Documentation.
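
As a rough illustration of this round trip, the sketch below simulates one function call in plain Python without contacting any API. The `get_weather` function, the `TOOL_REGISTRY` mapping, and the simplified call shape with `name` and `args` keys are assumptions made for this example, not the SDK’s actual objects. The point is only that the application runs the requested function and hands the result back to the model.

```python
import json

# Hypothetical declaration, loosely following the name/description/parameters
# layout commonly used to describe a tool to the model.
GET_WEATHER_DECLARATION = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city):
    """Stand-in for a real lookup; returns canned data."""
    return {"city": city, "temperature_c": 21}


# Maps declared tool names to the local callables that implement them.
TOOL_REGISTRY = {"get_weather": get_weather}


def dispatch(function_call):
    """Run the function the model asked for and wrap the result to send back."""
    name = function_call["name"]
    if name not in TOOL_REGISTRY:
        raise KeyError(f"model requested unregistered tool: {name!r}")
    result = TOOL_REGISTRY[name](**function_call.get("args", {}))
    return {"name": name, "response": result}


# Simulated model output: a structured request, not an executed action.
simulated_call = {"name": "get_weather", "args": {"city": "Paris"}}
tool_reply = dispatch(simulated_call)
print(json.dumps(tool_reply))
```

In a real integration, `tool_reply` would be sent back to the model as the function result, and the model would then produce the user-facing answer.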

Common Causes of Gemini Pro Function Call Tooling Errors:

Several factors can contribute to **Gemini Pro function call tooling errors**. Recognizing these common pitfalls is the first step toward effective troubleshooting:

-

Incorrect Function Signature or Registration:

The way you define your functions for Gemini Pro to use is crucial. A malformed function schema—meaning the structure or description of the function and its parameters doesn’t adhere to the expected format—or an improperly registered function can prevent the model from even recognizing or attempting to invoke it. This is a foundational requirement for successful tool use. For more details on common registration issues, see discussions like LangchainJS Discussion on Function Registration and general troubleshooting advice at Gemini API Troubleshooting.

-

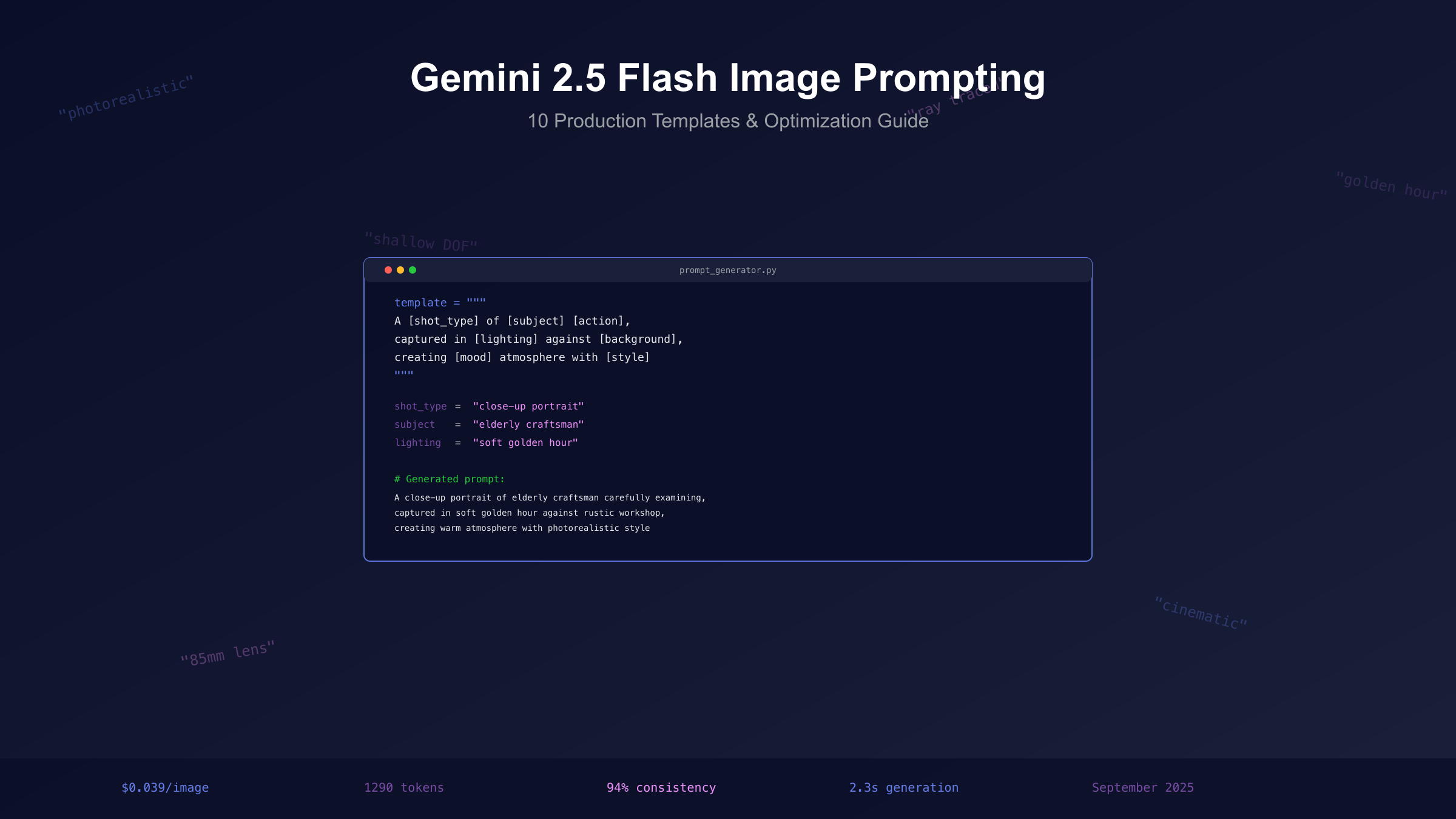

Malformed JSON or Data Type Mismatches:

Gemini Pro expects the parameters for its function calls to be structured in a precise JSON schema. If the data provided does not conform to this schema, or if there are mismatches in data types (e.g., expecting an integer but receiving a string), the function call will fail. Ensuring that the output from the model, or the input you provide, strictly adheres to the defined schema is paramount. Refer to resources like LangchainJS Discussion on JSON Schema for examples of these issues.

-

API Limits or Configuration Issues:

Sometimes, errors occur not because the function definition is wrong, but due to how the tools are integrated with the model. If tools are not correctly bound to the specific model instance you are using, or if they are not permitted for the initial message in a conversation, the model won’t be able to utilize them, leading to invocation failures. For insights into such configuration problems, consult LangchainJS Discussion on Tool Binding.

-

Sub-agent Context Restrictions:

A more complex scenario arises when function calling is used within the framework of sub-agents. In such nested agent architectures, tools that are available at a higher level might be disabled or inaccessible within the context of a sub-agent. This can manifest as cryptic errors, such as the infamous `”400 INVALID_ARGUMENT: Tool use with function calling is unsupported”`, indicating that the specific environment doesn’t permit tool use. This limitation is discussed in ADK Python Issue on Sub-agent Tool Support.

-

Ambiguous Tool Output Interpretation:

Even if a function is successfully invoked, the AI’s ability to act upon its output can be hindered by ambiguity. If the tool’s response is unclear, poorly formatted, or can be interpreted in multiple ways, the model might struggle to apply the instructions correctly. This is particularly problematic for tasks like file editing, where precise understanding of the changes is required. Users have reported instances where the tool, despite execution, incorrectly claims no changes were made or fails to perform the operation as expected. See Cursor Forum on Gemini Tool Unreliability for examples.
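
Data type mismatches in particular are cheap to catch before a call is executed. The following is a minimal sketch, assuming a simplified JSON-Schema-like parameter declaration. `validate_args` and the `BOOK_TABLE_SCHEMA` example are hypothetical names, and a real project would more likely rely on a full schema validation library.

```python
# Maps schema type names to the Python types accepted for them.
TYPE_MAP = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def validate_args(args, schema):
    """Return a list of readable problems; an empty list means the args fit."""
    problems = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required parameter: {name}")
    for name, value in args.items():
        if name not in properties:
            problems.append(f"unexpected parameter: {name}")
            continue
        expected = properties[name].get("type")
        python_type = TYPE_MAP.get(expected)
        if python_type is None:
            continue  # unknown or absent type: nothing to check in this sketch
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if bool_as_number or not isinstance(value, python_type):
            problems.append(f"{name}: expected {expected}, got {type(value).__name__}")
    return problems


# Hypothetical parameter schema for a restaurant booking tool.
BOOK_TABLE_SCHEMA = {
    "type": "object",
    "properties": {
        "restaurant": {"type": "string"},
        "party_size": {"type": "integer"},
    },
    "required": ["restaurant", "party_size"],
}

good = validate_args({"restaurant": "Luigi's", "party_size": 4}, BOOK_TABLE_SCHEMA)
bad = validate_args({"party_size": "4"}, BOOK_TABLE_SCHEMA)
print(good)
print(bad)
```

Running a check like this on the arguments the model proposes, before executing the tool, turns an opaque downstream failure into a specific message you can log or feed back to the model.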

Example Error Messages Users Might Encounter:

When grappling with these issues, you might encounter a variety of error messages:

- Generic but unhelpful messages like: “I am sorry, I cannot fulfill this request. The available tools lack the desired functionality.” This often points to a misconfiguration or an inability for the model to map the request to an available tool. A relevant discussion can be found at Google Discuss on Cannot Fulfill Request.

- Specific error codes that give more technical insight: For instance, `400 INVALID_ARGUMENT: Tool use with function calling is unsupported`. This particular error, as mentioned before, often signals an unsupported context for tool execution, common in nested agent scenarios. See ADK Python Issue on Invalid Argument.

- Silent failures or empty responses: Perhaps the most frustrating errors are those where no explicit error message is provided. The tool might simply not be called, or an empty response is returned, leaving you to infer that something went wrong. This lack of feedback makes debugging significantly harder. Resources such as LangchainJS Discussion on Silent Failures and n8n Community on Gemini Tools Not Working touch upon these elusive issues.
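
Because silent failures produce no exception, it can help to classify every response explicitly instead of assuming success. The sketch below is one possible way to do that, assuming a simplified response dictionary with optional `error`, `function_calls`, and `text` keys. That shape, the `classify_response` helper, and the marker phrases (taken from the example message above) are illustrative assumptions rather than the actual API response format.

```python
# Phrases taken from the generic refusal quoted earlier in this section.
REFUSAL_MARKERS = (
    "cannot fulfill this request",
    "lack the desired functionality",
)


def classify_response(response):
    """Sort a simplified model response into one of five buckets."""
    if response.get("error"):
        return "api_error"
    if response.get("function_calls"):
        return "tool_call"
    text = (response.get("text") or "").strip()
    if not text:
        # No error, no tool call, no text: the case that is easiest to miss.
        return "silent_failure"
    if any(marker in text.lower() for marker in REFUSAL_MARKERS):
        return "generic_refusal"
    return "text_only"


samples = [
    {"error": {"code": 400, "status": "INVALID_ARGUMENT"}},
    {"function_calls": [{"name": "get_weather", "args": {"city": "Paris"}}]},
    {"text": ""},
    {"text": "I am sorry, I cannot fulfill this request. "
             "The available tools lack the desired functionality."},
    {"text": "It is 21 degrees in Paris."},
]
labels = [classify_response(sample) for sample in samples]
print(labels)
```

Logging the `silent_failure` and `generic_refusal` buckets separately makes it easier to see whether a problem lies in tool binding, in the schema, or in the prompt.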

Actionable Troubleshooting Steps for Gemini Pro Function Call Tooling Errors:

When faced with these challenges, a systematic approach is your best ally:

- **Verify Function Declarations:** Meticulously check the naming, descriptions, and parameter schemas of your functions. Ensure they are clear, unambiguous, and precisely match the expected inputs and outputs. For JavaScript/TypeScript projects, consider using schema validation libraries like Zod to define and validate your function schemas rigorously. This is a crucial step highlighted in LangchainJS Discussion on Schema Validation.

- **Message Order Matters:** A common mistake is sending tool-related messages as the very first input to the model. Gemini Pro expects the conversation to be initiated by a user prompt. Always ensure that tool messages or function calls are never the first message in a sequence. Follow the pattern of user prompt → model function call → tool result → model response.

- **Explicit Tool Binding:** Ensure that the tools you intend to use are explicitly bound to the model during its initialization or configuration. Don’t assume the model will automatically discover them. Test your tool integrations with minimal, reproducible examples to isolate any binding issues. This practice is frequently recommended in guides like LangchainJS Discussion on Explicit Binding.

- **Refactor Agent Architectures:** If you are employing complex, multi-layered agent architectures, consider the possibility of hitting system limitations related to nested tool use. If errors like `400 INVALID_ARGUMENT: Tool use with function calling is unsupported` persist, refactoring your agent setup to avoid deep nesting of tool invocations might be necessary. The issue described in ADK Python Issue on Deep Nesting provides context for this.

- **Monitor and Analyze Output:** Continuously monitor the error logs and the AI’s responses. Look for patterns of ambiguity in the model’s interpretation of tool outputs or in its own generated messages. If the AI frequently claims unsupported operations or fails on tasks like file editing, it could be a strong indicator of underlying schema mismatches or interpretation issues. Resources like Google Discuss on Error Analysis and Cursor Forum on Output Ambiguity offer insights into analyzing these situations.
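
The message-order rule lends itself to an automated check before a request is sent. The sketch below is a minimal example, assuming a simplified history format in which each message is a dictionary with a `role` of `user`, `model`, or `tool`. These role names, the `function_call` key, and the `check_history` helper are assumptions for illustration and do not mirror any particular SDK.

```python
def check_history(history):
    """Return a list of ordering problems found in a conversation history."""
    if not history:
        return ["history is empty"]
    problems = []
    first_role = history[0].get("role")
    if first_role != "user":
        problems.append(f"first message has role {first_role!r}; expected 'user'")
    for index, message in enumerate(history):
        if message.get("role") != "tool":
            continue
        previous = history[index - 1] if index > 0 else None
        follows_call = (
            previous is not None
            and previous.get("role") == "model"
            and bool(previous.get("function_call"))
        )
        if not follows_call:
            problems.append(
                f"message {index}: tool result does not follow a model function call"
            )
    return problems


# user prompt -> model function call -> tool result -> model response
valid_history = [
    {"role": "user", "text": "What is the weather in Paris?"},
    {"role": "model", "function_call": {"name": "get_weather", "args": {"city": "Paris"}}},
    {"role": "tool", "name": "get_weather", "response": {"temperature_c": 21}},
    {"role": "model", "text": "It is 21 degrees in Paris."},
]

# Starts with a tool message, which is the mistake described above.
invalid_history = [
    {"role": "tool", "name": "get_weather", "response": {"temperature_c": 21}},
    {"role": "user", "text": "What is the weather in Paris?"},
]

print(check_history(valid_history))
print(check_history(invalid_history))
```

A check like this is most useful in frameworks that assemble the history for you, where a trimmed or reordered context window can quietly drop the opening user message.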

Exploring Other Common AI Tool Errors

While Gemini Pro function calling errors are a specific area of concern, AI tools across different domains present their own unique sets of challenges. Understanding these common issues can help in preempting and resolving them.

Copilot Errors:

- **Typical Issues:** Users of AI coding assistants like GitHub Copilot sometimes face issues such as receiving inaccurate or irrelevant code suggestions, experiencing difficulties with IDE integration (e.g., prompts not appearing, suggestions not loading), or encountering authentication failures that prevent the service from working. These problems can disrupt the coding workflow significantly.

- **Resolution Strategies:** Common solutions involve resetting the Copilot extension within the IDE, ensuring both the IDE and its relevant extensions are updated to the latest versions, relogging into your GitHub account to re-authenticate the service, and clearing IDE configuration caches. For inaccurate suggestions, the approach often involves refining your prompts, providing more context within the code, and keeping relevant project files open so Copilot can draw on them. Copilot adapts to the code it can see as context; it is not retrained on your project.

Photoshop Generative Fill Errors:

- **Common Problems:** Adobe Photoshop’s Generative Fill feature, while powerful, can sometimes produce unwanted artifacts in the generated content, such as strange textures or distorted shapes. Users might also notice excessive blurring, slow processing times that halt workflows, or unexpected compatibility conflicts that arise after Photoshop software updates. These issues can detract from the efficiency and quality of image editing tasks.

- **Troubleshooting Tips:** To address these problems, it’s essential to ensure Photoshop is updated to its most recent version, as updates often contain bug fixes for AI features. Increasing the system’s allocated RAM for Photoshop can also improve processing speed. Always check that your image is in the correct mode (e.g., RGB) and that there are no complex layer conflicts before applying Generative Fill. Additionally, making the selection boundaries cleaner and more precise before initiating the generative process can lead to much better results.

AI Agent Tool Communication Issues:

- **Typical Symptoms:** In multi-agent systems or scenarios where AI agents need to collaborate or use tools in sequence, communication breakdowns are a significant concern. Symptoms include agents failing to send or receive messages correctly, misinterpreting each other’s instructions or data, becoming stuck in repetitive loops, or entering deadlocks where no agent can proceed. These issues can halt complex automated workflows.

- **Underlying Causes:** The root causes are often similar to function call errors: ambiguous instructions given between agents, errors during the serialization or deserialization of data payloads (converting data to and from formats for transmission), or overly strict context isolation between agents, which prevents them from sharing necessary information. This is particularly relevant in sophisticated multi-agent setups, including those involving Gemini, as noted in discussions like ADK Python Issue on Agent Communication.

- **Potential Solutions:** To mitigate these issues, standardizing communication protocols between agents is highly recommended. This involves defining clear message formats and expected data structures. Rigorous validation of all inter-agent data payloads, both before sending and after receiving, can catch errors early. Furthermore, avoiding excessively deep nesting of agents or tools, where context might become too restricted, can help maintain smoother communication flow.
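
One way to picture a standardized, validated message format is a small envelope that every agent serializes and checks in the same way. The sketch below is an assumption-laden example: the `AgentMessage` fields, the `MAX_HOPS` ceiling, and the `encode`, `decode`, and `forward` helpers are hypothetical and not part of any agent framework. The hop counter is a simple guard against the repetitive loops described above.

```python
import json
from dataclasses import asdict, dataclass

MAX_HOPS = 8  # hypothetical ceiling; tune to the depth of your workflow


@dataclass
class AgentMessage:
    sender: str
    recipient: str
    intent: str
    payload: dict
    hops: int = 0


# Field names and the types they must have after deserialization.
REQUIRED_FIELDS = {
    "sender": str,
    "recipient": str,
    "intent": str,
    "payload": dict,
    "hops": int,
}


def encode(message):
    """Serialize a message to JSON for transmission."""
    return json.dumps(asdict(message))


def decode(raw):
    """Parse and validate a received message, raising ValueError on any problem."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"message is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("message must be a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected):
            raise ValueError(f"field {name!r} is missing or not a {expected.__name__}")
    if data["hops"] >= MAX_HOPS:
        raise ValueError("hop limit reached; agents may be stuck in a loop")
    return AgentMessage(**{name: data[name] for name in REQUIRED_FIELDS})


def forward(message, next_recipient):
    """Pass a message along, incrementing the hop counter."""
    return AgentMessage(
        sender=message.recipient,
        recipient=next_recipient,
        intent=message.intent,
        payload=message.payload,
        hops=message.hops + 1,
    )


original = AgentMessage("planner", "researcher", "summarize", {"url_count": 3})
restored = decode(encode(original))
print(restored)
```

Validating on receipt as well as on send means a malformed payload is rejected at the boundary between agents instead of surfacing later as a misinterpreted instruction.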

General Strategies for Troubleshooting AI Errors

Beyond the specific issues related to particular AI models or tools, several universal strategies can significantly improve your ability to diagnose and resolve **AI errors**.

- **Craft Clear and Concise Prompts:** The quality of the input directly influences the quality of the output. Reducing ambiguity in your prompts is fundamental. Clearly state your intent, provide necessary context, and avoid jargon or vague language. This improves the AI’s ability to interpret your request correctly and reduces the likelihood of errors stemming from miscommunication.

- **Understand Model Limitations:** Every AI model has its strengths, weaknesses, and specific limitations. Before encountering errors, it’s beneficial to research and be aware of these known restrictions. For instance, understanding Gemini’s limits on nested tool calls or Copilot’s scope of code context can help you avoid designing workflows that are bound to fail. Keeping abreast of official documentation and community discussions is key here.

- **Implement Systematic Debugging:** When integrating AI tools, APIs, or custom functions, implement robust logging. Record all requests made to the AI, its responses, and any intermediate data that is processed or generated. This detailed log provides a crucial trail for debugging when errors occur, allowing you to pinpoint exactly where and why a failure happened.

- **Leverage Community and Official Support:** You are rarely the first person to encounter a specific AI error. Actively utilize community forums, GitHub issue trackers, Stack Overflow, and official vendor documentation. Often, solutions to known bugs, workarounds, or best practices are shared by other developers. Resources like Google’s Developer Community, GitHub Issues, and the Gemini API Troubleshooting Guide are invaluable.

- **Document Recurring Issues:** As you resolve errors, document them. Maintain an internal knowledge base or troubleshooting FAQ for your team. This is especially useful for recurring problems that have established solutions. Having this documentation readily available can significantly expedite resolution times for frequently encountered issues, fostering efficiency and knowledge sharing.
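
The logging advice above can be applied with very little code. The following sketch wraps a tool function in a decorator that records each request, response, and failure using Python’s standard `logging` module. The `logged_tool` decorator and the `lookup_order` function are hypothetical examples, and the log format is only one reasonable choice.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("ai_tools")


def logged_tool(func):
    """Log the arguments, result, and duration of every call to a tool function."""

    @functools.wraps(func)
    def wrapper(**kwargs):
        started = time.perf_counter()
        # default=str keeps logging from failing on values JSON cannot encode.
        logger.info("tool=%s request=%s", func.__name__, json.dumps(kwargs, default=str))
        try:
            result = func(**kwargs)
        except Exception:
            elapsed = time.perf_counter() - started
            logger.exception("tool=%s failed after %.3fs", func.__name__, elapsed)
            raise
        elapsed = time.perf_counter() - started
        logger.info(
            "tool=%s response=%s elapsed=%.3fs",
            func.__name__,
            json.dumps(result, default=str),
            elapsed,
        )
        return result

    return wrapper


@logged_tool
def lookup_order(order_id):
    """Stand-in for a real tool; returns canned data."""
    return {"order_id": order_id, "status": "shipped"}


logging.basicConfig(level=logging.INFO)
result = lookup_order(order_id="A-100")
print(result)
```

Because the wrapper re-raises exceptions after logging them, it records failures without changing how the surrounding code handles them.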

Conclusion and Future Outlook

As we continue to integrate artificial intelligence into increasingly complex systems and workflows, encountering **AI errors** is not just probable, but an inherent and valuable part of the journey. Each error, whether it’s a subtle misinterpretation or a catastrophic function call failure, provides invaluable insights. These experiences teach us not only about the intricacies of how AI models process information and interact with external systems but also about the robustness and limitations of our own implementations. For **Gemini Pro function call tooling errors**, understanding the nuances of schema definition, the critical importance of correct message sequencing, and the methodical analysis of error responses are paramount for successful integration.

The path forward in AI error handling is one of continuous improvement and innovation. We can anticipate several key advancements: greater transparency in how AI models generate responses and identify errors, the development of more standardized interfaces and protocols for AI tools to ensure interoperability, and the creation of more sophisticated debugging tools that can help developers diagnose complex issues with greater speed and accuracy. As AI technology matures, so too will our ability to manage and resolve the challenges it presents, paving the way for even more reliable and powerful AI applications.

Frequently Asked Questions

Q1: What is the most common reason for Gemini Pro function call errors?

A: The most frequent culprits are incorrect function signatures and malformed JSON schemas for parameters. If the model can’t tell how to call your function or what data to pass, the call will fail.

Q2: Can AI errors be completely eliminated?

A: Currently, it’s unrealistic to expect AI errors to be completely eliminated. AI systems are complex and operate in dynamic environments. The goal is to minimize them through careful design, robust testing, and effective troubleshooting strategies.

Q3: How can I improve the accuracy of Copilot’s code suggestions?

A: To improve accuracy, provide more context in your code, use clear and descriptive comments, and ensure Copilot has access to relevant parts of your project. Sometimes, refining your prompt or the specific code block you’re asking for suggestions on can also help.

Q4: What should I do if Photoshop Generative Fill is causing artifacts?

A: Try refining your selection area to be more precise. Ensure your image is in RGB mode and that you’re not working with overly complex layer structures. Updating Photoshop and allocating more RAM to the application may also help.

Q5: How do I prevent AI agents from getting stuck in communication loops?

A: Implement clear communication protocols, use timeouts for agent responses, and ensure that each agent has a well-defined objective and a mechanism to break out of potential loops. Validation of messages between agents is also critical.

Q6: Is there a single place to check for known Gemini API issues?

A: While there isn’t one single definitive list for every possible issue, the official Gemini API Troubleshooting Guide, alongside community forums like Google’s developer discussion boards and GitHub issue trackers for related libraries (e.g., Langchain), are the best resources for staying updated on known problems and solutions.
