The AI Model Cannot Fulfill Request: Demystifying Gemini Pro Function Call Tooling Errors

Estimated reading time: 12 minutes

Key Takeaways

- Understanding the transformative power of AI, especially with advanced tools like Gemini Pro, is crucial for modern development.

- Encountering the error “AI model cannot fulfill request,” particularly with Gemini Pro function call tooling errors, is a common frustration for developers.

- This guide aims to demystify these errors, offering actionable solutions for both specific Gemini Pro issues and broader LLM tool integration issues.

- We will also touch upon common challenges in areas like Perplexity AI troubleshooting to provide a comprehensive perspective.

- By the end, you’ll understand why your AI model cannot fulfill a request and have the tools to resolve these issues effectively.

Table of contents

- The AI Model Cannot Fulfill Request: Demystifying Gemini Pro Function Call Tooling Errors

- Key Takeaways

- Understanding Gemini Pro Function Call Tooling Errors

- What are Function Calls in Gemini Pro?

- Common Scenarios for Gemini Pro Function Call Tooling Errors

- Unpacking the Root Causes of AI Errors

- Safety Checks and Content Filtering

- Tool Configuration Issues

- Message Structure Problems

- API and Compatibility Issues

- Comprehensive Troubleshooting for Gemini Pro Function Call Tooling Errors

- Solution 1: Validate Tool Definitions and Formatting

- Solution 2: Check Message Sequencing

- Solution 3: Review Safety Settings

- Solution 4: Examine Tool Response Formatting

- Solution 5: Use Alternative Approaches When Needed

- Solution 6: Monitor for Model-Specific Issues

- Navigating Perplexity AI Troubleshooting and Broader LLM Tool Integration Issues

- Perplexity AI Troubleshooting Specifics

- Addressing Common LLM Tool Integration Issues

- Best Practices for Seamless AI and Tool Integration

- Clear and Explicit Tool Declarations

- Comprehensive Testing

- Robust Error Handling

- Maintain Up-to-Date Documentation

- Monitor and Log Function Calls

- When and Where to Seek Further Assistance

- Official Documentation

- Developer Communities

- GitHub Issues

- Support Channels

- Concluding Thoughts: Mastering AI Tool Integration

The landscape of artificial intelligence is evolving at an unprecedented pace, with models like Gemini Pro leading the charge in integrating complex functionalities. These advancements promise to unlock new levels of automation and efficiency. However, as with any cutting-edge technology, developers often encounter hurdles. One of the most perplexing is an AI model that cannot fulfill a request, especially when the request depends on Gemini Pro’s function calling (tool use) capabilities. These Gemini Pro function call tooling errors can halt development progress and lead to significant frustration. This post is dedicated to demystifying these issues, providing a clear roadmap to understanding and resolving them, and helping you integrate AI tools more effectively. We’ll also briefly touch upon related challenges, such as Perplexity AI troubleshooting, to offer a broader perspective on common AI integration pitfalls. Our goal is to help you understand why your AI model cannot fulfill a request and to equip you with practical solutions for smoother AI integration.

Understanding Gemini Pro Function Call Tooling Errors

What are Function Calls in Gemini Pro?

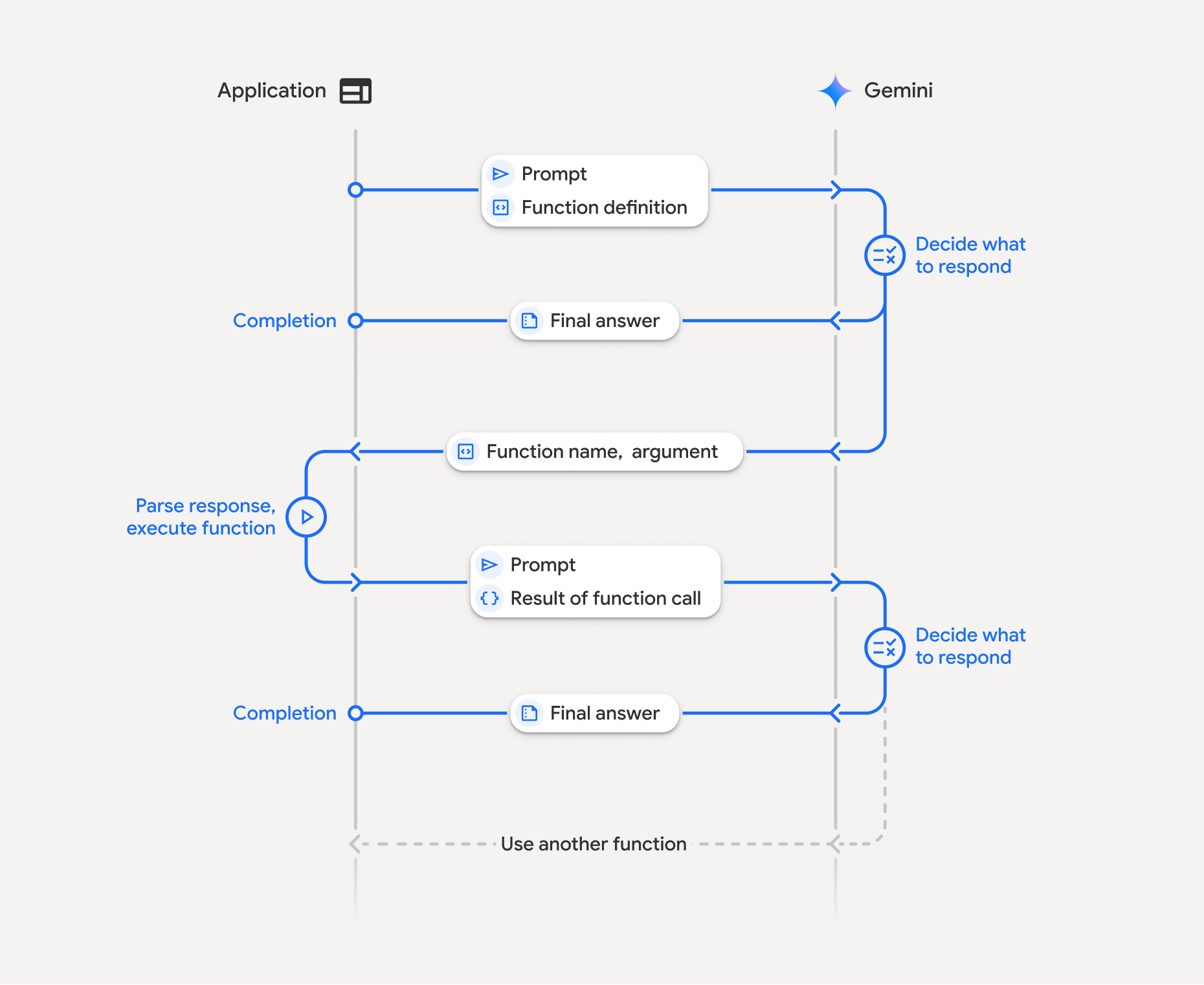

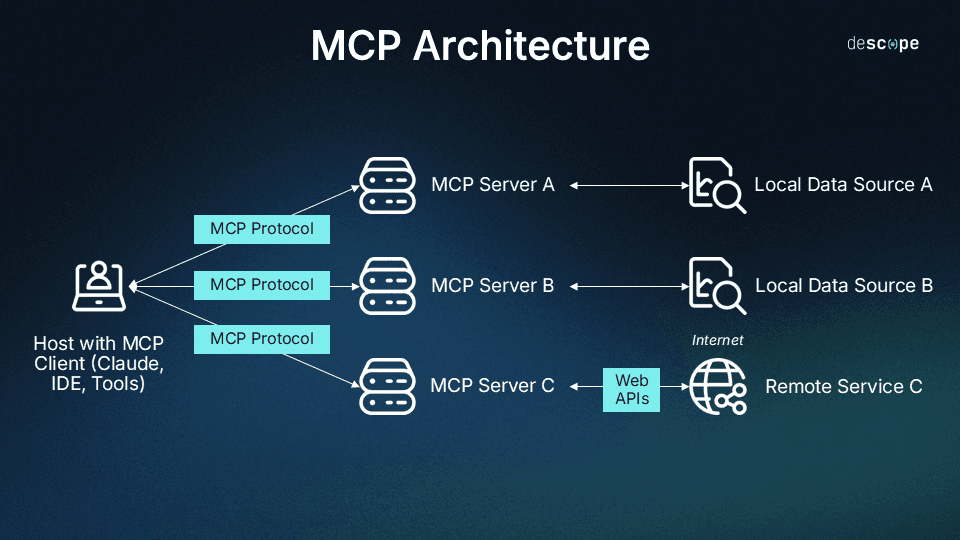

Function calling is a powerful feature that allows large language models (LLMs) like Gemini Pro to interact with external systems and perform actions beyond simple text generation. Essentially, it enables the AI to understand when a user’s request requires calling a specific function defined by the developer. The AI can then extract the necessary parameters from the user’s prompt and provide them in a structured format that your application can use to execute the function. This bridges the gap between natural language understanding and programmatic execution, allowing for dynamic and interactive AI applications.
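To make that round trip concrete, here is a minimal sketch of the application side. It assumes the model's function call has already been parsed into a plain dict with `name` and `args` keys. That shape is modeled loosely on the `functionCall` part in Gemini API responses, so check the current docs for the exact structure. The returned dict mirrors a `functionResponse` part. The `get_current_weather` handler and its canned value are hypothetical stand-ins for a real implementation.

```python
from typing import Any, Callable


# Hypothetical tool implementation; a real one would call a weather service.
def get_current_weather(location: str, unit: str = "celsius") -> dict[str, Any]:
    return {"location": location, "temperature": 21, "unit": unit}


# Registry mapping the declared function names to Python callables.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "getCurrentWeather": get_current_weather,
}


def dispatch(function_call: dict[str, Any]) -> dict[str, Any]:
    """Execute the function the model asked for and wrap the result for the reply turn."""
    name = function_call["name"]
    args = function_call.get("args", {})
    if name not in TOOLS:
        # An unknown name usually means the declarations and the registry have drifted apart.
        return {"name": name, "response": {"error": f"unknown function: {name}"}}
    return {"name": name, "response": TOOLS[name](**args)}


# The structured call the model might emit for "What's the weather in Paris?"
call = {"name": "getCurrentWeather", "args": {"location": "Paris, France"}}
print(dispatch(call))
```

The model never runs your code itself. It only proposes a name and arguments, and your application decides whether and how to execute them.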

Common Scenarios for Gemini Pro Function Call Tooling Errors

Despite the elegance of function calling, developers frequently run into issues. A particularly common and frustrating error message is:

“I am sorry, I cannot fulfill this request. The available tools lack the desired functionality.”

This message can appear even when you are confident that your tools are correctly configured and should possess the capabilities to handle the request. This often occurs when trying to perform tasks that seem straightforward, such as file operations. For instance, developers have reported instances where Gemini Pro claims an inability to perform actions like file editing, despite the tools being set up appropriately. This can manifest as the model stating that it cannot make changes, or worse, providing an empty response or failing to interpret the results of tool outputs, especially with newer model versions. These inconsistencies can be particularly baffling and lead to significant debugging efforts.

Specifically, these issues have been noted in contexts like:

- When Gemini Pro returns the frustrating error message despite tools appearing to be set up correctly for a request that should be within their scope. Source.

- When the model incorrectly claims it cannot perform basic tasks like file editing, even when the setup seems correct, leading users to conclude that Gemini Pro’s tool functionality is unreliable. Source.

- Instances where the model provides empty responses or fails to properly interpret the output from tool execution, especially when using advanced or newer iterations of the model. Source.

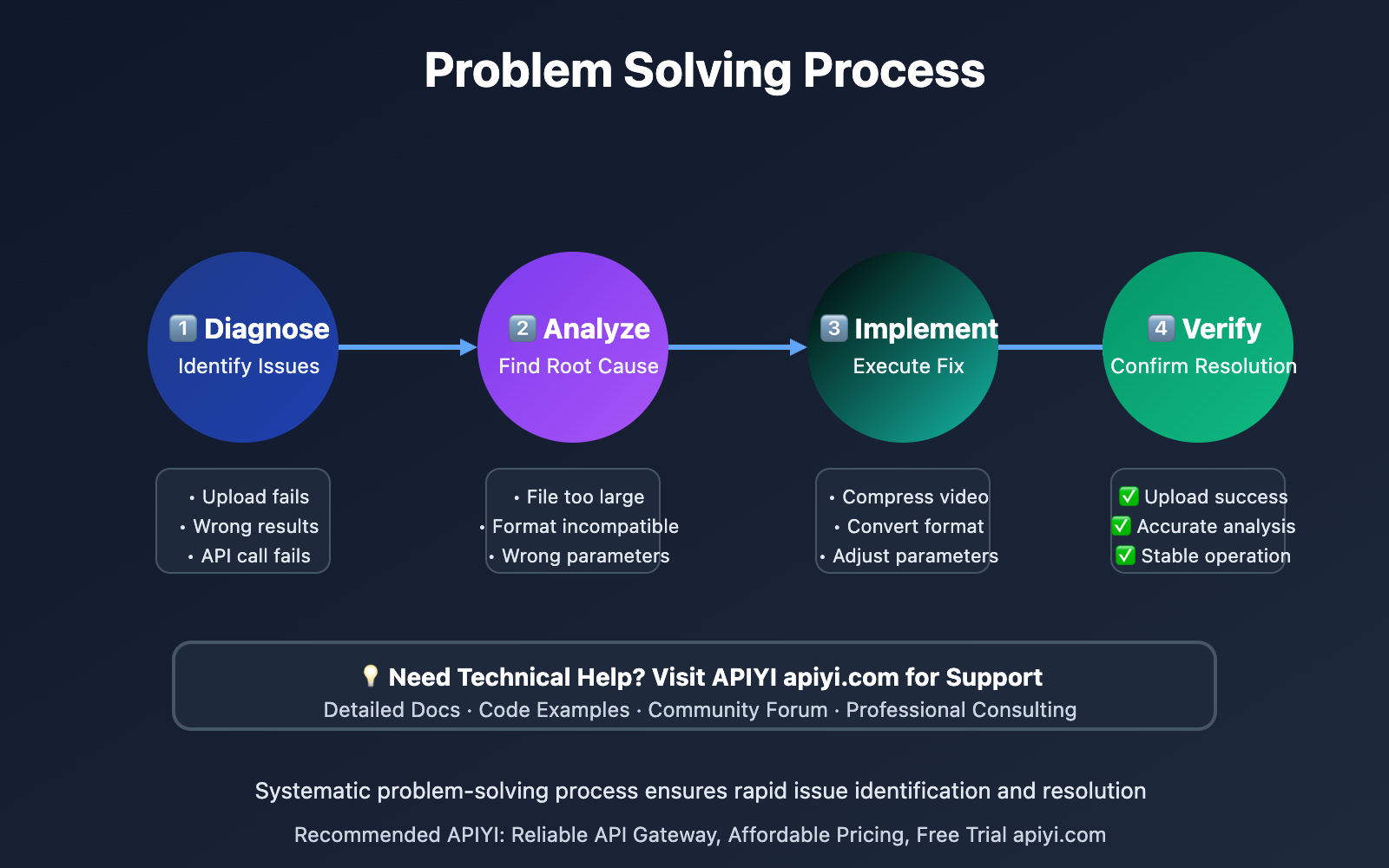

Unpacking the Root Causes of AI Errors

Safety Checks and Content Filtering

One of the primary reasons an AI model might refuse to execute a function call or return an empty response is its built-in safety mechanisms. LLMs are designed with robust safety filters to prevent them from generating harmful, unethical, or inappropriate content. If a prompt or the intended function call is perceived to violate these safety guidelines, the model might default to a refusal, sometimes without a clear explanation, simply stating it cannot fulfill the request. This can lead to empty responses or outright errors, even if the request itself seems benign to the developer. Source.

Tool Configuration Issues

The way tools are defined and configured is critical for successful function calling. Errors in this area are very common and can lead to the AI being unable to understand or utilize the tools provided. Key issues include:

- Malformed Function Declarations: The function declarations themselves must adhere to a strict schema. Missing essential fields like the `name` of the function, a clear `description` that explains its purpose, or the `parameters` it accepts can cause the AI to fail.

- Invalid Parameter Definitions: Parameters within the function declaration must be correctly formatted, typically adhering to JSON schema specifications. Incorrect JSON syntax, mismatched data types, or improperly defined `properties` can all lead to the AI being unable to interpret the function’s requirements.

- Missing Required Fields: Every parameter, whether optional or required, needs a complete definition, including its type and any enumerations or constraints. In addition, every parameter the function cannot run without must be listed as required. Leaving any of these out can render the tool unusable by the AI.

These configuration pitfalls are a significant contributor to LLM tool integration issues. Source.

Message Structure Problems

The order and format of messages within a conversation thread are also important for function calling to work correctly. Two common issues arise here:

- Incorrect Initial Message: A critical constraint is that tool messages (messages indicating a function call or its result) cannot be the very first message in a conversation. The AI needs an initial user prompt or an assistant’s response to establish context before it can process tool-related messages. Starting a conversation with a tool call will often result in an error. Source.

- Ambiguous Tool Response Formatting: When the AI calls a tool, your application needs to send the tool’s response back to the AI in a specific, expected format. If this response is malformed, uses incorrect data types, or is not properly structured as valid JSON, the AI may fail to interpret it correctly, leading to task failure or misunderstandings. This ambiguity can be a significant hurdle in the flow of interaction. Source.

API and Compatibility Issues

The rapidly evolving nature of AI models means that updates can sometimes introduce unexpected behaviors. When a model like Gemini Pro is updated to a new version, such as Gemini 2.5 Pro, regressions can occur. These regressions might make previously reliable tool functionality unstable or erratic, so tools that worked perfectly with an older version can suddenly start failing. Furthermore, ensuring compatibility between the specific implementation of your tools and the model’s expectations is paramount. Differences in how APIs are exposed or how data is handled can create friction points that result in function call failures.

This can be particularly frustrating as it highlights how model updates can impact tool functionality. Source.

Comprehensive Troubleshooting for Gemini Pro Function Call Tooling Errors

Solution 1: Validate Tool Definitions and Formatting

The cornerstone of successful function calling lies in meticulously defining your tools. Ensure that each tool declaration is complete and accurate. A properly formatted tool definition should include:

- `name`: A clear, concise, and unique identifier for the function.

- `description`: An unambiguous explanation of what the function does, its purpose, and when it should be used. This is crucial for the AI to understand the tool’s applicability.

- `parameters`: This section defines the inputs the function expects. It must be a valid JSON schema, including:

  - `type`: `"object"`, because the arguments are passed as named properties of a single object.

  - `properties`: An object where each key is a parameter name, and its value is another object defining the parameter’s type (e.g., `"string"`, `"number"`, `"boolean"`, `"object"`), description, and any constraints (like enums).

  - `required`: An array listing the names of all parameters that *must* be provided for the function to be called.

Example of a well-formatted tool declaration:

```json
{
  "name": "getCurrentWeather",
  "description": "Get the current weather in a given location",
  "parameters": {
    "type": "object",
    "properties": {
      "location": {
        "type": "string",
        "description": "The city and state, e.g., San Francisco, CA"
      },
      "unit": {
        "type": "string",
        "enum": ["celsius", "fahrenheit"],
        "description": "The temperature unit to use for the weather"
      }
    },
    "required": ["location"]
  }
}
```

Carefully review every field: make sure it is present, has the correct type, and is properly formatted JSON. Also check that all required fields are explicitly listed. Even a small syntax error can prevent the AI from recognizing and using the tool.
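You can automate these checks before a declaration ever reaches the model. The sketch below is a hand-rolled structural check written for this post, not an official validator. It flags:

- a missing `name` or `description`;
- a `parameters` block that is not object-typed;
- a property with a missing or unknown `type`;
- a `required` entry that is never defined.

Syntax errors surface earlier, because `json.loads` raises `json.JSONDecodeError` on malformed input. A full JSON Schema validator would be more thorough.

```python
import json
from typing import Any

# JSON types accepted for parameter schemas in this sketch.
ALLOWED_TYPES = {"string", "number", "integer", "boolean", "object", "array"}


def validate_declaration(decl: dict[str, Any]) -> list[str]:
    """Return a list of problems found in a function declaration (empty if none)."""
    problems: list[str] = []
    for field in ("name", "description"):
        value = decl.get(field)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or empty '{field}'")

    params = decl.get("parameters")
    if params is None:
        return problems  # a function that takes no arguments may omit parameters
    if not isinstance(params, dict):
        return problems + ["'parameters' must be an object"]
    if params.get("type") != "object":
        problems.append("parameters.type should be 'object'")

    props = params.get("properties", {})
    for pname, pschema in props.items():
        ptype = pschema.get("type")
        if ptype not in ALLOWED_TYPES:
            problems.append(f"property '{pname}' has invalid type {ptype!r}")
        if "enum" in pschema and not isinstance(pschema["enum"], list):
            problems.append(f"property '{pname}' enum must be a list")
        if not pschema.get("description"):
            problems.append(f"property '{pname}' has no description")

    for req in params.get("required", []):
        if req not in props:
            problems.append(f"required parameter '{req}' is not defined in properties")
    return problems


# A deliberately broken declaration: "unit" is required but never defined.
raw = (
    '{"name": "getCurrentWeather",'
    ' "description": "Get the current weather in a given location",'
    ' "parameters": {"type": "object",'
    ' "properties": {"location": {"type": "string", "description": "The city and state"}},'
    ' "required": ["location", "unit"]}}'
)
print(validate_declaration(json.loads(raw)))
# -> ["required parameter 'unit' is not defined in properties"]
```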

Solution 2: Check Message Sequencing

As highlighted earlier, the order of messages in your conversation history is critical. Remember that tool messages (those related to function calls and their outputs) cannot be the initial message. Always ensure that the conversation begins with a standard user message or an assistant response. This establishes the necessary context for the AI to correctly process subsequent tool-related interactions. Referencing the documentation or community discussions can provide clarity on the expected message flow. Source.
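You can check the ordering rule mechanically. The sketch below uses a hypothetical, provider-neutral message shape (`kind` and `name` keys only), so you would first map your SDK’s real message objects onto it. It flags a conversation that starts with a tool message, and a function response that does not answer the most recent function call. For simplicity it assumes one call is answered at a time. Parallel calls would need a small queue instead of a single `pending` name.

```python
from typing import Any, Optional

# Hypothetical message shape used only for this sketch:
#   {"kind": "text" | "function_call" | "function_response", "name": <function name>}


def check_sequence(messages: list[dict[str, Any]]) -> list[str]:
    """Flag ordering problems in a conversation that uses function calls."""
    if not messages:
        return ["conversation is empty"]
    problems: list[str] = []
    if messages[0]["kind"] != "text":
        problems.append("message 0: conversation must start with a regular text message")

    pending: Optional[str] = None  # name of the most recent unanswered function call
    for i, msg in enumerate(messages):
        if msg["kind"] == "function_call":
            pending = msg.get("name")
        elif msg["kind"] == "function_response":
            if pending is None:
                problems.append(f"message {i}: function response without a preceding call")
            elif msg.get("name") != pending:
                problems.append(
                    f"message {i}: response for {msg.get('name')!r}, but the call was {pending!r}"
                )
            pending = None
    return problems


history = [
    {"kind": "text"},  # user: "What's the weather in Oslo?"
    {"kind": "function_call", "name": "getCurrentWeather"},
    {"kind": "function_response", "name": "getCurrentWeather"},
    {"kind": "text"},  # the model's final answer
]
print(check_sequence(history))
# -> []
```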

Solution 3: Review Safety Settings

If your AI responses are unexpectedly empty or lead to refusals, consider whether your prompts or the actions your tools are trying to perform might be triggering the AI’s safety filters. Test your setup with simpler, unambiguous prompts that are clearly within acceptable use policies. If these simpler prompts work, gradually introduce more complexity to pinpoint what might be causing the safety system to intervene. Sometimes, rephrasing a prompt or adjusting the tool’s description to be more explicit about its non-harmful intent can resolve the issue.
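Before rewording anything, first confirm that a safety filter actually intervened. The sketch below inspects a response that has already been parsed into a dict. The field names (`promptFeedback.blockReason`, `candidates[].finishReason`, `safetyRatings[].blocked`) are modeled on the Gemini REST `generateContent` response. Treat them as assumptions and verify them against the current API reference, since SDK objects may expose them differently.

```python
from typing import Any


def diagnose_response(resp: dict[str, Any]) -> str:
    """Explain why a parsed generateContent-style response has no usable output."""
    block = resp.get("promptFeedback", {}).get("blockReason")
    if block:
        return f"prompt blocked before generation: {block}"

    candidates = resp.get("candidates", [])
    if not candidates:
        return "no candidates returned"

    cand = candidates[0]
    reason = cand.get("finishReason", "UNKNOWN")
    if reason == "SAFETY":
        flagged = [r.get("category") for r in cand.get("safetyRatings", []) if r.get("blocked")]
        return f"candidate stopped by safety filters; flagged categories: {flagged or 'not reported'}"

    parts = cand.get("content", {}).get("parts", [])
    if not parts:
        return f"empty content (finishReason={reason})"
    return f"ok (finishReason={reason}, {len(parts)} part(s))"


blocked = {
    "candidates": [{
        "finishReason": "SAFETY",
        "safetyRatings": [{"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "blocked": True}],
    }]
}
print(diagnose_response(blocked))
```

If the diagnosis points to something other than safety, such as an empty `STOP` response, the cause is more likely in the tool definitions or message structure than in the filters.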

Solution 4: Examine Tool Response Formatting

When your application executes a tool called by the AI, the response you send back must be perfectly formatted. This typically means returning a JSON object that accurately reflects the tool’s output. Pay close attention to:

- Valid JSON: Ensure the entire response is valid JSON. Use online JSON validators to check for syntax errors.

- Correct Data Types: Each value should use the JSON type that fits what it represents and that the tool’s description leads the AI to expect (e.g., numbers should be numeric, not strings).

- Proper Encoding: Special characters within strings should be properly escaped.

Inconsistencies here can lead the AI to misinterpret the results, causing the overall task to fail. Source.
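A small normalization step before sending results back handles most of these points. The sketch below recursively converts a tool result into plain JSON types:

- dates become ISO 8601 strings;
- NaN and Infinity, which are not valid JSON, become `null`;
- a non-object result is wrapped in an object;
- `json.dumps(..., allow_nan=False)` is used as a final guard.

Escaping of quotes and newlines is left to the `json` module. The `"result"` wrapper key and the sample data are assumptions made for illustration.

```python
import json
import math
from datetime import datetime, timezone
from typing import Any


def to_json_safe(value: Any) -> Any:
    """Recursively convert a tool result into plain JSON-compatible types."""
    if isinstance(value, dict):
        return {str(k): to_json_safe(v) for k, v in value.items()}
    if isinstance(value, (list, tuple)):
        return [to_json_safe(v) for v in value]
    if isinstance(value, datetime):
        return value.isoformat()  # dates travel as ISO 8601 strings
    if isinstance(value, float) and not math.isfinite(value):
        return None  # NaN and Infinity are not valid JSON
    if value is None or isinstance(value, (str, int, float, bool)):
        return value  # already a JSON type; json.dumps escapes quotes and newlines
    return str(value)  # last resort for unknown objects


def build_tool_response(result: Any) -> dict[str, Any]:
    """Wrap a tool result as a JSON object that is guaranteed to serialize."""
    payload = to_json_safe(result)
    if not isinstance(payload, dict):
        payload = {"result": payload}  # keep the top level an object
    json.dumps(payload, allow_nan=False)  # raises if anything invalid slipped through
    return payload


raw_result = {
    "temperature": 21.5,  # numeric, not the string "21.5"
    "observed_at": datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
    "note": 'Station reported "light rain"\nin the afternoon',
}
print(json.dumps(build_tool_response(raw_result)))
```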

Solution 5: Use Alternative Approaches When Needed

In situations where a specific tool’s functionality remains unreliable, even after thorough troubleshooting, consider implementing workarounds. For example, if the AI struggles with direct file editing tools, you might use it to generate commands for standard command-line utilities (like `sed` or `awk`) and then execute those commands separately. This can be a pragmatic approach to achieve the desired outcome while the AI’s direct tool integration is being refined or addressed. Source.
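If you take the command-generation route, do not paste the model’s suggestion into a shell. The sketch below splits the suggested command with `shlex` and checks the program name against an allowlist. The `{"sed", "awk"}` allowlist is an assumption for this example. The approved argument list is then run without a shell. This is a sanity gate, not a sandbox: GNU `sed`’s `e` command and `awk`’s `system()` can still launch other programs. Review the commands, or run them in an isolated environment, before trusting them with real files.

```python
import shlex
import subprocess

# Assumption for this sketch: only these utilities may be run on the model's behalf.
ALLOWED_COMMANDS = {"sed", "awk"}
SHELL_OPERATORS = {";", "|", "&&", "||", ">", ">>", "<"}


def parse_suggested_command(command: str) -> list[str]:
    """Split a model-suggested command and reject anything outside the allowlist."""
    argv = shlex.split(command)
    if not argv:
        raise ValueError("empty command")
    if argv[0] not in ALLOWED_COMMANDS:
        raise ValueError(f"command not allowed: {argv[0]}")
    # Without a shell these would be passed as literal arguments, which is almost
    # never what the model intended, so refuse them instead of guessing.
    if any(tok in SHELL_OPERATORS for tok in argv[1:]):
        raise ValueError("shell operators are not supported")
    return argv


def run_suggested_command(command: str) -> str:
    """Run an approved command without a shell and return its standard output."""
    argv = parse_suggested_command(command)
    done = subprocess.run(argv, capture_output=True, text=True, check=True)
    return done.stdout


print(parse_suggested_command("sed -i 's/colour/color/g' notes.txt"))
# -> ['sed', '-i', 's/colour/color/g', 'notes.txt']
```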

Solution 6: Monitor for Model-Specific Issues

Stay informed about the latest updates and known issues related to the specific version of Gemini Pro (or any LLM) you are using. Model providers often release updates that can introduce changes, bug fixes, or even new issues. Monitor official release notes, developer forums, and community discussions. If you suspect a bug in a recent model update is causing your tool issues, you might consider temporarily reverting to a previously stable version of the model until the issue is resolved. This proactive approach can save significant debugging time.

Navigating Perplexity AI Troubleshooting and Broader LLM Tool Integration Issues

Perplexity AI Troubleshooting Specifics

While Gemini Pro focuses on function calling for programmatic interaction, other AI tools like Perplexity AI present different integration challenges. Users of Perplexity AI might encounter issues such as search results that are not entirely accurate or a perceived difficulty in handling highly complex queries. To address these:

- Prompt Engineering: Framing your questions for Perplexity AI is key. Be specific, provide context, and clearly state what kind of information you are seeking. For instance, instead of “Tell me about AI,” try “Explain the current state of generative AI in natural language processing, citing recent advancements.” This helps Perplexity’s underlying LLM deliver more precise and relevant answers.

- Understanding the Underlying AI: Perplexity AI is powered by advanced LLMs. Recognizing this means that many principles of effective interaction with LLMs apply. Understanding its capabilities and limitations, much like with Gemini Pro, will help you avoid common pitfalls and get better results. Source.

Addressing Common LLM Tool Integration Issues

Beyond the specifics of Gemini Pro, many **LLM tool integration issues** are universal. These can include:

- API Mismatches: Ensuring that the API endpoints your tools interact with are correctly implemented and compatible with the model’s expectations.

- Authentication Problems: Incorrect API keys, expired tokens, or improper authentication headers can prevent tools from functioning. Thoroughly check your credentials and authentication methods. Source.

- Data Format Inconsistencies: Similar to tool response formatting, if the data being sent to or received from an external API is not in the expected format, it can cause errors. This includes issues with JSON, XML, or other data structures.

General troubleshooting steps that apply broadly include:

- Verify API Keys and Credentials: Always double-check that your API keys are correct, active, and properly configured in your environment.

- Consult Documentation: Refer to the official documentation for both the LLM provider and the external tools/APIs you are integrating.

- Validate Data Structures: Ensure that the data being passed between the LLM, your application, and external services adheres to the expected schemas.

- Check Compatibility: Confirm that the versions of libraries, SDKs, and APIs you are using are compatible with each other and with the LLM. Source.
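A few of these checks can run automatically at startup, before any API call is made. The sketch below does two things:

- It reports a missing or whitespace-padded API key. The variable name `GEMINI_API_KEY` is an assumption, so use whatever name your client library actually reads.
- It reports installed package versions via the standard library’s `importlib.metadata`, so you can compare them with the versions the documentation expects.

```python
import os
from importlib import metadata
from typing import Mapping, Optional


def preflight(env: Mapping[str, str] = os.environ,
              required: tuple[str, ...] = ("GEMINI_API_KEY",)) -> list[str]:
    """Report missing or suspicious credentials before any API call is attempted."""
    problems = []
    for name in required:
        value = env.get(name, "")
        if not value.strip():
            problems.append(f"{name} is not set")
        elif value != value.strip():
            problems.append(f"{name} has leading/trailing whitespace (often a copy-paste error)")
    return problems


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


print(preflight())
print("google-genai:", installed_version("google-genai"))
```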

Best Practices for Seamless AI and Tool Integration

Clear and Explicit Tool Declarations

Write tool descriptions that are not just functional but also intuitive. Use clear language to explain what the tool does, its purpose, and the exact meaning and expected format of each parameter. Avoid jargon where possible. The more explicit and unambiguous your tool definitions are, the less likely the AI is to misunderstand them.

Comprehensive Testing

Before deploying your AI-integrated application, rigorously test all your tools. Use a diverse range of inputs, including edge cases, valid inputs, and expected invalid inputs. Simulate different conversation flows to ensure the AI correctly identifies when to call each tool and how to handle its responses. This proactive testing is crucial for identifying potential issues early.
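Part of this testing needs no model calls at all. You can check that each tool’s argument handling rejects inputs a model can plausibly produce: missing required values, values outside an enum, and invented parameter names. Below is a table-driven sketch using Python’s built-in `unittest`. `validate_weather_args` is a hypothetical helper for the `getCurrentWeather` declaration from Solution 1. Conversation-flow tests against the live model would sit on top of this.

```python
import unittest
from typing import Any


def validate_weather_args(args: dict[str, Any]) -> list[str]:
    """Hypothetical argument check for the getCurrentWeather tool."""
    errors = []
    location = args.get("location")
    if not isinstance(location, str) or not location.strip():
        errors.append("location must be a non-empty string")
    if args.get("unit", "celsius") not in ("celsius", "fahrenheit"):
        errors.append("unit must be 'celsius' or 'fahrenheit'")
    unknown = set(args) - {"location", "unit"}
    if unknown:
        errors.append(f"unexpected arguments: {sorted(unknown)}")
    return errors


class WeatherArgsTest(unittest.TestCase):
    # (arguments the model might send, whether they should be accepted)
    CASES = [
        ({"location": "San Francisco, CA"}, True),           # minimal valid call
        ({"location": "Oslo", "unit": "fahrenheit"}, True),   # optional argument supplied
        ({"location": "   "}, False),                         # edge case: blank string
        ({}, False),                                          # required argument missing
        ({"location": "Oslo", "unit": "kelvin"}, False),      # value outside the enum
        ({"location": "Oslo", "city": "Oslo"}, False),        # invented parameter name
    ]

    def test_cases(self) -> None:
        for args, should_pass in self.CASES:
            with self.subTest(args=args):
                self.assertEqual(validate_weather_args(args) == [], should_pass)
```

Save the file with a `test_` prefix and run `python -m unittest` from its directory; discovery picks up `test*.py` files by default.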

Robust Error Handling

Implement comprehensive error handling in your application logic. When an AI tool call fails, your application should be able to gracefully handle the situation. This might involve providing a user-friendly message, attempting a fallback mechanism, or logging the error for later review. Don’t let tool failures bring down your entire application.
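One way to apply this is a wrapper that never lets a tool exception escape. It logs the failure, tries an optional fallback, and otherwise returns a structured error you can send back to the model as the tool result, so the model can explain the problem instead of stalling. The `ok`/`error`/`fallback` keys and the two weather functions below are hypothetical, chosen for illustration.

```python
import logging
from typing import Any, Callable, Optional

logger = logging.getLogger("tool_calls")


def safe_execute(fn: Callable[..., Any], args: dict[str, Any],
                 fallback: Optional[Callable[..., Any]] = None) -> dict[str, Any]:
    """Run a tool; on failure try an optional fallback, then return a structured error."""
    try:
        return {"ok": True, "result": fn(**args)}
    except Exception as exc:  # broad on purpose: one failing tool must not crash the app
        logger.exception("tool %s failed", fn.__name__)
        if fallback is not None:
            try:
                return {"ok": True, "result": fallback(**args), "fallback": True}
            except Exception:
                logger.exception("fallback for %s also failed", fn.__name__)
        # Sent back to the model as the tool result so it can tell the user what went wrong.
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


# Hypothetical tools for illustration.
def live_weather(location: str) -> dict[str, Any]:
    raise TimeoutError("weather service timed out")


def cached_weather(location: str) -> dict[str, Any]:
    return {"location": location, "temperature": None, "stale": True}


print(safe_execute(live_weather, {"location": "Oslo"}, fallback=cached_weather))
print(safe_execute(live_weather, {"location": "Oslo"}))
```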

Maintain Up-to-Date Documentation

Ensure that your tool declarations (the descriptions and parameter definitions you provide to the AI) are always synchronized with the actual implementation of your tools and APIs. If you update a parameter, change its type, or modify the function’s behavior, update its definition accordingly. Outdated documentation is a common source of integration failures.
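If your tools are Python functions, you can detect drift automatically by comparing each declaration with the function’s real signature via `inspect.signature`. The sketch below reports:

- parameters that are declared but not accepted by the function;
- parameters the function accepts but that are not declared;
- parameters the code requires but the declaration marks as optional.

The renamed `units` parameter is a made-up example of the kind of change that silently breaks a tool.

```python
import inspect
from typing import Any, Callable

ARG_KINDS = (inspect.Parameter.POSITIONAL_OR_KEYWORD, inspect.Parameter.KEYWORD_ONLY)


def declaration_drift(fn: Callable[..., Any], decl: dict[str, Any]) -> list[str]:
    """List mismatches between a declaration's parameters and the function's signature."""
    params = decl.get("parameters", {})
    declared = set(params.get("properties", {}))
    declared_required = set(params.get("required", []))

    sig = inspect.signature(fn)
    accepted = {n: p for n, p in sig.parameters.items() if p.kind in ARG_KINDS}
    required_in_code = {n for n, p in accepted.items() if p.default is inspect.Parameter.empty}

    problems = [f"declared but not accepted by {fn.__name__}: {n}"
                for n in sorted(declared - set(accepted))]
    problems += [f"accepted by {fn.__name__} but not declared: {n}"
                 for n in sorted(set(accepted) - declared)]
    problems += [f"required in code but optional in declaration: {n}"
                 for n in sorted((required_in_code & declared) - declared_required)]
    return problems


# The implementation renamed "unit" to "units", but the declaration was never updated.
def get_current_weather(location: str, units: str = "celsius") -> dict[str, Any]:
    return {"location": location, "units": units}


decl = {
    "name": "getCurrentWeather",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string"}, "unit": {"type": "string"}},
        "required": ["location"],
    },
}
print(declaration_drift(get_current_weather, decl))
```

Running a check like this in CI catches mismatches at the moment someone edits the function rather than when the model starts failing.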

Monitor and Log Function Calls

Implement detailed logging for all function call attempts. Record which tools are called, the parameters provided, the responses received, and any errors that occur. This logging is invaluable for debugging and for understanding patterns of failure. Analyzing these logs can help you identify recurring issues, optimize tool usage, and improve the overall reliability of your AI integration.
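A decorator is a low-effort way to get this logging for every tool at once. The sketch below writes one JSON line per call, containing the tool name, arguments, result or error, and duration in milliseconds, so the logs are easy to grep or load for analysis. It accepts keyword arguments only, which matches how function-call arguments arrive as named values. The log format and the `get_current_weather` body are assumptions for illustration.

```python
import functools
import json
import logging
import time
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("function_calls")
log.setLevel(logging.INFO)


def logged_tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Log the tool name, arguments, result or error, and duration of every call."""
    @functools.wraps(fn)
    def wrapper(**kwargs: Any) -> Any:
        start = time.perf_counter()
        entry: dict[str, Any] = {"tool": fn.__name__, "args": kwargs}
        try:
            result = fn(**kwargs)
        except Exception as exc:
            entry.update(error=repr(exc), ms=round((time.perf_counter() - start) * 1000, 1))
            log.error(json.dumps(entry, default=str))
            raise
        entry.update(result=result, ms=round((time.perf_counter() - start) * 1000, 1))
        log.info(json.dumps(entry, default=str))
        return result
    return wrapper


@logged_tool
def get_current_weather(location: str, unit: str = "celsius") -> dict[str, Any]:
    # Hypothetical tool body returning a canned value.
    return {"location": location, "temperature": 21, "unit": unit}


get_current_weather(location="Lisbon")
```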

When and Where to Seek Further Assistance

Official Documentation

Always start with the official documentation provided by the AI model provider. For Gemini, the Gemini API troubleshooting guide and the function calling documentation are excellent resources for understanding expected behavior and common issues.

Developer Communities

Engage with other developers who are using similar tools. Platforms like GitHub discussions, Google Developer forums, and Stack Overflow are invaluable for finding solutions to common problems and sharing your own experiences. For instance, discussions on GitHub often contain insights into subtle bugs or best practices.

GitHub Issues

If you are using open-source libraries or frameworks (like LangChain) for your AI integration, check the `issues` section of their respective GitHub repositories. Many common problems have already been reported and, in some cases, solved by the community or maintainers.

Support Channels

For platform-specific issues or if you are using a managed service, don’t hesitate to reach out to the official developer support channels. They can often provide guidance on issues that are specific to the platform’s implementation or your account.

Concluding Thoughts: Mastering AI Tool Integration

The error message “AI model cannot fulfill request” can be daunting, but as we’ve explored, most Gemini Pro function call tooling errors and other LLM tool integration issues are systematic. By understanding the underlying causes—ranging from intricate tool configurations and message sequencing to safety filters and API compatibility—you can approach these challenges with a structured troubleshooting mindset. Source. Mastering AI integration means paying close attention to the details: validating your tool definitions, ensuring correct message structures, and being aware of the model’s safety protocols. The field of AI is continually advancing, with ongoing efforts to enhance reliability and usability. Embracing these developments with a proactive, problem-solving attitude will be key to harnessing the full potential of AI in your applications. Remember, persistence and a systematic approach are your greatest allies in navigating the complexities of AI tool integration.