
The Frustration of Unfulfilled AI Requests

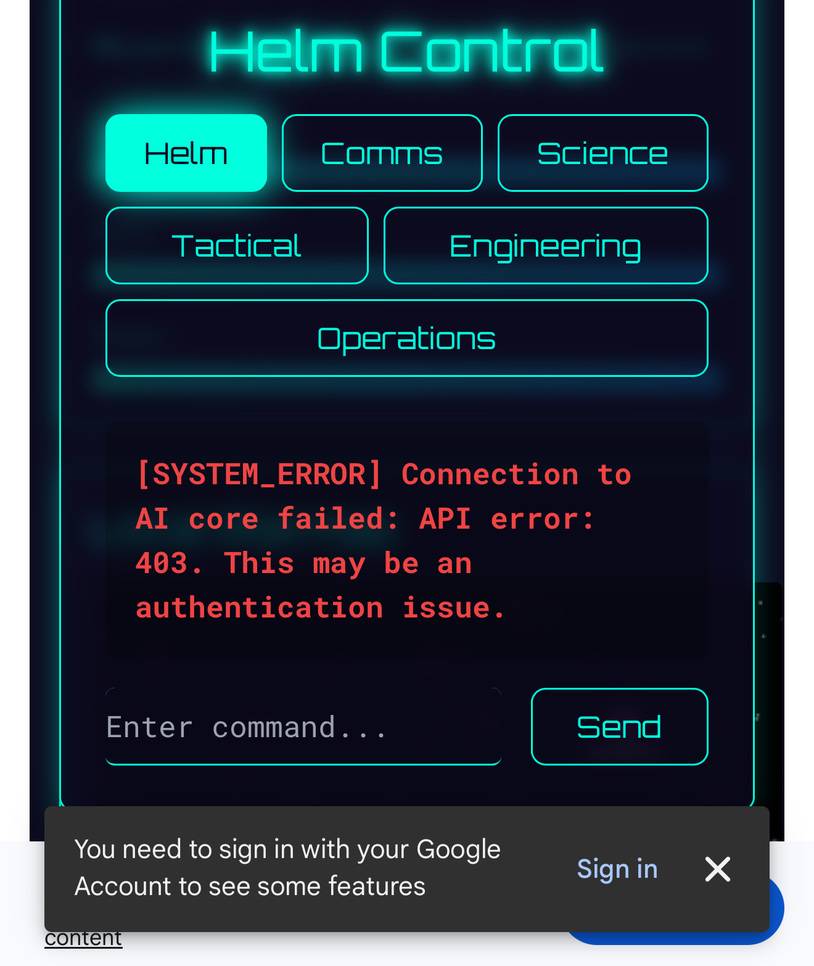

We’ve all been there. You meticulously craft a request for your AI model, expecting it to perform a specific task or interact with an external tool. Instead, you’re met with silence, an error message, or a response that completely misses the mark. This is the frustrating reality of encountering Gemini Pro function calling errors.

Function calling in models like Gemini Pro is a powerful capability that acts as a bridge between language and action. You describe the functions your application offers (a name, a description, and a parameter schema). When a request calls for one, the model returns a structured call, consisting of the function name plus JSON arguments, and your code then executes it against external tools, services, or actions. It’s the mechanism by which AI moves from simply generating text to *doing* things. You can learn more about function calling in Vertex AI and its integration with platforms like Firebase.
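To make that division of labor concrete, here is a minimal Python sketch with no SDK dependency. It assumes a function declaration in the JSON shape the Gemini REST API uses (`name`, `description`, and an OpenAPI-style `parameters` object) and a model reply part carrying a `functionCall` with `name` and `args`. The `get_weather` tool, the `REGISTRY` mapping, and the canned reply are hypothetical; in a real integration the reply comes from the API.

```python
import json

# A hypothetical tool, described in the JSON shape Gemini function declarations use.
GET_WEATHER = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
        },
        "required": ["city"],
    },
}

def get_weather(city: str) -> dict:
    # Stand-in implementation; a real tool would query a weather service.
    return {"city": city, "celsius": 21}

# Map declared function names to the Python callables that implement them.
REGISTRY = {"get_weather": get_weather}

def dispatch(part: dict) -> dict:
    """Execute the function named in one model response part and return its result."""
    call = part.get("functionCall")
    if call is None:
        raise ValueError("response part contains no functionCall")
    name = call["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested unknown function {name!r}")
    return REGISTRY[name](**call.get("args", {}))

# A canned reply part, shaped like one entry of a generateContent response's parts list.
reply_part = json.loads('{"functionCall": {"name": "get_weather", "args": {"city": "Paris"}}}')
print(dispatch(reply_part))  # {'city': 'Paris', 'celsius': 21}
```

In a full round trip, the function's result would be sent back to the model as a function response so it can compose its final answer; that step is omitted here.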

The objective of this guide is to equip you to understand, diagnose, and ultimately resolve these vexing errors, so that your AI interactions are as seamless and productive as they should be and your requests are fulfilled as intended.

Understanding the General Landscape: Why AI Requests Sometimes Fail

Before we dive into the specifics of Gemini Pro, it’s essential to understand the broader reasons why AI models fail to fulfill requests. This foundational knowledge will help you diagnose a wider range of issues.

One of the most common culprits is improperly formatted requests. This can manifest in several ways:

- Incorrect Schemas: The structure you define for your functions, or the data you send, might not align with what the AI model expects. This includes missing required fields or using data types that are not supported.

- Missing Parameters: Essential information that the AI needs to perform a function might be omitted from the prompt or the function definition.

You can find more insights into these issues in the Gemini API troubleshooting guide, in community discussions such as one on LangchainJS, and in Firebase’s documentation on the general principles of function calling.
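Both failure modes can be caught before a call ever reaches your tool. The sketch below checks a model-proposed argument dictionary against a simple object schema, reporting missing required parameters, unexpected parameters, and type mismatches. The `validate_args` helper and the `PY_TYPES` mapping are our own illustrations, assuming JSON-Schema-style type names (compared case-insensitively); they are not part of any Gemini SDK.

```python
# Map JSON-Schema-style type names to the Python types json.loads produces.
PY_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}

def validate_args(schema: dict, args: dict) -> list[str]:
    """Return a list of problems found when checking args against an object schema."""
    problems = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required parameter: {name}")
    for name, value in args.items():
        if name not in properties:
            problems.append(f"unexpected parameter: {name}")
            continue
        expected = str(properties[name].get("type", "")).lower()
        py_type = PY_TYPES.get(expected)
        if py_type is None:
            problems.append(f"unsupported or missing type for {name}: {expected!r}")
        # bool is a subclass of int in Python, so reject it explicitly for numeric types.
        elif isinstance(value, bool) and expected in ("integer", "number"):
            problems.append(f"{name}: expected {expected}, got boolean")
        elif not isinstance(value, py_type):
            problems.append(f"{name}: expected {expected}, got {type(value).__name__}")
    return problems

# Hypothetical schema: "city" is required, "days" is an optional integer.
forecast_schema = {
    "type": "object",
    "properties": {"city": {"type": "string"}, "days": {"type": "integer"}},
    "required": ["city"],
}
print(validate_args(forecast_schema, {"days": "3"}))
# ['missing required parameter: city', 'days: expected integer, got str']
```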

Furthermore, unsupported operations or data types can lead to rejection. Generative AI models are constantly evolving, and while they are incredibly versatile, they have their limits. Complex or nested data structures, especially those involving advanced types or intricate relationships, might not be fully supported by all models or versions. This is particularly true when dealing with newer features or experimental capabilities. As noted in discussions about Gemini 2.5 Pro and complex schemas, intricate designs can be a stumbling block.

Model limitations and specific configuration requirements also play a significant role. Different models, even within the same family like Gemini, have varying capabilities and might require specific settings or versions to function correctly. For instance, some advanced features might only be available in the latest Pro versions, while older or lighter models might not support them. This is highlighted in discussions regarding Gemini 1.0 Pro Vision and function calling and the general considerations for function calling with Gemini.

We must also acknowledge the impact of safety and policy checks. AI models are designed with safety filters to prevent the generation of harmful, unethical, or inappropriate content. These filters can sometimes lead to unexpected failures or empty responses if a request, even inadvertently, triggers a policy violation. This is a common point of discussion on platforms like LangchainJS and is also covered in the Gemini API troubleshooting documentation.

Ultimately, troubleshooting generative AI errors is a skill that requires a methodical approach. It begins with a careful examination of error codes, response payloads, and, crucially, the official documentation. Understanding these general pitfalls provides a robust framework for tackling more specific issues.

Deep Dive: Diagnosing and Fixing Gemini Pro Function Calling Errors

Now, let’s home in on fixing Gemini Pro function calling errors specifically. While the general principles apply, Gemini Pro has particular nuances that often lead to function calling issues.

Several common causes have been identified:

- Complex or Nested Schemas: A significant challenge, particularly with models like Gemini 2.5 Pro, arises from overly complex function schemas. When these schemas involve deep nesting of objects, arrays with multiple defined properties, or extensive use of enumerations, the model can struggle to parse them correctly, leading to errors, often a 500 Internal Server Error. The advice here is clear: simplify your schema structures whenever possible. This often means flattening nested objects or breaking complex data into smaller, more manageable parts. An experience shared in a developer forum post is a prime example.

- Model Support Discrepancies: It’s not uncommon for different versions of Gemini to handle identical function calls with varying degrees of success. For instance, a function call that works flawlessly with Gemini 2.5 Pro might fail with Gemini 2.0 Flash, or vice versa. This makes it critical to ensure that your function call definitions and your chosen model align. Always check the documentation for the specific model version you are using to confirm its support for function calling and any associated limitations. Issues like this are discussed in relation to complex schemas and in GitHub issues such as #843.

- Implementation Inconsistencies: Sometimes the issue isn’t the model itself but how it’s integrated into your application. In certain frameworks or use cases, such as LiveKit integrations or custom agents, the model might output plain text describing an attempted function call rather than a properly structured JSON object that your code can parse. This can happen when the bindings, or the way tool calls are handled, are not aligned with the model’s output. This is a recurring theme in discussions like #843 on GitHub.

- Input Data Limitations: Be aware of the input requirements of the specific Gemini model you’re using. For example, Gemini 1.0 Pro Vision, while powerful for multimodal tasks, has stricter requirements for input data and may reject function call schemas that don’t conform to its expected format. Always refer to the model’s documentation regarding supported input types and function calling capabilities.
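To put numbers on "too complex", a small walker can report how deeply a schema nests and how many enum values it carries. This is a sketch: `schema_stats` and `complexity_warnings` are hypothetical helpers, and the default thresholds (depth 3, 20 enum values) are arbitrary illustrations rather than documented Gemini limits. The root object counts as depth 1, so a flat parameter list has depth 2.

```python
def schema_stats(schema: dict, depth: int = 1) -> dict:
    """Walk a parameter schema and total its depth, property count, and enum values."""
    properties = schema.get("properties", {})
    stats = {
        "max_depth": depth,
        "properties": len(properties),
        "enum_values": len(schema.get("enum", [])),
    }
    # Nested objects contribute through "properties", arrays through "items".
    children = list(properties.values())
    if "items" in schema:
        children.append(schema["items"])
    for child in children:
        sub = schema_stats(child, depth + 1)
        stats["max_depth"] = max(stats["max_depth"], sub["max_depth"])
        stats["properties"] += sub["properties"]
        stats["enum_values"] += sub["enum_values"]
    return stats

def complexity_warnings(schema: dict, max_depth: int = 3, max_enum_values: int = 20) -> list[str]:
    """Flag schemas that exceed illustrative (not official) complexity thresholds."""
    stats = schema_stats(schema)
    warnings = []
    if stats["max_depth"] > max_depth:
        warnings.append(f"nesting depth {stats['max_depth']} exceeds {max_depth}")
    if stats["enum_values"] > max_enum_values:
        warnings.append(f"{stats['enum_values']} enum values exceed {max_enum_values}")
    return warnings

# Hypothetical order schema: customer -> address -> country, plus an array of line objects.
order_schema = {
    "type": "object",
    "properties": {
        "customer": {
            "type": "object",
            "properties": {
                "address": {
                    "type": "object",
                    "properties": {"country": {"type": "string", "enum": ["FR", "DE", "US"]}},
                }
            },
        },
        "lines": {
            "type": "array",
            "items": {"type": "object", "properties": {"sku": {"type": "string"}}},
        },
    },
}
print(schema_stats(order_schema))         # {'max_depth': 4, 'properties': 5, 'enum_values': 3}
print(complexity_warnings(order_schema))  # ['nesting depth 4 exceeds 3']
```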

When you encounter these issues, a systematic diagnostic process is key:

Diagnostic Steps:

- Meticulously review all error messages and response codes. Common codes like 400 Bad Request often point to malformed requests or schemas, while 500 Internal Server Error can indicate more complex issues on the server side, possibly related to schema parsing. Refer to detailed error analyses and general troubleshooting tips.

- Examine your function schemas for complexity. Are there deeply nested objects? Are you using supported data types? Ensure all required parameters are clearly defined and present. Simplify structures where possible. Consult discussions on schema complexity and guidelines on tool definitions.

- If you suspect schema issues or model compatibility problems, test with a significantly simpler function schema. This isolation step can quickly reveal if the complexity of your original schema was the root cause. Additionally, try calling the same function with different Gemini versions (if available) to see if the behavior changes. Insights from schema error discussions, GitHub issues, and notes on Vision model limitations are invaluable here.
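The first diagnostic step can be turned into a small lookup that suggests where to look first. The mapping below only paraphrases the guidance in this article for the status codes it mentions (400, 422, 500), with 422 read in its general HTTP sense; it is a hypothetical triage aid, not an official Gemini error table, and `triage` is an illustrative name.

```python
# Likely causes per HTTP status, paraphrasing this article's guidance; not an official table.
LIKELY_CAUSE = {
    400: "malformed request or invalid function schema: check required fields and types",
    422: "request parsed but its content was rejected: check parameter values and types",
    500: "server-side failure: with function calling, retry with a simpler schema first",
}

def triage(status: int) -> str:
    """Suggest where to look first for a given HTTP status code."""
    if 200 <= status < 300:
        # Success at the HTTP level can still hide a missing or text-only function call.
        return "request succeeded: inspect the body for a missing or text-only function call"
    return LIKELY_CAUSE.get(status, "unrecognized status: read the full error message and logs")

for code in (400, 500, 200):
    print(code, "->", triage(code))
```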

Based on your diagnostics, here are some practical fixes:

Practical Fixes:

- *Simplify function schemas*: Reduce nesting, break down complex types, and avoid excessive enumerations. Prioritize a flat, clear structure. This is a direct recommendation from users experiencing 500 errors with complex schemas.

- *Validate tool and parameter definitions*: Double-check that your function definitions precisely match the requirements and examples provided in the official LangchainJS and Firebase function calling documentation. Ensure data types, required fields, and descriptions are accurate.

- *Switch to a more suitable model*: If your use case consistently runs into limitations with a particular Gemini version, consider migrating to one known to better support your needs. For instance, if you require advanced function calling with complex data, a newer Pro version might be more appropriate than a Flash version or an older iteration. Recommendations can be found by comparing experiences shared regarding complex schemas, Vision model constraints, and the general capabilities described in the Vertex AI function calling documentation.

- *Ensure correct configuration*: Verify that all environment variables, API keys, model parameters, and any specific bindings within your framework (such as LangChain or custom agent setups) are correctly implemented. As noted in a LangchainJS discussion, misconfigurations here can silently break function calls.
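One way to apply the first fix without changing your application's data model is to flatten the schema you send to the model and rebuild the nested structure from the arguments it returns. This is a sketch under stated assumptions: the `__` separator must never appear in your original property names, arrays and free-form objects are left as-is, and the nested objects' own descriptions are dropped. `flatten_schema`, `unflatten_args`, and `SEP` are hypothetical names.

```python
SEP = "__"  # assumption: original property names never contain a double underscore

def flatten_schema(schema: dict, prefix: str = "") -> dict:
    """Lift nested object properties to the top level, joining names with SEP."""
    flat_props, required = {}, []
    parent_required = set(schema.get("required", []))
    for name, prop in schema.get("properties", {}).items():
        key = f"{prefix}{name}"
        if str(prop.get("type", "")).lower() == "object" and "properties" in prop:
            sub = flatten_schema(prop, prefix=f"{key}{SEP}")
            flat_props.update(sub["properties"])
            # A nested field stays required only if its parent object was required too.
            if name in parent_required:
                required.extend(sub["required"])
        else:
            # Leaves, arrays, and free-form objects are kept unchanged.
            flat_props[key] = prop
            if name in parent_required:
                required.append(key)
    return {"type": "object", "properties": flat_props, "required": required}

def unflatten_args(args: dict) -> dict:
    """Rebuild the nested argument structure your code expects from flat args."""
    nested: dict = {}
    for key, value in args.items():
        *parents, leaf = key.split(SEP)
        node = nested
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return nested

contact_schema = {
    "type": "object",
    "properties": {
        "customer": {
            "type": "object",
            "properties": {"name": {"type": "string"}, "email": {"type": "string"}},
            "required": ["name"],
        },
        "note": {"type": "string"},
    },
    "required": ["customer"],
}
flat = flatten_schema(contact_schema)
print(list(flat["properties"]))  # ['customer__name', 'customer__email', 'note']
print(unflatten_args({"customer__name": "Ada", "note": "hi"}))  # {'customer': {'name': 'Ada'}, 'note': 'hi'}
```

The flattened schema is what you would declare to the model; `unflatten_args` runs on the arguments it returns before they reach your existing code.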

Beyond Function Calls: The Broader “Why AI Cannot Fulfill Request” Phenomenon

While Gemini Pro function calling errors are a significant pain point, it’s crucial to remember the wider reasons an AI model may fail to fulfill a request. These issues extend beyond the mechanics of function invocation.

Prompt ambiguity is a persistent challenge. If a prompt is vague, poorly structured, or contains conflicting instructions, the AI may struggle to understand the user’s true intent. This can lead to irrelevant responses or an inability to proceed, even if the underlying system is technically sound. Similarly, context limitations can play a role; if the AI doesn’t have enough relevant information or if the context window is too small to hold the necessary details, it may fail to generate a complete or accurate response.

We also encounter model capability gaps. Sometimes, a request, however well-formed, simply falls outside the scope of what a particular AI model is designed or trained to do. This is not necessarily an error in the request but a limitation of the AI’s inherent abilities. This concept is discussed in relation to specific models like Gemini 1.0 Pro Vision and the general challenges of complex schemas.

These underlying reasons for failure are not unique to Gemini. You might observe similar issues with other platforms, such as Perplexity AI, or any generative AI system. Whether it’s an unsupported feature, a safety filter being triggered, or a prompt that’s simply too abstract, the fundamental causes of AI unresponsiveness often share common threads.

It’s important to distinguish between a request that fails because of a technical error, such as an improperly formatted function call or an invalid API request, and one that the AI genuinely cannot fulfill because of its limitations, safety protocols, or the inherent complexity of the request itself. Both scenarios result in an unfulfilled request, but the path to resolution differs significantly.
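When reading raw responses, a rough classifier helps separate those paths. The sketch assumes the JSON shape of a Gemini `generateContent` REST response (`promptFeedback.blockReason`, `candidates[].finishReason`, and `content.parts[]` entries that may contain a `functionCall`). The category names are our own, and finish reasons other than `SAFETY` are not distinguished here.

```python
def classify_response(status: int, body: dict) -> str:
    """Roughly sort a generateContent result into a failure (or success) category."""
    if status >= 400:
        return "technical_error"   # malformed request, bad schema, auth, or server fault
    if body.get("promptFeedback", {}).get("blockReason"):
        return "prompt_blocked"    # the prompt itself was refused by safety filters
    candidates = body.get("candidates") or []
    if not candidates:
        return "empty_response"
    candidate = candidates[0]
    if candidate.get("finishReason") == "SAFETY":
        return "response_blocked"  # generation was stopped by safety filters
    parts = candidate.get("content", {}).get("parts", [])
    if any("functionCall" in part for part in parts):
        return "function_call"
    return "text_only"             # plain text: an answer, a refusal, or a text-only "call"

print(classify_response(200, {"promptFeedback": {"blockReason": "SAFETY"}}))  # prompt_blocked
```

A `text_only` result when you expected a tool call is the "Implementation Inconsistencies" case described earlier; a `technical_error` sends you back to the status code and error body.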

Navigating the Minefield: Overcoming AI Policy Violations in Prompts

One of the less obvious, but critically important, reasons an AI might fail to fulfill a request is a policy violation in the prompt. Modern AI models are equipped with robust safety systems designed to prevent the generation of harmful, unethical, illegal, or otherwise policy-violating content.

When a prompt, even unintentionally, triggers these safety filters, the AI is programmed to refuse the request. This often results in a blank response, a generic refusal message, or an error code indicating a policy violation. This is a well-documented aspect of AI interaction, mentioned in general troubleshooting guides and the official Gemini API troubleshooting documentation.

Common policy violations can include requests related to:

- Hate speech, discrimination, or harassment.

- Promotion of illegal activities or dangerous behavior.

- Sexually explicit content.

- Misinformation or deceptive content that could cause harm.

- Content that violates privacy or intellectual property.

Mitigating these issues requires a careful approach:

- *Rephrase your prompts*: The simplest strategy is often to steer clear of sensitive or potentially triggering language. Focus on the core task or information you need, using neutral and objective language.

- *Focus on outcome-driven queries*: Instead of asking *how* to do something potentially problematic, ask for the *outcome* in a way that avoids problematic steps or methodologies. For example, instead of asking “how to hack a system,” ask “how to secure a system against common vulnerabilities.”

- *Consult official AI policy documentation*: Most AI providers publish detailed guidelines on their acceptable use policies. Familiarize yourself with these to understand the boundaries and ensure your prompts remain compliant. This proactive step can save significant debugging time.

Understanding and respecting these policy guardrails is as crucial as mastering technical aspects like function calling for successful AI integration.

A Systematic Approach: Troubleshooting Generative AI Errors

Effective troubleshooting of generative AI errors demands a structured methodology. Rather than trying fixes at random, follow these steps to identify and resolve issues systematically.

Step 1: Inspect Artifacts

Begin by thoroughly examining all available diagnostic information. This includes:

- *Error Messages*: Capture the exact wording of any error messages returned by the API or your application.

- *Response Codes*: Note the HTTP status codes (e.g., 200, 400, 422, 500) and any specific error codes provided in the response body.

- *Logs*: Review your application logs, server logs, and any debugging output from the AI SDK or framework you are using. These often contain crucial details about the request and response.

Refer to the Gemini API troubleshooting guide and user-reported issues for common error patterns.
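Error bodies are easier to compare across runs when you reduce them to a few fields. This sketch assumes the standard Google API JSON error envelope (an `error` object with `code`, `message`, and `status`) and falls back to the raw text when the body is not a JSON object. `summarize_error` is a hypothetical helper, and the sample body is invented for illustration.

```python
import json

def summarize_error(raw_body: str) -> dict:
    """Pull code, status, and message out of a Google-style JSON error body."""
    try:
        error = json.loads(raw_body).get("error", {})
    except (json.JSONDecodeError, AttributeError):
        # Not JSON, or JSON that is not an object: keep the raw text so nothing is lost.
        return {"code": None, "status": None, "message": raw_body.strip()}
    return {
        "code": error.get("code"),
        "status": error.get("status"),
        "message": error.get("message", ""),
    }

# Invented sample body in the Google API error shape.
sample = '{"error": {"code": 400, "message": "Invalid JSON payload received.", "status": "INVALID_ARGUMENT"}}'
print(summarize_error(sample))
```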

Step 2: Validate Structures

Focus on the structure of your inputs, especially function schemas and tool definitions:

- *Function Schemas*: Simplify them. Remove unnecessary nesting, complex types, or excessive enumerations. Ensure they adhere to JSON Schema standards and model-specific requirements.

- *Tool Definitions*: Verify that the names, parameters, and descriptions of your tools are clear, accurate, and consistent with the functions they represent.

Consult resources like discussions on schema validation and tool definition best practices.
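Parts of Step 2 can be automated with a small linter. The sketch below flags types outside the basic JSON Schema set, required fields with no matching property, arrays without an `items` schema, and properties with no description. The rules are our own heuristics (type names are compared case-insensitively), and `lint_schema` and `SUPPORTED_TYPES` are hypothetical names; consult the model documentation for the exact schema subset your model accepts.

```python
SUPPORTED_TYPES = {"string", "number", "integer", "boolean", "array", "object"}

def lint_schema(schema: dict, path: str = "parameters") -> list[str]:
    """Recursively collect structural problems in a function parameter schema."""
    issues = []
    schema_type = str(schema.get("type", "")).lower()
    if schema_type not in SUPPORTED_TYPES:
        issues.append(f"{path}: missing or unsupported type {schema.get('type')!r}")
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in properties:
            issues.append(f"{path}: required field {name!r} is not defined in properties")
    if schema_type == "array" and "items" not in schema:
        issues.append(f"{path}: array has no 'items' schema")
    for name, prop in properties.items():
        if not prop.get("description"):
            issues.append(f"{path}.{name}: no description")
        issues.extend(lint_schema(prop, f"{path}.{name}"))
    if "items" in schema:
        issues.extend(lint_schema(schema["items"], f"{path}[]"))
    return issues

# Hypothetical schema with three deliberate mistakes.
broken = {
    "type": "object",
    "properties": {
        "tags": {"type": "array", "description": "Labels"},
        "when": {"type": "datetime"},
    },
    "required": ["city"],
}
for issue in lint_schema(broken):
    print(issue)
```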

Step 3: Consult Documentation

Always refer to the official documentation for the specific AI model and platform you are using. Pay close attention to:

- *Supported Features*: Confirm that the function calling feature is enabled and supported for your chosen model version.

- *Input/Output Formats*: Understand the expected data types, schema structures, and response formats.

- *Limitations and Requirements*: Note any model-specific constraints, such as input size limits, supported data types, or specific configuration needs.

Key resources include the Vertex AI function calling guide and the Firebase documentation.

Step 4: Isolate and Compare

To pinpoint the source of an error, try to isolate the problematic component:

- *Test with simpler inputs*: Gradually simplify your prompts and function calls until the issue disappears. This helps identify the exact trigger.

- *Compare model versions*: If possible, test your request with different versions of the AI model. Differences in behavior can highlight version-specific issues or bugs. Information on model variations can be found in discussions like those concerning Gemini 2.5 Pro or Python GenAI issues.

- *Compare to known working examples*: If you have a working example of function calling, use it as a baseline to compare your failing implementation against.
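Comparing schemas and model versions is easy to script as a small matrix: every candidate schema against every model. In the sketch, `call` is a placeholder for whatever function sends your request and returns the HTTP status; `fake_call` and the model names `model-a` and `model-b` are hypothetical stand-ins, so substitute your own client and real model IDs.

```python
from typing import Callable

def compare(call: Callable[[str, dict], int], models: list[str], schemas: dict[str, dict]) -> dict:
    """Send every schema to every model via `call` and record what came back."""
    results = {}
    for model in models:
        for label, schema in schemas.items():
            try:
                results[(model, label)] = call(model, schema)
            except Exception as exc:  # keep sweeping even if one combination raises
                results[(model, label)] = f"error: {exc}"
    return results

# Hypothetical stand-in for a real API call: model-a rejects any nested object parameter.
def fake_call(model: str, schema: dict) -> int:
    has_nested = any(p.get("type") == "object" for p in schema.get("properties", {}).values())
    return 500 if model == "model-a" and has_nested else 200

simple = {"type": "object", "properties": {"city": {"type": "string"}}}
nested = {"type": "object", "properties": {"address": {"type": "object", "properties": {"city": {"type": "string"}}}}}
for key, outcome in compare(fake_call, ["model-a", "model-b"], {"simple": simple, "nested": nested}).items():
    print(key, outcome)
```

A pattern such as "only the nested schema fails, and only on one model" points at schema complexity combined with a version-specific limitation.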

Step 5: Apply Debugging Principles

Treat AI integration like any other software development task:

- *Analyze request/response pairs*: Log and inspect the exact payload sent to the AI and the response received. This is invaluable for understanding what the AI is seeing and how it’s reacting.

- *Experiment with configurations*: Tweak parameters like temperature, top-k, and top-p to see whether they affect the outcome, although sampling settings are less often the cause of function calling errors.

- *Create minimal reproducible examples*: Develop the simplest possible code snippet that demonstrates the error. This is crucial for isolating the problem and for seeking help.

The official troubleshooting guide and detailed discussions like those on complex schema issues are excellent resources for this stage.
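For a minimal reproducible example, a greedy reduction loop can strip a failing schema down to the properties that actually trigger the error. This is a simple sketch in the spirit of delta debugging, not a library routine: `minimize_schema` only removes top-level properties, and `fails` is a hypothetical callback you supply that re-sends the request and reports whether the error still occurs.

```python
import copy
from typing import Callable

def minimize_schema(schema: dict, fails: Callable[[dict], bool]) -> dict:
    """Drop top-level properties one at a time, keeping each removal that still fails."""
    if not fails(schema):
        raise ValueError("the original schema must reproduce the failure")
    current = copy.deepcopy(schema)
    changed = True
    while changed:  # repeat until a full pass removes nothing
        changed = False
        for name in list(current.get("properties", {})):
            candidate = copy.deepcopy(current)
            del candidate["properties"][name]
            candidate["required"] = [r for r in candidate.get("required", []) if r != name]
            if fails(candidate):
                current, changed = candidate, True
    return current

# Hypothetical failure: the request breaks whenever the "trigger" property is present.
big = {
    "type": "object",
    "properties": {"a": {"type": "string"}, "trigger": {"type": "object"}, "b": {"type": "integer"}},
    "required": ["a", "trigger"],
}
print(minimize_schema(big, lambda s: "trigger" in s.get("properties", {})))
```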

Step 6: Seek Community or Support

If you’ve exhausted the above steps and are still facing issues, don’t hesitate to reach out:

- *Community Forums*: Engage with the developer community on platforms like Stack Overflow, GitHub discussions, or the official Google Cloud AI forums.

- *Support Channels*: If you are using a paid service, consider contacting official support channels for assistance.

When asking for help, always provide a clear description of the problem, the steps you’ve taken, and your minimal reproducible example. Insights from Gemini troubleshooting and community-reported issues can be invaluable when framing your query.

Proactive Strategies for Seamless AI Integrations

While troubleshooting is essential, adopting proactive strategies is the key to minimizing AI errors and ensuring smooth, reliable integrations in the long run. Shifting focus from reactive fixes to preventative measures can save considerable time and effort.

First and foremost, invest time in crafting clear, precise, and logically structured prompts. Ambiguity is the enemy of effective AI communication. Well-defined prompts reduce the likelihood of misinterpretation and guide the AI more effectively toward the desired outcome. Think of your prompt as a contract: the clearer the terms, the less room for error.

Design robust and simple function schemas. As we’ve seen, complexity is a common pitfall. Prioritize flatness, clarity, and adherence to documented standards. Accompanying these schemas with clear, concise documentation for your functions will aid not only the AI but also anyone else who needs to understand or integrate with your tools. This practice is strongly advised in resources discussing schema limitations and tool definition best practices.

Embrace iterative testing and diligent logging. Don’t deploy complex AI integrations without thoroughly testing them under various conditions. Implement comprehensive logging to capture every request, response, and potential error. This provides an invaluable audit trail for debugging and performance analysis. Catching subtle issues early through continuous testing and monitoring is far more efficient than fixing them after a system-wide failure.
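A lightweight way to get that audit trail in Python is a decorator around whatever function sends your requests. The sketch uses only the standard `functools`, `json`, `logging`, and `time` modules; the logger name, the `logged` decorator, and the `send` stand-in are hypothetical.

```python
import functools
import json
import logging
import time
from typing import Callable

logger = logging.getLogger("genai.calls")  # hypothetical logger name

def logged(call: Callable[[dict], dict]) -> Callable[[dict], dict]:
    """Wrap a request function so each request, response, and failure is logged as JSON."""
    @functools.wraps(call)
    def wrapper(request: dict) -> dict:
        started = time.monotonic()
        logger.info("request %s", json.dumps(request))
        try:
            response = call(request)
        except Exception:
            # logger.exception records the traceback; the error is then re-raised unchanged.
            logger.exception("request failed after %.3fs", time.monotonic() - started)
            raise
        logger.info("response after %.3fs %s", time.monotonic() - started, json.dumps(response))
        return response
    return wrapper

logging.basicConfig(level=logging.INFO)

@logged
def send(request: dict) -> dict:
    # Stand-in for a real API call.
    return {"candidates": []}

send({"contents": []})
```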

Stay informed by actively monitoring AI model documentation and release notes. The field of generative AI is evolving at an unprecedented pace. New features are added, capabilities are enhanced, and best practices can change. Regularly checking official sources for updates related to function calling, model behavior, and usage guidelines is crucial for maintaining optimal performance and avoiding deprecated practices. Key documentation to follow includes the Gemini API troubleshooting pages, the Vertex AI function calling guide, and the Firebase AI Logic documentation.

In essence, adopting these proactive strategies—clear prompting, simplified schemas, rigorous testing, thorough logging, and continuous learning—is the cornerstone of minimizing AI errors and optimizing generative AI integrations for reliability and effectiveness.

Concluding Thoughts: Empowering Your AI Interactions

Navigating the world of AI interactions, especially complex features like function calling in Gemini Pro, can be challenging. We’ve covered the most critical takeaways for fixing Gemini Pro function calling errors and for understanding the broader landscape of why AI requests might fail.

Remember that understanding the root causes—whether it’s schema complexity, model limitations, prompt ambiguity, or policy violations—is the foundational step toward finding effective solutions. The errors you encounter are not insurmountable obstacles but rather indicators that require careful diagnosis.

We encourage you to actively apply the diagnostic and troubleshooting steps outlined in this guide. By systematically inspecting artifacts, validating structures, consulting documentation, and isolating variables, you can demystify even the most perplexing AI errors.

The field of AI is in constant flux, with developers working tirelessly to improve model capabilities and error handling. As these systems evolve, continuous learning and adaptation will remain essential for anyone aiming to leverage AI effectively. Stay curious, stay informed, and keep experimenting.

Frequently Asked Questions

What is the primary reason for Gemini Pro function calling errors?

The most common causes are overly complex or deeply nested function schemas, model version compatibility issues, incorrect parameter definitions, and mismatches between expected and actual data types.

How can I simplify a complex function schema for Gemini Pro?

Try to flatten nested objects, break down complex data structures into simpler components, and ensure you are only using data types explicitly supported by the model. Refer to the documentation and community discussions on schema design.

Can different Gemini versions cause function calling issues?

Yes, absolutely. Different versions of Gemini (e.g., 2.0 Flash vs. 2.5 Pro) may have varying levels of support for function calling or handle identical calls differently. It’s crucial to ensure your implementation matches the capabilities of the specific model version you are using. See discussions on model differences.

What should I do if my request is blocked by AI safety policies?

Review your prompt for any potentially sensitive or policy-violating language. Rephrase your request using neutral and objective terms, focusing on the desired outcome rather than problematic methods. Consult the AI provider’s acceptable use policy for guidance.

How can I systematically troubleshoot general AI request failures?

Follow a systematic approach: inspect error messages and logs, validate input structures (like schemas), consult official documentation thoroughly, isolate the issue by testing with simpler inputs or different model versions, and apply standard debugging principles. When stuck, seek help from the community or official support. Refer to the troubleshooting guide for a detailed framework.

Is function calling supported by all Gemini models?

Function calling support can vary across different Gemini models and versions. For example, while Gemini Pro models generally offer robust function calling capabilities, specific versions or specialized models (like Gemini 1.0 Pro Vision) might have different limitations or requirements. Always check the official documentation for the model you are using.

What does a 500 Server Error typically mean in the context of Gemini function calls?

A 500 Server Error often indicates an issue on the AI provider’s end, but in the context of function calling, it can frequently be triggered by problems with parsing complex or malformed schemas. It suggests the model encountered an internal problem that might stem from the complexity or invalidity of the provided function definitions. Simplifying the schema is a common first step in debugging this error.
