
Demystifying JSON Schema for Robust Data Validation

Estimated reading time: 10 minutes

Key Takeaways

- JSON Schema is a powerful specification for defining and validating the structure of JSON data.

- Effective data validation is crucial for ensuring data integrity, preventing errors, and enabling reliable system communication. Learn more about its importance at Zuplo, JSON Schema Official, and Biomadeira.

- JSON Schema provides a standardized way to describe data expectations, facilitating automated validation. Discover more at Zuplo, JSON Schema Official, and Syncfusion.

- A schema acts as a blueprint for data structure, defining its expected format and characteristics. Refer to Syncfusion and Biomadeira for details.

Table of contents

- Demystifying JSON Schema for Robust Data Validation

- Key Takeaways

- The Foundation: Understanding What a Schema Is in JSON Schema

- Defining Data Integrity: Data Types in JSON Schema

- Enforcing Rules: Applying Constraints for Validation

- The Practical Application: How Validation Works

- The Advantages: Why Use JSON Schema for Validation?

- Real-World Applications: Where JSON Schema Shines

- Final Thoughts: Embracing JSON Schema for Reliable Data

- Frequently Asked Questions

In the realm of data management and software development, ensuring the accuracy, consistency, and integrity of data is paramount. Without robust mechanisms for data validation, systems can quickly become unreliable, leading to bugs, unexpected behavior, and communication breakdowns between different services. This is where JSON Schema emerges as a vital tool. It’s not just a technical specification; it’s a fundamental approach to building more reliable and predictable data pipelines.

At its core, JSON Schema is a vocabulary that allows you to annotate and validate JSON documents. Think of it as a contract that defines what your JSON data should look like. This contract specifies the structure, the expected data types, and the permissible values, ensuring that data conforms to a predefined standard. This standardization is incredibly powerful, especially in distributed systems, APIs, and microservices where different components must communicate effectively using JSON.

The importance of data validation cannot be overstated. It acts as a gatekeeper, preventing malformed or unexpected data from entering your system. This proactive approach saves countless hours of debugging and troubleshooting down the line. By validating data at the point of entry or exchange, you catch errors early, making them easier and cheaper to fix. As highlighted by resources from Zuplo, JSON Schema Official, and Biomadeira, solid data validation underpins system stability and trust.

JSON Schema provides a standardized solution to this need. It offers a clear, concise, and machine-readable way to express data expectations. When you have a schema, you have a blueprint. This blueprint details everything from the required fields in an object to the specific format of a string or the range of a number. It transforms the often ambiguous task of data validation into a formal, verifiable process. The ability to automate this validation, as discussed by Zuplo, JSON Schema Official, and Syncfusion, is what makes it so indispensable in modern development workflows.

Understanding what a schema is, in the context of JSON, is the first step. It’s the architectural plan for your JSON data. Without a schema, the structure of your data is only implied by the code that produces and consumes it, which makes it prone to inconsistencies and errors. Conversely, a well-defined schema acts as a contract, ensuring that all parties interacting with the data understand its structure and valid contents. This foundational understanding is key to leveraging the full power of JSON Schema.

The Foundation: Understanding What a Schema Is in JSON Schema

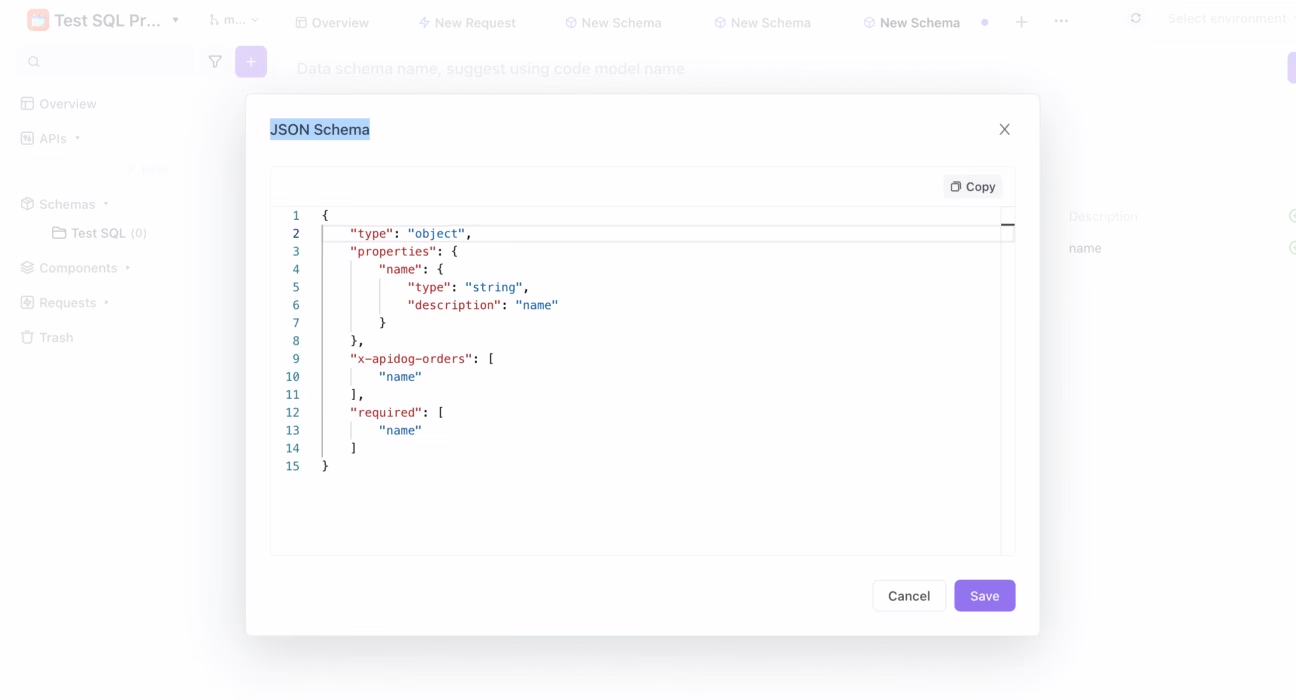

In the broad landscape of data management, a schema serves as a foundational element, dictating the rules and structure of data. When we talk about JSON Schema, this concept becomes even more concrete. A JSON Schema is itself a JSON document that describes and validates other JSON documents. It specifies the permissible structure, the exact data types allowed for each field, and which properties are mandatory for a JSON document to be considered valid.

The elegance of JSON Schema lies in its own format: it is written in JSON. This means that developers can leverage their existing knowledge of JSON syntax to define these validation rules. Using a set of standardized keywords, a JSON Schema can enforce complex requirements and document data expectations clearly. Resources from Zuplo, JSON Schema Official, and Syncfusion emphasize this dual role of JSON Schema as both a definition language and a validation tool.

Let’s break down some of the key components that form the backbone of a JSON Schema:

- `type`: This is a fundamental keyword that declares the expected data type of a value. Its value can be one of `"object"`, `"array"`, `"string"`, `"number"`, `"integer"`, `"boolean"`, or `"null"`, or an array of these names when several types are acceptable. This is crucial for basic data integrity. You can find more on this in JSON Schema Official, Syncfusion, and Biomadeira.

- `properties`: When dealing with JSON objects, the `properties` keyword defines the fields (keys) that the object can contain. Each property gets its own nested schema, detailing its type, constraints, and so on. Listing properties does not by itself forbid other keys: unless `additionalProperties` is set to `false`, keys not mentioned in `properties` are still accepted. This is a core aspect of defining complex data structures, as detailed in JSON Schema Official, Syncfusion, and Biomadeira.

- `required`: This keyword specifies an array of property names that *must* be present in a JSON object for it to be considered valid. If a required property is missing, the validation will fail. This is a critical keyword for ensuring that essential data is always provided, as explored by Zuplo, JSON Schema Official, Syncfusion, and Biomadeira.

- `items`: For JSON arrays, the `items` keyword defines the schema that each element within the array must conform to. This is invaluable for ensuring that arrays contain consistent data, whether it’s an array of strings, numbers, or complex objects. This is a key feature highlighted in Syncfusion and Biomadeira.

Beyond these core elements, JSON Schema offers several other useful keywords for metadata and schema management. The `$schema` keyword declares which version (draft) of the JSON Schema specification the schema is written against, so that validators apply the right rules. `$id` gives the schema a unique URI, which is useful for referencing and modularity. Keywords such as `title` and `description` document the purpose and meaning of the schema, making it more understandable for developers. They are annotations and do not change whether data is valid. These descriptive keywords are well covered in Syncfusion.
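To make these keywords concrete, here is a minimal sketch of how a validator might walk a schema, written in JavaScript like the Ajv example in this article. It does not reproduce how any particular library works. It handles only `type`, `properties`, `required`, and `items`, and it ignores annotation keywords such as `$schema`, `$id`, `title`, and `description`. The function names and the example `$id` URI are hypothetical.

```javascript
// Minimal sketch: supports only "type", "properties", "required", and "items".
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Does a parsed JSON value satisfy one JSON Schema type name?
function typeMatches(value, type) {
  switch (type) {
    case "null": return value === null;
    case "boolean": return typeof value === "boolean";
    case "string": return typeof value === "string";
    case "number": return typeof value === "number";
    case "integer": return Number.isInteger(value);
    case "array": return Array.isArray(value);
    case "object": return typeof value === "object" && value !== null && !Array.isArray(value);
    default: return false;
  }
}

// Returns a list of error messages; an empty list means `data` is valid.
function validate(schema, data, path = "") {
  const where = path || "/";
  const errors = [];
  if (schema.type !== undefined) {
    const types = Array.isArray(schema.type) ? schema.type : [schema.type];
    if (!types.some((t) => typeMatches(data, t))) {
      errors.push(`${where}: expected ${types.join(" or ")}`);
      return errors; // skip the remaining checks once the type is wrong
    }
  }
  if (typeMatches(data, "object")) {
    for (const key of schema.required ?? []) {
      if (!has(data, key)) errors.push(`${where}: missing required property "${key}"`);
    }
    for (const [key, subschema] of Object.entries(schema.properties ?? {})) {
      if (has(data, key)) errors.push(...validate(subschema, data[key], `${path}/${key}`));
    }
  }
  if (Array.isArray(data) && schema.items !== undefined) {
    data.forEach((item, i) => errors.push(...validate(schema.items, item, `${path}/${i}`)));
  }
  return errors;
}

// Annotation keywords are present but have no effect on the result.
const schema = {
  $schema: "https://json-schema.org/draft/2020-12/schema",
  $id: "https://example.com/schemas/tagged-item.json", // hypothetical URI
  title: "Tagged item",
  description: "An item with a name and a list of string tags.",
  type: "object",
  properties: {
    name: { type: "string" },
    tags: { type: "array", items: { type: "string" } },
  },
  required: ["name"],
};

console.log(validate(schema, { name: "lamp", tags: ["home", "light"] })); // []
console.log(validate(schema, { tags: ["home", 42] })); // reports the missing "name" and a bad item at /tags/1
```

Collecting every error instead of stopping at the first one is a design choice. Real validators usually make it configurable; Ajv, for instance, has an `allErrors` option.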

Essentially, a schema in JSON Schema is a declaration of what constitutes valid JSON data for a particular purpose. It’s the rulebook that data must follow. By defining these rules upfront, developers can prevent a wide range of common errors and build more resilient applications.

Defining Data Integrity: Data Types in JSON Schema

One of the most fundamental aspects of data validation is ensuring that values have the correct data types. JSON Schema provides a robust set of standardized types that map directly to JSON’s built-in value types, plus `integer` as a refinement of `number`. This allows precise definition of what kind of data is expected for each field. This clarity is essential for maintaining data integrity and preventing type-related errors.

Here are the primary data types supported by JSON Schema:

- `string`: Represents textual data.

- `number`: Represents floating-point or integer numbers. This is a broad category for numeric values.

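- `integer`: Specifically for whole numbers (positive, negative, or zero). This is a more specific type than `number`. Since draft-06, a value such as `1.0` also counts as an integer, because its fractional part is zero.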

- `boolean`: Represents logical values, either `true` or `false`.

- `array`: Represents an ordered list of values. The `items` keyword is used within an array type to define the schema for its elements.

- `object`: Represents an unordered collection of key-value pairs, where keys are strings and values can be any valid JSON type. The `properties` and `required` keywords are used within an object type.

- `null`: Represents the absence of a value. It is usually combined with another type, as in `"type": ["string", "null"]`, to indicate that a field may be null.

These fundamental data types are crucial for establishing the basic structure and validity of your JSON data, as explained in resources like Syncfusion and Biomadeira. By clearly defining the expected type for each field, you prevent scenarios where, for example, a string is mistakenly used where a number is expected.
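In JavaScript, the values produced by `JSON.parse` map onto these type names with two quirks: `typeof` reports `"object"` for both arrays and `null`, and every integer is also a number. The sketch below uses a hypothetical helper, `jsonSchemaTypes`, to return the JSON Schema types a parsed value satisfies. Note that `JSON.parse("1.0")` yields `1`, so such a value is classified as an integer.

```javascript
// Sketch: which JSON Schema type names does a parsed JSON value satisfy?
function jsonSchemaTypes(value) {
  if (value === null) return ["null"]; // typeof null === "object" in JavaScript
  if (Array.isArray(value)) return ["array"]; // typeof [] === "object" as well
  switch (typeof value) {
    case "boolean": return ["boolean"];
    case "string": return ["string"];
    // Every integer is also a number, so an integer satisfies both types.
    case "number": return Number.isInteger(value) ? ["integer", "number"] : ["number"];
    case "object": return ["object"];
    default: throw new TypeError(`not a JSON value: ${typeof value}`);
  }
}

const doc = JSON.parse(
  '{"name": "Ada", "age": 36, "score": 9.5, "tags": [], "nickname": null, "active": true}'
);
for (const [key, value] of Object.entries(doc)) {
  console.log(key, jsonSchemaTypes(value));
}
```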

Let’s consider a practical example of a JSON Schema that uses different data types and the `required` keyword:

```json
{
  "type": "object",
  "properties": {
    "name": { "type": "string" },
    "age": { "type": "integer" },
    "email": { "type": "string", "format": "email" }
  },
  "required": ["name", "email"]
}
```

This schema defines an object that is expected to have at least two properties: `name` and `email`. The `name` property must be a string. The `email` property must also be a string, with the additional constraint that it should be formatted as an email address (e.g., `user@example.com`). The `age` property is optional but, if present, must be an integer. This demonstrates how JSON Schema enforces both structure and basic value types, as detailed by Zuplo and Syncfusion.

By specifying these data types and required fields, you ensure that any JSON data validated against this schema will be predictable and conform to your application’s needs. This level of detail is fundamental for robust data handling.
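One way to read the schema above is to spell out the checks it declares. The hypothetical `checkPerson` function below is a hand-written sketch of those rules. It is not generated from the schema, and it leaves out the `format` check. Like the schema, it accepts properties it does not mention, because the schema does not set `"additionalProperties": false`.

```javascript
// Hand-written equivalent of the example schema (hypothetical helper).
function checkPerson(data) {
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return ["expected an object"];
  }
  const errors = [];
  const has = (key) => Object.prototype.hasOwnProperty.call(data, key);
  // "required": ["name", "email"]
  for (const key of ["name", "email"]) {
    if (!has(key)) errors.push(`missing required property "${key}"`);
  }
  // "type" checks apply only to properties that are present.
  if (has("name") && typeof data.name !== "string") errors.push("name must be a string");
  if (has("email") && typeof data.email !== "string") errors.push("email must be a string");
  // "format": "email" is not checked here.
  if (has("age") && !Number.isInteger(data.age)) errors.push("age must be an integer");
  return errors;
}

console.log(checkPerson({ name: "Ada", email: "ada@example.com" })); // []
console.log(checkPerson({ name: "Ada", email: "ada@example.com", age: 36, nickname: "A" })); // []
console.log(checkPerson({ name: "Ada", age: "36" })); // missing email, and age is not an integer
```

Keeping these rules in a schema rather than in ad hoc functions like this one lets the same definition be shared across services and languages.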

Enforcing Rules: Applying Constraints for Validation

While defining basic data types is essential, JSON Schema goes much further by allowing developers to apply specific constraints. These constraints provide a granular level of control over data, ensuring it not only matches the correct type but also adheres to specific rules and formats. This capability is what makes JSON Schema a truly powerful tool for comprehensive validation.

Here’s a look at some of the common types of constraints you can implement:

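- Format Constraints: These constraints are particularly useful for string values, ensuring they adhere to common formats. Examples include:

  - `"format": "email"`: Validates that the string is a properly formatted email address.

  - `"format": "date"` or `"format": "date-time"`: Validates dates or date-times as defined by RFC 3339, a profile of ISO 8601 (e.g., `2024-05-01` or `2024-05-01T12:30:00Z`).

  - `"format": "uri"`: Checks that the string is a valid Uniform Resource Identifier.

  These are widely used for data integrity, as noted by Zuplo and Syncfusion. Recent versions of the specification treat `format` as an annotation by default, so whether it is enforced depends on the validator and its settings. Ajv, for example, relies on the separate `ajv-formats` package for these checks.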

- Value Constraints: These allow you to set boundaries or minimum/maximum requirements for numerical and string values.

  - For strings: `"minLength"` and `"maxLength"` specify the allowed length of a string. `"pattern"` uses a regular expression to enforce specific character sequences or formats within a string. The expression is not implicitly anchored, so add `^` and `$` when the whole string must match.

  - For numbers: `"minimum"`, `"maximum"`, `"exclusiveMinimum"`, and `"exclusiveMaximum"` define the numerical range a value must fall within.

  These constraints are critical for controlling data precision and adherence to business logic, as covered by Zuplo, JSON Schema Official, Syncfusion, and Biomadeira.

- Array Constraints: These constraints are applied to arrays to control their contents and size.

  - `"minItems"` and `"maxItems"`: Define the minimum and maximum number of elements an array can contain.

  - `"uniqueItems"`: A boolean that, if set to `true`, ensures all elements within the array are unique.

  These are vital for managing lists of data effectively, as detailed in Syncfusion.

- Object Constraints: You can also apply constraints to JSON objects themselves.

  - `"minProperties"` and `"maxProperties"`: Specify the minimum and maximum number of properties an object can have.

  - Compared with `"required"`: While `required` specifies *which* properties must be present, these constraints focus on the *quantity* of properties.

  These are important for ensuring objects conform to expected structures, as explained by Syncfusion.

- Enum Constraints: The `"enum"` keyword allows you to restrict the accepted values for a field to a predefined list of options. For example, `"enum": ["pending", "processing", "completed"]` would only allow one of those three strings. This is a simple yet powerful way to enforce specific enumerations, as shown in JSON Schema Examples.

Beyond these, JSON Schema supports composition keywords that combine multiple schemas logically: `allOf` (the data must match every subschema), `anyOf` (at least one), and `oneOf` (exactly one). The `$ref` keyword is also crucial for modularity. It allows you to reuse parts of a schema (for example, definitions kept under `$defs`) or to reference external schemas. These advanced features provide immense flexibility for defining intricate data validation rules, as highlighted in Zuplo.
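A validator evaluates these combinators by validating the data against each subschema and combining the results. The sketch below illustrates that, together with a same-document `$ref` into `$defs`. It is deliberately partial. `resolveRef` and `isValid` are hypothetical helpers that understand only a few leaf keywords. Keywords next to `$ref` are applied as in draft 2019-09 and later; earlier drafts ignored them.

```javascript
// Resolve a same-document reference such as "#/$defs/nonNegative".
// Percent-encoding in the fragment is not handled in this sketch.
function resolveRef(root, ref) {
  if (!ref.startsWith("#/")) throw new Error(`unsupported $ref: ${ref}`);
  return ref
    .slice(2)
    .split("/")
    .map((part) => part.replace(/~1/g, "/").replace(/~0/g, "~")) // JSON Pointer escapes
    .reduce((node, part) => {
      if (node === null || typeof node !== "object" || !(part in node)) {
        throw new Error(`unresolvable $ref: ${ref}`);
      }
      return node[part];
    }, root);
}

// True when `data` satisfies `schema`. Understands only "type" (string,
// number, null), "minimum", "$ref", "allOf", "anyOf", and "oneOf".
function isValid(schema, data, root = schema) {
  // As in drafts 2019-09 and later, keywords next to "$ref" still apply.
  if (schema.$ref !== undefined && !isValid(resolveRef(root, schema.$ref), data, root)) return false;
  if (schema.type === "string" && typeof data !== "string") return false;
  if (schema.type === "number" && typeof data !== "number") return false;
  if (schema.type === "null" && data !== null) return false;
  if (schema.minimum !== undefined && typeof data === "number" && data < schema.minimum) return false;
  if (schema.allOf && !schema.allOf.every((s) => isValid(s, data, root))) return false;
  if (schema.anyOf && !schema.anyOf.some((s) => isValid(s, data, root))) return false;
  if (schema.oneOf && schema.oneOf.filter((s) => isValid(s, data, root)).length !== 1) return false;
  return true;
}

// A value that is either a non-negative number or null.
const schema = {
  $defs: { nonNegative: { type: "number", minimum: 0 } },
  anyOf: [{ $ref: "#/$defs/nonNegative" }, { type: "null" }],
};

// "oneOf" fails when more than one branch matches: 15 satisfies both.
const exactlyOne = { oneOf: [{ type: "number" }, { type: "number", minimum: 10 }] };

console.log(isValid(schema, 3), isValid(schema, null), isValid(schema, -1)); // true true false
console.log(isValid(exactlyOne, 5), isValid(exactlyOne, 15)); // true false
```

The `exactlyOne` schema shows a common `oneOf` pitfall: when branches overlap, a value that matches both is rejected. `anyOf` is usually the safer choice when the branches are not mutually exclusive.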

By leveraging these constraints, developers can build highly specific and robust validation rules, significantly improving the quality and reliability of their data. This layered approach, combining data types with precise constraints, forms the core of effective validation with JSON Schema.
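The constraint keywords in this section can be sketched as one JavaScript function that checks a single value. This is an illustration rather than a library API. `jsonEqual` and `checkConstraints` are hypothetical names, `format` is left out, and the sketch assumes draft-06 or later, where `exclusiveMinimum` and `exclusiveMaximum` take numbers. It reflects two details of the specification. First, string lengths count Unicode code points rather than JavaScript's UTF-16 units. Second, `uniqueItems` and `enum` compare values structurally, so two separately written `{ "a": 1 }` objects count as equal.

```javascript
// Structural equality for parsed JSON values (used by "uniqueItems" and "enum").
function jsonEqual(a, b) {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) return false;
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  return (
    keysA.length === keysB.length &&
    keysA.every((k) => Object.prototype.hasOwnProperty.call(b, k) && jsonEqual(a[k], b[k]))
  );
}

// Returns the names of the constraint keywords that `value` violates.
// Each check applies only to values of the matching JSON type.
function checkConstraints(schema, value) {
  const errors = [];
  if (typeof value === "string") {
    const length = [...value].length; // code points, not UTF-16 code units
    if (schema.minLength !== undefined && length < schema.minLength) errors.push("minLength");
    if (schema.maxLength !== undefined && length > schema.maxLength) errors.push("maxLength");
    // "pattern" is not anchored: it passes if the regex matches anywhere.
    if (schema.pattern !== undefined && !new RegExp(schema.pattern, "u").test(value)) {
      errors.push("pattern");
    }
  } else if (typeof value === "number") {
    if (schema.minimum !== undefined && value < schema.minimum) errors.push("minimum");
    if (schema.maximum !== undefined && value > schema.maximum) errors.push("maximum");
    if (schema.exclusiveMinimum !== undefined && value <= schema.exclusiveMinimum) errors.push("exclusiveMinimum");
    if (schema.exclusiveMaximum !== undefined && value >= schema.exclusiveMaximum) errors.push("exclusiveMaximum");
  } else if (Array.isArray(value)) {
    if (schema.minItems !== undefined && value.length < schema.minItems) errors.push("minItems");
    if (schema.maxItems !== undefined && value.length > schema.maxItems) errors.push("maxItems");
    const hasDuplicate = value.some((item, i) => value.slice(i + 1).some((other) => jsonEqual(item, other)));
    if (schema.uniqueItems === true && hasDuplicate) errors.push("uniqueItems");
  } else if (value !== null && typeof value === "object") {
    const count = Object.keys(value).length;
    if (schema.minProperties !== undefined && count < schema.minProperties) errors.push("minProperties");
    if (schema.maxProperties !== undefined && count > schema.maxProperties) errors.push("maxProperties");
  }
  if (schema.enum !== undefined && !schema.enum.some((option) => jsonEqual(option, value))) {
    errors.push("enum");
  }
  return errors;
}

checkConstraints({ minLength: 3, pattern: "^[a-z]+$" }, "ab1"); // returns ["pattern"]
checkConstraints({ exclusiveMinimum: 0, maximum: 100 }, 0); // returns ["exclusiveMinimum"]
checkConstraints({ uniqueItems: true }, [{ a: 1 }, { a: 1 }]); // returns ["uniqueItems"]
checkConstraints({ enum: ["pending", "processing", "completed"] }, "done"); // returns ["enum"]
```

Because each check fires only for values of the matching type, `{ "minLength": 5 }` on its own accepts the number `42`. Pairing constraints with `type` is what makes the layered approach work.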

The Practical Application: How Validation Works

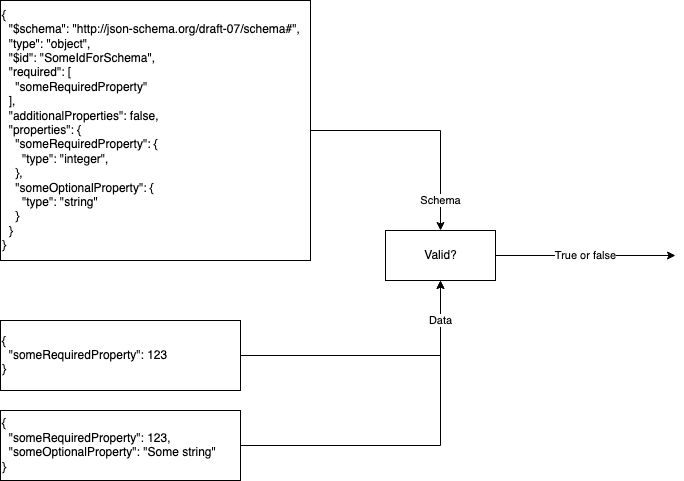

Understanding the theory behind JSON Schema is one thing, but how does it work in practice? The process of validation is typically handled by specialized tools and libraries that implement the JSON Schema specification. These libraries act as the engines that compare your JSON data against your defined schema.

A wide array of validator libraries exist across virtually every major programming language. For instance, in the JavaScript ecosystem, Ajv (Another JSON Schema Validator) is a highly popular and performant choice. In Python, the jsonschema library is the go-to solution. These tools abstract away the complexities of the specification, providing developers with straightforward APIs to perform validation. This wide availability is a testament to the utility and adoption of JSON Schema, as noted by Zuplo and Ajv.

The typical workflow for performing validation using a library generally involves these steps:

- Load the Schema and Data: You begin by loading your JSON Schema definition and the JSON data that you intend to validate. These are usually represented as parsed JSON objects within your programming environment.

- Compile the Schema (Often): For performance, many libraries first “compile” the schema into an internal representation that is optimized for validation checks. This compilation step is usually done once.

- Perform Validation: The compiled schema is then used to validate the data. The validator library iterates through the data, checking it against the rules defined in the schema (types, formats, constraints, etc.).

- Report Results: The validator will return a result indicating whether the data is valid or not. If the data is invalid, the library will typically provide detailed error messages, pinpointing exactly where and why the validation failed. This feedback is invaluable for debugging and correcting the data, as emphasized by Zuplo and Ajv.
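The compile-then-validate workflow can be sketched without any library. The hypothetical `compile` function below does the schema-dependent work once: it collects the required keys and picks a type check per property. It then returns a function that only inspects data. The boolean result and `errors` property loosely imitate the shape Ajv exposes. However, the sketch supports only a flat object schema with primitive property types, and its error objects are simplified.

```javascript
// Hypothetical compile step for a tiny subset: a flat object schema with
// "required" and primitive "type" declarations on each property.
function compile(schema) {
  const typeChecks = {
    string: (v) => typeof v === "string",
    number: (v) => typeof v === "number",
    integer: (v) => Number.isInteger(v),
    boolean: (v) => typeof v === "boolean",
  };
  // Schema-only work happens once, here.
  const required = schema.required ?? [];
  const propertyChecks = Object.entries(schema.properties ?? {}).map(([key, sub]) => {
    const check = typeChecks[sub.type];
    if (!check) throw new Error(`unsupported type for "${key}": ${sub.type}`);
    return { key, type: sub.type, check };
  });
  const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

  // Per-document work happens in the returned function.
  function validate(data) {
    const errors = [];
    if (typeof data !== "object" || data === null || Array.isArray(data)) {
      errors.push({ instancePath: "", message: "must be object" });
    } else {
      for (const key of required) {
        if (!has(data, key)) errors.push({ instancePath: "", message: `must have required property '${key}'` });
      }
      for (const { key, type, check } of propertyChecks) {
        if (has(data, key) && !check(data[key])) errors.push({ instancePath: `/${key}`, message: `must be ${type}` });
      }
    }
    validate.errors = errors.length > 0 ? errors : null; // null when the data is valid
    return errors.length === 0;
  }
  return validate;
}

const validateUser = compile({
  type: "object",
  properties: { name: { type: "string" }, age: { type: "integer" } },
  required: ["name"],
});

for (const doc of [{ name: "Ada", age: 36 }, { age: 36.5 }]) {
  if (validateUser(doc)) console.log("valid");
  else console.log(validateUser.errors);
}
```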

Here’s a simplified example demonstrating the validation process using JavaScript and Ajv:

```javascript
const Ajv = require("ajv"); // npm install ajv

const ajv = new Ajv({ allErrors: true }); // report all failures, not just the first

// The JSON Schema to validate against (a plain JavaScript object)
const schema = {
  type: "object",
  properties: { name: { type: "string" }, age: { type: "integer" } },
  required: ["name"],
};

// The parsed JSON data you want to validate
const data = { name: "Ada", age: 36 };

const validate = ajv.compile(schema); // compile once, reuse for many documents
const valid = validate(data); // perform validation

if (!valid) {
  console.error("Validation errors:", validate.errors); // log each validation failure
} else {
  console.log("Data is valid!");
}
```

In this snippet, `ajv.compile(schema)` prepares the schema for efficient checking. Then, `validate(data)` executes the validation. If `valid` is false, the `validate.errors` property contains an array of objects, each describing a specific validation failure (in Ajv v8, with fields such as `instancePath` and `message`). This explicit error reporting is a cornerstone of effective validation, making it easier to identify and fix issues within your JSON data, as explained by Zuplo and Ajv. It’s this practical implementation that turns the theoretical power of JSON Schema into tangible benefits for developers.

The Advantages: Why Use JSON Schema for Validation?

Adopting JSON Schema for data validation offers a multitude of benefits that significantly enhance the development process and the overall reliability of applications. It moves beyond simply catching errors; it fosters a culture of data quality and predictability.

- Improved Data Quality and Consistency: By enforcing strict formats, types, and constraints with a well-defined schema, you drastically reduce the chances of malformed or inconsistent data entering your systems. This proactive approach ensures that data is reliable and trustworthy, which is fundamental for any application. Resources from Zuplo and Syncfusion highlight this as a primary advantage.

- Enhanced Developer Productivity: A clear JSON Schema acts as a definitive contract between different parts of an application or between different teams. Developers can rely on the schema to understand data expectations, leading to fewer misunderstandings and a significant reduction in time spent debugging subtle data-related issues that arise from differing assumptions. This clarity speeds up development and integration cycles, as noted by Zuplo and Syncfusion.

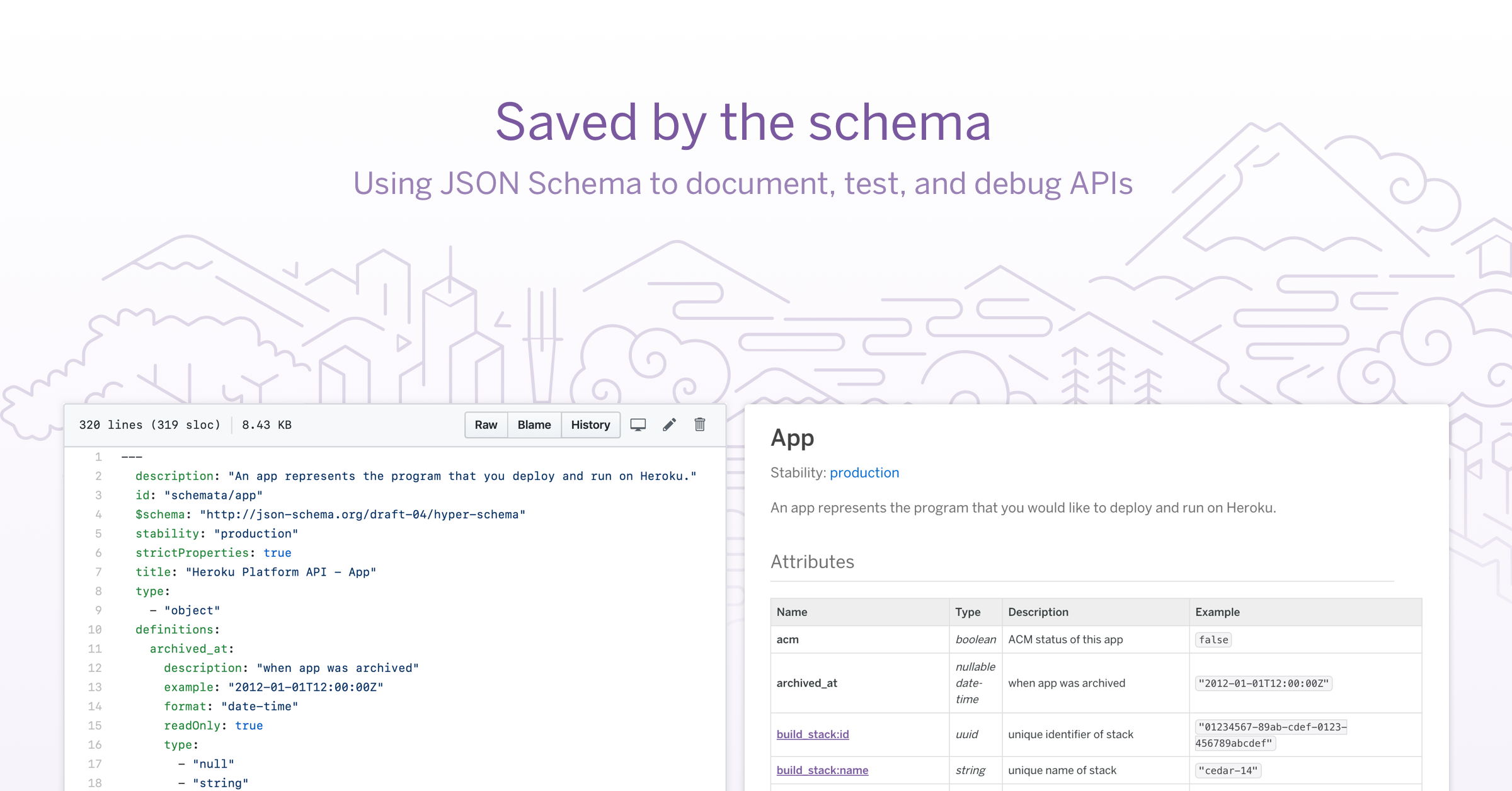

- Easier API Development and Documentation: JSON Schema is incredibly useful for API development. It can serve as the basis for automatically generating interactive API documentation (e.g., using OpenAPI/Swagger), providing clients with a clear understanding of the expected request and response payloads. Furthermore, it enables robust validation of API requests and responses, ensuring that both the client and server adhere to the defined contract. This streamlines API design and maintenance, as discussed in Zuplo, JSON Schema Official, and Syncfusion.

- Reduced Bugs and Runtime Errors: Catching data specification violations at the point where data enters the system is far more efficient and less disruptive than discovering them later, when downstream processing fails on them. By ensuring data conforms to the schema before it’s processed, you prevent a whole class of bugs that stem from unexpected data formats or missing information. This early detection leads to more stable and reliable software, a benefit emphasized by Zuplo and Syncfusion.

- Standardization and Interoperability: As a widely adopted standard, JSON Schema promotes interoperability. When systems use JSON Schema for validation, they can more easily exchange data with other systems that also understand and use the standard. This makes it easier to build and maintain complex ecosystems of services.

- Declarative Approach: JSON Schema allows you to declare *what* your data should look like, rather than imperatively coding *how* to check it. This declarative approach is often more concise, readable, and maintainable than writing custom validation logic for every scenario.

In essence, adopting JSON Schema isn’t just about adding a validation step; it’s about fundamentally improving the way you handle data, leading to higher quality software, increased developer efficiency, and more robust systems. The combination of clear definitions, precise constraints, and standardized tooling makes it an indispensable part of modern data-centric development.

Real-World Applications: Where JSON Schema Shines

The versatility and power of JSON Schema make it applicable across a wide range of scenarios where structured data needs to be defined, validated, and managed reliably. Its ability to enforce rules and document expectations makes it invaluable in many practical applications:

- API Request/Response Validation: This is perhaps one of the most common and critical uses of JSON Schema. For JSON-based web APIs, such as REST APIs, schemas can define the exact structure and content expected for incoming requests and outgoing responses. This ensures that clients send data in the correct format and that the server responds with data that clients can reliably process. API description formats such as OpenAPI embed JSON Schema to describe these payloads, so one definition can drive both documentation and validation. Resources like Zuplo frequently cite this as a prime use case.

- Configuration File Validation: Applications often rely on configuration files (e.g., in JSON format) to define their behavior. Using JSON Schema to validate these configuration files before the application starts can prevent startup failures due to malformed settings or incorrect values. This ensures that the application begins in a known, valid state. Syncfusion points this out as a key area where validation is essential.

- Data Serialization/Deserialization Checks: When data is being converted between different formats or stored/retrieved from databases or files, there’s a risk of data corruption or loss of integrity. JSON Schema can be used to validate the data immediately before or after serialization/deserialization, ensuring that the data remains consistent and accurate throughout these processes. This is crucial for maintaining data integrity during data pipelines, as noted by Syncfusion.

- Data Exchange Between Systems: In complex architectures like microservices, or in scenarios involving ETL (Extract, Transform, Load) processes, different systems need to exchange data reliably. JSON Schema provides a standardized contract for this data exchange. By defining schemas for the data being shared, developers can ensure that each system understands the expected format and can validate incoming data, preventing integration issues and data corruption. This interoperability is a significant benefit highlighted by Zuplo and Syncfusion.

- Form Input Validation: While form checks often run in client-side JavaScript, JSON Schema can also define the structure of data submitted through web forms. This lets the server validate submissions against the same rules the client uses, which matters because client-side checks can be bypassed.

- Data Migration and Transformation: When migrating data between different database schemas or transforming data for new use cases, JSON Schema can be used to define the source and target data structures, ensuring that the transformation process is accurate and that the resulting data is valid.
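Configuration validation usually means failing fast at startup. The sketch below is one way to do that. It uses a hypothetical `loadConfig` function and a small hand-rolled checker, `checkConfig`, that stands in for a real JSON Schema validator. The config fields `port` and `logLevel` are illustrative assumptions. The sketch rejects malformed JSON (via `JSON.parse`) as well as well-formed JSON that breaks the rules, and it reports all problems at once.

```javascript
// Illustrative config rules written with JSON Schema keywords.
const configSchema = {
  required: ["port", "logLevel"],
  properties: {
    port: { type: "integer", minimum: 1, maximum: 65535 },
    logLevel: { enum: ["debug", "info", "warn", "error"] },
  },
};

// Hand-rolled checker for just the keywords used above (hypothetical helper).
function checkConfig(schema, config) {
  const problems = [];
  const has = (key) => Object.prototype.hasOwnProperty.call(config, key);
  for (const key of schema.required) {
    if (!has(key)) problems.push(`missing "${key}"`);
  }
  for (const [key, rule] of Object.entries(schema.properties)) {
    if (!has(key)) continue; // absent optional keys are fine
    const value = config[key];
    if (rule.type === "integer" && !Number.isInteger(value)) {
      problems.push(`"${key}" must be an integer`);
      continue;
    }
    if (rule.minimum !== undefined && value < rule.minimum) problems.push(`"${key}" must be at least ${rule.minimum}`);
    if (rule.maximum !== undefined && value > rule.maximum) problems.push(`"${key}" must be at most ${rule.maximum}`);
    if (rule.enum !== undefined && !rule.enum.includes(value)) {
      problems.push(`"${key}" must be one of ${rule.enum.join(", ")}`);
    }
  }
  return problems;
}

// Parse and validate before the application starts; throw on any problem.
function loadConfig(text) {
  const config = JSON.parse(text); // throws SyntaxError on malformed JSON
  if (typeof config !== "object" || config === null || Array.isArray(config)) {
    throw new Error("invalid config: top level must be an object");
  }
  const problems = checkConfig(configSchema, config);
  if (problems.length > 0) throw new Error(`invalid config: ${problems.join("; ")}`);
  return config;
}

const config = loadConfig('{"port": 8080, "logLevel": "info"}');
console.log(config.port); // 8080

try {
  loadConfig('{"port": 70000}');
} catch (err) {
  console.error(err.message); // reports the missing logLevel and the out-of-range port
}
```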

In each of these scenarios, JSON Schema acts as a crucial tool for enforcing structure, defining expectations, and ensuring the integrity of JSON data. Its ability to provide clear, machine-readable definitions for data makes it an indispensable part of modern software development, particularly in distributed and API-driven environments. The application of validation using well-defined schemas and constraints is what makes these real-world applications robust and reliable.

Final Thoughts: Embracing JSON Schema for Reliable Data

As we’ve explored, JSON Schema is far more than just a technical specification; it’s a fundamental enabler of data integrity and system reliability. It provides a robust, declarative framework for automating the process of data validation, ensuring that your JSON data consistently meets predefined expectations.

By embracing the use of well-defined schemas and specific constraints, developers can proactively enforce data integrity. This systematic approach is critical for building applications that are not only functional but also resilient to the inevitable variations and potential errors in data input and exchange. The benefits extend from preventing runtime errors to enhancing developer collaboration and streamlining complex data pipelines.

We strongly encourage you to explore JSON Schema further and integrate it into your development workflows. Whether you’re building APIs, managing configuration files, or enabling data exchange between services, the practice of defining and enforcing data structures with schemas will lead to higher quality data, fewer bugs, and more predictable, stable system behavior. Leveraging this standard will undoubtedly improve the overall reliability and maintainability of your software projects.

For a comprehensive understanding and practical guidance, remember to consult resources like Zuplo, the official JSON Schema documentation, and insightful articles from Syncfusion and Biomadeira.

Frequently Asked Questions

What is JSON Schema used for?

JSON Schema is used to define the structure, constraints, and data types of JSON documents. Its primary purpose is for validation, ensuring that JSON data conforms to a predefined specification. This makes it invaluable for APIs, configuration files, data exchange, and more.

How does JSON Schema ensure data integrity?

JSON Schema ensures data integrity by providing a formal, machine-readable way to define rules for JSON data. These rules include specifying expected data types (string, number, boolean, etc.), formats (like email or date), and other constraints (like minimum length or allowed values). A validator then checks data against this schema, rejecting any data that violates the rules.

Is JSON Schema difficult to learn?

The basics of JSON Schema are relatively straightforward for anyone familiar with JSON. Core keywords like `type`, `properties`, and `required` are intuitive. However, mastering advanced features can require more effort and practice. These include schema composition (`allOf`, `anyOf`, `oneOf`), conditional validation (`if`/`then`/`else`), and referencing (`$ref`). Numerous resources are available to help users learn at their own pace.

Can JSON Schema be used for API documentation?

Yes. JSON Schema is a foundational component of API description formats like OpenAPI (formerly Swagger). OpenAPI 3.0 uses an extended subset of JSON Schema, and OpenAPI 3.1 builds directly on JSON Schema draft 2020-12. By defining request and response payloads with JSON Schema, you get structured documentation that clearly outlines data expectations for API consumers. This makes APIs easier to understand and integrate with.

What are some common constraints in JSON Schema?

Common constraints in JSON Schema include `minLength` and `maxLength` for strings, `minimum` and `maximum` for numbers, `format` for specific string patterns (like email or URI), `enum` to restrict values to a predefined list, and `uniqueItems` for arrays. These allow for very precise validation rules.

Are there libraries to validate against JSON Schema?

Yes, there are many libraries available for performing JSON Schema validation across various programming languages. Popular examples include Ajv (JavaScript), `jsonschema` (Python), JsonSchema.Net and NJsonSchema (C#), and others. These libraries make it easy to integrate schema validation into your applications.
