OpenAI’s New Pocket Device: The Screenless, Context-Aware AI Revolution

Estimated reading time: 8 minutes

Key Takeaways

- The rumored OpenAI pocket device, a screenless, context-aware AI gadget, represents a bold leap into ambient computing, shifting from screens to voice-first, environmental interactions.

- Speculation centers on a pen-shaped, ultra-portable gadget that is screenless and uses cameras and mics for context-aware AI, designed to complement phones and laptops.

- Speculated core functionality includes voice-first interaction, real-time translation, task automation, and seamless syncing, powered by advanced audio models.

- The device’s form factor, a wearable micro device, could make AI an always-accessible companion and a catalyst for future wearable AI interactions.

- Challenges like battery life, privacy, and connectivity will define its success, with a realistic launch possibly stretching into 2027.

Table of Contents

- OpenAI’s New Pocket Device: The Screenless, Context-Aware AI Revolution

- Key Takeaways

- The Rumor Mill Churns: Origins and Significance

- Core Design Philosophy: Screenless and Context-Aware

- Speculated Functionality: Voice-First and Proactive Features

- Form Factor and Wearable Future

- Comparative Landscape: How It Stacks Up

- Challenges and Implications

- Recap and Outlook

- Frequently Asked Questions

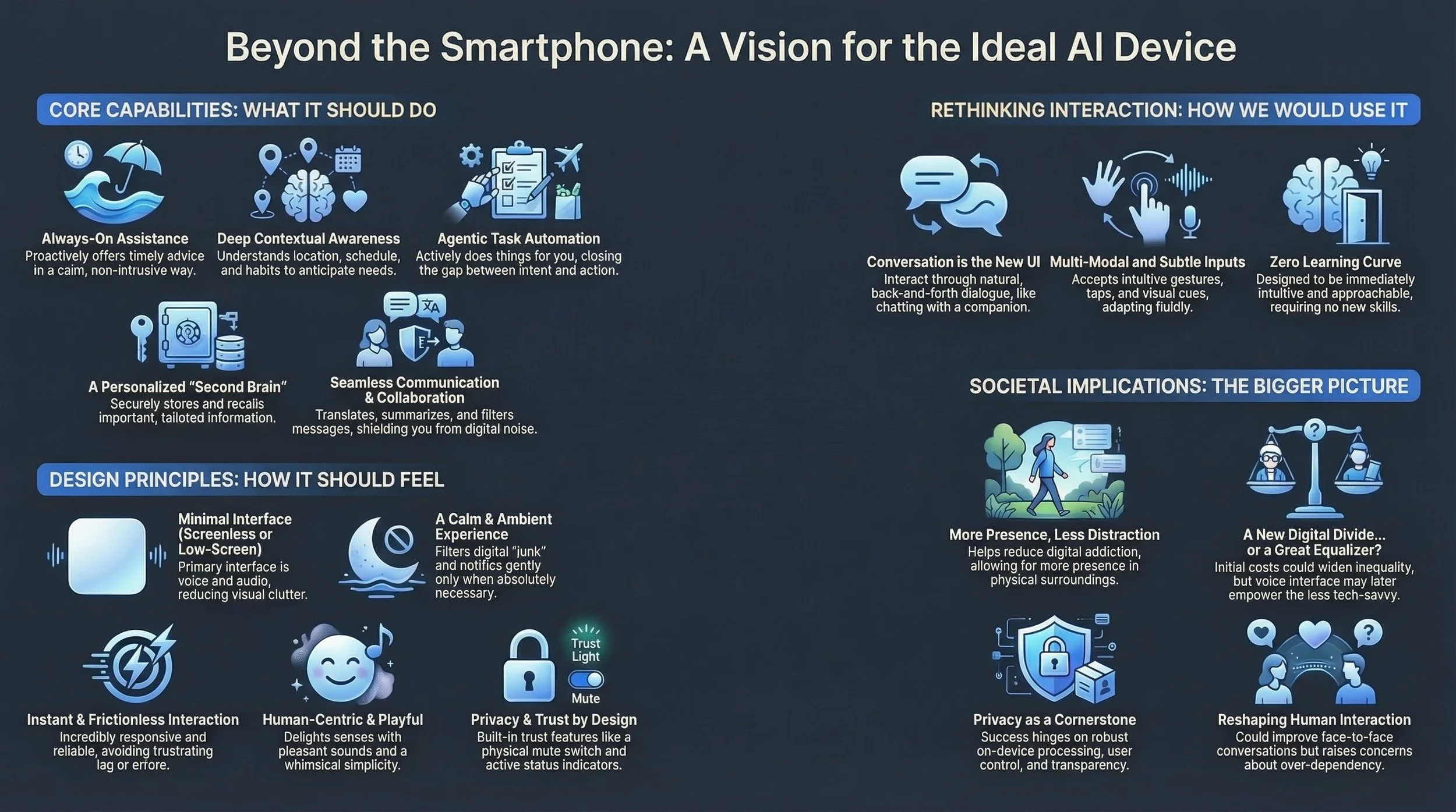

The tech world is abuzz with whispers of a new OpenAI pocket device: a screenless, context-aware AI gadget that could untether AI from our screens and embed it into the fabric of daily life. This speculation isn’t just about another smart device; it’s a vision of a mobile AI gadget whose functionality could redefine how we interact with technology, moving beyond taps and swipes to intuitive, voice-first companionship. In this article, we dive deep into every rumor, leak, and educated guess to paint a comprehensive picture of what this device might be, how it could work, and why it matters in the broader AI landscape.

The Rumor Mill Churns: Origins and Significance

Rumors of OpenAI’s foray into hardware began simmering with cryptic posts on X (formerly Twitter) from accounts like @kimmonismus, hinting at a “third core device” that would complement phones and laptops. These whispers gained credibility through OpenAI job listings seeking talent for “consumer hardware” and reports of collaboration with legendary designer Jony Ive’s firm, known for iconic Apple products. Analysts point to this as part of a strategic shift toward ambient computing, where AI becomes an invisible, always-present assistant rather than a tool we actively open.

Why does this matter? In an era dominated by screens, a screenless device represents a radical departure—a bet on context-aware AI that perceives your surroundings through audio and visual inputs to offer proactive help. Imagine a gadget that listens to a conversation and instantly translates it, or watches you scribble notes and digitizes them seamlessly. This isn’t just incremental innovation; it’s a step toward making AI as natural as breathing.

Our purpose here is to consolidate the early details on this speculated mobile AI gadget, from rumored specs to potential impacts. As we explore, keep in mind that this device could be the harbinger of a wearable future built on micro devices, where technology fades into the background, enhancing our capabilities without demanding our attention.

Core Design Philosophy: Screenless and Context-Aware

At the heart of the rumors is a device described as pen-shaped and ultra-portable, roughly the size of an iPod Shuffle, designed to be worn via a neck strap or clip. The concept eliminates screens entirely, relying instead on a microphone and camera for environmental awareness. The core philosophy? To create a context-aware AI that understands your activities, location, and conversations in real time, providing relevant assistance without you ever needing to look at a display.

What does “context-aware” mean in practice? It’s about the device processing audio-visual cues to infer needs. For instance:

- If you’re in a meeting, it might whisper summaries or action items via bone-conduction audio.

- If you’re traveling, it could offer translation or navigation hints based on street signs it “sees.”

- If you’re handwriting notes, it might sync them directly to ChatGPT for organization.

This approach leverages OpenAI’s advancements in multimodal AI, where models like GPT-4V (for vision) and upcoming audio models enable a deeper understanding of context. The screenless, context-aware design isn’t just a gimmick; it’s a deliberate move to reduce distraction and make interactions more human. As one report notes, the goal is to shift from “pull” interactions (where you ask for help) to “push” assistance (where the device anticipates your needs).
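To make the “pull” versus “push” distinction concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `Context` class, the `PUSH_ACTIONS` table, and the `choose_action` function are illustrative names, not anything OpenAI has announced, and the activity labels simply mirror the meeting, travel, and handwriting examples above. A real device would presumably infer context with multimodal models rather than a lookup table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    """Hypothetical snapshot of what the device has inferred about the user."""
    activity: str             # e.g. "meeting", "traveling", "handwriting"
    user_asked: bool = False  # True when the user explicitly requested help


# Hypothetical mapping from an inferred activity to a proactive ("push") action,
# taken from the examples in this article rather than from any real product.
PUSH_ACTIONS = {
    "meeting": "whisper summary and action items",
    "traveling": "offer translation or navigation hints",
    "handwriting": "sync notes to ChatGPT",
}


def choose_action(ctx: Context) -> Optional[str]:
    """Return what the assistant should do, or None to stay silent."""
    if ctx.user_asked:
        # "Pull": the user asked, so respond to the request directly.
        return "answer the user's request"
    # "Push": act only if the inferred activity has a known proactive action.
    return PUSH_ACTIONS.get(ctx.activity)


if __name__ == "__main__":
    print(choose_action(Context("meeting")))
    print(choose_action(Context("jogging")))
    print(choose_action(Context("traveling", user_asked=True)))
```

Returning `None` for unrecognized activities is a deliberate choice in this sketch: a proactive assistant that stays quiet when it has nothing relevant to offer is less likely to feel intrusive.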

This ties directly into the rumored user experience features, which we’ll explore next. But first, consider how this design philosophy aligns with the broader trend toward wearable micro devices: small, always-on gadgets that blend into our attire and routines.

Speculated Functionality: Voice-First and Proactive Features

At the core of the speculated functionality is voice-first interaction. OpenAI is reportedly developing upgraded audio models set to launch in Q1 2026, enabling faster, more natural conversations. This means the device could handle complex queries without lag, much like chatting with a knowledgeable friend. As sources suggest, these models would power everything from real-time translation to ambient information gathering.

Specific rumored user experience features include:

- Ambient Information Gathering: Using its camera and mic, the device can scan documents, recognize faces (with privacy controls), or listen to lectures to provide summaries or fact-checks on the fly.

- Real-Time Translation: Imagine speaking in English and having the device instantly output Spanish through a paired earpiece, breaking down language barriers seamlessly.

- Task Automation Based on Context: If the device knows you’re at the grocery store, it might pull up your list and suggest recipes based on what’s on sale. Or, if it detects you’re in a car, it could automatically queue up podcasts or navigate.

- Seamless Syncing with Phones/Laptops: The gadget wouldn’t replace your smartphone but complement it, offloading AI tasks to reduce battery drain and leveraging your phone’s display for visual outputs when needed.

The screenless, context-aware design shapes these features profoundly. Without a screen, output relies on audio cues, haptic feedback (like vibrations for notifications), and connected devices. This enables hands-free use, for example whispering reminders during a workout or automating note-taking from handwritten pages. It’s about creating a conversational interface that feels less like using a tool and more like collaborating with an intelligent assistant.

As industry observers note, this functionality could redefine virtual assistants, moving beyond simple commands to proactive, context-driven aid. The device might even learn your habits over time, offering personalized suggestions without being asked—a true step toward ambient computing.

Form Factor and Wearable Future

The rumored form factor, a pen-like or iPod Shuffle-sized micro device, is key to its appeal. This isn’t a bulky gadget to carry in a bag; it’s designed for constant portability as a wearable, possibly via a pendant, clip, or lanyard. Such a design ensures AI is always within reach, whether you’re jogging, in a meeting, or cooking dinner. This aligns with the vision of a wearable future in which micro devices make technology an extension of ourselves.

How does this enhance AI interactions? By being always-on and worn, the device can maintain persistent context tracking. It might remember conversations from hours ago, recognize locations you frequent, or monitor your daily patterns to offer timely interventions. For instance, if it hears you discussing a project deadline, it could later remind you to start working or suggest relevant resources. This turns the gadget into an ambient companion for human-AI collaboration, blurring the line between tool and partner.

Looking more broadly, this form factor points to a wearable future in which personal AI evolves toward anticipatory computing. Devices could proactively manage schedules, optimize health based on biometrics, or even mediate social interactions by providing conversation cues. As AI models grow more capable, such wearables might become indispensable for navigating complex daily workflows, making technology feel invisible yet omnipresent.

Comparative Landscape: How It Stacks Up

To understand OpenAI’s potential device, it’s useful to contrast it with existing products. The Humane AI Pin, for example, is a screenless pin that projects interfaces onto surfaces, while Ray-Ban Meta glasses incorporate cameras for photo-taking and basic AI queries. However, OpenAI’s rumored gadget differs in its pen-like versatility and deep ChatGPT integration. Unlike the Humane Pin, which aims to replace phones, OpenAI’s device is speculated to complement core gadgets, acting as a dedicated AI conduit that enhances rather than supplants.

Key rumored differentiators include:

- Minimalistic Design: No projector or bulky components, focusing instead on simplicity and wearability.

- Advanced Context-Awareness: Leveraging OpenAI’s multimodal models for richer environmental understanding compared to competitors’ more limited AI.

- Seamless Ecosystem Integration: Tighter sync with ChatGPT and other OpenAI tools, potentially offering unique features like instant note-syncing or code debugging.

These rumored user experience features could give OpenAI an edge, but success hinges on execution, which we’ll explore next.

Challenges and Implications

For all its promise, the device faces significant hurdles. Battery life is a prime concern: always-on sensing and AI processing are power-hungry, requiring innovative solutions like low-power chips or wireless charging cases. Privacy is another critical issue; an always-listening, always-watching gadget must employ robust privacy models, perhaps with local processing or explicit user controls to build trust. Connectivity is also key—reliable Wi-Fi or cellular links are essential for cloud-based AI, but offline functionality will be crucial for usability in dead zones.

Moreover, refining the device’s “personality” via AI improvements is vital. As analysts highlight, a screenless, context-aware gadget must balance helpfulness against intrusiveness, avoiding the “creepy” factor by being transparent about data use. Industry implications are profound: if successful, this device could accelerate the shift toward ambient computing, pushing competitors to innovate further. It might also spur new app ecosystems, developer tools, and ethical frameworks for wearable AI.

Recap and Outlook

In summary, the most compelling element of the OpenAI pocket device rumor is its screenless, context-aware design built for voice-first, proactive aid. From environmental smarts to note-syncing, the rumored user experience features point to a gadget that could make AI more intuitive and integrated into daily life.

A realistic outlook suggests potential delays beyond H2 2026 targets to early 2027, given challenges in audio models, privacy, and infrastructure. For verified news, follow official OpenAI announcements and Jony Ive updates, and treat X leaks as unconfirmed. As we await official details, imagine a future where screenless, context-aware AI becomes an invisible extension of thought, redefining routines through seamless interactions with micro devices. This vision of a wearable future isn’t just speculative; it’s a glimpse into how technology might soon fade into the background, empowering us without distraction.

Frequently Asked Questions

What is the OpenAI pocket device rumor based on?

It stems from leaks on X (e.g., @kimmonismus), OpenAI job listings for hardware, analyst reports, and collaborations with Jony Ive’s design firm, suggesting a screenless, context-aware AI gadget.

How would a screenless device work?

Through voice-first interaction, audio output, haptic feedback, and syncing with connected devices like phones for visual outputs. It uses cameras and mics for environmental awareness to provide proactive assistance.

What are the key rumored features?

Key features include real-time translation, ambient information gathering, task automation based on context, seamless syncing with other gadgets, and voice-powered ChatGPT integration.

When is the device expected to launch?

Initial targets pointed to H2 2026, but challenges with audio models and privacy may push it to early 2027. Follow official OpenAI channels for updates.

How does it compare to the Humane AI Pin or Ray-Ban Meta glasses?

It’s rumored to be more minimalistic (pen-shaped), with deeper ChatGPT integration and a focus on complementing phones rather than replacing them, offering advanced context-awareness.