Real-Time Multilingual Translation Technology: Breaking Global Barriers

Estimated reading time: 10 minutes

Key Takeaways

- Real-time multilingual translation technology leverages advanced AI to convert speech between languages instantly, enabling seamless cross-cultural communication.

- The core AI speech translation features chain three stages (speech recognition, neural machine translation, and voice synthesis) to produce fluid, natural-sounding conversations.

- Newer foundation models, such as the Gemini 2.5 Pro language model, are improving the accuracy and speed of live translation.

- Initiatives like Google’s underrepresented language support are critical for global inclusivity, bringing translation to over 40 less-common languages.

- Practical live conversation translation tools are already transforming international business, healthcare, education, and events.

- While challenges with accents, privacy, and idioms remain, the future points towards ubiquitous, immersive translation integrated into everyday life and work.

Table of Contents

- The Instant Language Revolution: A Hook

- What is Real-Time Multilingual Translation Technology?

- The Core Components: How the Magic Happens

- The AI Brain: Hybrid Architectures for Speed

- Step-by-Step: The Translation Pipeline

- The Next Leap: Gemini 2.5 Pro and Beyond

- Inclusion in Action: Google’s Underrepresented Language Support

- Tools in the Wild: Real-World Applications

- Navigating the Challenges: Limitations and Ethics

- The Future: Trends and Ubiquitous Integration

- Frequently Asked Questions

The Instant Language Revolution: A Hook

Imagine a world where a business negotiation in Tokyo, a medical consultation in Nairobi, and a classroom in Buenos Aires are no longer constrained by language. This is the promise of real-time multilingual translation technology: AI systems that combine speech recognition, contextual understanding, and speech synthesis to convert language instantly during live conversations. By removing one of the largest remaining barriers to global communication, this technology is becoming a foundation for collaboration and understanding, and it is moving us closer to seamless, AI-powered conversations everywhere by 2025.

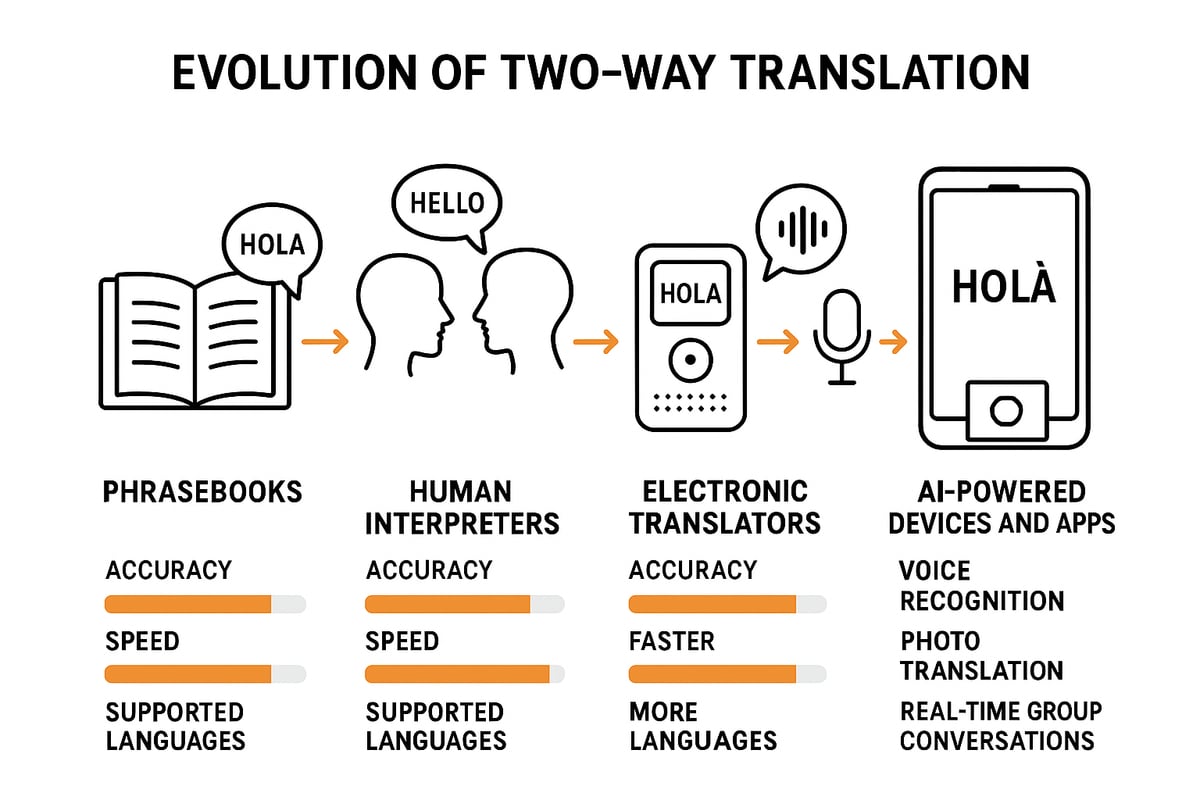

What is Real-Time Multilingual Translation Technology?

At its core, real-time multilingual translation technology refers to systems that provide immediate, accurate translation across multiple languages in live, dynamic settings. Unlike traditional translation, which works on static text, this technology operates on the fly: it processes spoken words as they are uttered and delivers a translated audio or text response with minimal delay. It underpins global business deals, cross-cultural collaboration, diplomatic exchanges, and everyday connection across borders. Familiar examples already sit in our pockets and on our computers, such as the conversation mode in Google Translate. As the world grows more interconnected, demand for instant understanding keeps rising. The sections below explain how the technology works, the advances (such as the Gemini 2.5 Pro language model) driving it forward, and inclusivity initiatives such as Google's underrepresented language support.

The Core Components: How the Magic Happens

The seamless experience of real-time translation rests on a set of AI speech translation features that work in three stages:

- Real-Time Speech-to-Text Transcription: The first step captures spoken words and converts them into text almost instantly. Modern systems are trained on large, diverse datasets so they can handle a wide range of accents and dialects, as well as overlapping speech from multiple speakers.

- Neural Machine Translation (NMT): This is where the actual language conversion happens. NMT uses deep learning models to translate the transcribed text, considering not just individual words but the full context of the sentence, including idioms and cultural nuances. This push for contextual accuracy is central to making interactions feel natural.

- Low-Latency Text-to-Speech Synthesis: The translated text is converted back into speech. The latest systems go beyond robotic voices and aim to preserve the original speaker's tone, emotion, and even vocal identity. This voice realism completes the illusion of a direct conversation and is one of the most prominent AI use cases for 2025.

Together, these components form a streaming pipeline that runs continuously while people speak. End-to-end processing is often fast enough to feel instantaneous, which is why many observers expect AI-powered conversation and real-time translation to become commonplace.
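To make the three-stage flow concrete, here is a minimal Python sketch of a streaming pipeline. The stage functions (`fake_asr`, `fake_nmt`, `fake_tts`) and the `translation_pipeline` helper are hypothetical stand-ins for real models, not any product's API. The sketch also translates each chunk independently, whereas production systems carry context across chunks.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical stage signatures: each stage is a plain function standing in
# for a real ASR, NMT, or TTS model call.
Transcribe = Callable[[bytes], str]
Translate = Callable[[str], str]
Synthesize = Callable[[str], bytes]


def translation_pipeline(
    audio_chunks: Iterable[bytes],
    transcribe: Transcribe,
    translate: Translate,
    synthesize: Synthesize,
) -> Iterator[bytes]:
    """Stream audio through ASR -> NMT -> TTS, one chunk at a time."""
    for chunk in audio_chunks:
        text = transcribe(chunk)
        if not text.strip():
            continue  # silence or noise: nothing to translate
        yield synthesize(translate(text))


# Toy stand-ins for the three models (assumptions, for illustration only).
def fake_asr(chunk: bytes) -> str:
    return chunk.decode("utf-8")


TOY_DICTIONARY = {"hello": "hola", "friend": "amigo"}


def fake_nmt(text: str) -> str:
    # Word-by-word lookup; unknown words pass through unchanged.
    return " ".join(TOY_DICTIONARY.get(w, w) for w in text.split())


def fake_tts(text: str) -> bytes:
    return f"<audio:{text}>".encode("utf-8")


chunks = [b"hello", b"   ", b"hello friend"]
for audio in translation_pipeline(chunks, fake_asr, fake_nmt, fake_tts):
    print(audio)
```

Because `translation_pipeline` is a generator, output for the first chunk is yielded before later chunks are processed, which is what lets a listener start hearing translated speech while the speaker is still talking.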

The AI Brain: Hybrid Architectures for Speed

Powering these AI speech translation features are sophisticated hybrid AI architectures designed for one paramount goal: speed without sacrificing accuracy. These are not single algorithms but interconnected systems:

- Neural Transformers: These models are exceptional at handling long-range dependencies in language, allowing them to grasp contextual nuances and the relationship between words that are far apart in a sentence.

- Convolutional Neural Networks (CNNs): Often deployed in the initial audio processing stage, CNNs help filter out background noise and enhance speech clarity from the raw audio input, which is vital for real-world, noisy environments.

- Generative Models: Used in the final text-to-speech stage, these models are trained to generate natural, fluid speech that mimics human vocal patterns.

By optimizing the entire pipeline, these architectures aim for end-to-end latencies under 300 milliseconds, roughly the duration of a human blink. This engineering work is what makes fluid, real-time interaction possible, and it remains an active focus of research on next-generation communication devices.
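A latency target like 300 ms is easiest to reason about as a budget split across stages. The sketch below adds up per-stage latencies for one chunk passing through a sequential pipeline; the stage names and millisecond values are illustrative placeholders, not measurements of any real system.

```python
# Hypothetical per-stage latencies in milliseconds. These numbers are
# placeholders for illustration, not measurements of any real system.
STAGE_LATENCY_MS = {
    "audio_capture_and_denoise": 20,
    "speech_recognition": 90,
    "machine_translation": 80,
    "speech_synthesis": 70,
    "network_round_trip": 30,
}

BUDGET_MS = 300  # end-to-end target mentioned in the text


def check_budget(stages: dict[str, int], budget_ms: int) -> tuple[int, int]:
    """Return (total latency, remaining headroom) for one chunk that passes
    through every stage in sequence."""
    total = sum(stages.values())
    return total, budget_ms - total


total, headroom = check_budget(STAGE_LATENCY_MS, BUDGET_MS)
print(f"total={total} ms, headroom={headroom} ms")
```

With these made-up numbers the pipeline fits with only 10 ms to spare, which illustrates why every stage, not just translation, has to be optimized: a single slow component can push the total past the target.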

Step-by-Step: The Translation Pipeline

Let’s walk through a typical millisecond-by-millisecond journey of a spoken phrase:

- 1. Audio Capture & Noise Filtering: A microphone captures the speaker’s voice. AI immediately works to isolate the speech from ambient noise like chatter or traffic.

- 2. Speech-to-Text Conversion with Accent Adaptation: The cleaned audio is fed into an automatic speech recognition (ASR) model. Large ASR models are trained on up to millions of hours of speech from around the world, which lets them transcribe many accents and regional dialects accurately.

- 3. Neural Translation with Context: The transcribed text is passed to a neural machine translation engine. Here, the model doesn't just translate word-for-word; it analyzes the full context to choose the most accurate and natural-sounding equivalent in the target language, and it attempts to handle tricky elements like sarcasm or local slang.

- 4. Text-to-Speech Generation with Voice Mimicry: Finally, the translated text is synthesized into speech. Advanced systems can now modulate pitch, pace, and timbre to sound more like the original speaker or a chosen voice profile, adding a layer of personalization and emotional resonance to the exchange.
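Step 1 can be illustrated with a much simpler technique than the AI models described above: an energy-based voice activity detector that drops quiet frames before they reach ASR. This is a classic baseline, not how any particular product filters noise, and the frame size, threshold, and synthetic signal are assumptions chosen for the example.

```python
import math
from typing import Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1.0, 1.0])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def speech_frames(
    signal: Sequence[float], frame_size: int, threshold: float
) -> list[int]:
    """Return the indices of frames whose RMS energy exceeds `threshold`.

    Frames below the threshold are treated as background noise and would be
    dropped before speech recognition.
    """
    kept = []
    for i in range(0, len(signal), frame_size):
        frame = signal[i : i + frame_size]
        if frame_rms(frame) > threshold:
            kept.append(i // frame_size)
    return kept


# Synthetic signal: quiet noise, then a louder "speech" burst, then quiet again.
quiet = [0.01, -0.01] * 80  # 160 samples of low-level noise
loud = [0.5, -0.5] * 80     # 160 samples of high-energy signal
signal = quiet + loud + quiet

print(speech_frames(signal, frame_size=160, threshold=0.1))  # [1]
```

Production systems rely on learned models because background chatter can be as loud as the speaker, and a fixed energy threshold cannot tell the two apart.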

The Next Leap: Gemini 2.5 Pro and Beyond

The rapid pace of AI innovation is exemplified by models like the Gemini 2.5 Pro language model. As one of the leading models of 2025, Gemini 2.5 Pro improves translation through better multilingual accuracy, faster processing, and stronger cultural adaptation than its predecessors. Models of this class are designed to sustain real-time performance in diverse and challenging environments, from busy trading floors to remote field sites. This reflects a broader trend of advanced AI features becoming standard, much as intelligent capabilities are now expected in the latest smartphones.

Compared to earlier models, the Gemini 2.5 Pro language model handles complex linguistic features better. It understands context more reliably, interprets colloquial slang more accurately, and translates quickly enough to keep lag to a minimum. This link between advanced foundation models and real-time multilingual translation technology is what makes live, seamless scenarios possible. Other powerful models are advancing the field too, such as Meta's SeamlessM4T for speech-to-speech translation across 36+ languages, and Google's own neural systems that continuously improve services like Live Translate. Together they reflect a competitive race toward instantaneous communication.

Inclusion in Action: Google’s Underrepresented Language Support

True global communication must be inclusive, which is why initiatives like Google's underrepresented language support matter. This effort has expanded translation and assistant capabilities to more than 40 less-common languages, with a focus on bilingual use and on languages that are often left out of technological progress. This work is vital for immigrant communities, remote populations, and the preservation of linguistic diversity, ensuring that the benefits of real-time multilingual translation technology reach everyone, not just speakers of dominant world languages.

This commitment involves developing algorithms that can learn from smaller datasets for languages such as Quechua, Igbo, and Nepali without sacrificing core accuracy. It is part of a broader movement to make technology universally accessible, a trend also visible in the push for inclusive AI camera features. By weaving these languages into its Translate and Assistant platforms, Google is helping to bridge digital divides and foster understanding in some of the world's most vulnerable communities, showing that AI can be a force for equitable connection.

Tools in the Wild: Real-World Applications

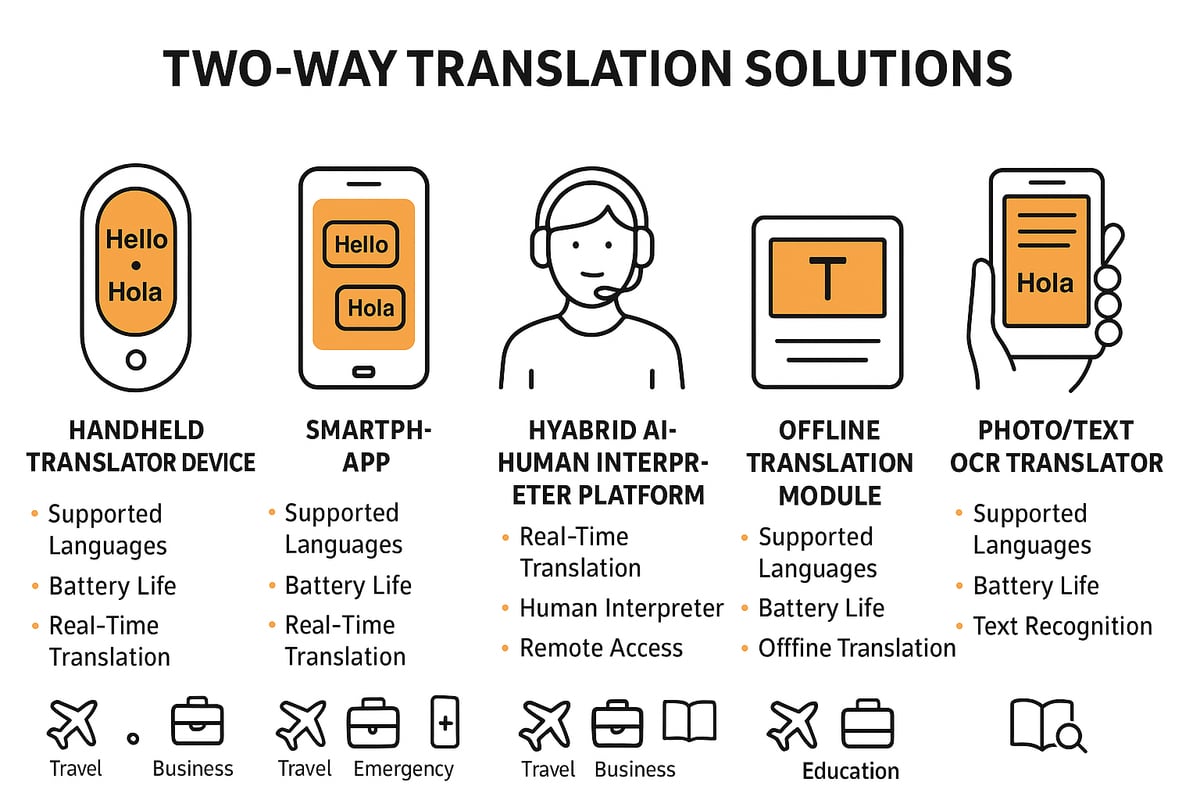

The theory comes to life through live conversation translation tools. Platforms such as Google Translate's live mode, Interprefy, Microsoft Teams interpreters, TranslAItor, and KUDO are already transforming sectors by enabling multilingual meetings, telehealth consultations, inclusive education, and global events with real-time captions and, in some cases, voice preservation. Like the intelligence features now built into personal devices, these tools show how AI is reshaping everyday experiences.

Let’s explore some concrete applications:

- Global Workplaces: Multinational teams use tools integrated into platforms like Zoom or Teams to conduct meetings where each participant hears or sees captions in their native language, breaking down silos and boosting productivity.

- Humanitarian & Public Services: Organizations like Tarjimly connect volunteer translators with refugees and immigrants for critical, on-demand translation in medical, legal, or social service settings.

- Healthcare: For Limited English Proficiency (LEP) patients, real-time translation apps on tablets or dedicated devices allow doctors to obtain accurate medical histories and explain procedures, which can improve care outcomes and patient safety.

- International Events: Summits like the AI for Good 2025 leverage these tools to provide live multilingual access to attendees worldwide, democratizing knowledge sharing.
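In a multilingual meeting, a natural design choice is to translate each utterance once per target language rather than once per listener. The following sketch assumes a hypothetical roster mapping participants to language codes and a toy `fake_translate` function in place of a real translation service.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical meeting roster: participant -> preferred caption language.
participants = {"amara": "sw", "kenji": "ja", "lucia": "es", "diego": "es"}


def fan_out_captions(
    utterance: str,
    source_lang: str,
    roster: dict[str, str],
    translate: Callable[[str, str, str], str],
) -> dict[str, str]:
    """Translate one utterance once per distinct target language, then give
    the result to every participant who asked for that language."""
    by_lang: dict[str, list[str]] = defaultdict(list)
    for person, lang in roster.items():
        by_lang[lang].append(person)

    captions: dict[str, str] = {}
    for lang, people in by_lang.items():
        if lang == source_lang:
            text = utterance  # no translation needed
        else:
            text = translate(utterance, source_lang, lang)
        for person in people:
            captions[person] = text
    return captions


translated_langs: list[str] = []


def fake_translate(text: str, src: str, tgt: str) -> str:
    """Toy translator that tags the text and records each call."""
    translated_langs.append(tgt)
    return f"[{tgt}] {text}"


captions = fan_out_captions("Good morning", "en", participants, fake_translate)
print(captions)
print(len(translated_langs))  # 3
```

Four participants produce only three translation calls, because the two Spanish speakers share one result. At event scale, this grouping keeps cost and latency proportional to the number of languages rather than the number of attendees.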

The integration of AR/VR for immersive communication is the next frontier, pointing to a future where these live conversation translation tools become as commonplace and user-friendly as text messaging.

Navigating the Challenges: Limitations and Ethics

Despite spectacular advances, real-time multilingual translation technology is not without its hurdles. Key challenges include:

- Linguistic Complexity: Strong accents, unfamiliar dialects, regional slang, and cultural idioms can still trip up even advanced models such as the Gemini 2.5 Pro language model, with accuracy estimated to fall to 85-90% in uncontrolled tests. The subtleties of humor, sarcasm, and formal honorifics remain particularly difficult.

- Technical Limitations: High-noise environments (e.g., factories, crowded streets) increase error rates and latency. Maintaining sub-300 ms latency while ensuring high-fidelity translation requires substantial computational power and optimized algorithms.

- Privacy and Security: Processing sensitive conversations—be it corporate strategy or personal health data—demands robust, end-to-end encryption. Users must trust that their words are not being stored or misused, a significant concern in fields like legal and emergency response translation.

- Ethical Considerations: The potential loss of vocal identity or emotional nuance in translation is an ongoing issue. Furthermore, over-reliance on technology could inadvertently diminish the incentive to learn other languages and the deep cultural understanding that comes with it.

The industry is actively working to mitigate these issues through more contextual AI, better data governance, and hybrid human-AI review systems, ensuring the technology develops responsibly.

The Future: Trends and Ubiquitous Integration

The trajectory for real-time multilingual translation technology points towards ubiquitous, seamless integration into the fabric of daily life and work. Key trends on the horizon include:

- Ubiquitous Adoption: Translation will become a built-in feature of web browsers, productivity apps, operating systems, and IoT devices, disappearing into the background as a utility we simply expect to work.

- Broader Language Inclusion: Efforts like Google’s underrepresented language support will expand, bringing thousands more language pairs into the fold and dramatically shrinking the digital language divide.

- Immersive Communication: The convergence with AR and VR will create stunning new experiences. Imagine AR glasses that subtitle the world in real-time or VR meeting rooms where everyone speaks and hears in their own language, powered by models like the Gemini 2.5 Pro language model.

- AI Dubbing and Content Creation: The same core technology will revolutionize media, enabling instant dubbing of videos and podcasts that preserve the speaker’s original voice and sync lip movements, a clear example of how generative AI is changing creative work.

- Hybrid Human-AI Workflows: In sensitive domains (legal, medical), the future lies in “human-in-the-loop” systems where AI handles the initial rapid translation, and a human expert provides real-time oversight and correction for critical nuances.
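A human-in-the-loop workflow needs a rule for deciding which AI output goes straight to the listener and which is held for review. One simple approach, sketched below, routes segments by a confidence score with stricter thresholds in high-stakes domains. The `Segment` fields, the availability of a per-segment confidence score, and the threshold values are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    text: str          # machine translation of one utterance
    confidence: float  # model-reported score in [0, 1] (assumed available)
    domain: str        # e.g. "general", "medical", "legal"


# Hypothetical policy: stricter thresholds for high-stakes domains.
REVIEW_THRESHOLDS = {"general": 0.6, "medical": 0.9, "legal": 0.9}


def route(segment: Segment) -> str:
    """Return "auto" to deliver the AI output directly, or "human" to send
    it to an interpreter for real-time review first."""
    # Unknown domains fall back to the strict threshold.
    threshold = REVIEW_THRESHOLDS.get(segment.domain, 0.9)
    return "auto" if segment.confidence >= threshold else "human"


print(route(Segment("Take two tablets daily.", 0.85, "medical")))  # human
print(route(Segment("See you tomorrow.", 0.85, "general")))        # auto
```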

As analysts predict, AI-powered conversations and real-time translation will become ubiquitous, reshaping global interaction as profoundly as the internet did.

Frequently Asked Questions

How accurate is real-time translation technology today?

For common language pairs in clear audio conditions, leading live conversation translation tools can achieve over 90% accuracy on straightforward conversation. However, accuracy can drop with strong accents, technical jargon, or complex cultural phrases. The technology is continually improving, with each new model generation making significant strides.
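Accuracy percentages can be computed in several ways. For the speech-recognition stage, a standard metric is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's transcript into a reference transcript, divided by the number of reference words. A WER of 0.10 is sometimes described informally as 90% word accuracy. Translation quality itself is usually scored with different metrics, such as BLEU or COMET. A minimal WER implementation:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words,
    computed with a word-level Levenshtein edit distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dp[i][j] = edits to turn the first i reference words into the first j
    # hypothesis words.
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )
    return dp[len(ref)][len(hyp)] / max(len(ref), 1)


print(word_error_rate("please take the blue pill", "please take a blue pill"))  # 0.2
```

Here one substituted word in a five-word sentence gives a WER of 0.2.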

What’s the difference between real-time translation and traditional translation apps?

Traditional apps often require you to type or paste text, then wait for a translation. Real-time multilingual translation technology is designed for live, streaming audio. It listens, transcribes, translates, and speaks back continuously, enabling a natural, back-and-forth conversation without manual steps.

Is my privacy protected when using these tools?

Privacy policies vary by provider. Reputable companies encrypt audio data during transmission and often process it ephemerally (without long-term storage) for the sole purpose of translation. For sensitive conversations, it’s crucial to use tools that offer end-to-end encryption and clearly state they do not store your data. Always review the privacy settings and terms of service.

Can these tools handle specialized vocabulary, like medical or legal terms?

General-purpose tools may struggle with highly specialized terminology. However, many enterprise-focused live conversation translation tools allow for custom glossary uploads. Users can add specific terms and their approved translations, which the AI will then prioritize, making them suitable for professional use in controlled settings.
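One common way to enforce a glossary is placeholder substitution: protect each glossary term with a token the translation model will leave alone, translate, then swap in the approved target-language term. Commercial tools implement glossaries in their own ways; the sketch below only illustrates the idea, and the glossary entry and word-by-word `fake_translate` are hypothetical.

```python
import re
from typing import Callable


def translate_with_glossary(
    text: str,
    glossary: dict[str, str],
    translate: Callable[[str], str],
) -> str:
    """Translate `text` while forcing approved translations for glossary terms."""
    placeholders: dict[str, str] = {}
    # Handle longer terms first so a multi-word term is not split by a shorter one.
    for n, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{n}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        text, count = pattern.subn(token, text)
        if count:
            placeholders[token] = glossary[term]
    translated = translate(text)
    # Restore each placeholder with the approved target-language term.
    for token, approved in placeholders.items():
        translated = translated.replace(token, approved)
    return translated


# Hypothetical glossary and toy word-by-word "translator" (English -> Spanish).
GLOSSARY = {"myocardial infarction": "infarto de miocardio"}
TOY_WORDS = {"the": "el", "patient": "paciente", "had": "tuvo", "a": "un"}


def fake_translate(text: str) -> str:
    # Unknown words, including placeholder tokens, pass through unchanged.
    return " ".join(TOY_WORDS.get(w, w) for w in text.split())


result = translate_with_glossary(
    "the patient had a myocardial infarction", GLOSSARY, fake_translate
)
print(result)  # el paciente tuvo un infarto de miocardio
```

A real neural model can occasionally drop or alter placeholders, so a production system would likely need to check that every token survived translation before restoring the approved terms.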

Will this technology make human translators obsolete?

No. Instead, the role of human linguists is evolving. While AI handles instantaneous, high-volume, or routine translation, human experts are increasingly needed for tasks like training AI models on rare languages, post-editing critical documents, providing cultural consultancy, and overseeing translations in high-stakes scenarios like diplomacy or publishing. The future is one of collaboration, not replacement.