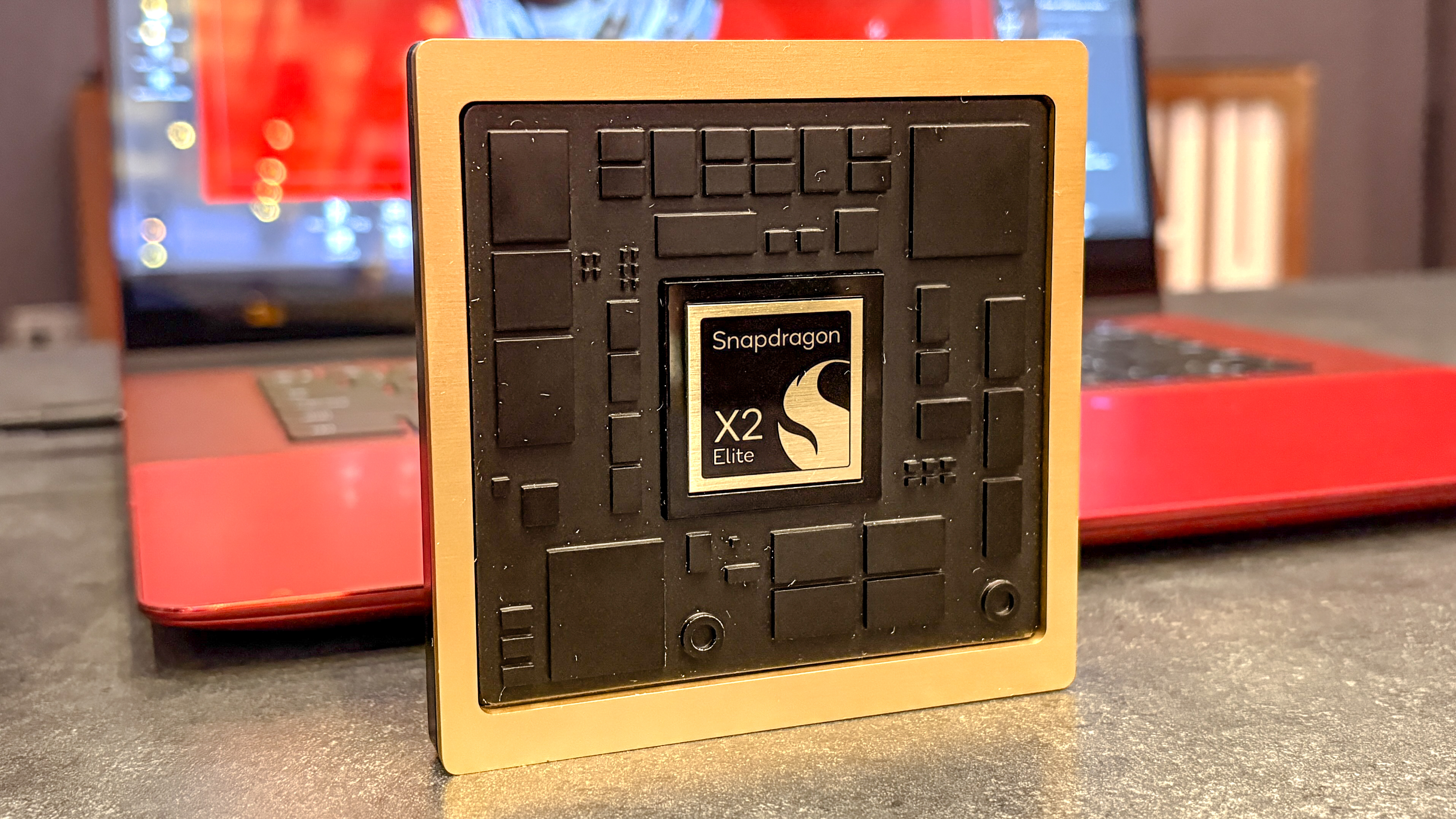

The Dawn of the AI PC: Why the Snapdragon X2 Elite Matters Now

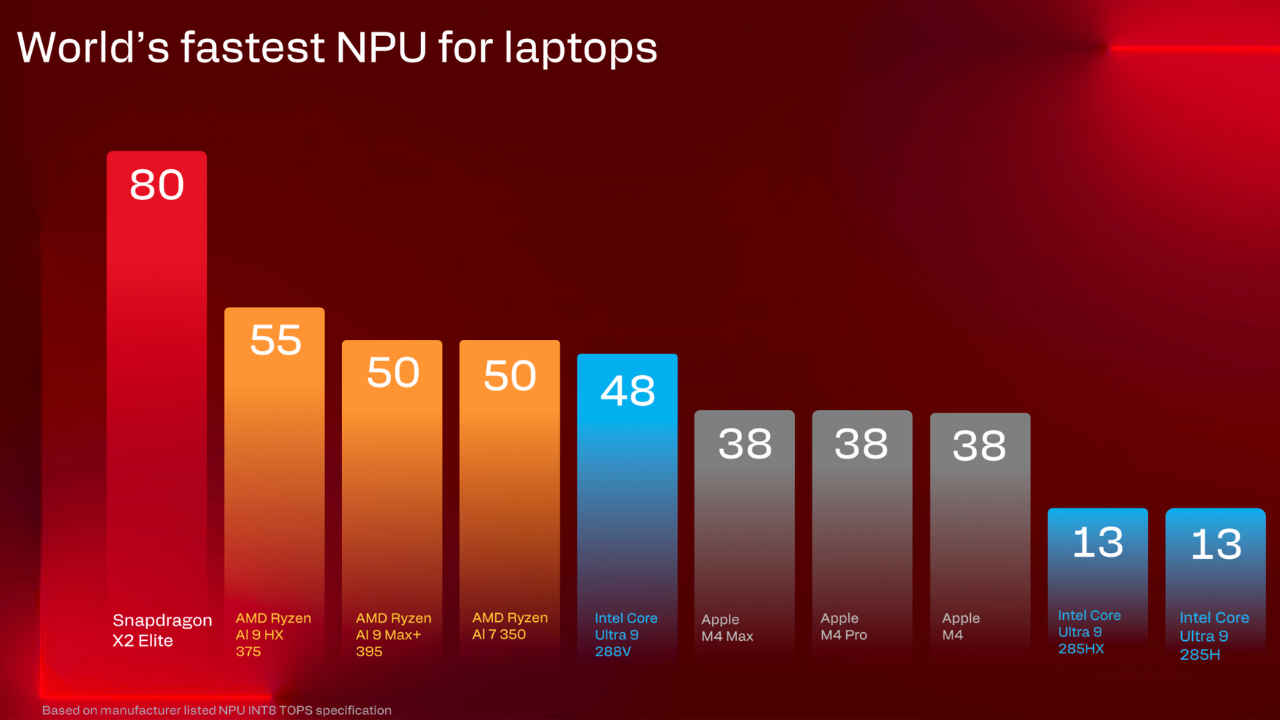

Much of the conversation about the next generation of personal computing hinges on one critical specification: Neural Processing Unit (NPU) performance. If you are invested in the future of computing, particularly the arrival of the genuine AI PC, reading a detailed Snapdragon X2 Elite 80 TOPS NPU performance review is no longer optional; it is essential.

Qualcomm has officially launched its second‑generation Windows AI PC solution: the Snapdragon X2 Elite platform. This chipset is fundamentally designed around its headline feature: the **80 TOPS Hexagon NPU**. This massive throughput is specifically engineered to handle demanding Copilot+‑class workloads and to support the pervasive use of generative AI directly on the device, moving intensive tasks off the cloud and onto your laptop. This shift marks a significant pivot for Windows hardware.

Qualcomm is making aggressive performance claims to back up this hardware push. It cites an **up to 78% NPU performance uplift** over the 45 TOPS first-generation Snapdragon X Elite, alongside improved power efficiency. The chips are built on a 3 nm process, and their new Oryon prime cores boost to clock speeds of up to 5 GHz.
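The 78% figure appears to follow directly from the ratio of the two peak TOPS ratings rather than from a measured application speedup. A quick check, using only the figures quoted in this article:

```python
# Peak INT8 TOPS ratings as quoted in this article (vendor claims, not measurements).
X_ELITE_GEN1_TOPS = 45
X2_ELITE_TOPS = 80


def uplift_percent(new: float, old: float) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new / old - 1.0) * 100.0


print(f"Peak NPU uplift: {uplift_percent(X2_ELITE_TOPS, X_ELITE_GEN1_TOPS):.1f}%")
```

Because both inputs are peak ratings, the result (about 77.8%, rounded to 78% in marketing) is a ceiling on throughput gains; task-level results depend on the model and the software stack.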

This article aims to deliver a comprehensive Snapdragon X2 Elite 80 TOPS NPU performance review. Our goal is to help you identify the best processors for on-device generative AI by dissecting this technical leap, exploring real-world usage scenarios, assessing its capability for local LLMs, and contrasting it with Qualcomm's other offerings, from the first-generation X Elite to the Snapdragon 8 series.

Understanding the Benchmark – What is an 80 TOPS NPU and What Can It Do?

To begin, we must address the core question: what is an 80 TOPS NPU, and what can it do?

TOPS stands for Tera (trillion) Operations Per Second, the industry-standard metric for the peak throughput of an AI accelerator. The Snapdragon X2 Elite’s **Hexagon NPU achieves up to 80 TOPS** when measured on INT8 (8-bit integer) operations, the precision commonly used for quantized inference.
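Peak TOPS ratings are generally derived from hardware parameters: each multiply-accumulate (MAC) unit counts as two operations (one multiply, one add) per clock cycle. This article does not give the Hexagon NPU's MAC count or clock speed, so the sketch below works backwards from 80 TOPS using hypothetical clock speeds, purely to show the scale of parallelism the number implies.

```python
def peak_tops(mac_units: int, clock_ghz: float) -> float:
    """Theoretical peak TOPS: every MAC unit does 2 ops (multiply + add) per cycle."""
    ops_per_second = 2 * mac_units * clock_ghz * 1e9
    return ops_per_second / 1e12


def macs_needed(target_tops: float, clock_ghz: float) -> float:
    """Parallel MAC units required to reach `target_tops` at a given clock."""
    return target_tops * 1e12 / (2 * clock_ghz * 1e9)


# Hypothetical NPU clocks for illustration only; the real Hexagon figures are not stated here.
for clock in (1.0, 1.5, 2.0):
    print(f"{clock} GHz -> {macs_needed(80, clock):,.0f} INT8 MAC units")
```

Whatever the real configuration, the rating assumes every MAC unit is busy on every cycle, which real models rarely sustain.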

This figure is not the output of a single engine. The 80 TOPS rating comes from the NPU’s scalar, vector, and matrix engines working in parallel, each handling the part of an AI workload it suits best; the matrix engine, for example, targets the dense multiply-accumulate operations that dominate neural-network inference. This coordination is key to its efficiency.

In practice, what does 80 TOPS mean for the end user? It provides headroom to run multiple AI workloads at the same time without dragging down overall system performance: real-time background vision processing for video calls, always-on transcription, and Windows features such as Recall running entirely on the device. Offloading this work from the CPU and GPU to the more power-efficient NPU helps preserve battery life, a core requirement for any viable laptop platform.

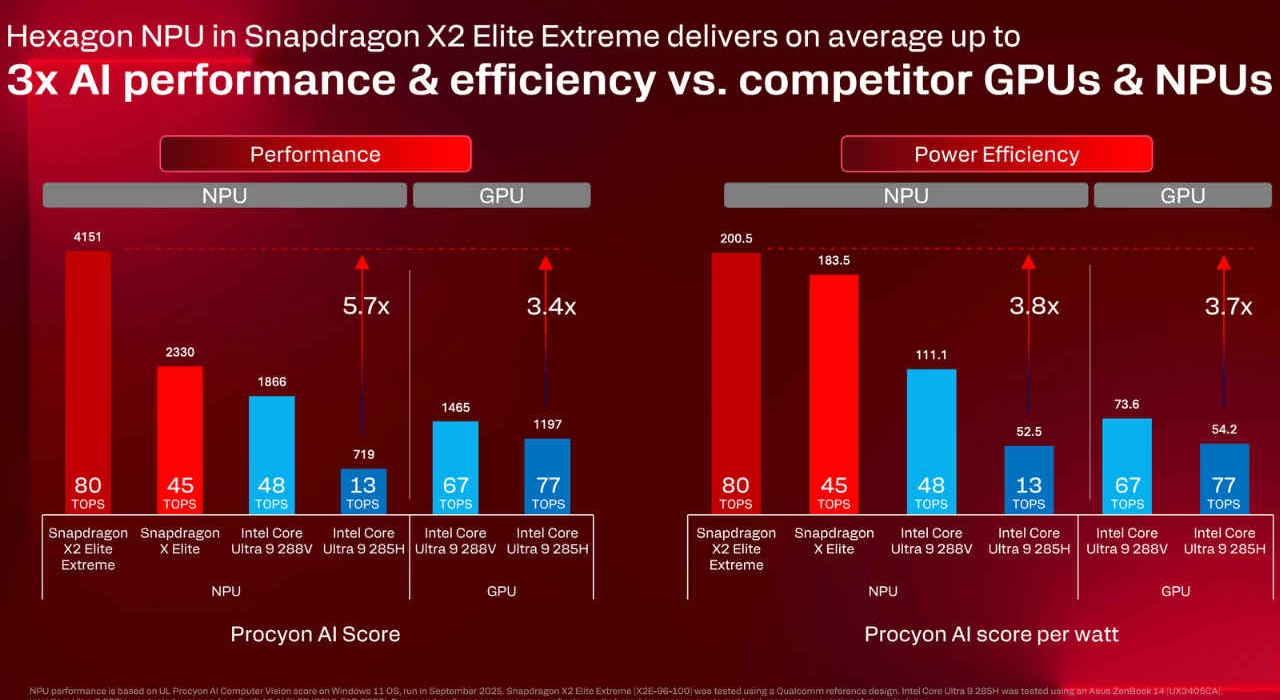

The impact shows up in demanding professional applications. The NPU enables features such as real-time image upscaling and filtering in professional tools, reportedly bringing significant gains for partners like Adobe. Its throughput is also evident in computer vision, where it reportedly scores above 4,100 in the UL Procyon AI Computer Vision benchmark.

However, a responsible review requires caveats. The headline 80 TOPS figure applies specifically to INT8 workloads; throughput at higher precisions such as FP16 or FP32 will be lower, though likely still improved over the previous generation. The final user experience also depends on the maturity and optimization of the software stack, including Windows ML and DirectML, and on each OEM’s cooling design.
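To make "INT8 workloads" concrete: for INT8 inference, floating-point weights are mapped to 8-bit integers plus a scale factor. The sketch below shows the simplest variant, symmetric per-tensor quantization, in plain Python. It illustrates the general technique only, not Qualcomm's toolchain; production tools typically add refinements such as per-channel scales and calibration data.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to [-127, 127] so the integer range stays symmetric around zero.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


weights = [0.82, -1.27, 0.003, 0.5]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

print("int8 codes:", q)
# Each restored value is off by at most scale / 2 (the rounding error): the
# precision traded away for compact 8-bit storage and fast integer math.
print("max error:", max(abs(r - w) for r, w in zip(restored, weights)))
```

Note that the small value 0.003 rounds to zero here, which shows why tensors with large outliers often need finer-grained scales.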

The Verdict – A Deep Dive into the Snapdragon X2 Elite NPU Performance Review

This section gathers the core data for our analysis: the published figures and early benchmark results behind this Snapdragon X2 Elite 80 TOPS NPU performance review.

The NPU does not operate in isolation; it is part of the larger X2 Elite platform built on a 3 nm process. The platform offers up to 18 high-performance Oryon CPU cores, a redesigned Adreno X2-90 GPU, and support for fast LPDDR5X memory with bandwidth of **up to 228 GB/s**.

Focusing on the NPU itself, the new Hexagon design delivers those 80 INT8 TOPS, and Qualcomm claims **37% higher NPU performance and 16% less power** consumption than the first-generation X Elite when executing identical tasks. That task-level figure is well below the 78% gap between the peak TOPS ratings, a reminder that peak throughput does not translate one-for-one into application speed. The efficiency gain matters as much as the raw speed increase.
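The two efficiency figures combine in a way that is easy to misread. Assuming (and this is an assumption, since the exact test conditions are not given here) that both apply to the same workload at the same time, performance per watt and energy per task work out as follows:

```python
# Vendor-claimed ratios vs. the first-generation X Elite, as quoted above.
PERF_GAIN = 1.37    # 37% higher NPU performance
POWER_RATIO = 0.84  # 16% less power


def perf_per_watt_ratio(perf_gain: float, power_ratio: float) -> float:
    """Relative performance per watt."""
    return perf_gain / power_ratio


def energy_per_task_ratio(perf_gain: float, power_ratio: float) -> float:
    """Energy = power x time, and time scales as 1 / performance."""
    return power_ratio / perf_gain


print(f"perf/W: {perf_per_watt_ratio(PERF_GAIN, POWER_RATIO):.2f}x")
print(f"energy per task: {energy_per_task_ratio(PERF_GAIN, POWER_RATIO):.2f}x")
```

Under that assumption, each task would use roughly 39% less energy. If the two figures were instead measured separately (for example, speed at equal power and power at equal speed), they should not be multiplied together.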

Early synthetic benchmarks are consistent with these claims. Reported **Geekbench AI scores above 88,000** position the X2 Elite favorably against contemporary mobile NPUs in standardized testing, and the Procyon AI Computer Vision score of over 4,100 points to strong performance on vision inference pipelines.

Application results make the gains tangible for creative professionals. Early benchmarks shared by software partners suggest up to **about 28% faster photo editing in Photoshop** and **about 43% faster exports in Lightroom** on X2 systems compared with previous Snapdragon X platforms.

The platform’s main strength lies in concurrency: the hardware’s ability to manage many AI tasks at once. In practice, that means running Copilot features, local vision models, and a background generative task without noticeable lag in your foreground applications, while maintaining what Qualcomm describes as industry-leading mobile power efficiency.

Local Powerhouse – Running Local Large Language Models on Snapdragon X2

For advanced users, the key question is how well the hardware handles running local large language models on Snapdragon X2.

The X2 Elite combines the two ingredients local LLMs need most: fast acceleration and ample memory. It pairs the dedicated **80 TOPS NPU** for inference with up to **48 GB of LPDDR5X** system memory and memory bandwidth of up to **228 GB/s**. Memory capacity is often the biggest limiter for local LLMs.
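A rough rule of thumb shows why capacity matters: a model's weights occupy about (parameter count × bytes per parameter). The sketch below applies that rule to the 48 GB ceiling quoted above. The 16 GB held back for Windows, apps, the KV cache, and runtime overhead is a hypothetical allowance, not a measured figure; adjust it to your own setup.

```python
# Approximate storage per weight at common inference precisions.
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}

MAX_RAM_GB = 48.0   # "up to" configuration quoted in this article
RESERVED_GB = 16.0  # hypothetical allowance for OS, apps, KV cache, runtime


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in GB (1e9 bytes); ignores activations and KV cache."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_in_memory(params_billion: float, precision: str,
                   total_gb: float = MAX_RAM_GB,
                   reserved_gb: float = RESERVED_GB) -> bool:
    """True if the weights fit in the memory left after the reserved allowance."""
    return weights_gb(params_billion, precision) <= total_gb - reserved_gb


for params in (3, 8, 30):
    for precision in ("FP16", "INT8", "INT4"):
        size = weights_gb(params, precision)
        verdict = "fits" if fits_in_memory(params, precision) else "too large"
        print(f"{params}B {precision}: {size:.1f} GB -> {verdict}")
```

Because this memory is shared by the CPU, GPU, and NPU, the model's budget also competes with everything else running on the laptop.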

This configuration suggests solid performance for local models. We expect small to mid-sized models, generally 3B to 8B parameters quantized to INT4 or INT8, to run in near real time, enabling fluid offline conversations and summarization without cloud dependency.
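Why should quantized 3B to 8B models feel responsive? During token-by-token generation, a model typically streams most of its weights from memory for every token, so memory bandwidth sets a ceiling on decode speed. The sketch below divides the quoted peak bandwidth by the weight footprint. It is a back-of-the-envelope upper bound under that assumption, ignoring KV-cache traffic, compute limits, and the fact that sustained bandwidth is usually below the peak rating.

```python
PEAK_BANDWIDTH_GB_S = 228.0  # "up to" LPDDR5X bandwidth quoted in this article


def decode_ceiling_tokens_per_s(weights_gb: float,
                                bandwidth_gb_s: float = PEAK_BANDWIDTH_GB_S) -> float:
    """Upper bound on tokens/s if each token requires reading all weights once."""
    return bandwidth_gb_s / weights_gb


# Weight sizes follow params x bytes-per-param (INT4 = 0.5 B, INT8 = 1 B).
models = {"3B INT4": 1.5, "8B INT4": 4.0, "8B INT8": 8.0}
for name, size_gb in models.items():
    print(f"{name}: at most ~{decode_ceiling_tokens_per_s(size_gb):.1f} tokens/s")
```

Real throughput will land below these ceilings, but even half of them would exceed typical human reading speed, which is consistent with the near-real-time expectation for this class of model.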

Beyond text, the platform is well suited to multimodal workloads. Here the NPU can analyze visual input (from a camera feed or a file) alongside a language model, a capability essential for building private AI assistants that understand and react to both what you type and what you see.

This places the X2 Elite firmly among the best processors for on-device generative AI. It is not merely a general-purpose CPU with an attached accelerator; it is a platform engineered specifically for persistent, local AI tasks. The combination of dedicated 80 TOPS hardware, substantial memory capacity, and high peak CPU clock speeds (up to 5.0 GHz) provides a balanced architecture that avoids the bottlenecks common in less specialized silicon.

The Family Tree – Qualcomm Snapdragon X Series vs 8 Series for AI

To fully appreciate the X2 Elite, we must compare its architectural role with Qualcomm’s other major offerings, addressing the common question of Qualcomm Snapdragon X series vs 8 series for AI.

The **Snapdragon X series** is explicitly designed as the flagship PC platform. It is engineered for sustained performance, operating within higher Thermal Design Power (TDP) envelopes suitable for thin-and-light laptops. It supports massive LPDDR5X memory subsystems and features higher core counts (up to 18 cores) necessary for desktop-like productivity loads.

In contrast, Snapdragon 8-series chips are optimized for the constrained environment of smartphones. They prioritize extreme power efficiency for short, bursty tasks and work with smaller NPUs, much lower memory bandwidth ceilings, and tighter thermal limits.

In a direct AI comparison, 8-series NPUs provide excellent efficiency for mobile-centric tasks like on-device photo processing, but the X2 Elite’s **80 TOPS NPU** offers substantially higher raw throughput. More critically, the X series has the memory headroom to host larger multi-billion-parameter models that an 8-series device would be unable to load or run effectively.

For the prospective buyer, the guidance is clear: if your priority involves heavy, continuous AI workloads, such as extensively **running local large language models**, advanced real-time visual processing, or sustained use of complex Copilot+ features, the X series is the right architectural path. Its stronger CPU performance, large cache (up to 53 MB), and high-bandwidth memory subsystem help keep AI tasks from creating bottlenecks across the entire system.

Frequently Asked Questions

What exactly is the difference between 45 TOPS and 80 TOPS performance?

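The main difference is peak throughput: 80 TOPS is about 1.78 times the 45 TOPS of the first-generation X Elite. That headroom can translate into faster inference, larger or more complex models, or more concurrent features (for example, a transcription service and an image analysis tool running at the same time) with less contention than on the 45 TOPS generation. Real-world speedups depend on the workload and software stack and will usually be smaller than the peak ratio, as Qualcomm’s own claim of 37% higher performance on identical tasks suggests.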

Can the Snapdragon X2 Elite run GPT-4 locally?

No. GPT-4’s weights are not publicly available, so it runs only as a cloud service, and an unquantized model of that scale would be unlikely to run locally given current model sizes anyway. The X2 Elite does have the memory and speed for sizable models: quantized open-weight models in roughly the 15B to 30B parameter range can fit in the available system RAM and may be accelerated by the 80 TOPS NPU where the software stack supports them, though they should not be expected to match GPT-4’s capabilities.

Is the 80 TOPS measurement standardized across all vendors?

No. Qualcomm’s 80 TOPS figure is a peak rating for INT8 workloads. It is a useful like-for-like comparison against the previous generation (45 TOPS INT8), but vendors do not all quote TOPS under the same conditions: figures can differ in numeric precision (INT8, INT4, FP16) or in whether sparsity is assumed, and there is no simple conversion factor between them. Cross-vendor comparisons are more meaningful when based on application benchmarks running on mature, well-optimized software stacks.

How does the 3nm process impact AI performance?

The smaller 3 nm process lets Qualcomm pack more transistors into the NPU and memory controllers. Together with architectural changes, this contributes to the jump to 80 TOPS while improving power efficiency per operation, which is vital for delivering high AI performance in a battery-powered laptop chassis.

What does the term Copilot+ class workloads mean in practice?

This refers to the suite of advanced, always-on AI features Microsoft is building into Windows, such as Recall, advanced live captioning, and more contextual search. These features require sustained NPU processing power; Microsoft’s Copilot+ PC requirements call for an NPU rated at 40+ TOPS, a bar that most earlier laptop silicon could not meet.

The Final Assessment: Is the Snapdragon X2 Elite Worth the Investment for AI?

In summarizing the findings of this Snapdragon X2 Elite 80 TOPS NPU performance review, one fact stands out: the X2 Elite represents a major architectural leap for the Windows on Arm ecosystem. With the **80 TOPS NPU** as its core feature, the chip is positioned as a potential market leader in the laptop space, showing significant generational gains over its already capable predecessor.

For consumers investigating the best processors for on-device generative AI, the X2 Elite delivers on the promise of true AI hardware. It provides the throughput needed to run complex local AI tasks efficiently, which should mean users are not tethered to a power outlet just to use these new features.

The recommendation is strong: if you intend to lean heavily on AI capabilities in your next mobile workstation, specifically running LLMs locally, high-speed visual processing, or constant, low-latency Copilot+ features, then the Snapdragon X2 Elite is arguably one of the most compelling and future-proof silicon architectures currently available in the Arm PC landscape. Investing in the X2 Elite is prudent if local AI acceleration is a primary purchasing criterion for your next laptop.