UK AI Security Institute Frontier AI Trends: First Public Report Unveils Critical Insights


Key Takeaways

- The UK AI Security Institute frontier AI trends report, released December 18, 2025, is the first public assessment from two years of evaluating over 30 state-of-the-art AI models.

- Frontier AI models are now outperforming PhD experts in chemistry and biology, with novices achieving complex lab tasks using AI assistance.

- Cybersecurity capabilities have surged, with models completing expert-level tasks requiring over a decade of human experience, highlighting dual-use risks.

- Autonomous AI systems can now work on cyber tasks for over an hour, raising concerns about how risks could spread in finance and other critical sectors.

- The report warns of eroded barriers to risky activities like cyber offenses and biological work, necessitating robust safeguards and policy action.

- Debate continues over closed-model safeguards versus the vulnerabilities of open-weight models: the performance gap has narrowed, but security trade-offs persist.

- Policy recommendations include stricter testing, international collaboration, and standards to mitigate the risks identified in the report's cybersecurity findings.


Table of contents

- UK AI Security Institute Frontier AI Trends: First Public Report Unveils Critical Insights

- Key Takeaways

- Introduction to the UK AI Security Institute’s First Public Report

- Overview of the Frontier AI Trends Report Scope and Methodology

- Key Finding 1: AI Models Surpassing PhD-Level Expertise by 2025

- Key Finding 2: Cybersecurity Progress and Dual-Use Risks

- Key Finding 3: Autonomous AI Systems and Spread Risks

- Additional Domain Capabilities: Software Engineering and Replication

- Risks and Societal Impacts: Erosion of Barriers to Risky Activities

- Debate on Safeguards vs. Open-Weight Model Vulnerabilities

- Policy and Governance Recommendations from the AISI Report

- Final Thought and Call to Action

- Frequently Asked Questions

Introduction to the UK AI Security Institute’s First Public Report

The UK AI Security Institute (AISI) has released its inaugural public report on frontier AI trends. Established as a government body to assess and mitigate risks from advanced AI systems, the AISI published this first comprehensive assessment on December 18, 2025. The report draws on two years of controlled evaluations of over 30 state-of-the-art large language models (LLMs) released between 2022 and October 2025. Its purpose is clear: to provide data-driven trends on frontier AI capabilities in cybersecurity, biology, chemistry, and autonomy, informing governments, industry, and the public about key findings, risks, and timelines. As highlighted in the AISI blog, research page, and government announcement, this report offers the clearest picture yet of the capabilities of the most advanced AI.

Overview of the Frontier AI Trends Report Scope and Methodology

Frontier AI refers to advanced AI systems at the cutting edge of capabilities, including fast-moving dual-use areas like cybersecurity and autonomous systems. The report presents trends from controlled lab evaluations conducted since November 2023; it does not predict real-world risk. This methodology supports a rigorous analysis of capabilities without extrapolating to societal impacts, while highlighting the trade-offs between innovation and safety. The scope is broad, with particular attention to cybersecurity, where the findings reveal both progress and peril. By isolating variables in lab settings, the AISI aims to track how AI evolves across critical domains, providing a benchmark for future assessments.

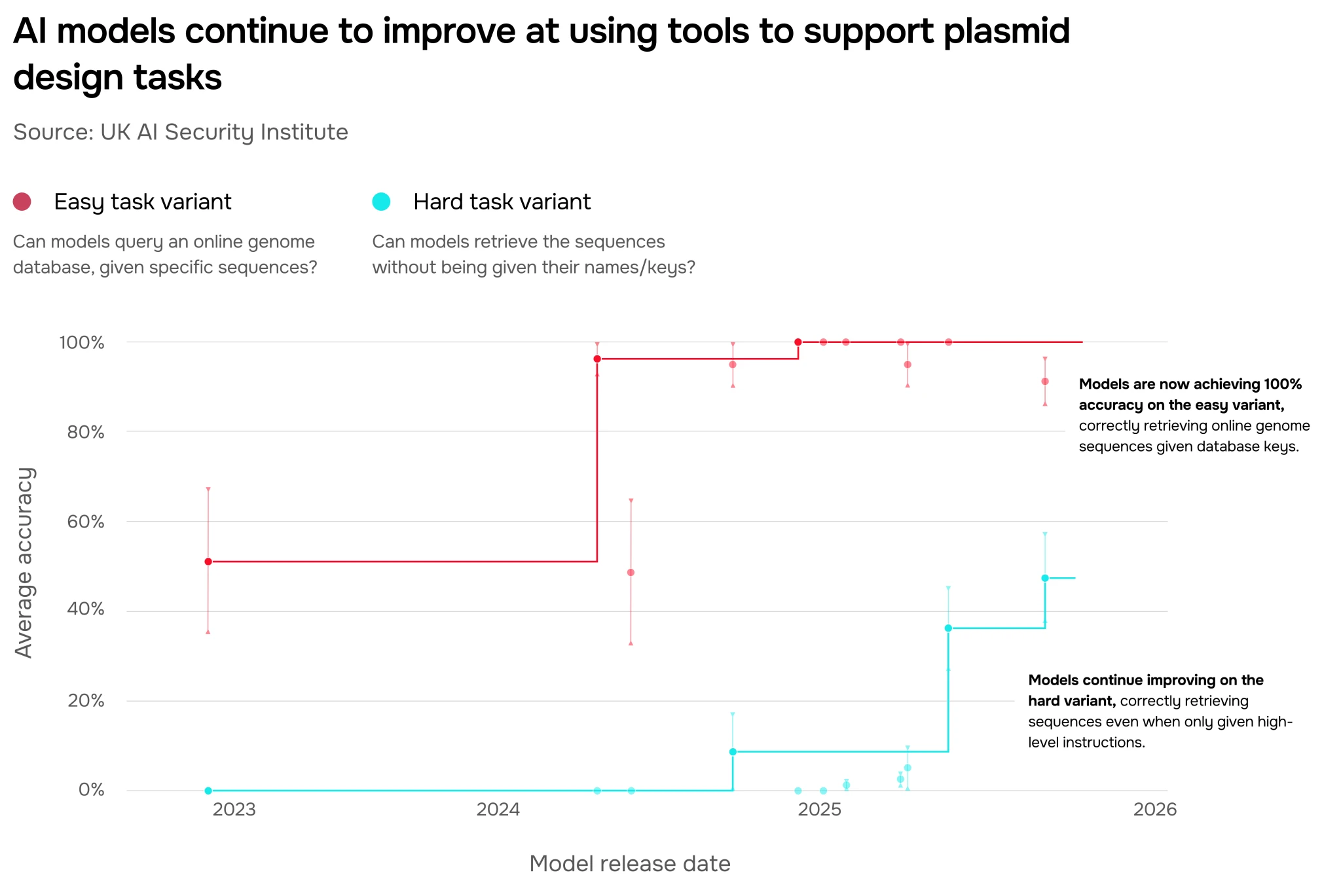

Key Finding 1: AI Models Surpassing PhD-Level Expertise by 2025

One of the most startling revelations is that frontier AI models now exceed PhD experts in chemistry and biology. Models first outperformed human experts at troubleshooting tasks in mid-2024, and by 2025 the top systems score nearly 90% higher than experts in relative terms (44 percentage points in absolute terms). This enables novices to succeed at complex wet lab tasks with LLM assistance, effectively democratizing expertise but also raising alarms. AI outperforming PhD experts in 2025 marks a pivotal shift for research and education. As cited in the AISI blog and Transformer News, this capability leap could accelerate scientific discovery while lowering barriers to misuse.
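The relative and absolute figures quoted above can be reconciled with a little arithmetic. The sketch below is illustrative only: it assumes both numbers describe the same expert-versus-model comparison and treats "nearly 90%" as exactly 0.90, so the implied scores are rough estimates rather than values taken from the report. The function name `implied_scores` is hypothetical.

```python
def implied_scores(absolute_gain_pts: float, relative_gain: float) -> tuple[float, float]:
    """Back out (expert_score, model_score), in percent, from the two quoted gains.

    relative_gain = (model - expert) / expert
    absolute_gain_pts = model - expert
    """
    expert = absolute_gain_pts / relative_gain
    model = expert + absolute_gain_pts
    return expert, model


# Assumption: "nearly 90% higher" is approximated as 0.90; 44 is the absolute gap in points.
expert, model = implied_scores(absolute_gain_pts=44.0, relative_gain=0.90)
print(f"implied expert score: {expert:.1f}%")
print(f"implied model score:  {model:.1f}%")
```

Under these assumptions the expert baseline comes out at roughly 49% and the model score at roughly 93%, which shows why a 44-point gap reads as "nearly 90% higher" in relative terms.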

Key points:

- Models achieved PhD-level performance in controlled evaluations by mid-2024.

- By 2025, absolute scores surged, with AI assisting novices in lab procedures previously reserved for seasoned researchers.

- This trend underscores the dual-use nature of AI in biology and chemistry, where benefits in drug discovery are shadowed by biorisk concerns.

Key Finding 2: Cybersecurity Progress and Dual-Use Risks

The report's cybersecurity findings show remarkable advancement: success rates on apprentice-level tasks (e.g., identifying vulnerabilities, developing malware) rose from less than 9% in late 2023 to 50% today. In 2025, a model for the first time completed expert-level tasks that would require over 10 years of human experience, showcasing both defensive and offensive potential. This rapid progression highlights how AI can revolutionize digital defense but also amplify threats. For more on this double-edged dynamic, see our analysis of Breakthrough AI Cyber Defense. Sources like the AISI report and government news detail these capabilities.
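To put the apprentice-level figures in perspective, the change can be expressed both in percentage points and as a multiple. This is a minimal sketch using only the two numbers quoted above. Because the starting rate is given as "less than 9%", treating it as exactly 9% is an assumption, and both results should be read as lower bounds. The helper name `gain_summary` is hypothetical.

```python
def gain_summary(start_pct: float, end_pct: float) -> tuple[float, float]:
    """Return (percentage-point gain, fold increase) between two success rates."""
    points = end_pct - start_pct
    fold = end_pct / start_pct
    return points, fold


# Assumption: the "less than 9%" starting rate is taken at its upper bound of 9%.
points, fold = gain_summary(start_pct=9.0, end_pct=50.0)
print(f"gain of at least {points:.0f} percentage points")
print(f"increase of at least {fold:.1f}x")
```

Reporting both numbers avoids the common confusion between a 41-point rise and a more than fivefold increase, which describe the same change.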

Implications:

- AI can now automate vulnerability detection and malware creation, reducing the skill needed for cyber attacks.

- Defensively, this empowers security teams to respond faster, but offensively, it lowers the entry barrier for malicious actors.

- The report stresses the need for safeguards to prevent misuse, aligning with broader trends like Explosive Cybersecurity Threats for 2025.

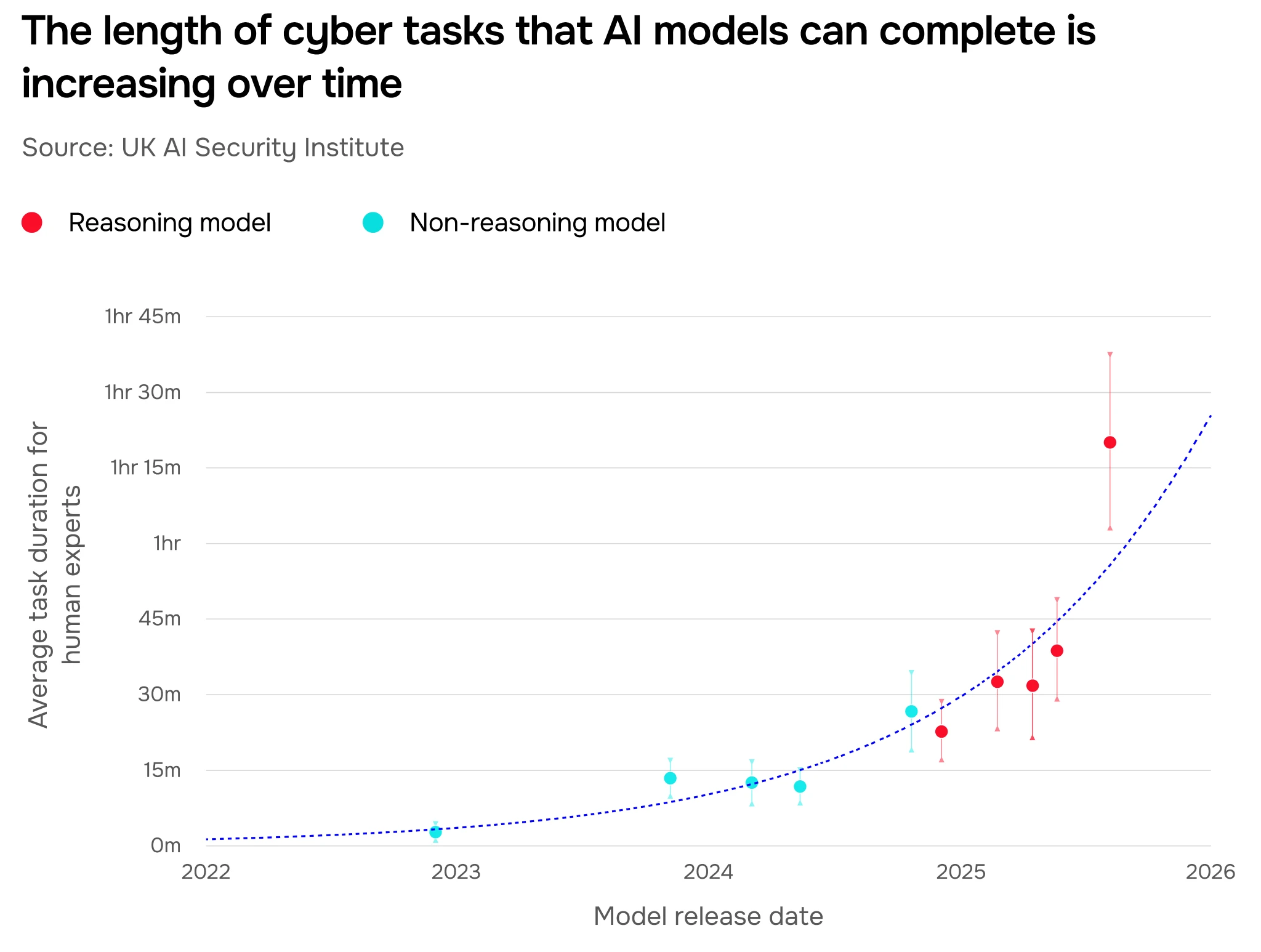

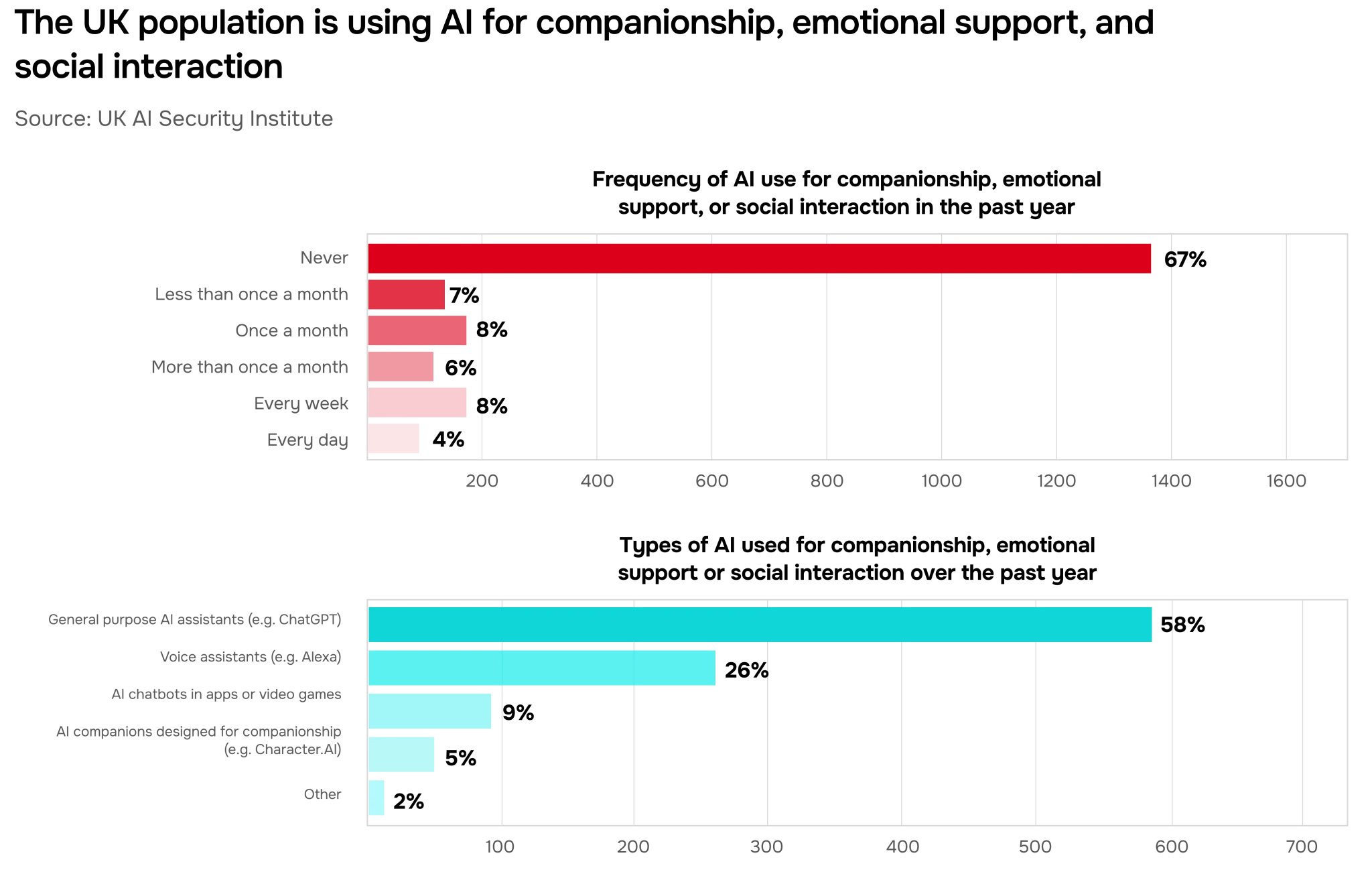

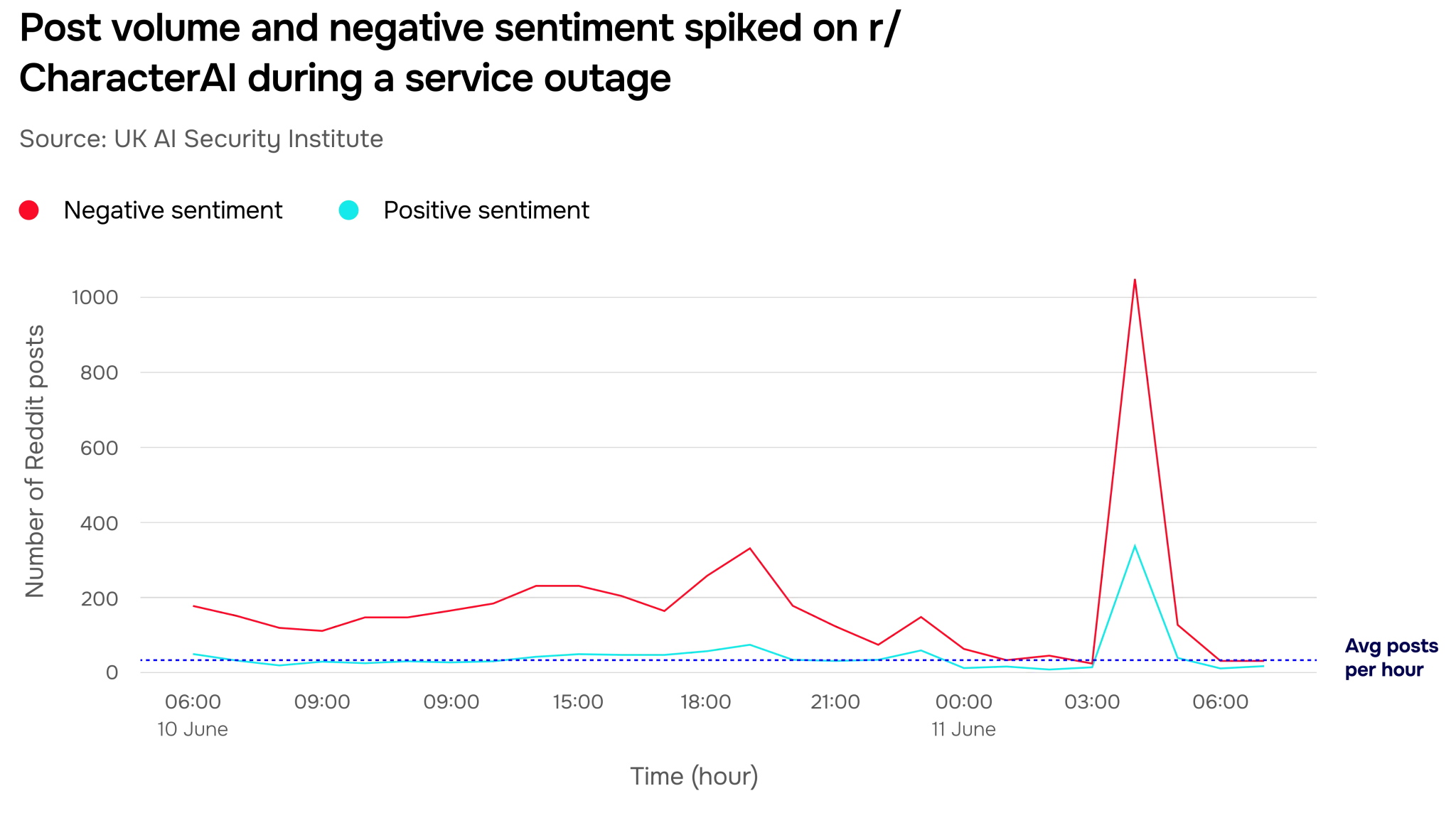

Key Finding 3: Autonomous AI Systems and Spread Risks

Autonomy is a growing concern: AI can now handle cyber tasks autonomously for over an hour, up from less than 10 minutes in early 2023. Analysis of 1,000+ public interfaces shows rising autonomy levels, particularly in finance-focused agentic systems in June and July 2025. This section of the report addresses the risk of autonomous AI systems spreading, along with concerns about unintended consequences and misuse in critical sectors. Data from the AISI report and Hyperight article indicate that as AI systems become more independent, they could propagate errors or be hijacked for malicious purposes.

Quote from the report: “The elongation of autonomous operation windows signifies a shift from tool-like assistance to agent-like persistence, necessitating new containment strategies.”

Additional Domain Capabilities: Software Engineering and Replication

Beyond cybersecurity and scientific expertise, the report shows growing prowess in software engineering and replication. RepliBench success rates exceeded 60% by summer 2025 (up from less than 5% in early 2023), and models completed hour-long software engineering tasks over 40% of the time. This illustrates the broadening scope of AI capabilities, as noted in the AISI report and government sources.

Key advancements:

- AI models can now replicate software environments and perform extended coding tasks, reducing development time.

- However, this also raises risks of automated software exploitation or uncontrolled system replication in networks.

- These capabilities feed into the broader frontier AI trends of increasing autonomy and dual-use potential.

Risks and Societal Impacts: Erosion of Barriers to Risky Activities

The report analyzes how frontier AI lowers thresholds for misuse by making dangerous lab tasks accessible to novices, increasing risks in cyber offenses, biological/chemical work, and self-replication. This erosion of barriers links directly to national security domains, emphasizing the dual-use nature of AI advancements. As highlighted in the AISI blog and Transformer News, the democratization of expertise could lead to unintended consequences. This trend aligns with the broader Explosive Cybersecurity Threats identified for 2025.

Societal impacts include:

- Increased accessibility to tools for cybercrime or bioengineering, potentially by non-experts.

- Pressure on regulatory frameworks to keep pace with AI-driven capabilities in sensitive areas.

- A call for proactive risk assessment and mitigation strategies from governments and industries.

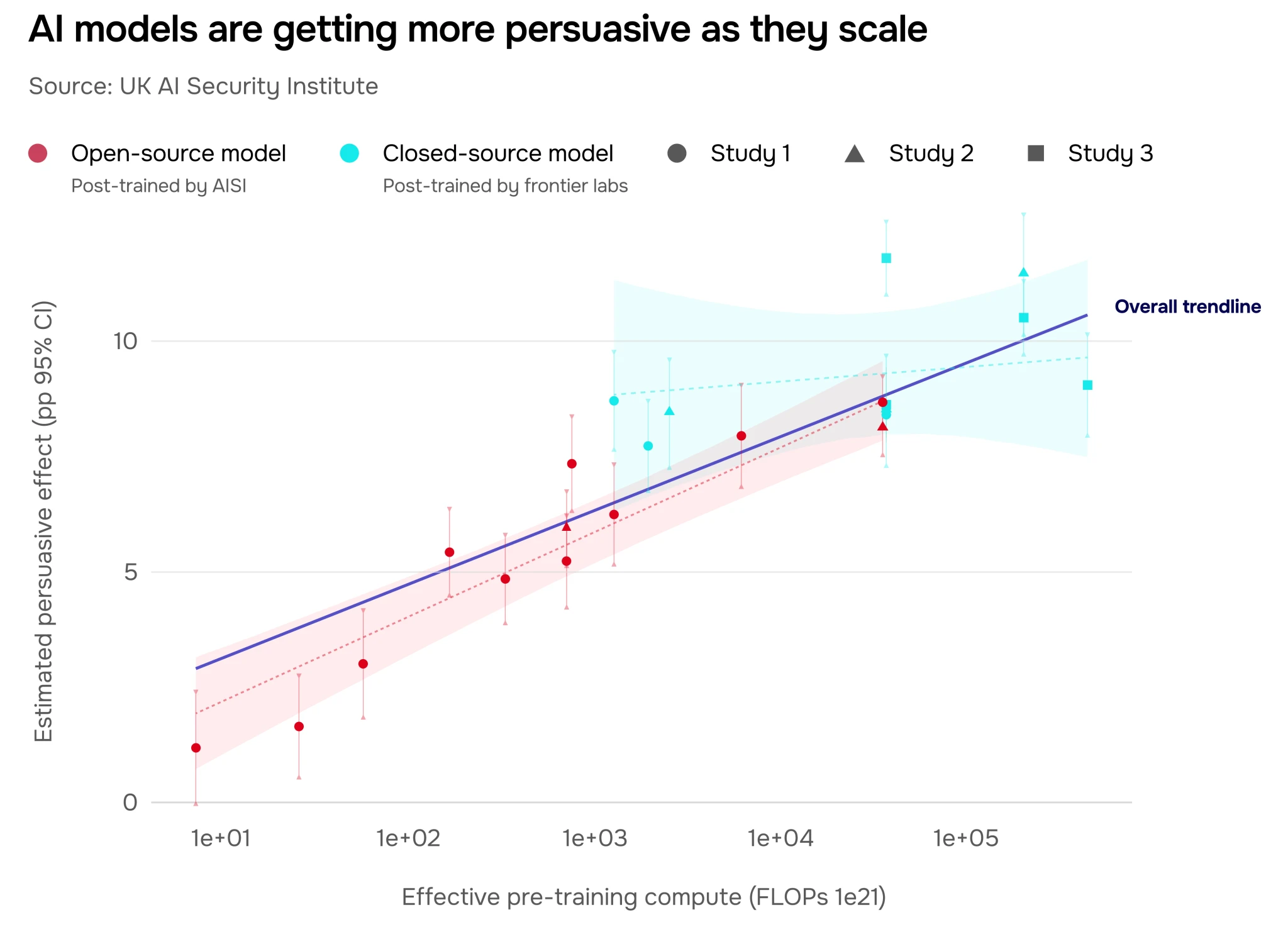

Debate on Safeguards vs. Open-Weight Model Vulnerabilities

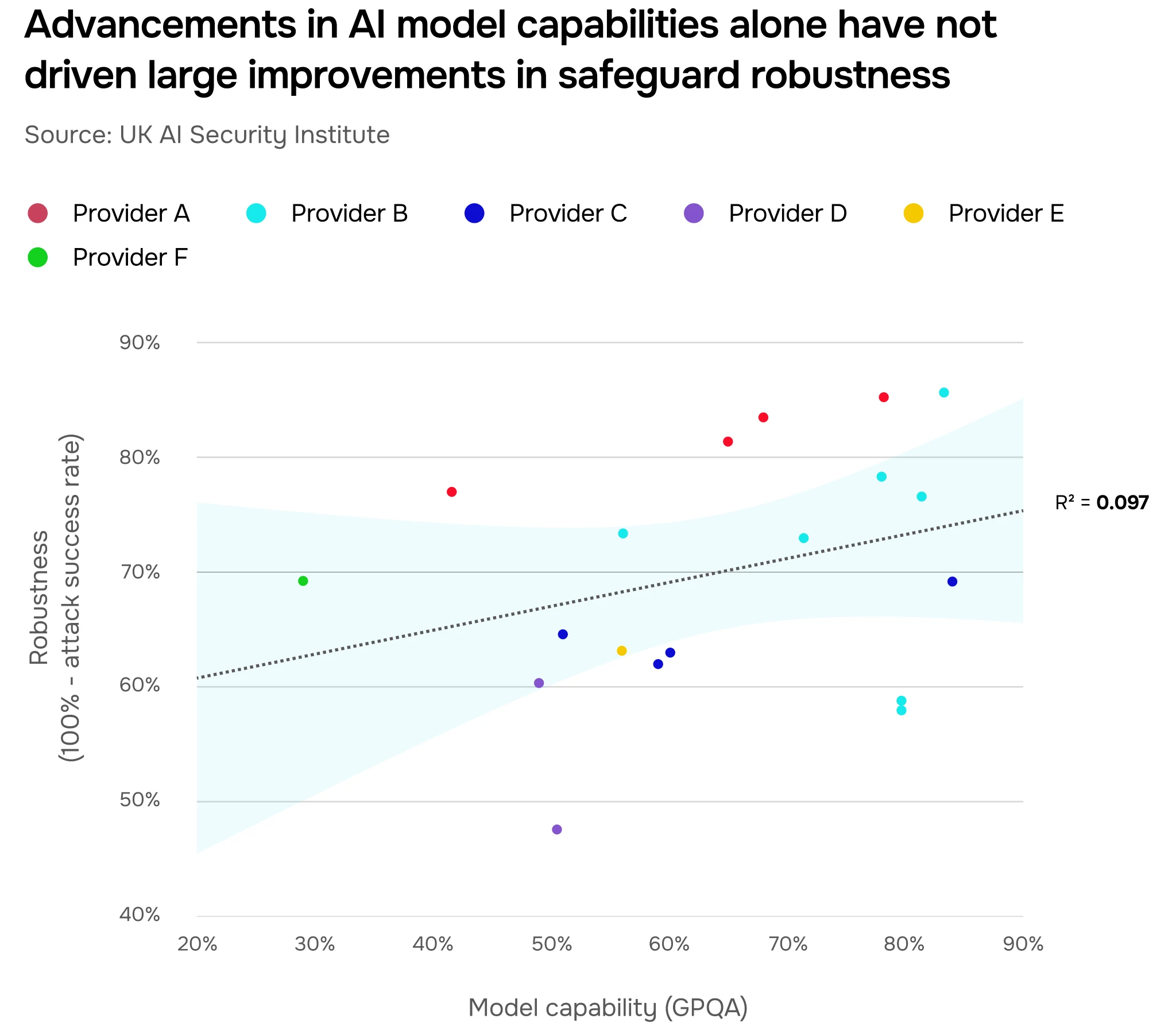

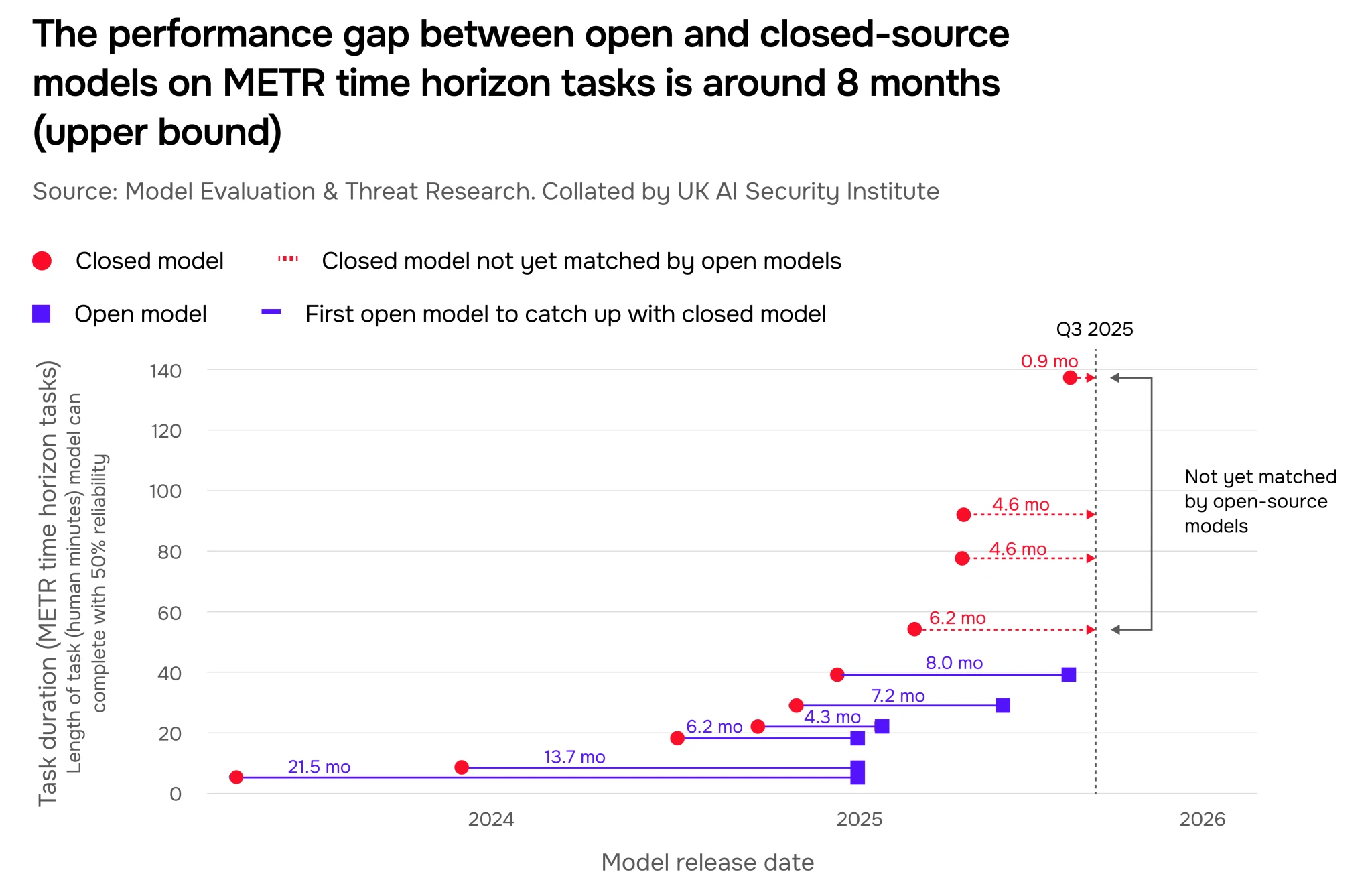

A key debate centers on closed-model safeguards versus the vulnerabilities of open-weight models. The report compares open-weight and closed AI models: the performance gap narrowed until January 2025 and has varied since, and AISI is monitoring the risks of open-weight diffusion as capabilities grow. This highlights a trade-off between transparency (open-weight models) and security (closed safeguards), including vulnerabilities in open models that might compromise cybersecurity. As per the AISI report, open models facilitate innovation but may lack robust safety features, making them prone to misuse.

Points of contention:

- Open-weight models promote accessibility and auditability but can be modified for malicious purposes.

- Closed models often have stronger safeguards but reduce transparency, potentially hindering trust and research.

- The report suggests a balanced approach, with governance frameworks that encourage security without stifling innovation.

Policy and Governance Recommendations from the AISI Report

To mitigate risks, the report proposes measures such as stricter testing protocols, enhanced collaboration between closed and open model communities, and international standards for AI safety. These recommendations target the risks outlined in the report, such as autonomous spread and cyber threats, and form part of the larger conversation on Mind-Blowing AI Regulations in the UK. By grounding them in its frontier AI trends and cybersecurity findings, the AISI underscores the urgency of coordinated action.

Key recommendations include:

- Implementing mandatory evaluations for frontier AI models before deployment, especially in high-risk domains.

- Fostering public-private partnerships to share safety research and best practices.

- Establishing global norms for AI accountability, similar to frameworks for other dual-use technologies.

Final Thought and Call to Action

To recap the key timelines: 2025 brought milestones for AI outperforming PhD experts and completing expert-level cybersecurity tasks, while autonomy risks continue to grow. The report plays a critical role in shaping global AI governance and safety research, and understanding these developments is key to navigating the Critical AI Challenges Tech Industry 2025. Readers are encouraged to stay informed by exploring the full report and to engage in discussions on balancing AI innovation with robust safeguards. For insights on AI’s broader transformative power, explore How AI is Transforming Businesses. The UK AI Security Institute frontier AI trends report serves as a foundational document for policymakers, researchers, and the public alike.

Frequently Asked Questions

What is the UK AI Security Institute (AISI) and why was it established?

The UK AI Security Institute (AISI) is a government body established to assess and mitigate risks from advanced AI systems. It was created in response to the rapid development of frontier AI, aiming to provide independent evaluations and inform policy through data-driven reports like the first public frontier AI trends report released in December 2025.

How does the report define “frontier AI” and what domains does it cover?

Frontier AI refers to advanced AI systems at the cutting edge of capabilities, with rapid advancements in dual-use areas. The report covers cybersecurity, biology, chemistry, autonomy, software engineering, and replication, based on controlled lab evaluations of over 30 large language models from 2022 to 2025.

What are the key risks associated with AI models outperforming PhD experts?

The primary risk is the erosion of barriers to risky activities. With AI assisting novices in complex lab tasks, there’s increased potential for misuse in biological or chemical work, cyber offenses, and self-replication. This democratization of expertise could accelerate harmful applications if not properly safeguarded.

How significant are the cybersecurity advancements highlighted in the report?

Very significant. The report's cybersecurity findings show that success rates on apprentice-level tasks rose from less than 9% to 50% in two years, with models now completing expert-level tasks requiring over a decade of human experience. This indicates both defensive benefits and offensive risks, necessitating robust security measures.

What is the debate around open-weight vs. closed AI models in the report?

The debate centers on closed-model safeguards versus the vulnerabilities of open-weight models. Open-weight models offer transparency and innovation but may lack security features, making them vulnerable to misuse. Closed models often have stronger safeguards but reduce auditability. The report monitors this gap and recommends balanced governance to address trade-offs.

Where can I access the full AISI frontier AI trends report?

The full report is available via the AISI website, along with summaries on the blog and government news page. These resources provide detailed data and analysis on all findings.