Understanding New AI Regulations: A Guide for Businesses Navigating Global Rules and the Impact of the EU AI Act

Estimated reading time: 9 minutes

Key Takeaways

- Understanding new AI regulations is crucial for compliance, trust, and ethical practices in today’s AI-driven business landscape.

- The push for AI regulation is driven by critical concerns including safety, bias, privacy, accountability, and transparency.

- The EU AI Act is a landmark, *risk-based* regulation classifying AI systems and imposing stringent requirements, particularly on ‘high-risk’ applications.

- Addressing the impact of the EU AI Act on business requires identifying systems, conducting risk assessments, implementing compliance frameworks, and updating internal processes.

- Navigating global AI rules is complex due to differing approaches in regions like the US (sector-specific), UK (principles-based), and others worldwide.

- Proactive steps for compliance include mapping systems, risk assessments, establishing governance, strengthening data security, and *training staff*.

- Staying informed about the latest updates on AI governance is vital as the regulatory landscape is rapidly evolving.

Table of contents

- Understanding New AI Regulations: A Guide for Businesses Navigating Global Rules and the Impact of the EU AI Act

- Key Takeaways

- The Urgent Need for New AI Regulations

- A Major Landmark: The EU AI Act and Its Business Impact

- Beyond Europe: Navigating Global AI Rules

- Practical Steps for AI Compliance: A Business Guide

- Staying Ahead: Accessing the Latest AI Governance Updates

- Conclusion

- Frequently Asked Questions

Artificial Intelligence (AI) is no longer a futuristic concept; it’s a fundamental tool woven into the fabric of modern business operations. From enhancing customer service with chatbots to optimizing supply chains and enabling advanced diagnostics, AI is now pervasive across business functions. As organizations increasingly rely on AI for critical tasks and decision-making, the need for robust governance has become paramount. Understanding new AI regulations is therefore critical, not just for maintaining compliance but also for building public trust and ensuring ethical practices.

Navigating this terrain means dealing with a diverse and rapidly evolving set of rules across different jurisdictions. Expertise in navigating global AI rules is no longer optional; it’s a strategic necessity for businesses operating internationally or planning to use AI at scale. This blog post is a comprehensive overview and practical guide, designed to help you understand the core drivers behind these new regulations and what they mean for your business.

The Urgent Need for New AI Regulations

The global push for AI regulation isn’t happening in a vacuum. It’s a direct response to the unique capabilities and potential risks associated with AI technologies. While offering immense benefits, AI systems also present novel challenges that traditional laws weren’t designed to address. The core concerns driving this regulatory wave are:

- *Safety*: Ensuring AI systems do not cause unintended physical or psychological harm.

- *Bias*: Preventing AI from perpetuating or amplifying existing societal prejudices, leading to discriminatory outcomes.

- *Privacy*: Protecting sensitive personal data processed by AI systems, often on a massive scale.

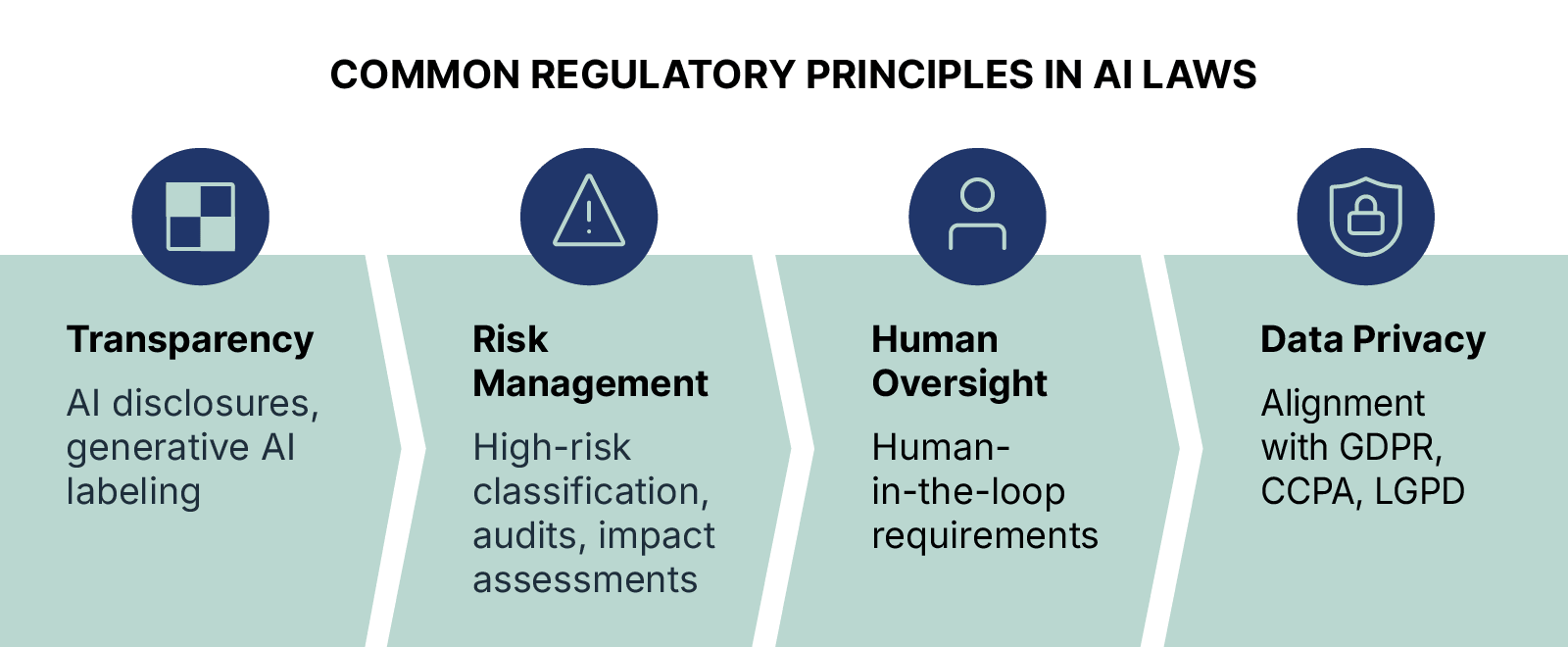

- *Accountability* (determining responsibility when AI makes errors), and *Transparency* (understanding how AI systems make decisions): Establishing clear lines of responsibility when AI systems fail and making their operations understandable to humans.

These concerns explain why AI systems require oversight: they can amplify errors or prejudices, cause unintended harm, and process sensitive data at scale. Unlike traditional rule-based software, AI systems that learn from data can behave unpredictably, which makes rigorous testing and ongoing oversight essential.

The complexity of navigating global AI rules is amplified by the significant variations in regulatory priorities and enforcement approaches across different regions. For example, some countries might prioritize fostering innovation with lighter regulations, while others focus heavily on mitigating risks and ensuring fundamental rights protection. This means businesses must prepare for a diverse set of obligations depending on where they operate, develop, or deploy AI systems.

This variation necessitates a detailed understanding of jurisdictional differences and a flexible compliance strategy. What is permissible in one market might be strictly regulated or even prohibited in another.


A Major Landmark: The EU AI Act and Its Business Impact

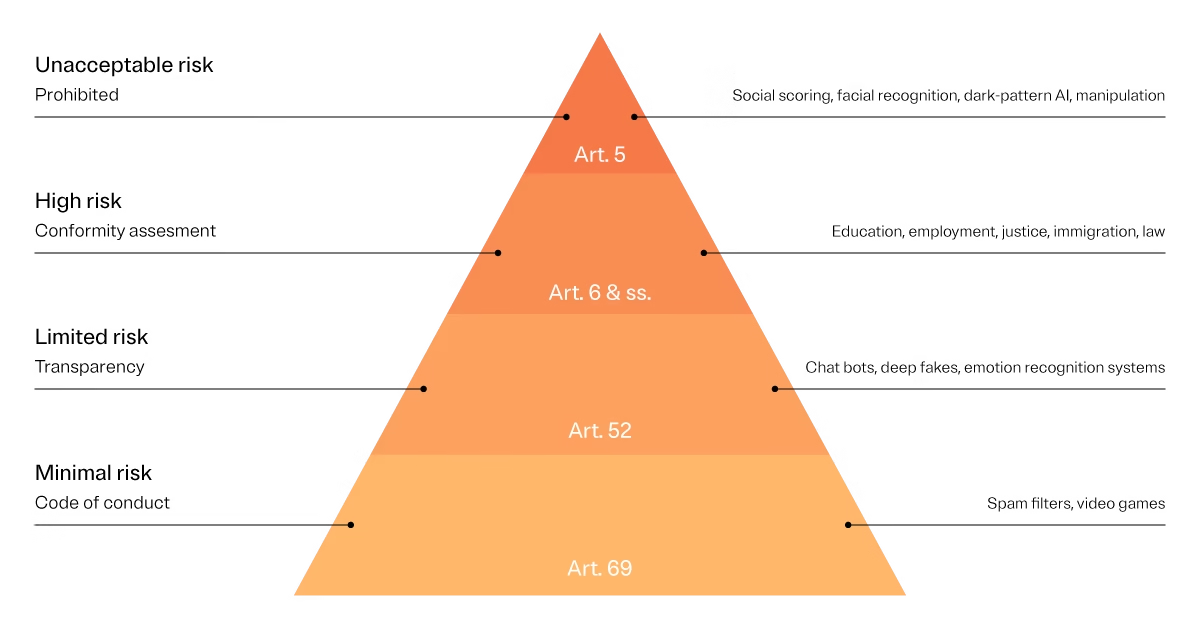

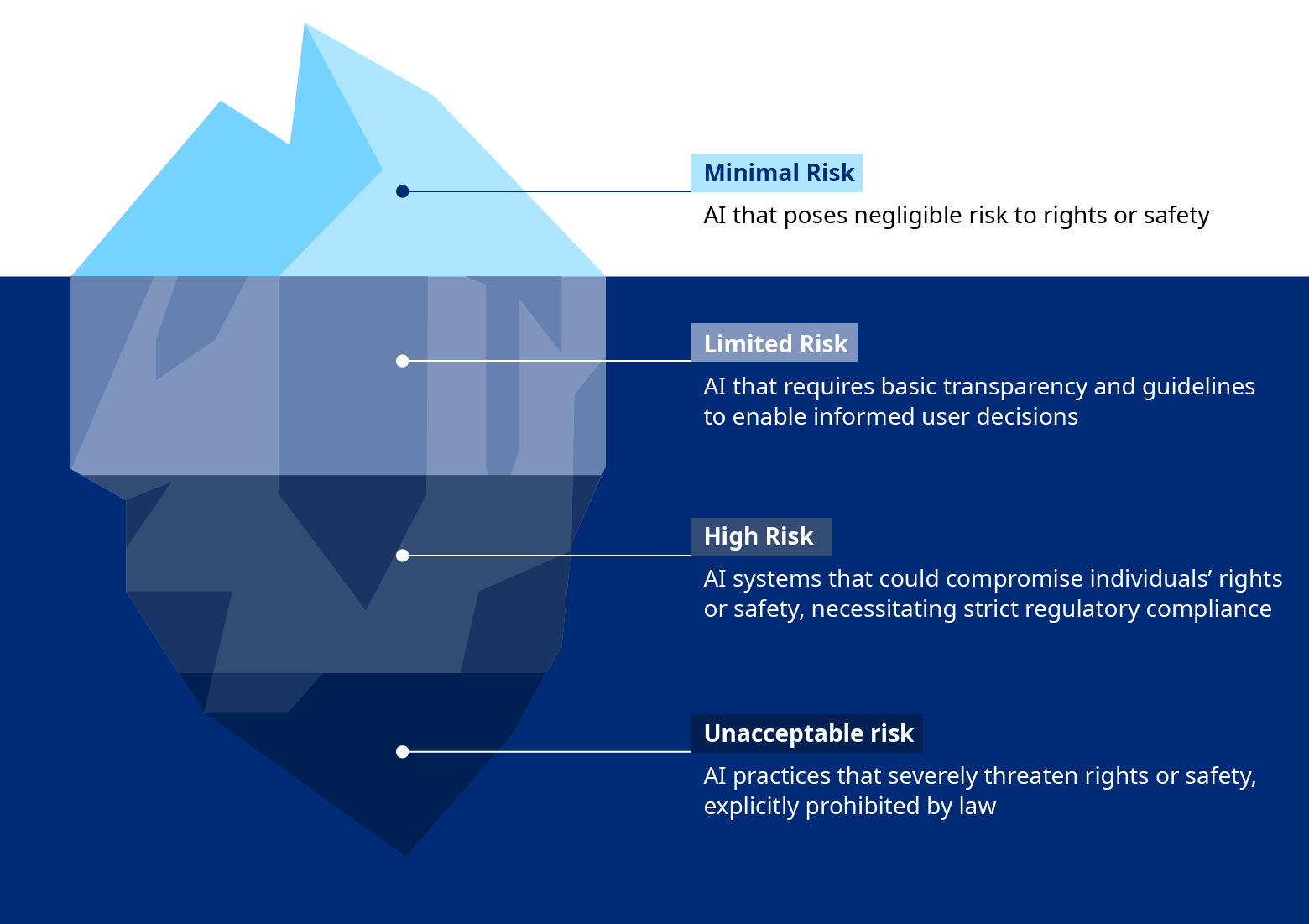

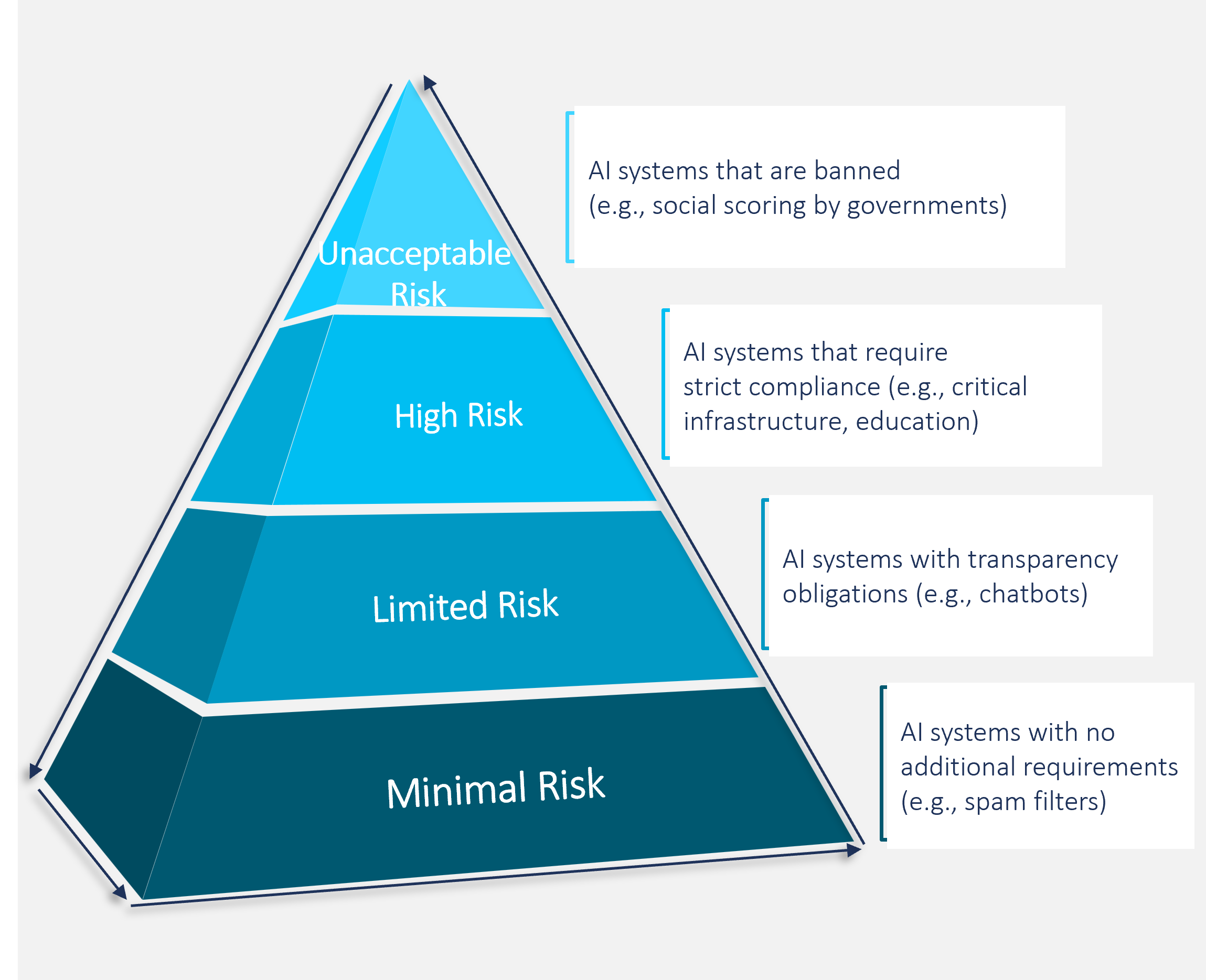

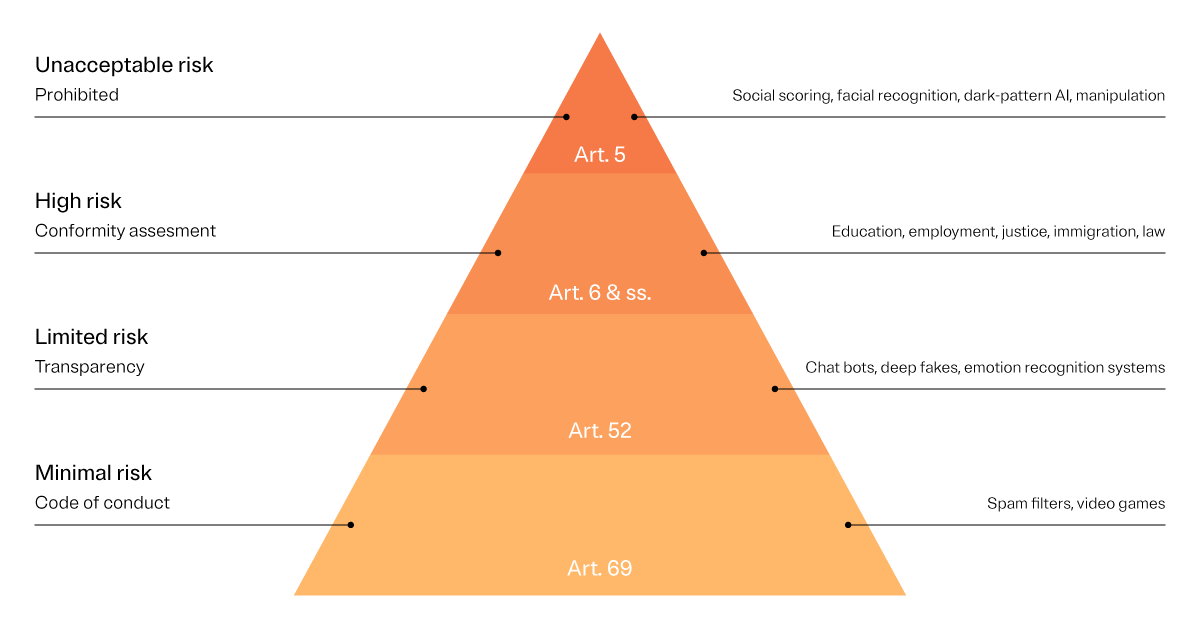

The European Union AI Act stands out as a groundbreaking piece of legislation. It is widely regarded as the world’s first comprehensive legal framework specifically designed to address the risks of Artificial Intelligence. At its heart, the Act adopts a risk-based approach, classifying AI systems into four categories based on their potential to cause harm:

- Prohibited AI: These are AI systems posing an “unacceptable risk” to fundamental rights. Examples include manipulative techniques designed to distort behaviour, social scoring systems, and systems exploiting the vulnerabilities of specific groups (like children or persons with disabilities). These applications are banned outright; the ban applies from February 2025, alongside AI literacy obligations.

- High-risk AI: This category carries the most significant compliance burden. It includes AI systems used in areas such as biometrics, critical infrastructure, education and vocational training, employment and worker management, access to essential public and private services (such as credit scoring), law enforcement, migration and asylum management, and the administration of justice and democratic processes. For these systems, stringent requirements apply:

  - Mandatory conformity assessments before market entry.

  - Robust risk management systems throughout the AI lifecycle.

  - High-quality data requirements to minimize bias.

  - Detailed documentation and record-keeping.

  - Transparency and information provision to users.

  - Human oversight measures.

  - High levels of cybersecurity resilience.

  These high-risk obligations phase in between August 2026 and August 2027.

- Limited Risk & Minimal Risk AI: Most AI systems (like chatbots or spam filters) fall into these categories. They face much lighter requirements, primarily focused on transparency (e.g., notifying users they are interacting with AI) or voluntary codes of conduct.
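To make the tiered structure concrete, here is a minimal Python sketch of a first-pass triage an internal team might run over its AI inventory. The use-case labels (`employment`, `chatbot`, and so on) and the function name `triage` are hypothetical; real classification depends on the Act’s legal definitions and annexes, so treat this as a screening aid under those assumptions, not a legal determination.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers of the EU AI Act's risk-based approach."""
    PROHIBITED = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Hypothetical use-case labels for illustration; real classification depends on the Act's legal text.
PROHIBITED_PRACTICES = {"manipulative_techniques", "social_scoring", "exploiting_vulnerabilities"}
HIGH_RISK_AREAS = {
    "critical_infrastructure", "education", "employment", "law_enforcement",
    "migration", "administration_of_justice", "democratic_processes",
}
TRANSPARENCY_ONLY = {"chatbot"}  # limited risk: users must be told they are interacting with AI


def triage(use_case: str) -> RiskTier:
    """Return a first-pass tier, checking the most severe tier first."""
    if use_case in PROHIBITED_PRACTICES:
        return RiskTier.PROHIBITED
    if use_case in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case in TRANSPARENCY_ONLY:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL  # everything else, e.g. spam filters


for case in ["employment", "chatbot", "spam_filter", "social_scoring"]:
    print(f"{case}: {triage(case).value}")
```

The checks run from the most severe tier downward, so a system matching several lists is reported at its highest risk level. Unrecognised use cases fall through to minimal risk here, which is a simplifying assumption; in practice, flagging them for manual review is the safer default.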

The impact of the EU AI Act on business is substantial, especially for companies operating within or targeting the EU market. Practical steps companies must take include:

- Identifying precisely which of their AI systems fall under the Act’s scope, paying particular attention to potential high-risk categories.

- Conducting thorough risk assessments for identified systems to determine their classification and associated obligations.

- Implementing comprehensive compliance frameworks, including technical safeguards, process adjustments, and governance structures to meet the specific requirements for each system category.

Potential operational changes are significant. Companies may need to update internal processes for AI development and deployment, refine documentation practices (technical documentation, activity logs), retrain staff on new procedures and requirements, and enhance existing data privacy (aligning with GDPR) and cybersecurity protections to meet the Act’s demanding standards.
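One way to operationalise such a compliance framework is to track each high-risk system against the seven high-risk requirements listed earlier. The sketch below assumes hypothetical requirement keys and a simple evidence record mapping each requirement to whether evidence exists; it only reports gaps and does not judge whether the evidence is adequate.

```python
# Short keys for the seven high-risk requirements listed earlier (labels are hypothetical).
HIGH_RISK_REQUIREMENTS = (
    "conformity_assessment",
    "risk_management",
    "data_quality",
    "documentation_and_records",
    "transparency_to_users",
    "human_oversight",
    "cybersecurity",
)


def compliance_gaps(evidence: dict[str, bool]) -> list[str]:
    """Return requirements with no recorded evidence, in checklist order.

    A requirement missing from `evidence` is treated as a gap, so an
    unrecorded item can never silently pass.
    """
    return [req for req in HIGH_RISK_REQUIREMENTS if not evidence.get(req, False)]


# Example: evidence gathered so far for one hypothetical high-risk system
status = {"risk_management": True, "documentation_and_records": True, "human_oversight": False}
print(compliance_gaps(status))
```

Treating missing entries as gaps is a deliberate design choice: the checklist fails closed, which suits an internal audit where an unrecorded control should prompt follow-up rather than be assumed to be in place.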

The consequences of non-compliance are severe. For the most serious violations (use of prohibited AI practices), fines can reach €35 million or 7% of a company’s global annual turnover, whichever is higher; other violations carry lower caps. Non-compliance can also lead to restricted market access within the EU [Source: Baker McKenzie]. This makes proactive compliance a business imperative.
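The percentage-based cap is easier to grasp with numbers. Under the Act, fines for prohibited-practice violations are capped at €35 million or 7% of worldwide annual turnover, whichever is higher, while for SMEs and start-ups the lower of the two applies; other violation types carry lower caps. The function below is a hypothetical helper that computes only this upper bound, not the fine a regulator would actually impose.

```python
def max_fine_prohibited(global_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound on an EU AI Act fine for a prohibited-practice violation.

    The cap is EUR 35 million or 7% of worldwide annual turnover: the higher
    of the two for most companies, the lower of the two for SMEs and start-ups.
    """
    fixed_cap = 35_000_000
    turnover_cap = global_turnover_eur * 7 / 100  # 7% of turnover
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_prohibited(2_000_000_000))             # large firm: 7% of EUR 2bn is higher
print(max_fine_prohibited(100_000_000))               # fixed EUR 35m cap is higher
print(max_fine_prohibited(100_000_000, is_sme=True))  # SME: the lower figure applies
```

For a company with €2 billion in turnover, the percentage-based cap (€140 million) exceeds the fixed €35 million figure, which is why large firms face the greatest exposure.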

Key timeline milestones for the EU AI Act are critical to track:

- August 2024: The Act enters into force (on 1 August), beginning a transition period of roughly two years before most provisions apply [Source: Alexander Thamm].

- February 2025: The ban on unacceptable-risk AI systems becomes enforceable, along with obligations related to AI literacy.

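- August 2026 to August 2027: Requirements for high-risk AI systems become enforceable in stages, most from August 2026 and those for AI embedded in products already covered by EU product-safety legislation from August 2027, giving businesses time to adapt their systems and processes [Source: Alexander Thamm].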
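A compliance team might encode these milestones so it can check which obligations already apply on a given date. In this minimal sketch, the day-level dates and the split of high-risk requirements between August 2026 and August 2027 (the later date for AI in products covered by EU product-safety legislation) are added for illustration and should be checked against the official text; `milestones_in_effect` and `next_milestone` are hypothetical helpers.

```python
from datetime import date
from typing import Optional

# EU AI Act milestones, in chronological order; verify day-level dates against the official text.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force; transition period begins"),
    (date(2025, 2, 2), "Ban on unacceptable-risk AI and AI literacy obligations apply"),
    (date(2026, 8, 2), "Most high-risk AI requirements apply"),
    (date(2027, 8, 2), "Remaining high-risk requirements apply (AI in products under EU product-safety law)"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the milestones whose start date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """Return the first milestone strictly after `on`, or None if all have passed."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return upcoming[0] if upcoming else None


today = date(2025, 6, 1)
print(milestones_in_effect(today))
print(next_milestone(today))
```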

Understanding new AI regulations, especially the detailed provisions and timeline of the EU AI Act, is foundational for businesses looking to ensure compliance and manage risks effectively in the European market. This requires a dedicated effort to assess and adapt existing AI capabilities.

Beyond Europe: Navigating Global AI Rules

While the EU AI Act is a leading example, it’s important to recognize that AI regulation is a global trend. Countries and regions worldwide are grappling with similar questions about how to govern AI safely and ethically, leading to a variety of approaches. For international businesses, navigating global AI rules presents a complex and ongoing challenge.

Let’s look at approaches taken by other major economies:

- United States: The US currently favors a more sector-specific approach. Regulations and guidelines are emerging concerning AI use in specific areas like healthcare, financial services, and housing, often building upon existing regulatory structures. While there are active discussions at the federal level about the potential for broader AI laws or executive actions, the approach is currently less centralized than the EU’s comprehensive Act.

- United Kingdom: The UK has proposed an approach favoring a pro-innovation, principles-based regulatory regime. Rather than a single comprehensive law covering all AI, the focus is on empowering existing regulators (like those for competition, data protection, and human rights) to issue sector-level guidance based on cross-cutting principles such as safety, transparency, and fairness. This aims to be adaptable and support innovation.

- Canada, Asia-Pacific, and other regions: Numerous other countries are also developing their own guidelines, frameworks, or legislative bills focused on responsible AI development and deployment. Initiatives range from ethical guidelines and voluntary standards to mandatory requirements for certain AI applications. The pace and scope of these developments vary significantly.

Each jurisdiction’s laws may differ considerably in their scope (which types of AI they cover), the specific requirements they impose, and their enforcement mechanisms and penalties. For companies operating internationally or offering AI services across borders, this makes navigating global AI rules an ongoing effort rather than a one-time exercise.

This necessitates a diligent effort for international firms to carefully map their obligations based on where their AI systems are developed, deployed, used, or where their outputs have an effect on individuals or markets. It also requires close monitoring of ongoing legal and policy developments in key markets, as frameworks are frequently updated or new legislation is introduced. Staying on top of the latest updates on AI governance globally is paramount.


Practical Steps for AI Compliance: A Business Guide

Given the rapidly evolving and complex nature of AI regulation, businesses cannot afford to wait for full clarity or enforcement actions. Proactive compliance is key to mitigating legal and reputational risks, maintaining market access, and building trust with customers and regulators. This section provides a practical guide to AI compliance laws for businesses looking to address the regulatory landscape head-on [Source: PenBrief].

Here are actionable steps companies should take:

- Identify Applicable Jurisdictions: The first step is to pinpoint all countries or regions where your AI is developed, deployed, used, or where its outputs have an effect on individuals or markets. Each location may have its own set of rules or principles governing AI use.

- Map AI Systems to Regulations: Create a comprehensive inventory of all AI systems your company uses or develops, then determine which of them fall under emerging or existing regulations in the identified jurisdictions. This requires understanding how different laws define an AI system and what their scope covers.

- Conduct Detailed Risk Assessments: This is a crucial step, especially under frameworks like the EU AI Act. Conduct thorough assessments for relevant AI systems to classify them (e.g., identifying “high-risk” systems [Source: Baker McKenzie]) and accurately determine the specific compliance obligations for each system based on its classification and use case.

- Establish Robust Governance Frameworks: Implement internal structures and processes to manage AI risks and compliance. This involves appointing a designated AI compliance lead or team, setting up clear internal policies and procedures for AI development, deployment, and monitoring, and creating internal audit systems to monitor compliance effectiveness.

- Ensure Strong Data Privacy and Cybersecurity: Many AI regulations intersect significantly with data protection and cybersecurity laws. Ensure that strong data protection principles (like data minimization, purpose limitation, and security measures) and robust cybersecurity practices are integrated throughout the entire AI lifecycle, from data collection and model training to deployment and ongoing monitoring.

- Train Staff: Educate employees involved in AI development, deployment, management, and oversight about relevant regulatory requirements, the company’s internal AI policies, ethical considerations, and internal reporting obligations for potential compliance issues. Competent and aware staff are fundamental to effective compliance.
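The first two steps, identifying jurisdictions and mapping systems to regulations, amount to maintaining a structured inventory. The sketch below assumes a hypothetical `AISystem` record and a hand-written `REGIMES` table summarising the approaches discussed in this article; it is an illustrative structure, not a legal mapping, and any jurisdiction missing from the table is flagged for manual review rather than assumed to be unregulated.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    """One entry in a hypothetical AI system inventory."""
    name: str
    use_case: str
    # Where the system is developed, deployed, used, or affects people
    jurisdictions: set[str] = field(default_factory=set)


# Illustrative one-line summaries of the regimes discussed in this article (hypothetical wording).
REGIMES = {
    "EU": "EU AI Act: classify risk tier and meet tier obligations",
    "US": "US: check sector-specific rules",
    "UK": "UK: follow sector regulators' principles-based guidance",
}


def map_obligations(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Map each system name to the regimes of every jurisdiction it touches."""
    return {
        system.name: [
            REGIMES.get(j, f"{j}: review local rules")  # unknown jurisdiction -> manual review
            for j in sorted(system.jurisdictions)
        ]
        for system in inventory
    }


inventory = [
    AISystem("resume-screener", "employment", {"EU", "UK"}),
    AISystem("support-bot", "chatbot", {"US", "CA"}),
]
for name, obligations in map_obligations(inventory).items():
    print(name, obligations)
```

Sorting the jurisdictions keeps the output stable between runs, which makes the mapping easier to diff as the inventory or the regimes table changes over time.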

These steps form the foundation of a proactive approach to understanding new AI regulations and building a sustainable compliance program. While the EU AI Act’s impact on business may require specific focus on high-risk systems and conformity assessments, the general principles outlined here apply when navigating global AI rules more broadly.


Following this guide to AI compliance laws is not a one-time task but an ongoing process requiring continuous effort and adaptation.

Staying Ahead: Accessing the Latest AI Governance Updates

It’s critical to understand that the AI regulatory landscape is not static. It is a domain characterized by rapid change, driven by technological advancements, evolving societal expectations, and ongoing policy discussions worldwide. Therefore, staying informed about the latest updates on AI governance is absolutely essential for maintaining compliance, effectively managing emerging risks, and adapting business strategy to this dynamic environment.

Relying on outdated information or failing to track new developments can lead to significant compliance gaps, potentially resulting in penalties, legal challenges, and reputational damage. Proactive monitoring is key to anticipating changes and integrating new requirements into your compliance frameworks.

Reliable sources for monitoring these changes include:

- Official websites of government bodies and regulatory authorities: Direct sources such as national data protection agencies, ministries overseeing technology or economy, and specialized AI task forces or commissions provide the most authoritative information on proposed and enacted legislation, guidelines, and enforcement actions.

- Publications and analyses from reputable law firms or consultancies: Firms specializing in technology law, data protection, and AI regulation often publish timely analyses, summaries, and practical guidance on new regulations, interpreting their implications for businesses. These sources can be invaluable for understanding complex legal texts and tracking key dates and requirements [Source: Baker McKenzie].

- Industry associations: Many industry bodies track policy developments relevant to their specific sector (e.g., healthcare AI, financial services AI). Membership in such associations can provide access to tailored updates and peer insights.

- Reputable news outlets and academic institutions: Specialized technology news sites, policy-focused publications, and academic research institutions often provide insightful analysis and reporting on AI governance trends and proposals.

Businesses should dedicate resources, whether internal personnel or external legal counsel, to continuous monitoring and analysis of the regulatory landscape relevant to their operations and the markets they serve. This vigilance is a critical component of effective AI governance and risk management, and it is crucial both for understanding new AI regulations as they emerge and for navigating global AI rules that are constantly evolving.


Conclusion

Understanding new AI regulations is not merely a compliance checklist item; it is a fundamental requirement for businesses aiming for responsible innovation, building sustainable trust with stakeholders, and ensuring continued successful operation in the current data-driven economy. The rapid integration of AI across sectors brings unprecedented opportunities, but these must be pursued hand-in-hand with a deep awareness of the associated legal, ethical, and societal responsibilities.

Proactive compliance, especially with landmark legislation like the EU AI Act and its structured, risk-based approach, is key. It enables businesses to effectively manage potential legal liabilities, mitigate reputational risks associated with AI failures or misuse, and crucially, demonstrate a commitment to ethical practices. This commitment is increasingly valued by customers, partners, and regulators alike, potentially enabling businesses to seize market opportunities and build essential public trust.

The regulatory environment, as we’ve explored, is in constant flux, with jurisdictions worldwide developing and refining their approaches. Therefore, companies must remain vigilant, well-informed (keeping up with the latest updates on AI governance), and ready to adapt quickly to the evolving complexities of navigating global AI rules [Source: Alexander Thamm] [Source: Baker McKenzie].

Treating AI compliance not as a burden but as a strategic imperative, guided by frameworks and steps outlined in this guide to AI compliance laws, will be crucial for long-term success and ethical operation in the age of AI.


Frequently Asked Questions

What is the primary goal of the EU AI Act?

The primary goal is to ensure that AI systems used in the European Union are safe, transparent, non-discriminatory, and environmentally friendly. It adopts a risk-based approach, imposing stricter rules on AI systems deemed to pose higher risks.

What concerns are driving new AI regulations?

Key concerns include safety risks (AI causing harm), bias and discrimination, privacy violations, lack of accountability when AI fails, and a lack of transparency or explainability in how AI systems reach decisions.

How does the EU AI Act differ from the US approach to AI regulation?

The EU AI Act is a single, comprehensive law covering AI across sectors, using a risk-based classification. The US approach is currently more fragmented and sector-specific, relying on existing regulatory bodies to address AI risks within their domains, although broader federal discussions are ongoing.

What are the consequences of non-compliance with the EU AI Act?

Non-compliance can lead to significant penalties, including fines of up to €35 million or 7% of a company’s global annual turnover, whichever is higher, for the most serious violations. It can also result in restrictions on deploying or marketing AI systems within the EU.

When do the EU AI Act’s requirements take effect?

The Act enters into force in August 2024. Prohibitions on unacceptable-risk AI systems become enforceable in February 2025. Requirements for high-risk AI systems generally become enforceable during 2026 and 2027, allowing businesses a transition period.

What is the first step toward AI compliance?

The first step is to identify all the jurisdictions where your business operates or intends to use AI, and then inventory all AI systems used internally or offered externally. This helps map which regulations potentially apply to which systems.