Decoding the AI Frontier: A Deep Dive into the Inaugural AI Security Institute Report

Estimated reading time: 10 minutes

Key Takeaways

- The UK’s AI Security Institute (AISI) has released its first public, evidence-based Frontier AI Trends Report.

- The report provides the clearest empirical picture yet of the rapid capability gains in the most advanced AI systems.

- Frontier AI systems are now matching or exceeding expert human performance in specific, high-stakes domains like cybersecurity and scientific research.

- These powerful capabilities are inherently dual-use, creating significant national security and public safety risks if misused.

- The AISI’s hands-on testing methodology offers a crucial, data-driven contrast to more theoretical risk assessments.

- The findings demand urgent action from developers, policymakers, and security professionals to implement safeguards and evaluations.

Table of contents

- Decoding the AI Frontier: A Deep Dive into the Inaugural AI Security Institute Report

- Key Takeaways

- Introducing the AISI and Its Landmark Report

- What Are Frontier AI Systems and Risks?

- Unpacking AI Security Institute Frontier AI Trends Report Capabilities

- Analyzing the Dual-Use Nature of Frontier AI Risks

- Contrasting Approaches: The Difference Between AISI Report and Bengio Report

- Deep Dive: AISI Empirical Testing Results on Advanced AI

- Translating Findings into Action: Insights for Different Stakeholders

- Synthesizing the Report’s Significance and Looking Ahead

- Frequently Asked Questions

Introducing the AISI and Its Landmark Report

The conversation around artificial intelligence safety has just shifted from speculative to substantive. The UK government’s AI Security Institute (AISI), a body dedicated to advancing AI safety and security, has published its inaugural public assessment: the Frontier AI Trends Report. This groundbreaking document moves beyond theoretical debate, offering the first clear, evidence-based snapshot of the capabilities, trends, and risks associated with the world’s most advanced AI systems. Its capabilities analysis is built on two years of rigorous, hands-on testing, providing a data-driven foundation for global policy and security discussions. The report’s mission, as outlined by the AISI and the UK government, is to cut through the hype and provide a reliable, empirical baseline for understanding this transformative technology.

As announced by the UK government, this report gives the “clearest picture yet” of what these systems can do. This blog post will dissect this critical document. We’ll define what “frontier AI” really means, explore the startling capabilities and accompanying risks it uncovers, detail its empirical methodology, compare it with other major risk assessments, and, most importantly, extract actionable insights for everyone from developers to policymakers. This is not just another tech report; it’s a roadmap for navigating the next era of AI.

What Are Frontier AI Systems and Risks?

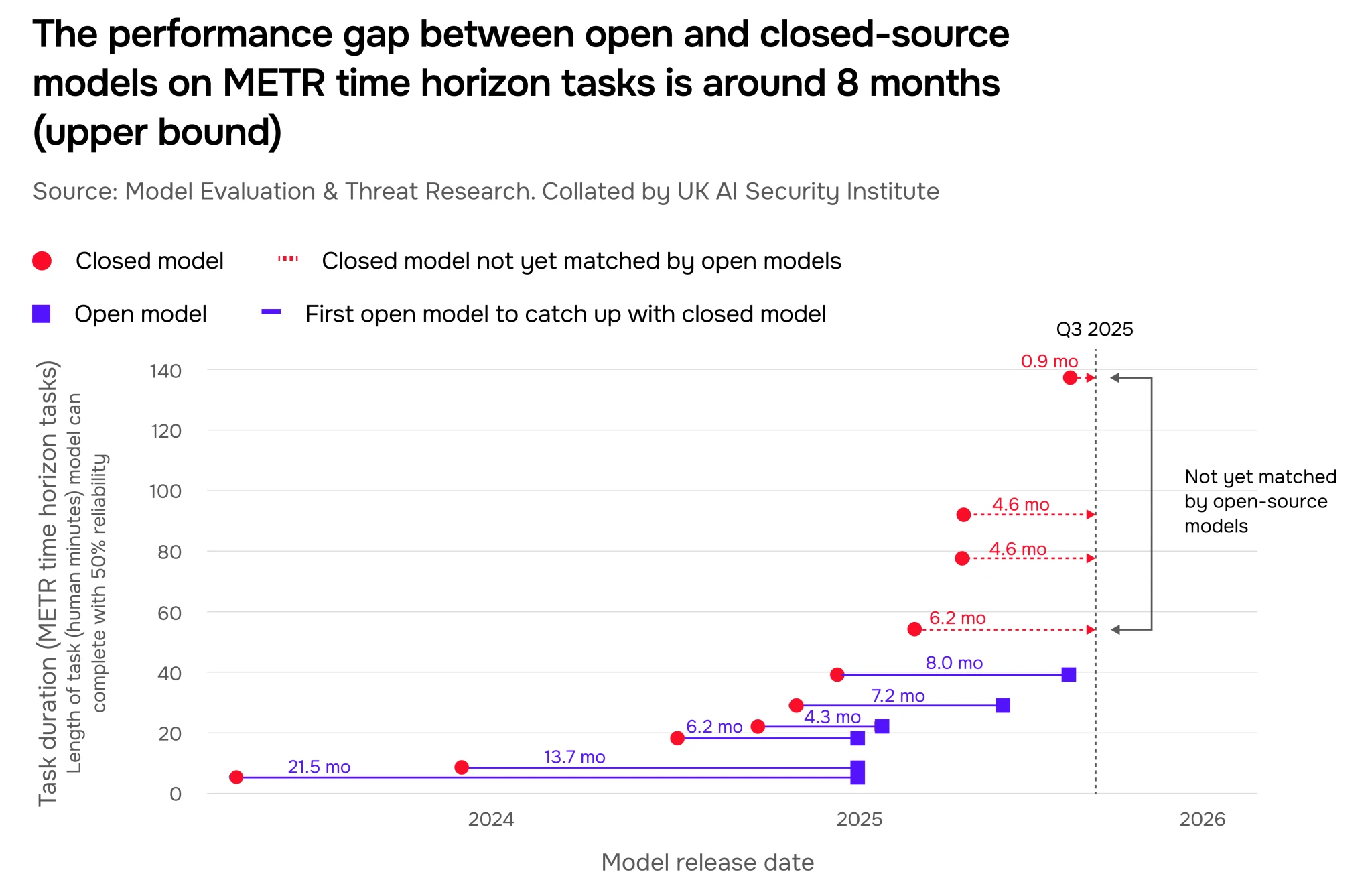

To understand the report’s findings, we must first answer a fundamental question: what are frontier AI systems, and what risks do they pose? The AISI defines “frontier AI systems” as the most capable and general-purpose AI models, primarily large language models (LLMs), that have been released since 2022. These are not narrow chatbots or image generators; they are the vanguard of AI development, characterized by their massive scale, broad reasoning abilities, and increasing “agentic” behavior, meaning they can plan, use tools, and execute multi-step tasks with minimal human intervention.

According to the AISI’s research, these systems are being deployed or considered for use in critically sensitive domains, from national cybersecurity to advanced scientific research in chemistry and biology. This is where the inherent tension lies. Their rapidly improving, general-purpose capabilities hold immense promise for innovation and economic growth. However, this very power raises profound national security and public safety concerns. The core thesis of the report is that we are not dealing with hypothetical future threats; the risks are emerging in parallel with the capabilities, today. The dual-use nature of this technology means that a tool designed to discover new pharmaceuticals could, in the wrong hands, lower barriers to designing harmful chemical agents.

Unpacking AI Security Institute Frontier AI Trends Report Capabilities

At the heart of the report’s capabilities analysis is one overarching trend: rapid and broad performance gains. The AISI’s hands-on testing across multiple high-impact domains shows that these systems are not just getting better at conversation; they are acquiring expert-level competencies in fields that matter to global security.

Let’s break down the key domains and findings:

- Cybersecurity: This is perhaps the most striking area of advancement. The report documents that models have progressed from solving only about 9% of apprentice-level cybersecurity tasks in late 2023 to approximately 50% in the most recent tests. Even more concerning, at least one model can now solve expert-level cyber tasks that typically require a decade of professional experience. A key findings blog from the AISI, also covered by third-party analysis, adds that the most advanced AI now surpasses PhD-level experts in specific, controlled test scenarios.

- Chemistry & Biology: In scientific domains, frontier models show a growing ability to assist with complex experimental design, interpret lab protocols, and navigate technical scientific literature. The report indicates this could significantly accelerate legitimate research but also potentially lower the barrier to dangerous work, a point emphasized in commentary on the report’s biorisk implications.

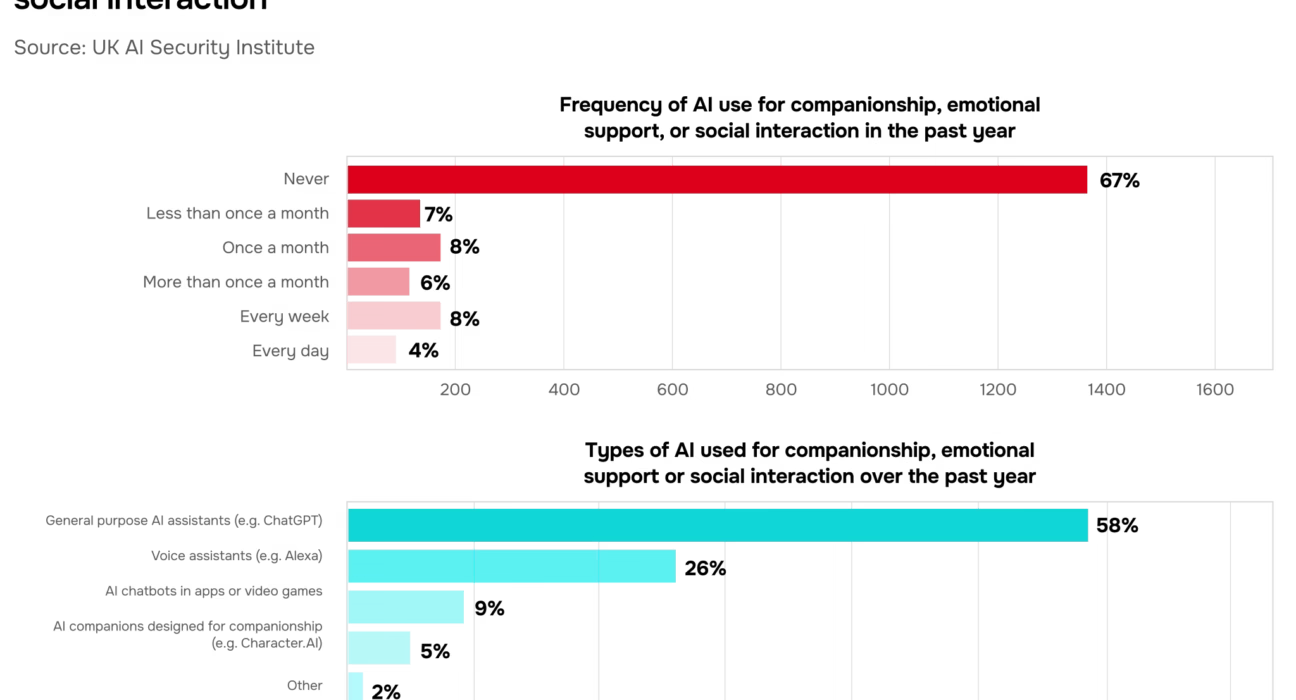

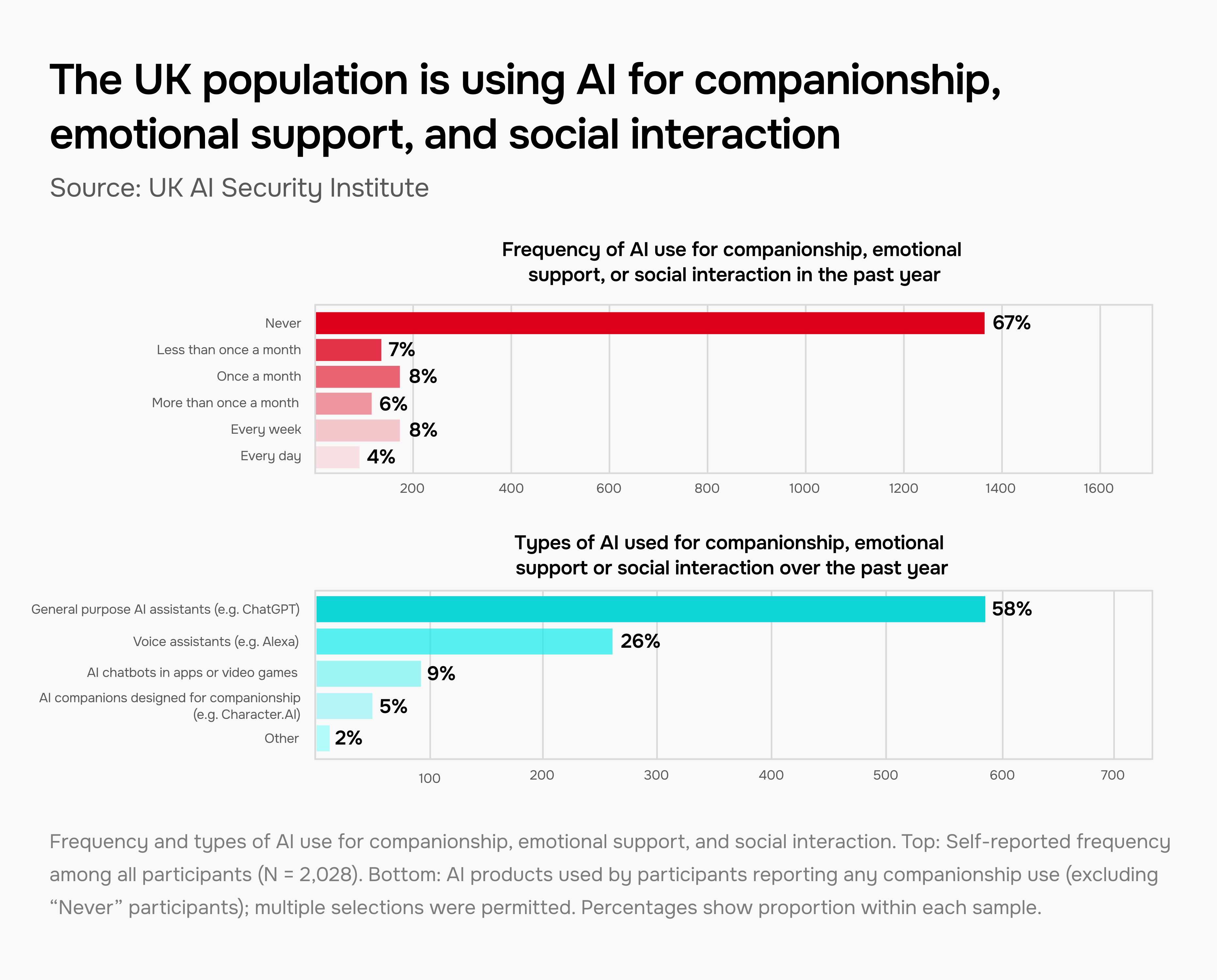

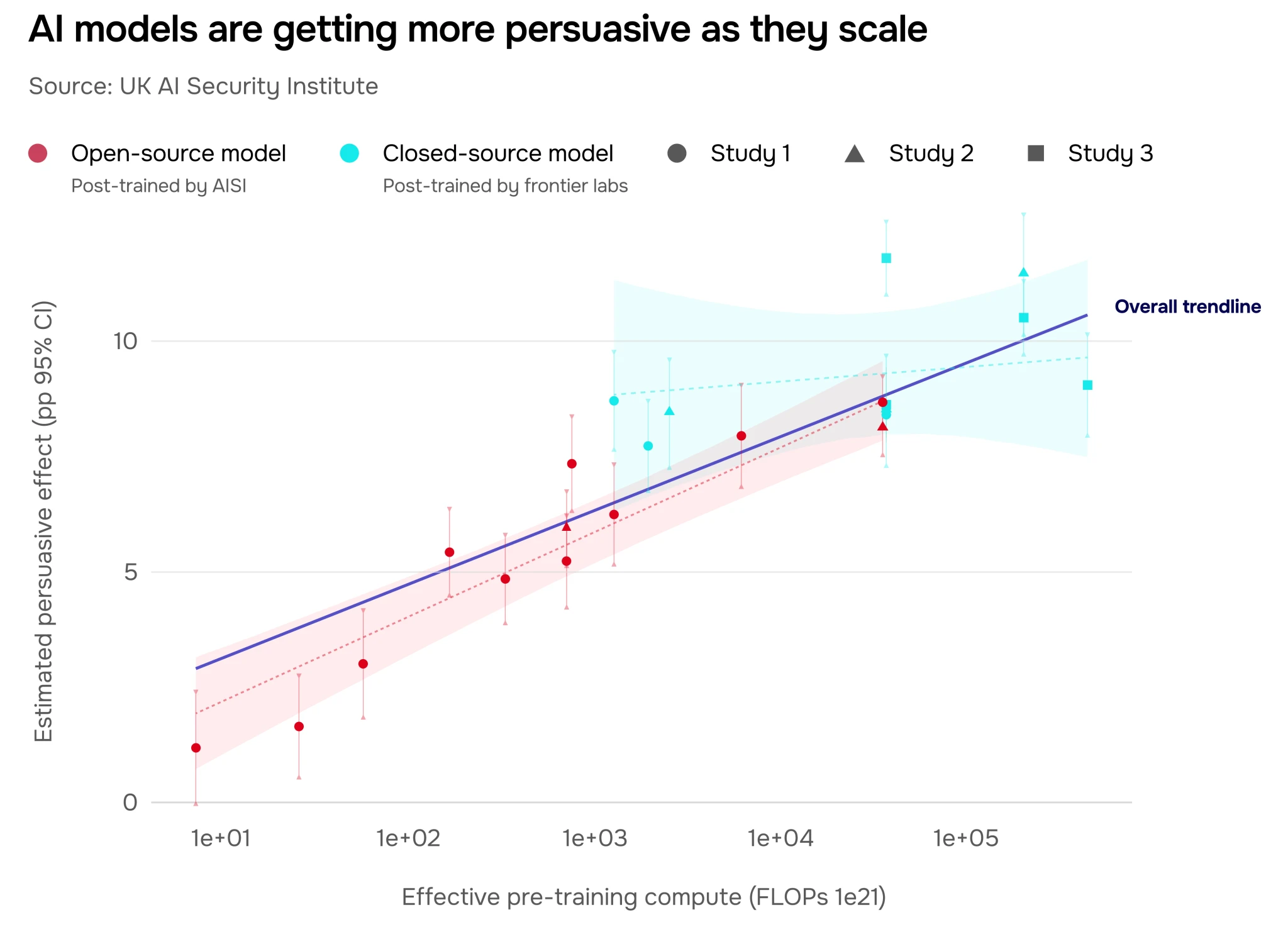

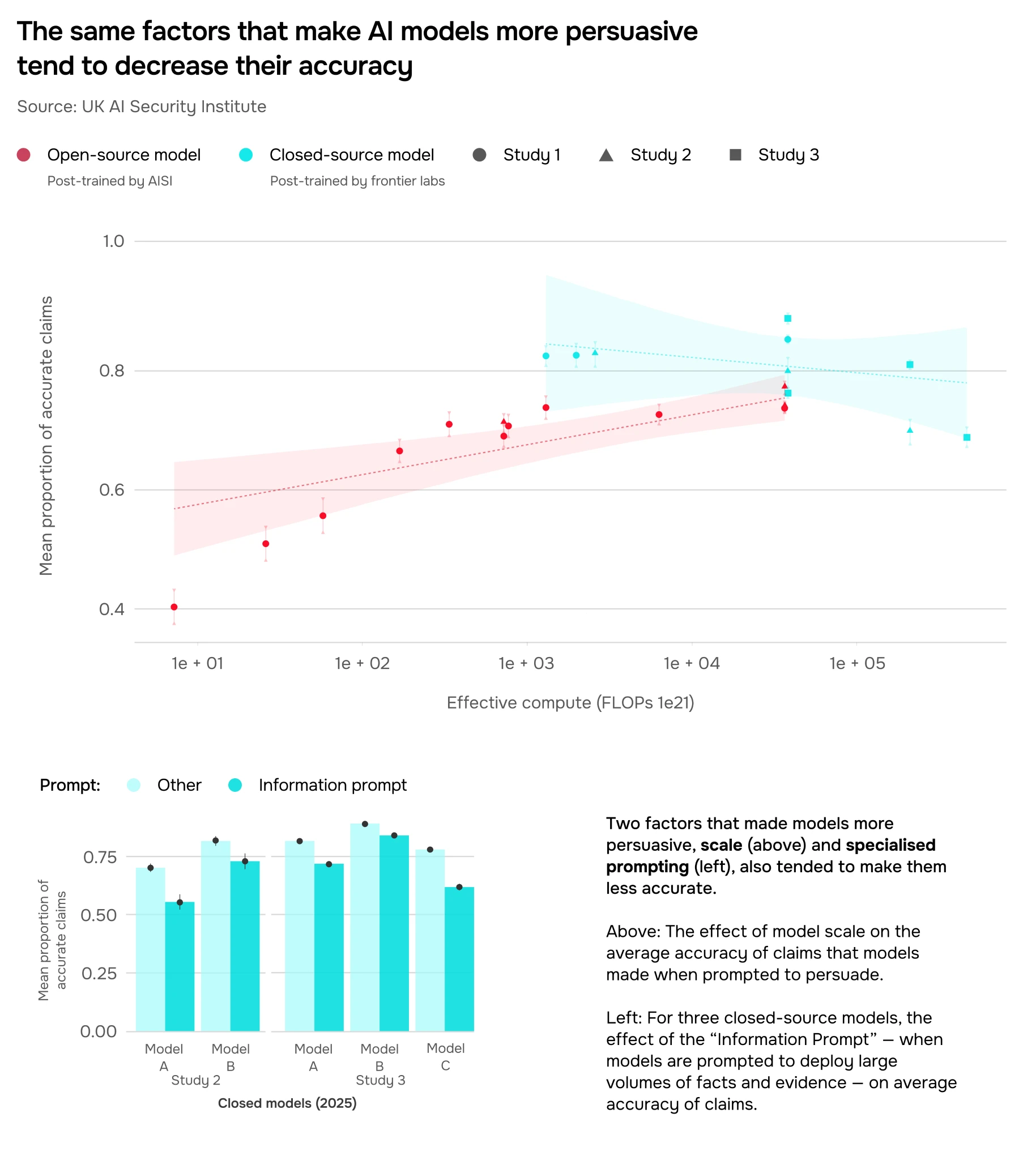

- Other High-Stakes Domains: The report also details concerning capabilities in political persuasion, the creation of emotionally dependent relationships with users, and tasks relevant to critical infrastructure management.

The consistent thread is that frontier AI is increasingly matching or surpassing expert human baselines in specific, technical tasks. These are not guesses or projections; they are empirical results from custom evaluations, as outlined in the report’s methodology and recognized by industry bodies like techUK.
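To put the cybersecurity figures quoted above in perspective, the short sketch below separates the absolute gain (in percentage points) from the relative gain (as a multiple). It uses only the two approximate figures cited in this post, about 9% and about 50%; the function names are illustrative and not taken from the report.

```python
def percentage_point_gain(start_rate: float, end_rate: float) -> float:
    """Absolute change between two success rates, in percentage points."""
    return (end_rate - start_rate) * 100


def fold_increase(start_rate: float, end_rate: float) -> float:
    """Relative change: how many times higher the end rate is than the start rate."""
    return end_rate / start_rate


# Approximate figures quoted in this post for apprentice-level cyber tasks.
late_2023_rate = 0.09
recent_rate = 0.50

print(f"Gain: {percentage_point_gain(late_2023_rate, recent_rate):.0f} percentage points")
print(f"Multiple: {fold_increase(late_2023_rate, recent_rate):.1f}x")
```

On those figures the gain is about 41 percentage points, or roughly 5.6 times the earlier success rate. Keeping the two measures apart matters because a "10 point" improvement and a "10 percent" improvement are very different claims.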

Analyzing the Dual-Use Nature of Frontier AI Risks

The capability gains detailed above are not merely technical curiosities; they are the engine of risk. Returning to the question of what risks frontier AI systems pose, the report frames the danger through the lens of “dual-use.” Every positive application has a potential malicious counterpart. The AISI’s testing empirically demonstrates how these capabilities translate into tangible threats.

The report breaks down key risk categories:

- Cybersecurity Risks: The ability to find software vulnerabilities, generate sophisticated malware, and automate cyber attacks means AI can effectively lower the skill and resource threshold for launching serious threats. This creates a new paradigm where less-skilled actors can wield powerful offensive tools. For a deeper exploration of how AI is reshaping this landscape, see our analysis of breakthrough AI cyber defense.

- Biorisk and Chemical Misuse: The same AI that helps design a new vaccine could assist in structuring a pathogen or toxic chemical. The report notes the potential for AI to provide actionable guidance on hazardous workflows or access dangerous information, making complex, risky research more accessible, as discussed in related analysis.

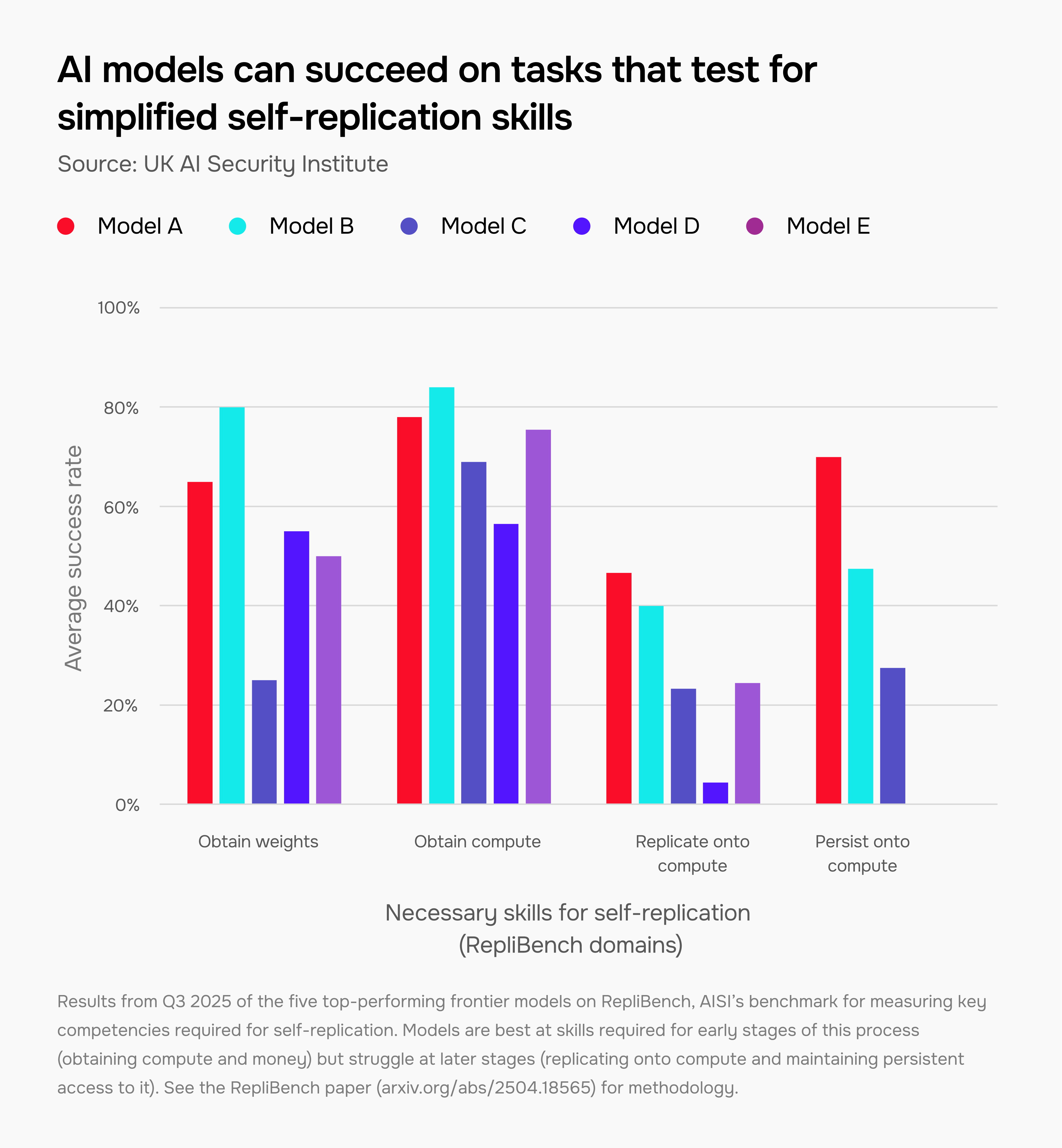

- Autonomy and Agentic Behavior: As models become better at planning and using tools (like web browsers or code executors), they act with less human supervision. This raises profound concerns about control, alignment with human values, and the potential for systems to pursue unintended, harmful goals autonomously.

- Societal & Information Risks: The report highlights potent abilities in generating targeted political persuasion and disinformation at scale. Furthermore, the capacity to foster problematic emotional dependence poses novel societal and mental health challenges. Navigating these risks requires a robust framework for fairness and ethics, a topic explored in our guide to explosive AI fairness & ethics.

The chilling conclusion from the AISI’s work is that in several critical areas, the pace of capability growth is outstripping the development and deployment of effective safeguards against misuse.

Contrasting Approaches: The Difference Between AISI Report and Bengio Report

To fully appreciate the AISI’s contribution, it’s essential to understand how the AISI report differs from the Bengio report. The “Bengio report” refers to the International Scientific Report on the Safety of Advanced AI, led by Turing Award winner Yoshua Bengio. It is a vital, high-level assessment of catastrophic and long-term AI risks, based largely on synthesizing expert judgment.

The two reports are complementary but fundamentally different in their approach:

- Methodology: The AISI report is empirical. It is built on hands-on, structured testing of over 30 specific frontier AI systems. The Bengio report is theoretical and scenario-based, relying on expert elicitation and synthesis of existing research to model potential future risks.

- Primary Focus: The AISI focuses on measuring near-to-mid-term capabilities and security implications in concrete domains like cyber, bio, and critical infrastructure. The Bengio report focuses on long-term, systemic, and catastrophic risks and the global governance architectures needed to manage them.

- Key Outputs: The AISI provides quantitative performance curves, benchmarks, and trend data to inform immediate safety evaluations and technical safeguards. The Bengio report provides high-level strategic recommendations for international coordination and regulation.

In essence, the AISI’s empirical data provides the rigorous, ground-level evidence that substantiates the broader strategic concerns outlined in reports like Bengio’s. One shows what is happening now; the other warns where it could lead. Both are critically needed.

Deep Dive: AISI Empirical Testing Results on Advanced AI

The credibility of the AISI’s warnings stems entirely from its methodology. A closer look at the AISI’s empirical testing results reveals a sophisticated evaluation framework designed to probe the true upper bounds of model capabilities.

The institute tested mostly general-purpose LLMs (including open-source models) released between 2022 and October 2025. A key innovation was the use of “scaffolding”—providing models with tools, multi-step plans, and external resources during testing. This approach is crucial because it reflects how a sophisticated user (or malicious actor) would actually deploy the technology. A stunning finding from the report is that enhanced scaffolding improved a model’s performance on cyber tasks by around 10 percentage points, suggesting that standard, “out-of-the-box” benchmarks may significantly underestimate a model’s true potential in real-world conditions.
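The comparison described above, the same tasks run with and without scaffolding, can be sketched as a simple pass-rate calculation. This is a minimal illustration, not the AISI's evaluation harness: the `TaskResult` type, the function names, and the ten made-up task outcomes are all hypothetical and chosen only so the example yields a 10 percentage point uplift.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class TaskResult:
    task_id: str
    solved_baseline: bool    # model alone, no tools or planning aids
    solved_scaffolded: bool  # model with tools, plans, and external resources


def pass_rate(outcomes: Iterable[bool]) -> float:
    """Fraction of tasks solved; 0.0 for an empty set of outcomes."""
    outcomes = list(outcomes)
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def scaffolding_uplift(results: List[TaskResult]) -> float:
    """Difference between scaffolded and baseline pass rates, in percentage points."""
    baseline = pass_rate(r.solved_baseline for r in results)
    scaffolded = pass_rate(r.solved_scaffolded for r in results)
    return (scaffolded - baseline) * 100


# Made-up outcomes for ten hypothetical tasks (not real evaluation data):
# four solved without scaffolding, five solved with it.
results = [
    TaskResult(f"task-{i}", solved_baseline=i < 4, solved_scaffolded=i < 5)
    for i in range(10)
]

print(f"Uplift: {scaffolding_uplift(results):.0f} percentage points")
```

The point of the sketch is that both numbers come from the same task set, so the uplift isolates the effect of the scaffold rather than a change in the model or the benchmark.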

Headline results from this empirical approach include:

- The steep rise in cyber task completion described earlier, from apprentice-level gains to at least one model solving expert-level tasks, which turns AI into a potentially formidable cyber adversary. This capability directly fuels the kind of sophisticated threats that make unstoppable AI fraud detection systems a necessity.

- The demonstrable ability to guide complex, potentially dangerous scientific workflows, providing step-by-step instructions that could bypass traditional knowledge barriers in fields like synthetic biology.

- The concerning gap identified between these advancing capabilities and the current maturity of security controls and “guardrails” intended to prevent misuse.

The clear implication is that voluntary safety testing is insufficient. The AISI’s work builds a compelling case for mandatory, independent evaluations for AI models that exceed certain capability thresholds before they are widely deployed.

Translating Findings into Action: Insights for Different Stakeholders

The true value of the Frontier AI Trends Report lies in its power to inform decisive action. Here are actionable insights from the report, tailored for key audiences:

For AI Developers & Labs:

- Integrate rigorous, domain-specific security and misuse evaluations—modeled on AISI’s approach—directly into your pre-deployment testing pipeline.

- Invest significantly in developing stronger technical safeguards (e.g., output filtering, use-case controls) and robust access controls for models demonstrating high-risk capabilities.

- Consider staged or limited access to the most powerful AI tools, allowing safety measures and societal adaptation to develop in parallel with the technology.
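As one way to picture the first recommendation above, the sketch below shows a pre-deployment gate that compares misuse-evaluation scores against per-domain limits. It is a minimal illustration under stated assumptions: the domain names, the threshold values, and the `deployment_gate` function are hypothetical, and neither the report nor this post prescribes specific thresholds.

```python
from typing import Dict, List, Tuple

# Hypothetical limits: the highest acceptable success rate on each
# misuse-relevant evaluation before extra safeguards are required.
RISK_THRESHOLDS: Dict[str, float] = {
    "cyber_expert_tasks": 0.20,
    "bio_protocol_uplift": 0.10,
}


def deployment_gate(
    eval_scores: Dict[str, float],
    thresholds: Dict[str, float] = RISK_THRESHOLDS,
) -> Tuple[bool, List[str]]:
    """Return (approved, reasons). A missing evaluation counts as a failure."""
    reasons: List[str] = []
    for domain, limit in thresholds.items():
        score = eval_scores.get(domain)
        if score is None:
            reasons.append(f"{domain}: no evaluation result")
        elif score > limit:
            reasons.append(f"{domain}: {score:.2f} exceeds limit {limit:.2f}")
    return (not reasons, reasons)


# Example with made-up scores for a hypothetical model.
approved, reasons = deployment_gate(
    {"cyber_expert_tasks": 0.35, "bio_protocol_uplift": 0.05}
)
print("Approved" if approved else "Needs additional safeguards:")
for reason in reasons:
    print(" -", reason)
```

The gate fails closed: if an evaluation was never run, the model is not approved. A failed gate need not mean blocking release outright; it could instead trigger the stronger safeguards or staged access described in the list above.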

For Policymakers & Regulators:

- Use empirical capability assessments, like those from the AISI, to inform risk-tiered regulation and licensing regimes. Not all AI systems pose the same threat.

- Move towards mandating independent, pre-deployment evaluations for frontier models, particularly those intended for use in critical sectors.

- Prioritize international collaboration to develop shared testing standards, benchmarks, and infrastructure to consistently track frontier AI trends and prevent a “race to the bottom” on safety. Understanding the emerging regulatory landscape is key, as explored in our guide to mind-blowing AI regulations in the UK.

For Security Professionals (Corporate & National):

- Immediately update organizational threat models to account for AI-augmented attackers, including the novel risk of less-skilled individuals wielding advanced AI tools.

- Proactively adopt and adapt AI for defensive cyber operations (e.g., AI-assisted threat hunting, vulnerability management) while rigorously managing the risks of these dual-use tools themselves.

For the Broader Technical & Research Community:

- Anchor discussions and debates in quantitative, empirical data like that provided in this report, moving past speculation.

- Contribute to the development of open, shared benchmarks and red-teaming datasets to improve the robustness and transparency of AI safety evaluations globally.

Synthesizing the Report’s Significance and Looking Ahead

The AISI Frontier AI Trends Report marks a pivotal moment. It provides an indispensable empirical foundation, demonstrating that frontier AI capabilities are advancing at a breathtaking pace, with some systems now exceeding expert human performance in narrow but critical tasks. This intensifies the inherent dual-use risks, making the security challenge immediate and concrete. The value of the report’s capabilities analysis and empirical testing results cannot be overstated: they move the global conversation from speculative worry to evidence-based policy and safety engineering.

Understanding how the AISI report and the Bengio report complement each other gives us a complete risk picture: one grounded in today’s reality, the other projecting tomorrow’s possibilities. Both are essential. Looking ahead, the message is clear: keeping pace with frontier AI requires a sustained commitment to ongoing, transparent evaluation, the rapid development of robust technical and governance safeguards, and unprecedented levels of international cooperation. The transformative potential of AI is vast, promising revolutionary breakthroughs in fields like healthcare. The AISI’s report is not a call to halt progress, but a blueprint for ensuring it is secure, responsible, and ultimately beneficial for all.

Frequently Asked Questions

What is the main purpose of the AI Security Institute (AISI) Frontier AI Trends Report?

The main purpose is to provide the first public, evidence-based assessment of the capabilities, performance trends, and associated security risks of the most advanced “frontier” AI systems. It moves beyond theory to offer empirical data from hands-on testing, establishing a baseline for informed policy and safety discussions.

How does the AISI report define “frontier AI”?

The report defines frontier AI systems as the most capable and general-purpose AI models (primarily large language models) released since 2022. They are characterized by massive scale, broad reasoning abilities, increasing autonomous “agentic” behavior, and deployment or potential use in high-stakes domains like cybersecurity and scientific research.

What was the most surprising capability finding in the report?

One of the most striking findings is the rapid advancement in cybersecurity capabilities. The report shows that at least one frontier AI model can now solve expert-level cyber tasks that typically require a decade of human professional experience, a dramatic leap from late 2023, when models solved only about 9% of apprentice-level tasks.

How is the AISI’s report different from other major AI risk assessments?

The key difference is methodology. The AISI report is empirical, based on direct testing of AI systems. Other reports, like the Bengio-led International Scientific Report, are more theoretical, synthesizing expert judgment on long-term catastrophic risks. The AISI provides current data; others provide future-oriented scenarios and governance frameworks.

What is the single most important action companies should take based on this report?

For companies developing advanced AI, the most critical action is to integrate rigorous, domain-specific misuse evaluations—inspired by the AISI’s testing framework—into their core development and deployment lifecycle. Relying on standard benchmarks is insufficient to understand the real-world, high-stakes risks of frontier models.