AMD MI400 AI Chips: The Next-Gen Challenger in the AI Hardware Race

Estimated reading time: 10 minutes

Key Takeaways

- The AMD MI400 AI chips represent AMD’s next major advancement in AI accelerators, expected in 2026, positioning AMD as a critical alternative to NVIDIA.

- Architectural enhancements include a 50% increase in memory capacity to 432GB HBM4 and more than double the memory bandwidth at 19.6TB/sec.

- Compute performance sees a doubling in FP4 and FP8 operations, with scale-out bandwidth optimized for distributed training.

- AMD’s AI silicon roadmap extends to the MI500 series in 2027, promising further leaps in performance and scale.

- Enterprises can expect a significant AI performance boost with faster training times, larger model deployment, and reduced total cost of ownership.

Table of contents

- AMD MI400 AI Chips: The Next-Gen Challenger in the AI Hardware Race

- Key Takeaways

- Introduction: The AI Hardware Race and AMD’s Entry

- The Evolution: From MI300 to MI400 with CDNA 5 Architecture

- Architectural Deep Dive: Specs That Matter

- The AI Silicon Roadmap: MI400, Helios, and a Glimpse at MI500

- Head-to-Head: How MI450 Stacks Against the Competition

- Enterprise AI Performance Boost: Tangible Benefits for Businesses

- Strategic Context: Why AMD’s Roadmap Matters in the Market

- Frequently Asked Questions

Introduction: The AI Hardware Race and AMD’s Entry

Demand for specialized AI processors is climbing fast as organizations worldwide deploy advanced machine learning models. NVIDIA dominates this AI hardware race, and the search for viable alternatives is intensifying. Enter the AMD MI400 AI chips: AMD’s Instinct MI400 series, the company’s next major advancement in AI accelerators, expected to arrive in 2026. This series positions AMD as a critical alternative to NVIDIA and gives buyers a second competitive option in the market.

This post explores how the MI400 series delivers a meaningful enterprise AI performance boost, covering its specifications, the broader AI silicon roadmap, an MI500 preview for 2027, and the tangible gains for enterprise deployments. For readers evaluating next-gen AI processors, the AMD MI400 AI chips are a pivotal development worth watching.

We’ll structure our deep dive as follows:

- A thorough examination of the MI400 architecture and its enhancements.

- A preview of AMD’s roadmap, including the upcoming MI500 series.

- The direct impacts on enterprise AI deployments and performance.

- The strategic context around AMD’s upcoming AI silicon and what it means for the market.

The Evolution: From MI300 to MI400 with CDNA 5 Architecture

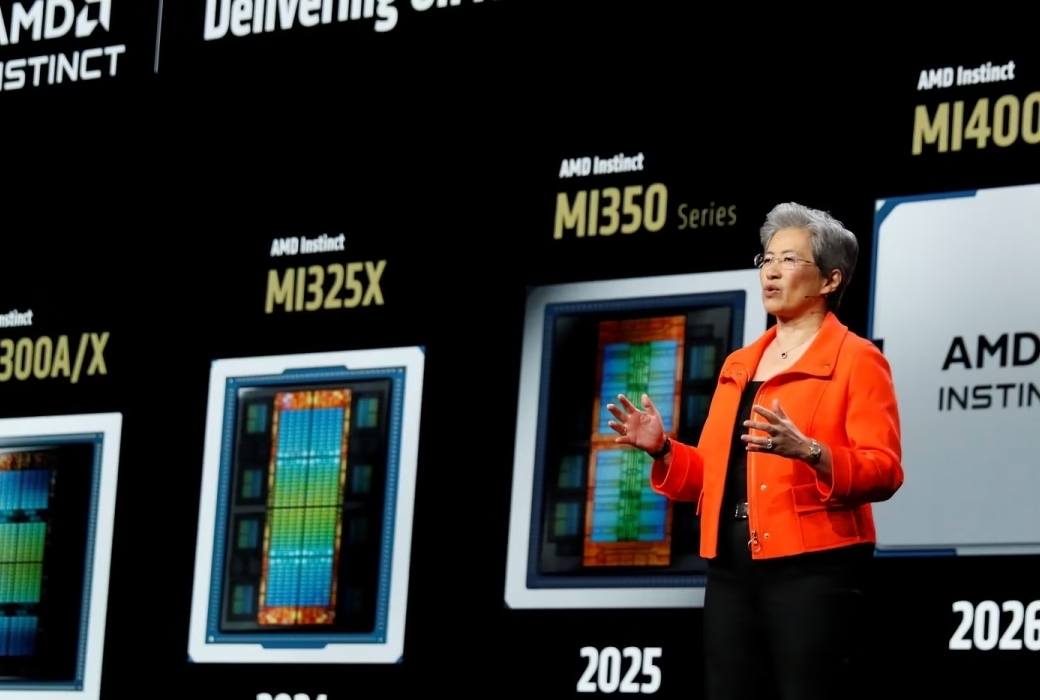

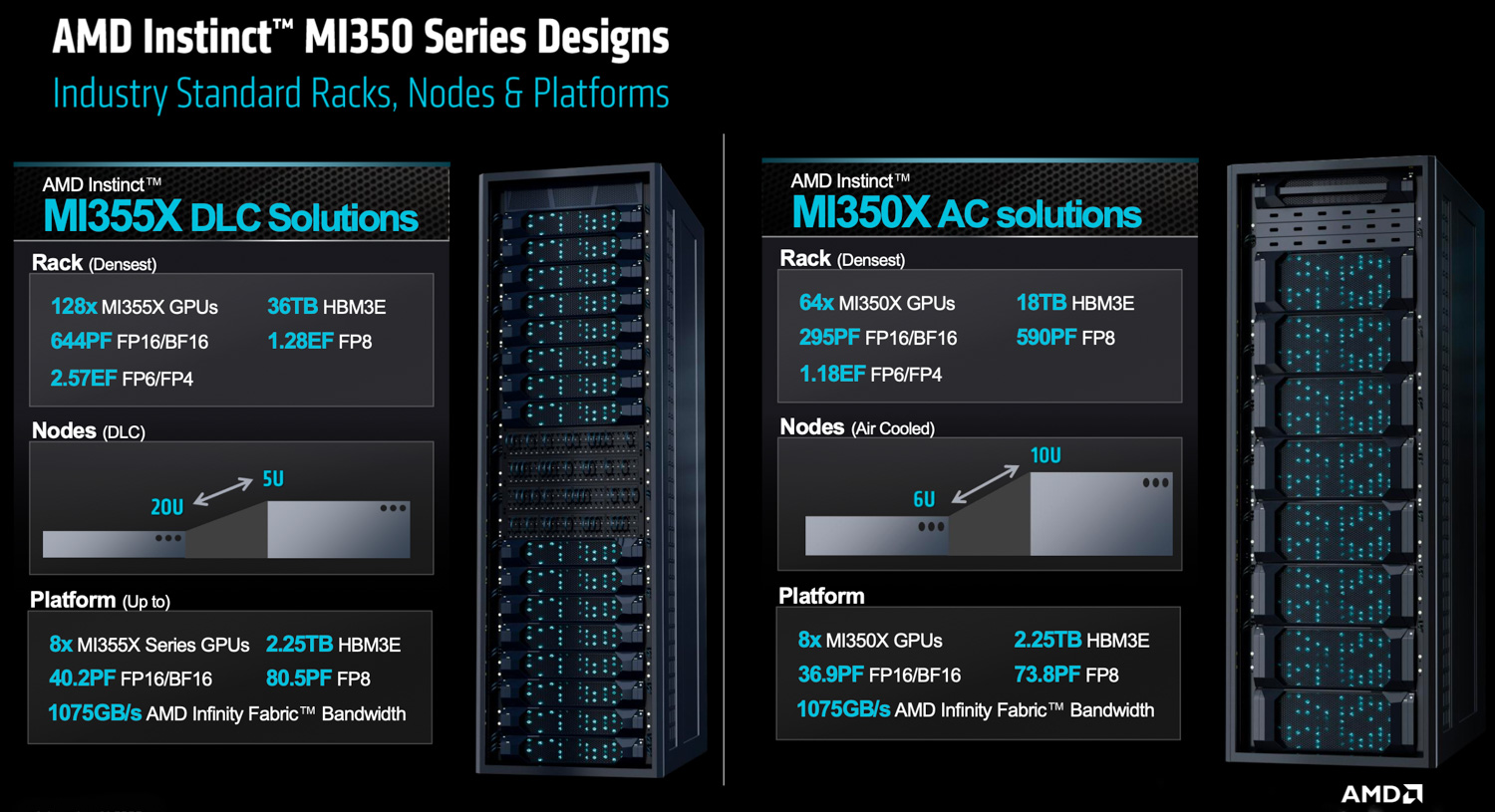

The AMD MI400 AI chips mark a substantial improvement over the current MI300 and MI350 series and are built on the new CDNA 5 architecture. According to the reports cited in this section, the architectural shift is designed to address the growing demands of massive AI models and complex workloads.

Key Architectural Enhancements

Let’s break down the key enhancements that set the MI400 apart:

- Memory Capacity: A staggering 432GB of HBM4, which is a 50% increase over the MI350’s 288GB HBM3E. This boost is crucial for handling larger models without constant data shuffling. Source: TweakTown.

- Memory Bandwidth: 19.6TB/sec, more than double the MI350’s 8TB/sec. This translates to faster data access and processing, reducing bottlenecks in training and inference. Source: TweakTown.

- Compute Performance: On the flagship MI450 series, expect 40 PFLOPs FP4 and 20 PFLOPs FP8, effectively doubling the performance of the MI350. This leap enables more efficient handling of lower-precision computations, which are essential for cost-effective inference. Source: TweakTown.

- Scale-Out Bandwidth: 300GB/sec per GPU for distributed training, facilitating smoother scaling across clusters and reducing communication overhead. Source: TweakTown.
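The generational ratios quoted in the list above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the per-GPU figures given in this post; the dictionary keys and the `generational_ratio` helper are illustrative names, not part of any AMD tooling.

```python
# Per-GPU figures quoted in this post (MI350 vs. MI400 series).
MI350 = {"hbm_gb": 288, "bandwidth_tb_s": 8.0}
MI400 = {"hbm_gb": 432, "bandwidth_tb_s": 19.6}


def generational_ratio(new: dict, old: dict) -> dict:
    """Return new/old for every spec that both generations list."""
    return {key: new[key] / old[key] for key in new if key in old}


ratios = generational_ratio(MI400, MI350)
for spec, ratio in ratios.items():
    print(f"{spec}: {ratio:.2f}x")
```

The capacity ratio works out to 1.5x (the "50% increase"), and the bandwidth ratio to roughly 2.45x, which is where "more than double" comes from.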

MI400 Family Variants

AMD is tailoring the MI400 series to different use cases with specific variants:

- MI455X: Optimized for both training and inference, balancing performance and efficiency.

- MI430X: Designed for HPC deployments, where high computational power is paramount.

- MI440X GPU: Targeted at on-premises enterprise AI, offering robust performance for dedicated infrastructure. Sources: TweakTown and AMD.

Performance Targets and Design Philosophy

Reported performance targets point to sizable gains:

- 2.8x to 4.2x improvement over MI300 in models like DeepSeek R1 and Llama 3.1 405B.

- 40% more tokens per dollar compared to NVIDIA’s offerings, highlighting cost efficiency.

- In certain workloads, up to 10x faster than MI355, though this isn’t universal. Source: XPU Pub.
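A "tokens per dollar" gain is easy to misread as an equal cost reduction. The sketch below converts the 40% figure from the list above into a relative cost per token; it assumes the gain applies uniformly to a workload, which real deployments may not match, and `relative_cost_per_token` is an illustrative helper name.

```python
def relative_cost_per_token(tokens_per_dollar_gain: float) -> float:
    """Cost per token relative to the baseline.

    A gain of 0.40 means 1.4x as many tokens for the same dollar,
    so each token costs 1 / 1.4 of the baseline price.
    """
    return 1.0 / (1.0 + tokens_per_dollar_gain)


gain = 0.40  # "40% more tokens per dollar" as quoted above
relative_cost = relative_cost_per_token(gain)
savings = 1.0 - relative_cost

print(f"relative cost per token: {relative_cost:.3f}")
print(f"cost reduction per token: {savings:.1%}")
```

In other words, 40% more tokens per dollar corresponds to a cost per token of about 71% of the baseline, a reduction of roughly 29% rather than 40%.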

The design philosophy behind the AMD MI400 AI chips prioritizes scaling for massive LLMs and inference by increasing memory and bandwidth to address bottlenecks. Support for FP4 and FP8 lower-precision computations allows for more cost-effective inference, making these chips versatile for various AI tasks. Source: TweakTown.
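To see why memory bandwidth matters for inference, consider a rough upper bound on single-stream decoding speed. The sketch below assumes a dense model in which every weight is read from HBM once per generated token, a batch size of one, and no KV-cache or activation traffic; these are simplifying assumptions, so the numbers are ceilings, not predictions. The function name is illustrative.

```python
def decode_tokens_per_second_bound(
    bandwidth_tb_s: float, params_billion: float, bytes_per_param: float
) -> float:
    """Bandwidth-only ceiling on tokens/sec for one sequence.

    Assumes all weights are streamed from memory once per token.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weight_bytes


# A 405B-parameter dense model (e.g. Llama 3.1 405B) at FP8 and FP4.
for label, bytes_per_param in (("FP8", 1.0), ("FP4", 0.5)):
    mi350 = decode_tokens_per_second_bound(8.0, 405, bytes_per_param)
    mi400 = decode_tokens_per_second_bound(19.6, 405, bytes_per_param)
    print(f"{label}: MI350 <= {mi350:.1f} tok/s, MI400 <= {mi400:.1f} tok/s")
```

Under these assumptions the ceiling scales linearly with bandwidth and inversely with bytes per parameter, which is why higher HBM bandwidth and lower-precision formats both help memory-bound inference.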

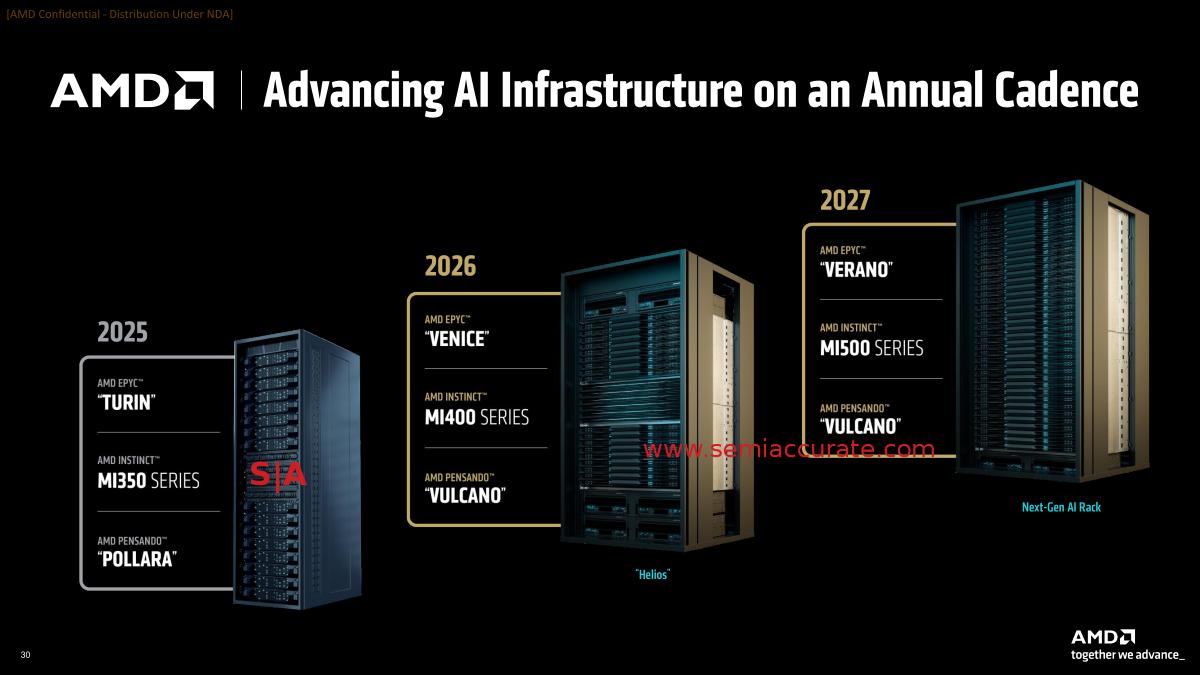

The AI Silicon Roadmap: MI400, Helios, and a Glimpse at MI500

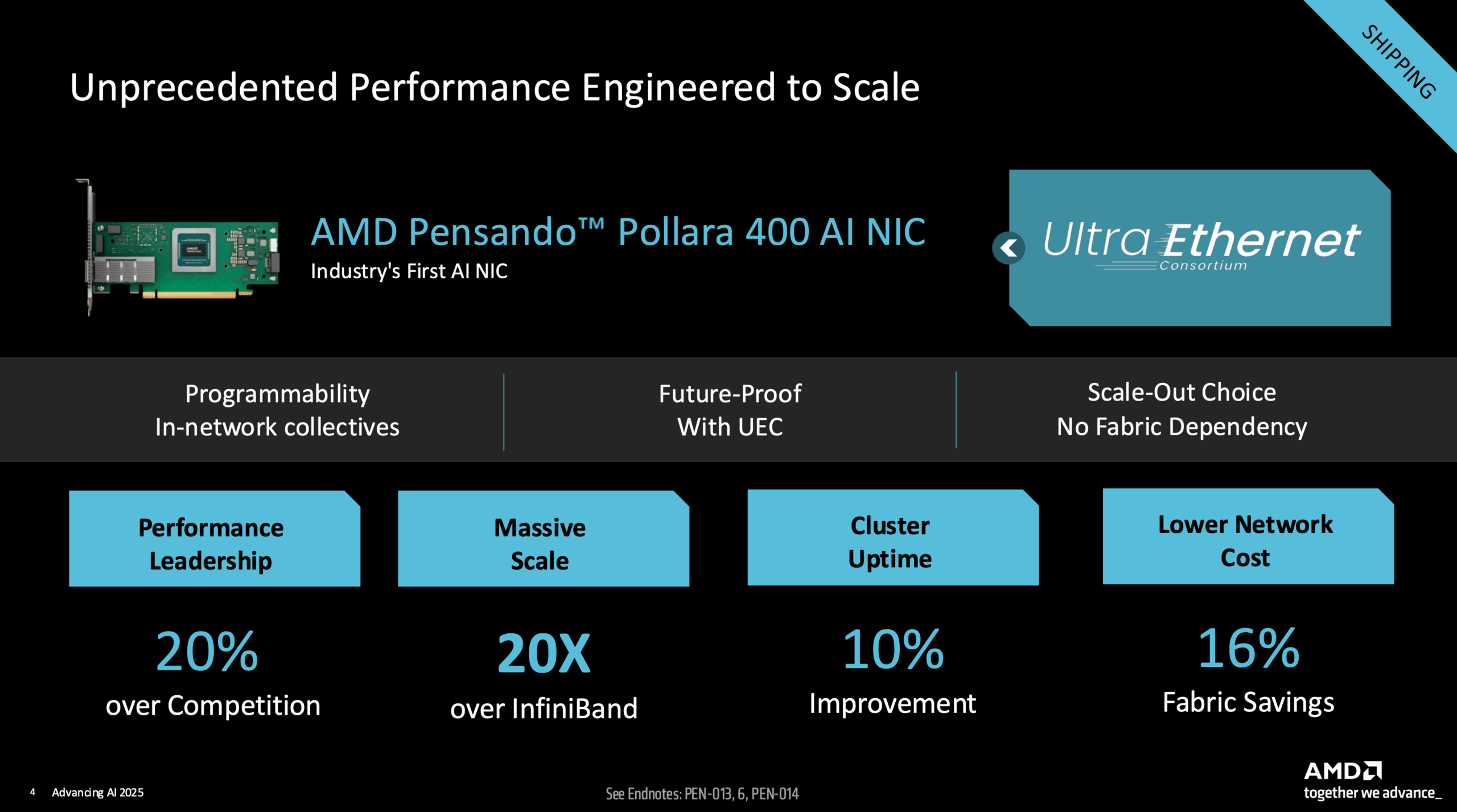

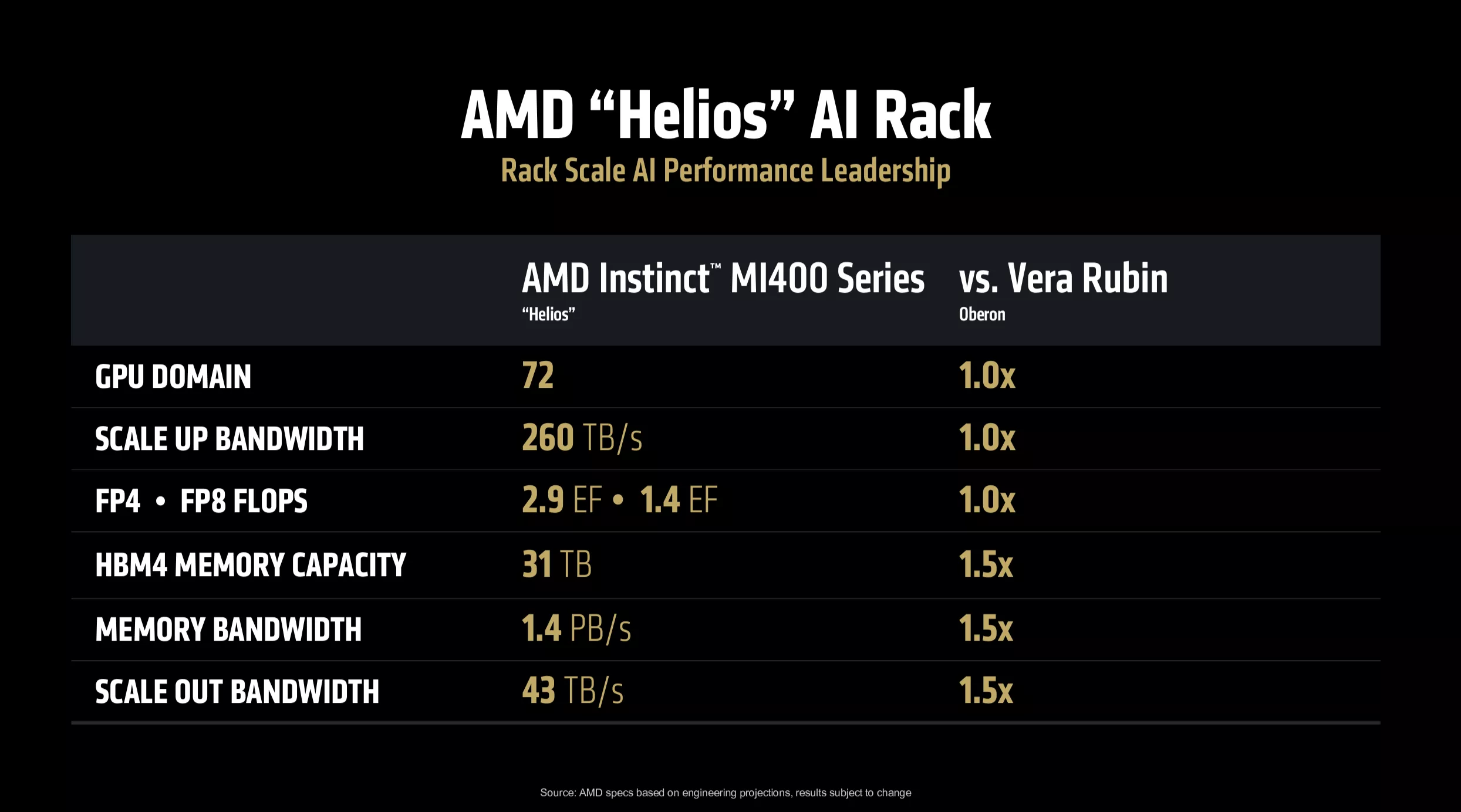

AMD’s AI silicon roadmap outlines a multi-year progression aimed at challenging NVIDIA’s dominance. It starts in 2026 with the MI400 series integrated into rack-scale Helios systems. These systems are designed to match NVIDIA’s Vera Rubin on core specifications while offering a 1.5x advantage in memory capacity and scale-out bandwidth. Sources: XPU Pub, TweakTown, and PenBrief.

Helios will leverage advanced networking protocols like UALoE, UAL, and UEC for efficient cluster communication, ensuring that data flows seamlessly across nodes. This integration is critical for large-scale AI training and inference. Sources: TweakTown and XPU Pub.
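To give a feel for what per-GPU scale-out bandwidth means for distributed training, here is a bandwidth-only estimate of a ring all-reduce, a common way to synchronize gradients. It uses the standard ring all-reduce traffic term of 2(N-1)/N times the payload per GPU. The sketch assumes the full 300GB/sec quoted earlier in this post is usable in each direction and ignores latency, protocol overhead, and overlap with compute; the GPU count and payload size are hypothetical.

```python
def ring_allreduce_seconds(
    payload_gb: float, num_gpus: int, link_gb_s: float
) -> float:
    """Bandwidth-only time for a ring all-reduce of `payload_gb` per GPU."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if num_gpus == 1:
        return 0.0  # nothing to synchronize
    # Each GPU sends (and receives) 2 * (N - 1) / N of the payload in total.
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gb_s


# Hypothetical example: 10 GB of gradients across 8 GPUs at 300 GB/sec.
estimate = ring_allreduce_seconds(payload_gb=10, num_gpus=8, link_gb_s=300)
print(f"estimated all-reduce time: {estimate * 1000:.1f} ms")
```

Because the traffic term approaches twice the payload as the GPU count grows, the per-GPU link rate, not the cluster size, sets the floor on synchronization time in this simple model.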

MI500 Preview for 2027

Looking ahead, the Instinct MI500 series is slated for late 2027. Positioned as an “Ultra” generation, comparable to NVIDIA’s Blackwell Ultra, the MI500 UAL256 is expected to feature 256 physical/logical chips, compared with 144 in NVIDIA’s VR300 NVL576. If that holds, it would be a major leap in scale-out capabilities. Sources: TweakTown and SemiAnalysis.

Projections indicate a 1,000x AI performance increase over previous generations in best-case scenarios for specific workloads. While this is an optimistic figure, it underscores AMD’s ambitious targets. Source: Data Center Dynamics.

The long-term strategy focuses on:

- Continuous growth in memory and bandwidth to support ever-larger models.

- Enhanced scale-out capabilities for efficient clustering.

- Power efficiency with competitive thermal design power (TDP).

- Support for advanced formats like FP4 and FP6 to balance accuracy and performance.

Head-to-Head: How MI450 Stacks Against the Competition

To understand the competitive landscape, let’s compare the AMD MI450 with its rivals in key areas:

| Feature | AMD MI450 | Competition (e.g., NVIDIA) | Advantage |
|---|---|---|---|
| Memory Capacity | 432GB HBM4 | ~288GB HBM3E | 1.5x advantage |
| Memory Bandwidth | 19.6TB/sec | Equivalent | Equivalent |
| FP4/FP8 Compute | 40/20 PFLOPs | Equivalent | Equivalent |
| Scale-Up Bandwidth | High | Equivalent | Equivalent |
| Scale-Out Bandwidth | 300GB/sec per GPU | Lower | 1.5x advantage |

Source: TweakTown

This table highlights where the AMD MI400 AI chips excel, particularly in memory capacity and scale-out bandwidth, giving enterprises reasons to consider AMD for their AI infrastructure.

Enterprise AI Performance Boost: Tangible Benefits for Businesses

The enterprise AI performance boost delivered by the MI400 series is not just theoretical; it translates into real-world advantages:

- Faster Training Times: The doubled bandwidth and higher compute performance accelerate the training of large language models (LLMs), reducing time-to-market for AI applications.

- Larger Model Deployment: With a 50% increase in memory, enterprises can deploy larger models without the complexity of distributed systems, simplifying infrastructure and management.

- Reduced Total Cost of Ownership (TCO): The 40% improvement in token efficiency and competitive pricing mean lower costs per inference, making AI deployments more economical. Source: XPU Pub.

- Flexible Formats: Support for FP4 and FP8 allows businesses to trade off between accuracy, speed, and power consumption, optimizing for specific use cases.
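The link between memory capacity, precision format, and "larger model deployment" can be made concrete with a weights-only footprint estimate. This sketch counts model weights alone; KV cache, activations, runtime overhead, and any per-block scaling metadata used by low-precision formats all need additional memory, so treat "fits" as a necessary condition rather than a guarantee. The helper names are illustrative.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_footprint_gb(params_billion: float, fmt: str) -> float:
    """Weights-only footprint in GB (1e9 params x bytes per param / 1e9)."""
    return params_billion * BYTES_PER_PARAM[fmt]


def fits_on_one_gpu(params_billion: float, fmt: str, hbm_gb: float) -> bool:
    """True if the weights alone fit in the given HBM capacity."""
    return weight_footprint_gb(params_billion, fmt) <= hbm_gb


# A 405B-parameter model against 288GB (MI350) and 432GB (MI400) of HBM.
for fmt in BYTES_PER_PARAM:
    size = weight_footprint_gb(405, fmt)
    print(
        f"{fmt}: {size:.1f} GB | "
        f"288GB: {fits_on_one_gpu(405, fmt, 288)} | "
        f"432GB: {fits_on_one_gpu(405, fmt, 432)}"
    )
```

Under this weights-only view, a 405B-parameter model at FP8 exceeds 288GB but fits within 432GB, which illustrates how the capacity increase can move a model from multi-GPU sharding to a single accelerator.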

Applications and Use Cases

The AMD MI400 AI chips are designed for a variety of demanding applications:

- LLM training and fine-tuning for generative AI models.

- High-throughput generative AI inference in real-time scenarios.

- Massive recommender systems that require rapid data processing.

- Scientific computing tasks that benefit from high memory and compute capabilities.

By addressing these areas, AMD ensures that its chips deliver the enterprise AI performance boost needed to keep pace with evolving AI demands. As AI chip demand fuels growth, alternatives like AMD become increasingly valuable.

Strategic Context: Why AMD’s Roadmap Matters in the Market

In the broader market, AMD’s AI silicon roadmap positions the company as a credible alternative to NVIDIA. AMD matches NVIDIA on core specifications and pulls ahead in memory capacity and scale-out bandwidth. The competition is also driving up power budgets: the MI450X reportedly pushed NVIDIA to raise the TDP of its Rubin VR200 from 1800W to 2300W, and AMD responded with a 2500W TDP, which highlights the thermal challenges in this race. Source: TweakTown.

Why does this matter for enterprises and the industry?

- Sustained Commitment: AMD’s roadmap through 2027 shows a long-term dedication to AI hardware, providing confidence for investors and customers.

- Targeted Innovations: By focusing on enterprise pain points like memory bottlenecks and scale-out, AMD addresses real needs.

- Price-Performance Focus: With competitive pricing and efficiency, AMD offers a cost-effective path to advanced AI capabilities.

- Avoiding Lock-in: Alternatives to NVIDIA help prevent vendor lock-in, fostering a healthier competitive landscape. Source: PenBrief.

To recap, the AMD MI400 AI chips bring significant spec improvements, the MI500 preview for 2027 promises further leaps, and the overall enterprise AI performance boost is grounded in AMD’s strategic AI silicon roadmap.

As AI models continue to grow in size and complexity, AMD’s roadmap ensures that competitive, accessible compute remains available. Businesses should closely monitor these advancements to future-proof their AI infrastructure.


Frequently Asked Questions

When will the AMD MI400 AI chips be released?

The AMD Instinct MI400 series is expected to launch in 2026, with specific variants rolling out throughout the year.

What is the CDNA 5 architecture?

CDNA 5 is AMD’s next-generation GPU architecture designed specifically for compute and AI workloads. It introduces enhancements in memory capacity, bandwidth, and compute efficiency, forming the foundation of the MI400 series.

How do the AMD MI400 chips compare to NVIDIA’s offerings?

The MI400 series competes closely with NVIDIA’s accelerators, offering advantages in memory capacity (1.5x) and scale-out bandwidth. In other areas like compute performance and memory bandwidth, they are equivalent, making AMD a strong alternative.

What are the key benefits for enterprises?

Enterprises can expect faster training times, the ability to deploy larger models, reduced total cost of ownership, and flexibility in precision formats for inference, leading to a significant AI performance boost.

What is the MI500 preview for 2027?

The MI500 series, slated for late 2027, is AMD’s “Ultra” generation. It is expected to feature 256 physical/logical chips, and projections point to a 1,000x AI performance increase over previous generations in best-case scenarios for specific workloads, which would be a major scale-out leap.

Will AMD’s AI chips work with existing software frameworks?

Yes, AMD is committed to ensuring compatibility with popular AI software frameworks like PyTorch and TensorFlow through its ROCm software stack, minimizing integration hurdles for developers.
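As a practical check, the snippet below reports which accelerator backend an installed PyTorch build targets. It relies on `torch.version.hip` and `torch.version.cuda`, which PyTorch sets according to how the build was compiled; on ROCm builds PyTorch reuses the `torch.cuda` namespace, so existing code that uses `device="cuda"` generally runs unchanged. This is a minimal sketch: it says nothing about MI400 support specifically, which will depend on future ROCm releases, and `describe_torch_backend` is an illustrative helper name.

```python
def describe_torch_backend() -> str:
    """Return which backend the installed PyTorch build was compiled for."""
    try:
        import torch
    except ImportError:
        return "torch-not-installed"

    # torch.version.hip is a version string on ROCm builds, otherwise None.
    if getattr(torch.version, "hip", None):
        return "rocm"
    # torch.version.cuda is a version string on CUDA builds, otherwise None.
    if getattr(torch.version, "cuda", None):
        return "cuda"
    return "cpu-only"


backend = describe_torch_backend()
print(f"PyTorch backend: {backend}")
```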