Apple AR Glasses Interface Leak – What the Rumors Reveal

Estimated reading time: 12 minutes

Key Takeaways

- The Apple AR glasses interface leak points to a potential 2026 unveiling, featuring a sleek, controller-free design built on visionOS features.

- Interaction relies on gesture controls and advanced eye tracking rather than handheld controllers, aiming for a natural, controller-free user experience.

- Privacy is paramount, with on-device processing for sensitive data like gaze tracking and no always-on cameras for basic overlays.

- Deep integration with the iPhone ecosystem and ARKit updates could give the glasses a rich app landscape from day one.

- Apple positions these glasses as everyday productivity tools, distinct from VR headsets, aiming to redefine wearable computing.

Table of contents

- Apple AR Glasses Interface Leak – What the Rumors Reveal

- Key Takeaways

- Opening Hook & Introduction

- Dissecting the Leaked Interface Design

- Deep Dive into Gesture Controls

- Exploring Eye Tracking as a Primary Input Method

- VisionOS Integration and Software Foundation

- Bringing It All Together: The Complete User Experience

- Competitive Context and Market Positioning

- Real-World Use Cases and Potential Applications

- Challenges and Barriers to Adoption

- Timeline and Expected Launch

- Closing Vision and Call to Action

- Frequently Asked Questions

Opening Hook & Introduction

Imagine slipping on a pair of lightweight glasses that seamlessly blend the digital and physical worlds. This isn’t science fiction; it’s the future hinted at by the recent Apple AR glasses interface leak. According to credible reports, Apple is targeting 2026 to unveil its AR glasses, a device that promises to be radically different from the Vision Pro.

Drawing on design patents, prototype reports, and coverage from outlets like AppleInsider, these leaks reveal a focus on advanced visionOS features, AI-driven visual lookups, and a design that overlays digital elements onto reality without clunky controllers. If the leaks hold, this could be Apple’s next wearable revolution.

Dissecting the Leaked Interface Design

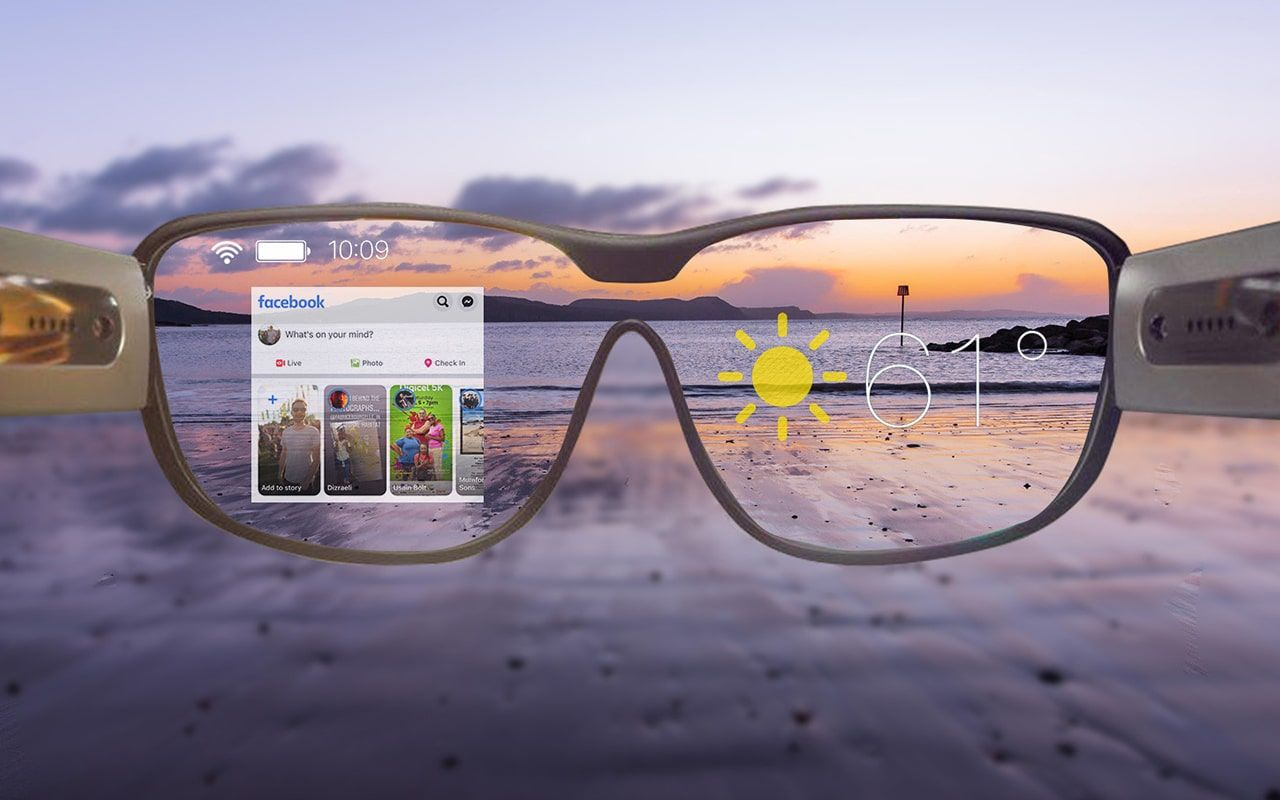

While no full UI screenshots have surfaced, the Apple AR glasses interface leak describes a system codenamed “Starboard.” Think of it as an AR version of the iPhone’s SpringBoard: a home screen of transparent, 2.5D objects floating in your field of view, borrowing its design language from visionOS.

- Transparent Displays: Unlike the opaque screens in VR headsets, Apple’s glasses are rumored to pair microOLED displays with see-through optics. Light from the real world passes through the lenses while AR content is projected on top, so you see your surroundings naturally. Combined with LiDAR sensors that map your environment in 3D, digital objects can be anchored to physical surfaces with precision.

- Heads-Up Display (HUD): As detailed in leak analyses, a HUD reflects AR content onto clear lenses. You’ll interact with floating icons and contextual menus without blocking your vision—a stark contrast to bulky headsets.

- Privacy First: Apple’s approach avoids always-on cameras for basic features, addressing a major consumer concern. This design prioritizes blending digital enhancements with real-world awareness.
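Anchoring content to a physical surface comes down to a coordinate transform: the object’s position is stored once in a fixed world frame (the one the device’s environment mapping, here the rumored LiDAR, establishes), and every frame it is re-expressed relative to the wearer’s head. The Python sketch below illustrates that idea under simplifying assumptions: head rotation is reduced to yaw only, the axes follow an ARKit-style convention (y up, camera looking along -z), and the function names `world_to_head` and `is_in_view` are hypothetical, not Apple APIs.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres

def world_to_head(anchor: Vec3, head_pos: Vec3, head_yaw_rad: float) -> Vec3:
    """Express a world-anchored point in the wearer's head frame.

    Hypothetical helper. Assumes y is up, the head looks along -z at yaw 0,
    and positive yaw turns the head to the left. Pitch and roll are ignored.
    """
    dx = anchor[0] - head_pos[0]
    dy = anchor[1] - head_pos[1]
    dz = anchor[2] - head_pos[2]
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    # Apply the inverse (transpose) of the head's rotation about the y axis.
    return (c * dx - s * dz, dy, s * dx + c * dz)

def is_in_view(point_in_head: Vec3, horizontal_fov_deg: float) -> bool:
    """True if the point is in front of the wearer and inside the horizontal FOV."""
    x, _, z = point_in_head
    if z >= 0:  # level with or behind the eyes
        return False
    angle = math.degrees(math.atan2(x, -z))
    return abs(angle) <= horizontal_fov_deg / 2
```

For example, an object pinned 2 m straight ahead stays fixed in the room: after the wearer turns 90° to the left, it sits about 2 m to their right in head coordinates and drops out of a 60° field of view.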

Deep Dive into Gesture Controls

Forget controllers. The Apple AR glasses interface leak emphasizes gesture controls as a cornerstone. Building on ARKit’s hand tracking, patents describe intuitive, controller-free interactions:

- Pinching to select virtual items.

- Swiping in the air to navigate menus.

- Pointing at overlays to activate content.

This system uses integrated sensors, likely cameras or depth sensors, to track hand position and finger movements in real time. The tracking is computationally intensive but essential for making AR feel natural. As sources note, it eliminates the need for physical input devices, a controller-free advantage over traditional VR headsets.
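A pinch can be recognized by watching the distance between the thumb tip and the index fingertip that a hand-tracking system reports each frame. The Python sketch below shows one plausible way to turn those positions into discrete events; it is not Apple’s implementation. The class name `PinchDetector` and the 1.5 cm / 2.5 cm thresholds are hypothetical, and the two thresholds (hysteresis) keep sensor jitter near a single cutoff from flickering the gesture on and off.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float, float]  # joint position in metres

class PinchDetector:
    """Turns per-frame thumb-tip and index-tip positions into pinch events.

    Hypothetical sketch: the thresholds are illustrative, not leaked values.
    """

    def __init__(self, start_m: float = 0.015, end_m: float = 0.025) -> None:
        if start_m >= end_m:
            raise ValueError("release threshold must exceed start threshold")
        self.start_m = start_m  # fingertips closer than this: pinch begins
        self.end_m = end_m      # fingertips farther than this: pinch ends
        self.pinching = False

    def update(self, thumb_tip: Point, index_tip: Point) -> Optional[str]:
        """Feed one frame of joint positions; return 'began', 'ended', or None."""
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < self.start_m:
            self.pinching = True
            return "began"
        if self.pinching and gap > self.end_m:
            self.pinching = False
            return "ended"
        # Between the two thresholds, keep whatever state we were already in.
        return None
```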

Exploring Eye Tracking as a Primary Input Method

Alongside gestures, eye tracking emerges as a game-changer. The leaks highlight it as a primary input method that enables several of the rumored visionOS features:

- Foveated Rendering: The glasses render full detail only where you’re looking and less detail in your peripheral vision, saving battery and processing power.

- Gaze-Based Selection: Look at an interface element to select it—no gesture or voice needed. The UI can shift dynamically based on your attention, popping up contextual info when you fixate on real-world objects.

- Privacy and Sensors: Eye-tracking data stays on-device; it is captured by infrared LEDs and cameras and processed locally. According to AppleInsider reports, it works alongside head-motion, compass, and geolocation sensors for precise navigation.
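Foveated rendering works by measuring how far each part of the image lies from the gaze direction (its angular eccentricity) and lowering resolution as that angle grows. The Python sketch below illustrates the calculation. The tier boundaries (5° and 15°), the scale factors, and the helper names `eccentricity_deg` and `resolution_scale` are illustrative assumptions, not leaked values; a real renderer would apply such scales per screen tile, for example through variable-rate shading.

```python
import math
from typing import Sequence

def eccentricity_deg(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Angle in degrees between the gaze ray and the ray toward a screen tile."""
    dot = sum(g * d for g, d in zip(gaze, direction))
    norm = math.hypot(*gaze) * math.hypot(*direction)
    # Clamp so rounding can't push the cosine just outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

# (maximum eccentricity in degrees, resolution scale): hypothetical tiers.
TIERS = ((5.0, 1.0), (15.0, 0.5))
PERIPHERAL_SCALE = 0.25  # everything beyond the last tier

def resolution_scale(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Full resolution near the gaze point, progressively less toward the periphery."""
    ecc = eccentricity_deg(gaze, direction)
    for limit, scale in TIERS:
        if ecc <= limit:
            return scale
    return PERIPHERAL_SCALE
```

With the gaze straight ahead, a tile 10° off-axis would be rendered at half resolution and one 30° off-axis at a quarter, which is where the battery and processing savings come from.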

VisionOS Integration and Software Foundation

The hardware is nothing without software. These glasses will reportedly run a variant of visionOS, Apple’s spatial computing OS. Its features would lean on ARKit updates for LiDAR-based environmental understanding, so basic overlays would not need a camera.

Developers will tap into enhanced ARKit for advanced apps—think face recognition using iPhone-stored data for privacy, or real-time object analysis. Rumors suggest Apple Intelligence integration, allowing voice or gaze-triggered queries about your surroundings. Imagine identifying a plant or summarizing notes instantly.

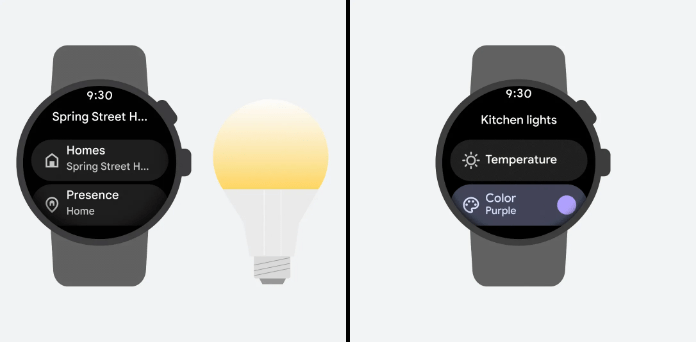

Your iPhone and Apple Watch will anchor shared experiences, creating a seamless ecosystem. Given ARKit’s mature developer community, a rich app landscape could be ready at launch, which makes the Apple AR glasses interface leak especially interesting for developers.

Bringing It All Together: The Complete User Experience

Let’s paint a picture. You put on lightweight glasses connected via Bluetooth to your iPhone. You glance at a historic building—eye tracking pulls up contextual info via visionOS. With a finger flick, gesture controls let you expand a menu. AI summarizes details on a subtle HUD, all while you see the world unobstructed.

This workflow prioritizes natural interaction, blending digital aid with daily life seamlessly.
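The workflow above combines two inputs: gaze decides what is in focus, and a gesture confirms the action. The Python sketch below models that flow as a small state machine. Everything in it is hypothetical: the `Element` and `LookAndPinch` names, the flat yaw/pitch hit-testing (a small-angle approximation), and the 0.5-second dwell before contextual info appears. The leaks do not specify whether the glasses would use a dwell timer at all.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence

@dataclass
class Element:
    """A hypothetical UI element placed at an angular position in the field of view."""
    name: str
    yaw_deg: float     # horizontal angle of the element's centre
    pitch_deg: float   # vertical angle of the element's centre
    radius_deg: float  # angular radius of its hit area

def gaze_target(elements: Sequence[Element], yaw: float, pitch: float) -> Optional[Element]:
    """Return the element whose hit area contains the gaze point (closest centre wins)."""
    best, best_dist = None, math.inf
    for e in elements:
        # Flat distance in degrees: a small-angle approximation.
        dist = math.hypot(e.yaw_deg - yaw, e.pitch_deg - pitch)
        if dist <= e.radius_deg and dist < best_dist:
            best, best_dist = e, dist
    return best

class LookAndPinch:
    """Gaze focuses an element, dwelling shows its info, and a pinch selects it."""

    def __init__(self, elements: Sequence[Element], dwell_s: float = 0.5) -> None:
        self.elements = list(elements)
        self.dwell_s = dwell_s
        self.focused: Optional[Element] = None
        self.focus_time = 0.0
        self.info_shown = False

    def update(self, yaw: float, pitch: float, pinch_began: bool, dt: float) -> List[str]:
        """Process one frame: gaze angles, whether a pinch just began, elapsed seconds."""
        events: List[str] = []
        target = gaze_target(self.elements, yaw, pitch)
        if target is not self.focused:
            # Focus moved: restart the dwell timer for the new target.
            self.focused, self.focus_time, self.info_shown = target, 0.0, False
        elif target is not None:
            self.focus_time += dt
        if target is None:
            return events
        if not self.info_shown and self.focus_time >= self.dwell_s:
            self.info_shown = True
            events.append(f"show_info:{target.name}")
        if pinch_began:
            events.append(f"select:{target.name}")
        return events
```

Keeping selection tied to a deliberate gesture, rather than to gaze alone, is one way to avoid triggering actions just by looking around.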

LiDAR anchors virtual elements to physical spaces. Need directions? AR arrows appear overlaid on the street. Checking messages? A glance suffices. As concept demonstrations suggest, the experience could be immersive yet lightweight.
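Overlaying a turn arrow on the street requires knowing which way the next waypoint lies relative to where you are facing, which is where the geolocation and compass sensors come in. The Python sketch below uses the standard great-circle initial-bearing formula and then subtracts the compass heading. The function names `initial_bearing_deg` and `arrow_turn_deg`, and the idea of a single next waypoint, are assumptions for illustration; a real navigation stack would also handle routing, sensor noise, and the offset between magnetic and true north.

```python
import math

def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    # atan2 returns (-180, 180]; shift into [0, 360).
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0

def arrow_turn_deg(bearing_deg: float, heading_deg: float) -> float:
    """Signed angle for the AR arrow, in [-180, 180).

    Positive means the waypoint is to the wearer's right, negative to the left.
    heading_deg is the direction the wearer faces, clockwise from north.
    """
    return (bearing_deg - heading_deg + 540.0) % 360.0 - 180.0
```

For instance, a waypoint due east has a bearing of 90°: a wearer facing north sees the arrow point 90° to the right, and one facing south sees it point 90° to the left.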

Competitive Context and Market Positioning

How does Apple stack up? The Apple AR glasses interface leak suggests a vertically integrated ecosystem built on iPhone LiDAR and ARKit. Compare this to Meta’s Ray-Ban glasses, which focus on AI cameras for translation, or Google’s broader prototypes.

- Apple’s Edge: Emphasis on augmenting iPhone functionality, privacy-first design, and seamless Apple integration. It’s about personal productivity, not world surveillance or the metaverse.

- Market Shift: As spatial computing gains traction, Apple positions itself as a leader in everyday AR, distinct from VR experiences.

Real-World Use Cases and Potential Applications

The practical value could be immense. Here’s where gesture controls and eye tracking shine:

- Hands-Free Productivity: Manage emails, calendars, or notes without pulling out your phone.

- Social Reminders: Facial recognition (with on-device data) could prompt names at events.

- Shopping: Visualize furniture in your home before buying, with reviews accessed via a glance.

- Education: Real-time language translation or interactive learning overlays.

- Navigation: AR turn-by-turn directions overlaid on streets, as AI wearables evolve.

Challenges and Barriers to Adoption

Despite the hype, the Apple AR glasses interface leak hints at hurdles:

- Battery Life: All-day wear requires efficient power management for continuous eye tracking and gesture processing.

- Social Acceptance: Google Glass earned its wearers the “glasshole” label. Will people embrace visible AR glasses in public?

- Thermal Management: Keeping devices cool during spatial computing tasks.

- Ecosystem Lock-In: Android incompatibility may limit appeal versus open platforms.

- Privacy Concerns: Even with on-device processing, users may worry about gaze data inferences.

Timeline and Expected Launch

When can we expect this? Based on credible leaks, the timeline is:

- Unveiling: 2026. Leaks point to late in the year, though WWDC, held each June, would be a natural venue for showcasing ARKit updates and visionOS features to developers.

- Shipping: 2027, following the reported shelving of the Vision Air headset project.

- Software: A major Siri overhaul for voice-driven features, as tipped by sources.

Note: These are unofficial timelines—await Apple’s announcement for confirmation.

Closing Vision and Call to Action

Taken together, the Apple AR glasses interface leak paints a picture of a device built around gesture controls, eye tracking, and visionOS features. Powered by ARKit updates, it aims to shift wearables toward everyday AR integration.

Apple’s ambitious push against Meta and Google signals a paradigm shift—from mobile-first to spatial AR as the primary interface. As multiple sources converge, these rumors feel substantive.

What’s next? Watch for official announcements at WWDC 2026. Developers, dive into ARKit documentation. Readers, share your thoughts: what features matter most in AR glasses?

Frequently Asked Questions

What is the Apple AR glasses interface leak?

It refers to rumors and leaked details about Apple’s upcoming augmented reality glasses, suggesting a 2026 unveiling and a visionOS-based interface with gesture controls and eye tracking, as reported by sources like YouTube analysts and AppleInsider.

How do gesture controls work on Apple AR glasses?

They use hand-tracking sensors to detect pinches, swipes, and points in the air, allowing controller-free interaction with virtual elements. This builds on ARKit technology, as detailed in leaks.

What is eye tracking and how is it used?

Eye tracking monitors where you look using infrared sensors. It enables foveated rendering for efficiency, gaze-based selection, and dynamic UI changes. Data is processed on-device for privacy, a key visionOS feature.

When are Apple AR glasses expected to launch?

Current leaks point to an unveiling in late 2026, with shipping in 2027, as per Tom’s Guide and 9to5Mac.

How do Apple AR glasses compare to competitors like Meta?

Apple focuses on iPhone integration, privacy, and productivity, while Meta emphasizes AI cameras. Apple’s approach avoids always-on cameras for basic features, as leaks indicate.

What are the privacy implications?

Apple designs for on-device processing of sensitive data like eye tracking, with no video capture for basic overlays. However, users may still have concerns about gaze data collection.

Will Apple AR glasses work with Android?

Likely not. The leaks suggest deep ecosystem lock-in with iPhone and Apple Watch, which could limit appeal compared to more open platforms.