Deconstructing Apple Intelligence: The Technical Deep Dive into Hybrid On-Device and Private Cloud Compute Architecture

Estimated reading time: 10 minutes

Key Takeaways

- Apple Intelligence is defined by its hybrid architecture, pairing specialized **on-device** models for speed with a highly secure **Private Cloud Compute (PCC)** system for scale.

- The foundation is a compact, highly optimized ~3B‑parameter language foundation model that executes locally using the Apple Silicon Neural Engine, enabling core tasks like rewriting and summarization with extremely low latency.

- PCC handles complex, resource-intensive requests (long-context reasoning), employing a **zero‑retention, attested, and auditable security model** to ensure data is never logged or stored by Apple.

- Privacy is maintained through the Semantic Index, a local vector database of personal data that facilitates personalization without ever requiring cloud data upload.

- The system uses cryptographic verification (attestation) to prove to the user’s device that the PCC server is running certified, publicly auditable software before any data transfer occurs.

Table of Contents

- Deconstructing Apple Intelligence: The Technical Deep Dive into Hybrid On-Device and Private Cloud Compute Architecture

- Key Takeaways

- The Architectural Imperative: On-Device Versus Cloud Scaling

- Detail the On-Device Foundation Models (The P-K Factor)

- Defining and Explaining Private Cloud Compute (PCC)

- Analyzing Apple’s Privacy Strategy and Decision Logic

- Deep Dive into Agentic Features: Siri and Writing Tools

- Developer Integration and SDK Best Practices

- Frequently Asked Questions

The Architectural Imperative: On-Device Versus Cloud Scaling

Apple Intelligence is not merely a collection of features; it is Apple’s unified generative AI framework, built for the iPhone, iPad, and Mac. What distinguishes it in the rapidly evolving AI landscape is its two core pillars: **personal context** and **privacy by architecture**. *This commitment to privacy is embedded in the structure of the platform*, as emphasized by the official design philosophy, which you can read about on the Apple Intelligence page.

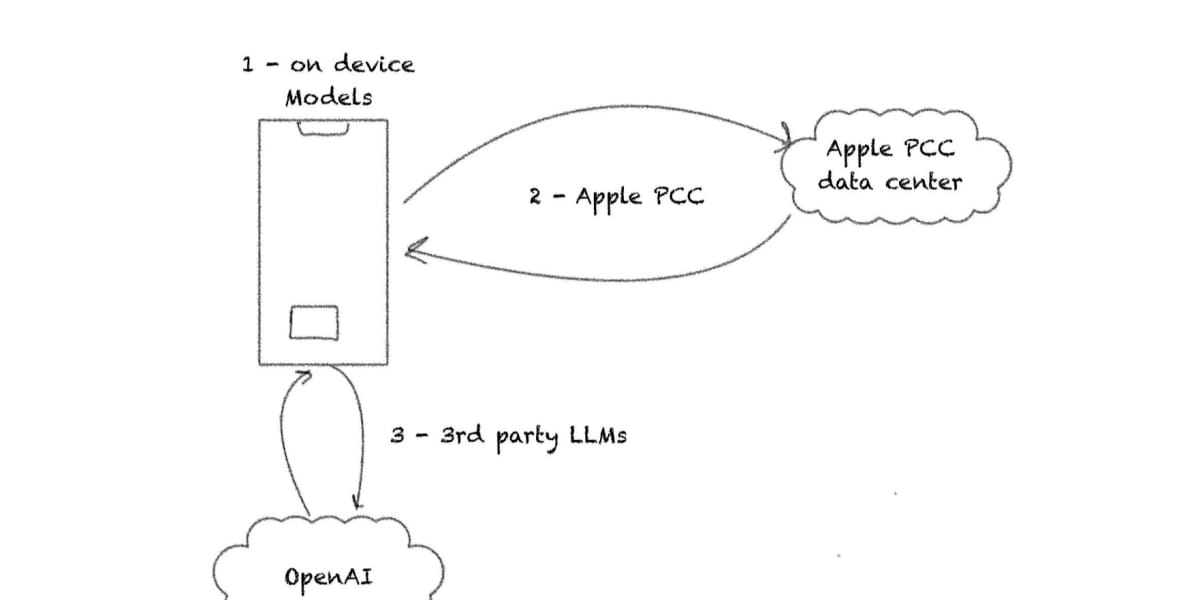

The core objective of this technical analysis is to provide a deep explanation of the hybrid architecture that makes this unique blend of power and privacy possible. Traditional AI either runs fully in the cloud (high scale, low privacy) or fully on-device (high privacy, low scale). Apple needed to deliver both.

This introduces the key dichotomy: highly optimized models running **on‑device** leveraging the Neural Engine in Apple Silicon, and much larger, more powerful models executing in the highly secured **Private Cloud Compute (PCC)** environment. The strategic segmentation ensures that requests involving sensitive personal data never leave the device, while complex reasoning tasks gain the necessary computational power. Further technical insights into this split can be found in Apple’s machine learning research, discussing the need for hybrid foundation models Source: Apple Foundation Models.

The following sections detail how the **on-device models** form the foundational layer of this scalable, secure AI stack. This foundation sets the stage for deep personalization and fast responses, part of the broader shift in how generative AI is transforming smartphones Source: Generative AI on Smartphones.

Detail the On-Device Foundation Models (The P-K Factor)

The foundation of the entire Apple Intelligence architecture is a compact, highly optimized **~3B‑parameter language foundation model**. This model is delivered directly with the operating system and runs locally on the device. Fitting a model of this size within the memory and performance limits of a mobile device is a significant engineering achievement. *Its efficacy stems from intensive optimization that balances capability against those physical constraints*.

Achieving this level of on-device performance required leveraging the full spectrum of Apple Silicon:

- **Neural Engine:** Dedicated hardware for rapid matrix multiplication and low-precision inference. This is the primary workhorse.

- **CPU & GPU Co-Optimization:** Tasks are intelligently offloaded and shared to manage thermal envelopes and power consumption, ensuring performance remains high even under sustained usage.

You can review the benchmarks and performance requirements necessary to run such models efficiently on modern hardware Source: Apple M4 Chip AI Performance.

To ensure the small model can handle diverse tasks, specialized variants are derived from the core foundation model. These variants are tailored for specific functions like rewriting, summarizing long texts, and crucial **tool selection** (the ability for the model to decide which system feature or API to call next). This specialized approach means that the model can be highly efficient for tasks that might otherwise require a much larger general-purpose LLM, as detailed in the Apple Foundation Models research.

Model Runtime Techniques: Ensuring Efficiency and Reliability

To make the ~3B model operate reliably within the strict memory and thermal budgets of a mobile device, Apple employs sophisticated model runtime techniques:

- **Quantization and Pruning:** The model weights are aggressively optimized. Quantization reduces the bit depth (e.g., from 16-bit to 4-bit) of the weights and activations, drastically reducing memory footprint while maintaining acceptable accuracy. Pruning removes redundant connections.

- **Constrained Decoding:** This technique is a game-changer for developer integration. It guarantees that model outputs conform to declared Swift data types (like specific structures or enums), eliminating the need for developers to parse raw, potentially ambiguous text. This significantly simplifies development and increases the reliability of agentic actions Source: Constrained Decoding.

- **Speculative Decoding:** Used to maintain high token throughput. A smaller, faster draft model generates candidate tokens ahead of the main model, which then verifies them in batches and keeps only the candidates it agrees with, so output quality matches the main model while responses feel real-time.
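To make the quantization point concrete, here is a minimal sketch of symmetric 4-bit quantization and the resulting memory arithmetic. It is an illustration only, not Apple's method: the function names, the per-tensor scaling scheme, and the sample weights are all assumptions chosen for clarity.

```python
def quantize_int4(weights):
    """Symmetric per-tensor quantization of floats to signed 4-bit integer codes."""
    max_abs = max(abs(w) for w in weights)
    # Signed 4-bit integers span [-8, 7]; dividing by 7 keeps the range symmetric.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in GB (10**9 bytes), ignoring scale metadata."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical weights, used only to show the round trip.
weights = [0.70, -0.33, 0.10, 0.00, -0.70]
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max round-trip error:", max_error)
print("3B params at 16-bit:", weight_memory_gb(3e9, 16), "GB")
print("3B params at 4-bit:", weight_memory_gb(3e9, 4), "GB")
```

For a model with roughly 3 billion parameters, going from 16 bits to 4 bits per weight cuts weight storage from about 6 GB to about 1.5 GB, at the cost of a small rounding error per weight. Production schemes typically use finer-grained scales and quantization-aware training to limit that error.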

The core benefits of this on-device processing are **low latency** (no network round trip is needed), **network independence**, and strong **data security**. Because personal context (your private emails, photos, messages, and notes) never leaves the device for these tasks, there is no copy in transit or on a server to protect Source: Apple Intelligence Privacy.

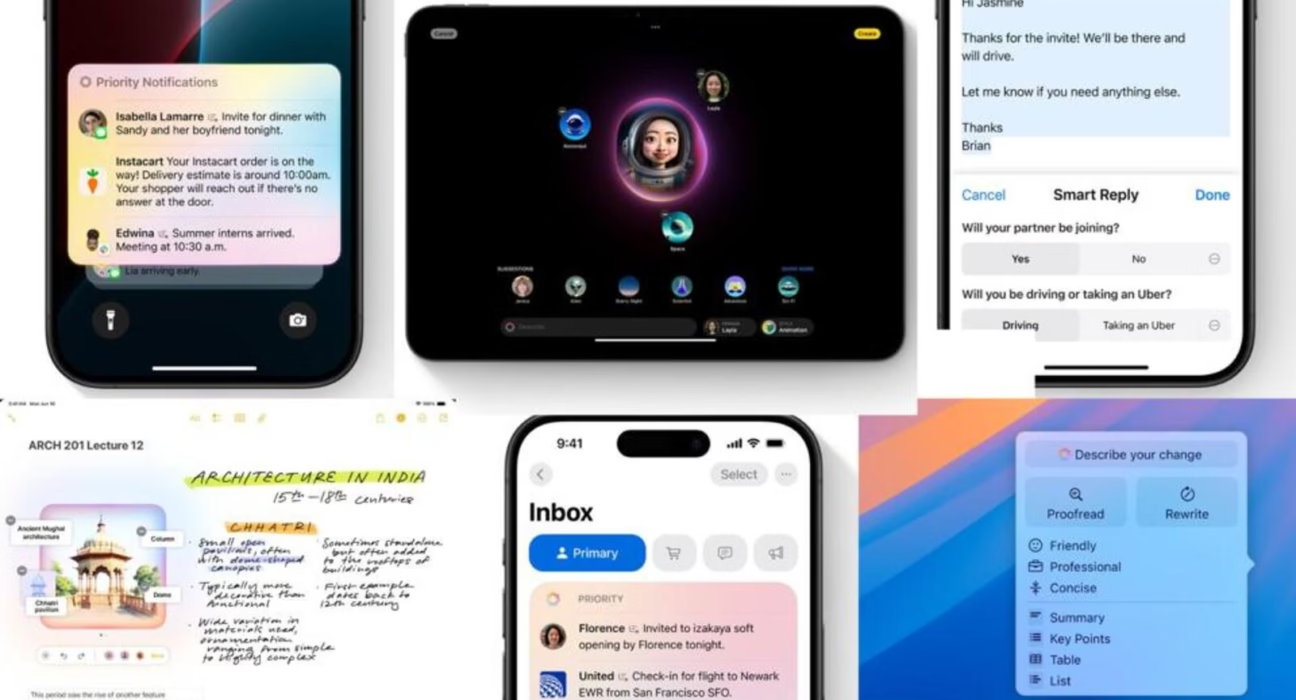

Examples of tasks handled purely on-device include real-time writing tools (rewrite, summarize, proofread) and critical system functions like notification prioritization and performing local agentic actions based on cross-app context, such as scheduling or accessing specific files Source: iPhone 16 Features.

Defining and Explaining Private Cloud Compute (PCC)

While the on-device model provides speed and privacy for everyday tasks, it cannot handle every complex request. Private Cloud Compute (PCC) is the necessary scaling solution designed for large-scale, intricate requests—for instance, long-context reasoning across hundreds of emails or generating high-fidelity images—that exceed the capacity of the **~3B on‑device model** Source: PCC Scaling.

Technical Description and Security Commitment

PCC is not a standard cloud server farm. It is specialized infrastructure built on Apple-designed server-class silicon. Crucially, each server includes a **Secure Enclave** and a hardware root of trust, which let the server prove that its operating environment is unmodified and uncompromised, and which keep user data inaccessible even to Apple Source: Secure Enclave.

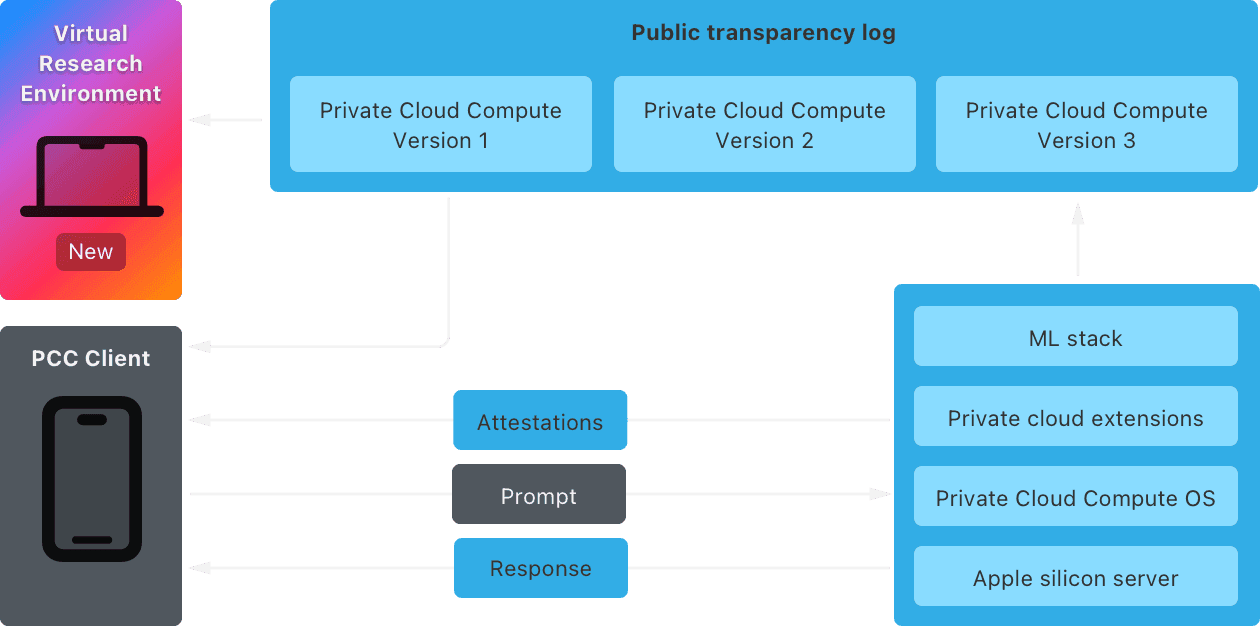

The core security commitment of PCC is defined by its **zero‑retention, attested, and auditable security model**. This commitment is powerful and unprecedented in public cloud computing:

- **Zero-Retention:** Data sent to PCC is processed in an ephemeral session and immediately destroyed. It is never logged, stored, or reused for training.

- **Attested:** The server proves to the user device that it is running known, certified software.

- **Auditable:** The software running on the PCC hardware is cryptographically signed and available for public audit by security researchers, transitioning user trust from corporate policy to verifiable cryptography.

This radical approach ensures that the processing of personal data remains private, establishing a new standard for protecting personal data online Source: Protecting Personal Data.

The Verification and Attestation Chain

Perhaps the most crucial technical innovation is the verification process. Before a user’s device sends any sensitive data to a PCC server, it cryptographically verifies the server. The device checks the server’s signed software image against a publicly auditable manifest.

In effect, the device asks the server: *“Prove you are running the exact, clean, secure operating system and model weights that Apple publicly guarantees are zero-retention.”* Only upon successful cryptographic attestation, a technical guarantee verified locally on the user’s device, is the secure, ephemeral, encrypted connection established and the request offloaded Source: Attestation Detail.
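The check-before-send idea can be sketched in a few lines. This is a deliberately simplified model, not the PCC protocol. A real deployment relies on hardware-signed measurements and signature verification rather than a self-reported hash. The image contents, function names, and manifest format below are hypothetical.

```python
import hashlib


def measure(software_image: bytes) -> str:
    """Return a SHA-256 digest standing in for a software measurement."""
    return hashlib.sha256(software_image).hexdigest()


def send_if_attested(reported_measurement, public_manifest, payload, send):
    """Release the payload only if the measurement appears in the public manifest."""
    if reported_measurement not in public_manifest:
        return False  # Unknown software: nothing leaves the device.
    send(payload)
    return True


# Hypothetical published release and a modified build that is not in the manifest.
release_image = b"pcc-os-build-1"
tampered_image = b"pcc-os-build-1-with-logging"
public_manifest = {measure(release_image)}

sent = []  # stands in for the network channel
accepted = send_if_attested(measure(release_image), public_manifest, "request", sent.append)
rejected = send_if_attested(measure(tampered_image), public_manifest, "request", sent.append)

print(accepted, rejected, sent)
```

The important property is ordering: the comparison against the public manifest happens on the device, before any payload is released.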

How Private Cloud Compute Works for Developers

For developers utilizing Apple Intelligence, the system offers a tremendous simplification: a unified API. The operating system’s decision engine automatically handles the routing decision (on-device vs. PCC). This means developers do not need to write conditional logic based on model size or server availability.

Developers trust the OS to establish secure, ephemeral, encrypted sessions when offloading is required. This abstraction allows developers to focus on the feature’s intelligence, not the underlying infrastructure or security guarantees, knowing that the most secure and capable endpoint will be chosen transparently based on request complexity and resource availability.

Analyzing Apple’s Privacy Strategy and Decision Logic

Apple Intelligence is strategically positioned to address modern concerns about large language models. The design as a whole constitutes **Apple’s privacy-preserving generative AI strategy** and serves as a strong market differentiator. By prioritizing data minimization and keeping the most sensitive data local, the hybrid architecture directly addresses key ethical and regulatory concerns, such as the data-protection requirements of GDPR, by making privacy the default technical state Source: Privacy Strategy.

The Semantic Index: The Engine of Local Personalization

Central to personalization is the **Semantic Index**. This is a local, on-device vector index created using local machine learning models. It catalogs the user’s personal data—emails, files, photos, messages, calendar events, and browsing history—but it is stored *only* on the device.

The index creates context-aware vectors (embeddings) that allow the local LLM to quickly and intelligently reason across the user’s information. For example, Siri can answer, “Where was my wife’s flight confirmation email from last Tuesday?” without ever having to upload any emails to the cloud. The combination of the compact on-device model and the Semantic Index allows for deep personalization *without* cloud upload Source: Semantic Index Detail and Source: Indexing Research.
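As a rough illustration of how a local vector index answers such a query, the sketch below ranks items by cosine similarity. The three-dimensional vectors are hand-written stand-ins for real embeddings, and the item ids and axis meanings are hypothetical. Nothing here reflects the actual Semantic Index format.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(index, query_vector, top_k=1):
    """Return the ids of the top_k items most similar to the query vector."""
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(item["vector"], query_vector),
        reverse=True,
    )
    return [item["id"] for item in ranked[:top_k]]


# Hypothetical axes: (travel, work, photos). Real embeddings come from a model.
local_index = [
    {"id": "email:flight-confirmation", "vector": [0.9, 0.1, 0.0]},
    {"id": "note:quarterly-report", "vector": [0.1, 0.9, 0.0]},
    {"id": "photo:sunset-greece", "vector": [0.3, 0.0, 0.9]},
]

query = [1.0, 0.0, 0.1]  # stands in for an embedded question about a flight email
best = search(local_index, query)
top_two = search(local_index, query, top_k=2)
print(best, top_two)
```

Both the index and the query vector live in local memory in this sketch, which is the property the article attributes to the Semantic Index: retrieval happens without any upload.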

The Crucial Routing Logic

The system’s decision engine is the gatekeeper of user privacy. It constantly evaluates four main parameters for every request:

- **Task Complexity:** Is it a simple rewrite (low complexity) or long-context reasoning (high complexity)?

- **Context Size:** How much data needs to be processed?

- **Data Sensitivity:** Does the prompt contain deeply personal data (Semantic Index access)?

- **Device Resources:** Is the Neural Engine available and sufficiently powered?

The system determines if the task should stay **on-device only** or be securely routed to PCC. The rule is simple: if the on-device model can handle the task reliably and quickly, it remains local. Only when scale is strictly necessary, and after confirming that the necessary context can be handled in a privacy-preserving manner, is the request offloaded. This design ensures that privacy is the default behavior.
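One way to picture this rule is as a small decision function over the four parameters. The sketch below is purely hypothetical: the field names, the context threshold, and the way sensitivity is weighted are assumptions made for illustration, since Apple has not published the routing logic in this form.

```python
from dataclasses import dataclass


@dataclass
class Request:
    complexity: str              # "low" or "high"
    context_tokens: int          # how much context must be processed
    touches_personal_data: bool  # e.g. needs Semantic Index results
    device_ready: bool           # e.g. Neural Engine free, not thermally limited


# Hypothetical limit; the article gives no real thresholds.
ON_DEVICE_CONTEXT_LIMIT = 4_000


def route(request: Request) -> str:
    """Prefer local execution; offload only when the device cannot serve the request."""
    if not request.device_ready:
        return "private_cloud_compute"
    if request.context_tokens > ON_DEVICE_CONTEXT_LIMIT:
        return "private_cloud_compute"
    # Assumption: complex requests that fit locally stay local when they are sensitive.
    if request.complexity == "high" and not request.touches_personal_data:
        return "private_cloud_compute"
    return "on_device"


print(route(Request("low", 500, True, True)))
print(route(Request("high", 50_000, False, True)))
print(route(Request("high", 1_000, True, True)))
```

The ordering of the checks encodes the "local by default" stance: offloading appears only as the outcome of a failed local check.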

This crucial commitment shifts trust from abstract policy statements to cryptographic and auditable technical guarantees. Users are informed transparently when a request requires cloud processing, though the session remains attested and zero-retention.

Deep Dive into Agentic Features: Siri and Writing Tools

Apple Intelligence marks a fundamental evolution of Siri, transforming it from a simple voice assistant into an integrated AI agent. This transformation is enabled by the hybrid LLM structure. The new **Siri agent features and capabilities** move beyond simple commands to complex, multi-step actions, aligning with the broader industry trend of agentic AI Source: Agentic vs Generative AI. Early reports on the planned Siri fixes highlighted the need for this LLM foundation Source: Siri Fixes Plan.

Contextual Awareness and Cross-App Actions

The agentic capabilities of the new Siri leverage the Semantic Index and local models to understand deep personal context. Siri can now perform tasks like:

- **Complex Chaining:** “Summarize the presentation slides I downloaded last week and send the summary to my colleague John in Messages.”

- **Cross-App Context:** “Find that photo of the sunset I took in Greece last summer and use Image Playground to add a fantasy overlay, then attach it to the email I am currently writing.”

The ability to string together multiple app actions based on personal context requires sophisticated on-device reasoning and tool selection, a direct benefit of the optimized local foundation model Source: Agentic Actions.
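Chaining of this kind can be pictured as a plan of tool calls in which each step consumes the previous step's result. The sketch below is a toy model under stated assumptions: the tool names, the plan format, and the stand-in summarizer are hypothetical and do not correspond to any Apple API.

```python
def summarize(text):
    """Stand-in summarizer: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def send_message(recipient, body):
    """Stand-in for a messaging action; returns a record instead of sending."""
    return {"to": recipient, "body": body}


# Hypothetical tool registry the model would select from.
TOOLS = {"summarize": summarize, "send_message": send_message}


def run_plan(plan, initial_input):
    """Run each step in order, passing the previous result as the last argument."""
    result = initial_input
    for step in plan:
        tool = TOOLS[step["tool"]]
        result = tool(*step.get("args", []), result)
    return result


# A plan the model might emit for "summarize the slides and send them to John".
plan = [
    {"tool": "summarize"},
    {"tool": "send_message", "args": ["John"]},
]
slides_text = "Q3 revenue grew 12 percent. Costs were flat. Hiring resumes in Q4."
outcome = run_plan(plan, slides_text)
print(outcome)
```

In this framing, tool selection is the step that produces `plan`; the executor itself is simple once the plan is a well-formed sequence of known tools.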

The Writing Tools Paradigm

Writing Tools (Summarize, Rewrite, Proofread) are excellent examples of the on-device processing paradigm. For most day-to-day text-centric tasks—like refining the tone of an email or summarizing a short article—the low-latency ~3B on-device model is sufficient and preferred.

Only in scenarios requiring extremely complex, long-form content generation or highly specialized linguistic reasoning might the system transparently and securely invoke PCC. This seamless routing ensures that performance is never sacrificed, while privacy remains the default state for sensitive user input Source: Writing Tool Routing.

Image Playground and Generative Tasks

Generative AI, such as Image Playground, also uses the hybrid approach. Light, playful, or simple image generations (e.g., generating a character in a specific style within Messages) are often handled locally by specialized on-device diffusion models. This provides instantaneous results. However, when a user requests a higher-fidelity, larger, or more complex image—tasks that demand significantly higher parameter counts and VRAM—the request may be handled by the specialized, large server-class models running securely in PCC Source: Image Playground.

Developer Integration and SDK Best Practices

For developers looking to integrate these capabilities, the high-level Foundation Models framework APIs are the recommended starting point. These APIs abstract away the complexities of model management, routing, and hardware utilization, providing simple entry points for text generation, classification, and reliable structured output Source: Foundation Models API.

Emphasizing Constrained Decoding

The most critical best practice for integration is fully utilizing **constrained decoding**. Developers should avoid asking the model for raw, unstructured text output that requires complex downstream parsing. Instead, define outputs as standard Swift types—`structs`, `enums`, or specific classes.

*Example:* Instead of asking, “Give me a list of tasks,” ask the model to return a `[TaskItem]` array, where `TaskItem` is a defined Swift struct. This reliability leverages the framework’s deep optimizations and significantly reduces runtime errors.
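The mechanism behind this guarantee can be shown with a toy decoder that masks every character that would lead outside a fixed set of allowed values. This is a conceptual sketch in Python, not the Foundation Models framework: `toy_scores` stands in for real model logits, `PRIORITIES` plays the role of an enum's cases, and the decoder assumes no allowed value is a prefix of another.

```python
def allowed_next_chars(prefix, allowed_values):
    """Characters that keep the output a prefix of some allowed value."""
    return {
        value[len(prefix)]
        for value in allowed_values
        if value.startswith(prefix) and len(value) > len(prefix)
    }


def constrained_decode(score_fn, allowed_values):
    """Greedy decoding in which disallowed characters are masked at every step."""
    output = ""
    while output not in allowed_values:
        candidates = allowed_next_chars(output, allowed_values)
        # Choose the best-scoring character among the permitted ones only.
        output += max(sorted(candidates), key=lambda ch: score_fn(output, ch))
    return output


def toy_scores(prefix, ch):
    """Stand-in for model logits: this 'model' would like to write 'maybe'."""
    target = "maybe"
    wanted = target[len(prefix)] if len(prefix) < len(target) else ""
    return 1.0 if ch == wanted else 0.0


PRIORITIES = ["low", "medium", "high"]  # plays the role of an enum's cases
result = constrained_decode(toy_scores, PRIORITIES)
print(result)
```

Left unconstrained, the toy model would emit "maybe", which is not a valid value. With masking, the output is always one of the declared cases, so there is nothing for the caller to parse or reject afterwards.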

Privacy and Performance Optimization Tips

Adhering to privacy best practices is non-negotiable:

- **Data Minimization in Prompts:** Only include the sensitive personal data in the prompt absolutely necessary for the immediate task. Rely on system APIs (like the Semantic Index) to provide context locally, rather than explicitly passing sensitive user files into the prompt string.

- **Transparent Communication:** If a feature relies heavily on complex reasoning that might frequently invoke PCC, ensure clear, but non-interruptive, communication to the user about when cloud processing is involved.

For maximizing performance, especially when targeting the on-device model:

- **Batching Requests:** When possible, batch small, low-latency requests together to optimize Neural Engine usage efficiency, minimizing the overhead of model loading and initialization.

- **Latency-Aware UI:** Design user interfaces that anticipate slight latency variances and provide graceful degradation or visual feedback when AI features are temporarily unavailable due to resource constraints (e.g., when the device is thermally constrained).

Final Thoughts and Outlook

Apple Intelligence represents a fundamental strategic shift in how large-scale generative AI is delivered to the consumer market. It is the sophisticated integration of compact, highly optimized **on-device models** working in concert with the provable, cryptographically secured environment of **Private Cloud Compute**. This hybrid model is not a compromise; it is a necessary evolution.

This architecture provides the scale required to run world-class foundation models (e.g., the large models in PCC) while maintaining the highest standard of user data privacy, leveraging the unique control Apple holds over its hardware and software stack. By shifting trust from corporate policy to publicly auditable, cryptographic guarantees, Apple has effectively neutralized many of the chief privacy anxieties associated with current AI systems.

This hybrid model positions Apple to evolve AI features rapidly, introducing even more capable agents and deep personalization in future iOS releases, all while retaining and reinforcing user trust Source: Future Features.

Frequently Asked Questions

- Q: What is the primary role of the Neural Engine in Apple Intelligence?

A: The Neural Engine is the dedicated hardware accelerator utilized by the **on-device foundation model** to perform rapid inference and matrix calculations. Its optimized use is critical for achieving the low-latency performance required for tasks like real-time text summarization and rewrite features without relying on the network.

- Q: How can PCC guarantee zero data retention?

A: PCC guarantees zero data retention through two mechanisms: the physical isolation provided by the Secure Enclave within the server-class silicon, and the cryptographic attestation chain. The user’s device verifies that the server software is running a publicly audited, zero-logging image before sending data. Once the processing session is complete, the data environment is wiped.

- Q: Does the Semantic Index ever get uploaded to the cloud?

A: No. The Semantic Index is an on-device vector database of personal context (emails, photos, files) created by local models. It is designed to remain exclusively on the user’s device. It enables personalization locally, preventing the need to upload sensitive information for cloud-based reasoning.

- Q: Is constrained decoding mandatory for developers using the SDK?

A: While not strictly mandatory, using constrained decoding is highly recommended as a best practice. It ensures the model output is reliably formatted as a predefined Swift data type (struct, enum), making development more robust, leveraging system optimizations, and avoiding potential parsing errors associated with raw text generation.