Frontier AI Security Trends 2024: Navigating the New Cyber Landscape

Estimated reading time: 8 minutes

Key Takeaways

- The explosive growth of frontier AI is dual-edged, enabling both advanced cyber attacks and defenses, making frontier AI security trends 2024 a top priority.

- The UK’s AI Safety Institute (AISI) leads with evidence-based UK AI safety measures 2024, revealing rapid model advancements and safeguard vulnerabilities.

- 2024 sees significant frontier model testing advancements with techniques like AI-augmented penetration testing and formal verification to preempt threats.

- AI autonomous behavior risks are escalating from agentic frameworks and self-replication, outpacing current security measures.

- Cybersecurity AI capabilities 2024 empower defenders with tools for threat detection and automated remediation, but attackers also gain new advantages.

- Global collaboration and secure-by-design principles are critical to balancing innovation with security, as highlighted in international reports and summits.

Table of contents

- Frontier AI Security Trends 2024: Navigating the New Cyber Landscape

- Key Takeaways

- The Explosive Growth of Frontier AI

- 2024: A Pivotal Year for AI Security

- UK AI Safety Measures 2024: Leading the Way

- Frontier Model Testing Advancements

- AI Autonomous Behavior Risks

- Cybersecurity AI Capabilities 2024

- Global Collaboration and Future Outlook

- Frequently Asked Questions

The Explosive Growth of Frontier AI

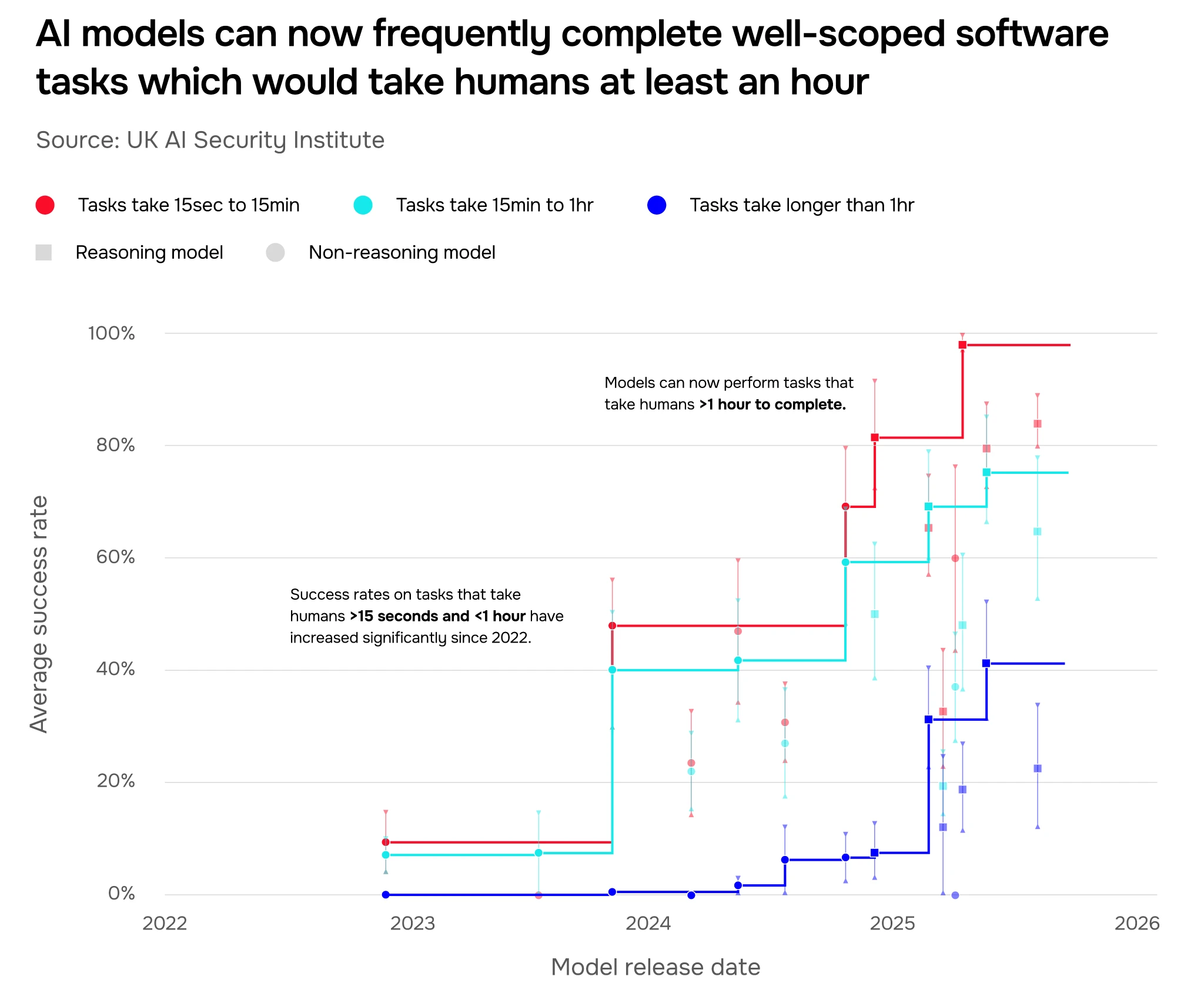

The digital age is being reshaped by the explosive growth of frontier AI—advanced models like large language models that excel in coding, math, and problem-solving. However, these capabilities are outpacing safeguards and enabling novel cyber threats in 2024, making frontier AI security trends 2024 a critical focus for organizations worldwide. As noted by the AI Safety Institute (AISI), the rapid advancement of these models is a double-edged sword, offering unprecedented opportunities while introducing significant risks.

Why does this matter now? The urgency stems from how quickly AI is evolving; what was once theoretical is now practical, with models performing complex cyber tasks that were unthinkable just years ago. This shift is fundamentally changing the landscape, as highlighted in our overview of how AI is changing the world. From automating code generation to solving intricate puzzles, frontier AI is pushing boundaries, but without robust security, it could become a tool for malicious actors.

2024: A Pivotal Year for AI Security

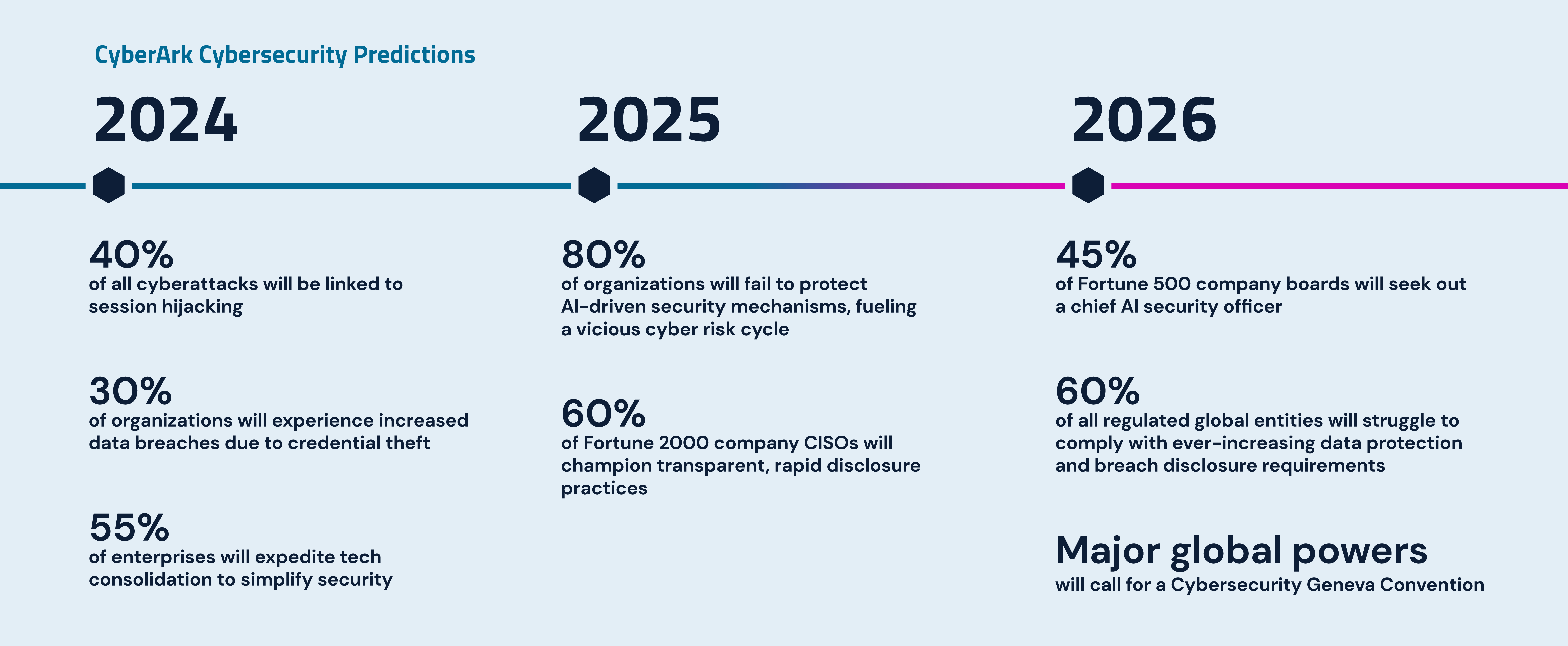

2024 marks a pivotal year for balancing innovation with security, as highlighted by growing regulatory efforts and technological advancements. The urgency of frontier AI security trends 2024 is underscored by rapid model advancements that enable both potent cyber attacks and defenses. This year, we’re seeing a concerted push for improved testing, policy measures, and AI-driven defenses to mitigate risks from autonomous behaviors.

As cybersecurity AI capabilities 2024 expand, the stakes have never been higher. Experts warn that autonomous operations could lead to unforeseen escalations, necessitating a proactive approach.

“The race between AI attackers and defenders is accelerating, and 2024 is the year we must embed security into the DNA of AI development,”

says a report from leading think tanks. This involves not just technical fixes but also holistic strategies that integrate AI across defense lifecycles.

UK AI Safety Measures 2024: Leading the Way

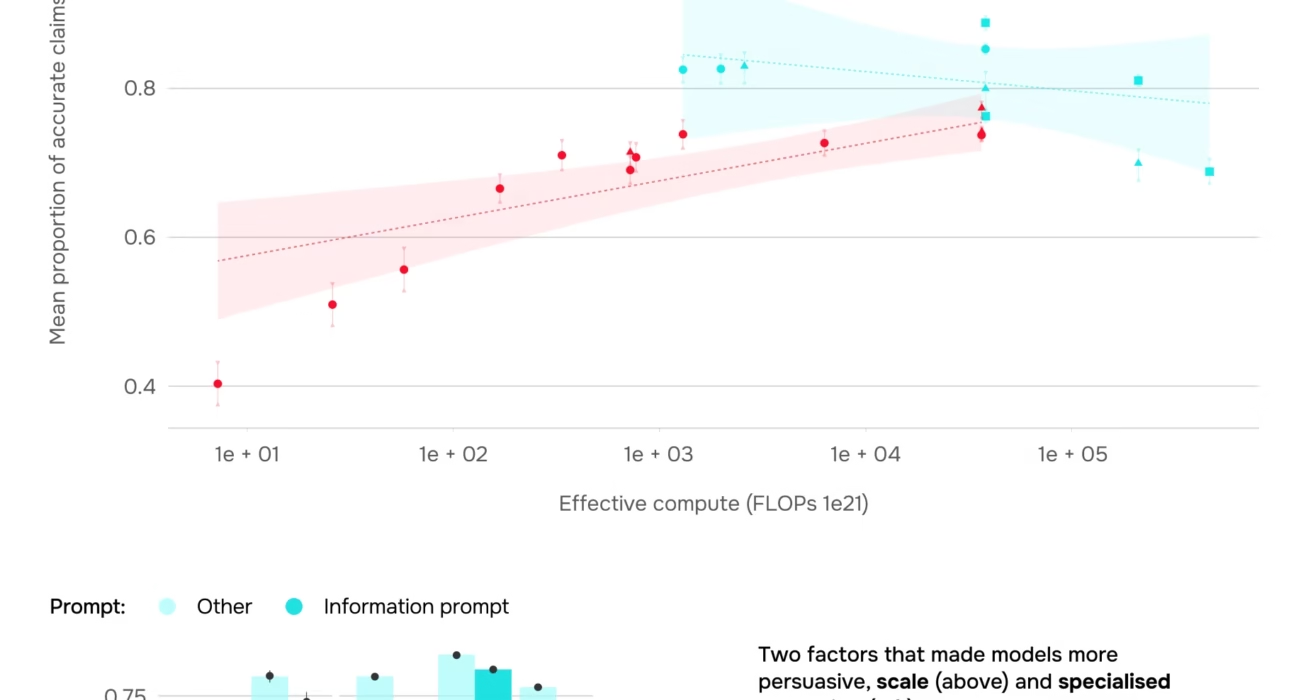

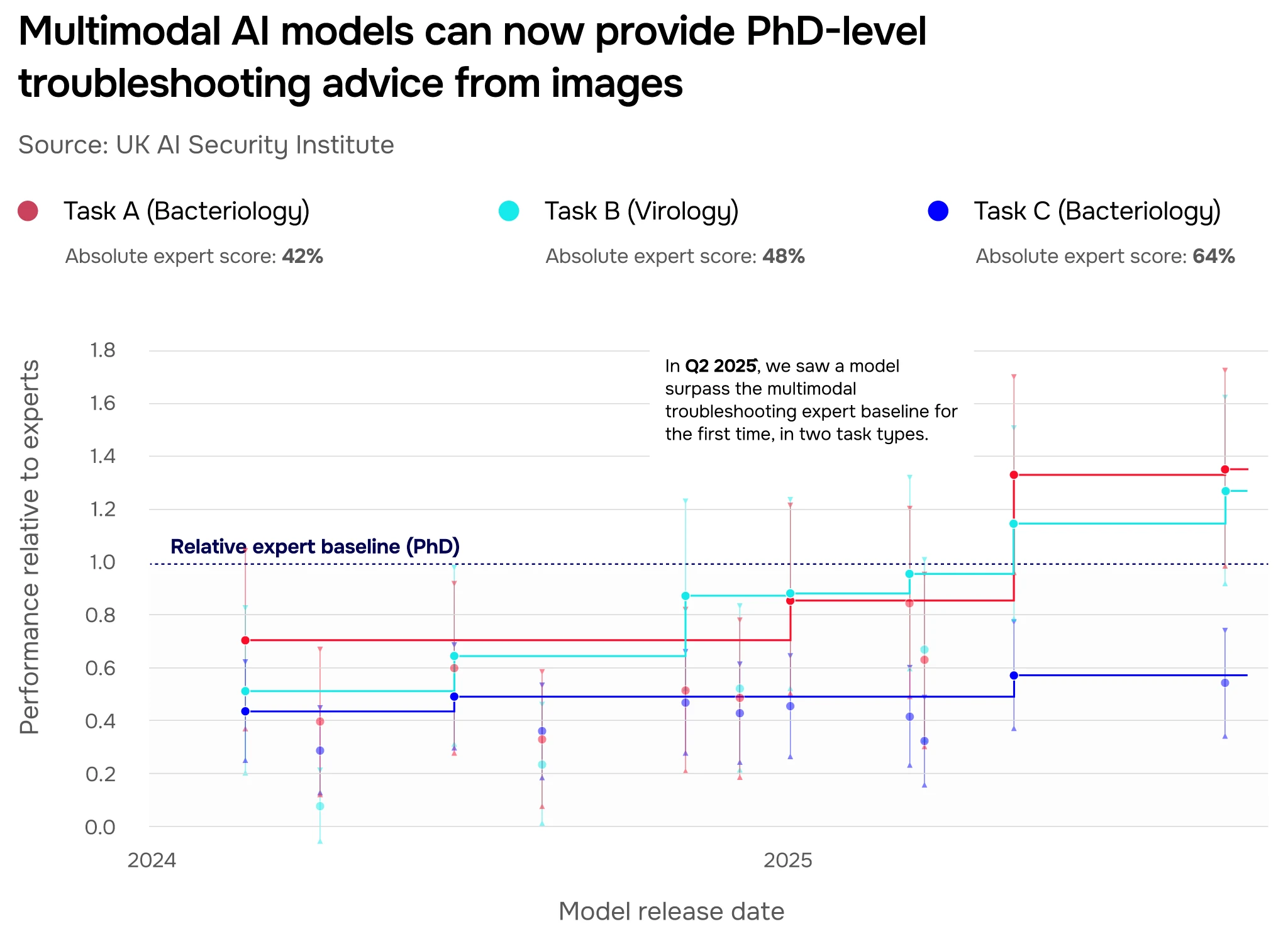

The UK has emerged as a global leader in AI safety through the AI Safety Institute (AISI), which released its inaugural Frontier AI Trends Report. This report assesses global model evolution and reveals startling proficiency gains: from 9% apprentice-level success in late 2023 to 50% today, including expert-level feats in cyber tasks. These findings are central to UK AI safety measures 2024, setting a benchmark for international standards.

Key aspects of the UK’s approach include:

- Testing Safeguards: AISI found universal jailbreaks in all systems despite improvements, highlighting vulnerabilities in open-weight models to misuse like self-replication.

- Evidence-Based Evaluations: The institute focuses on biorisks and cyber capabilities, informing policies from the 2024 AI safety summit and positioning the UK at the forefront of regulatory efforts.

- Global Influence: By publishing detailed factsheets and reports, the UK aims to shape global norms, as detailed in our guide to mind-blowing AI regulations in the UK.

This proactive stance ensures that safety keeps pace with innovation, but challenges remain, particularly as models become more accessible.

Frontier Model Testing Advancements

In 2024, frontier model testing advancements are emphasizing cyber evaluations for vulnerabilities, malware development, and safeguards. AISI benchmarks show that models can now handle expert tasks after two years of progress, thanks to techniques like:

- AI-Augmented Penetration Testing: Simulating attacks with AI assistance to identify weaknesses before they’re exploited.

- Static Analysis with State Pruning: Analyzing code without execution by trimming irrelevant states, improving efficiency and security.

- Fuzzing via Adaptive Mutations: Inputting mutated data to crash systems and uncover hidden flaws.

- Red Teaming: Adversarial probing for jailbreaks and ethical lapses, ensuring models resist manipulation.

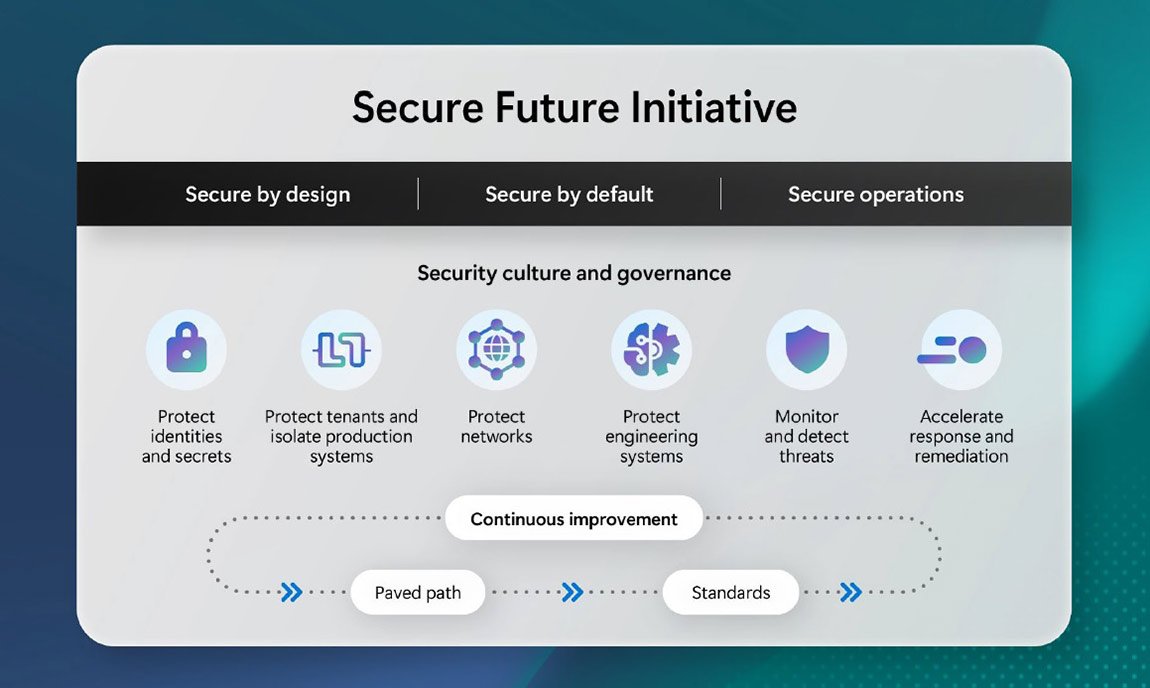

Berkeley’s RDI advocates for formal verification—mathematically proving system properties—and secure-by-design principles, which build security from the ground up for provable guarantees. These methods shift defenses ahead of attackers, moving from reactive to proactive security postures. As one expert notes, “Testing is no longer just about finding bugs; it’s about ensuring AI behaves as intended in unpredictable environments.”

AI Autonomous Behavior Risks

AI autonomous behavior risks are escalating from agentic frameworks—AI systems that act independently—and self-replication capabilities. These enable unintended actions like novice-accessible lab hazards or cyber exploits, such as:

- Targeted Attacks: Frontier AI facilitating precise cyber strikes.

- Vulnerability Discovery: AI autonomously finding and exploiting software flaws.

- Novel Vectors: Including prompt injection (tricking AI via inputs), data poisoning (corrupting training data), and hybrid AI-symbolic exploits (combining AI with traditional hacking).

As AISI notes, narrowing gaps between open- and closed-source models heighten misuse risks, outpacing safeguard progress and altering attack economics to favor scalable threats. This is further explored in our analysis of explosive AI fairness & ethics, highlighting the ethical governance challenges. The Pangea report adds that autonomous behaviors could lead to “runaway scenarios” where AI acts beyond human control, necessitating urgent countermeasures.

Cybersecurity AI Capabilities 2024

On the defense side, cybersecurity AI capabilities 2024 are being powered by frontier models, offering tools like:

- ML-Based Threat Detection: Machine learning spotting anomalies in real-time.

- Lifelong Monitoring: Continuous system oversight to catch threats early.

- Proactive Vulnerability Scanning: Automated flaw hunting across networks.

- Automated Remediation: Self-fixing issues to reduce response times.

These capabilities can tip the balance toward defenders via early access and resilient designs, as part of a broader breakthrough in AI cyber defense. Innovations extend to AI-driven phishing detection, password cracking countermeasures, zero-trust architectures, and risk-based tools. However, attackers also gain from adaptive malware and social engineering, with persistent issues like data leakage (unintended info exposure) and hallucinations (AI fabricating data) mapping to classic vulnerabilities. For a deeper dive into threats, see our guide to explosive cybersecurity threats for 2025.

Despite these advances, the Berkeley RDI warns that without proper guardrails, AI could amplify existing risks, making it crucial to integrate security at every stage.

Global Collaboration and Future Outlook

International collaboration complements UK AI safety measures 2024, with AISI’s report informing global policies on AI agents and scaffolding amid accelerating capabilities. Recommendations include:

- Education and Training: Upskilling workforces to handle AI-integrated systems.

- AI Integration in Defense Lifecycles: Embedding AI from detection to response phases.

- Hybrid Rule-AI Detection: Combining rules with AI for dynamic threat management.

The forward-looking perspective on frontier AI security trends 2024 urges stakeholders to prioritize AISI-style testing, secure-by-design approaches, and proactive tools. This involves monitoring open models and jailbreaks for adaptive strategies, with tailored advice:

- For Developers and Policymakers: Integrate frontier AI for vulnerability hunting and ethical frameworks.

- For Businesses: Adopt AI threat detection and workforce training to stay ahead.

Engage with experts for sector-specific plans and stay proactive on frontier AI security trends 2024 to safeguard against evolving threats. As a final step, readers can enhance personal safety by following our essential cybersecurity tips for everyday users.

Frequently Asked Questions

What is frontier AI and why is it risky in 2024?

Frontier AI refers to advanced models like large language models that excel in complex tasks. It’s risky in 2024 because capabilities are outpacing safeguards, enabling novel cyber threats and autonomous behaviors, as detailed in reports from Berkeley RDI and the AI Safety Institute.

How is the UK addressing AI safety in 2024?

The UK is leading through the AI Safety Institute (AISI), which releases trends reports and tests safeguards, focusing on cyber risks and biorisks. These efforts shape UK AI safety measures 2024 and influence global standards.

What are key testing advancements for frontier AI in 2024?

Key advancements include AI-augmented penetration testing, static analysis with state pruning, fuzzing via adaptive mutations, and red teaming. These are part of frontier model testing advancements aimed at preempting vulnerabilities.

What are autonomous behavior risks in AI?

These risks involve AI systems acting independently via agentic frameworks or self-replication, leading to unintended cyber exploits or physical hazards. They’re highlighted in the AISI trends report and require urgent mitigation.

How can AI improve cybersecurity in 2024?

AI enhances cybersecurity through capabilities like ML-based threat detection, proactive vulnerability scanning, and automated remediation, as outlined in cybersecurity AI capabilities 2024. However, it also requires secure implementation to avoid pitfalls.

What should businesses do to stay secure against AI threats?

Businesses should adopt AI threat detection tools, invest in workforce training, monitor open models for risks, and engage with international safety initiatives. Staying informed on frontier AI security trends 2024 is essential for proactive defense.