Meta AI Content Moderation Platform: The Engine Behind Safer Online Spaces

Key Takeaways

- Meta’s AI content moderation platform handles billions of pieces of content daily, which makes safety enforcement possible at a scale human review alone cannot reach.

- AI moderation shifts safety work from reactive to proactive: real-time filtering and automated safety tools catch violations before they spread.

- Meta’s technology stack includes natural language processing (NLP), computer vision, and tools like Hasher-Matcher-Actioner (HMA), all aimed at consistent enforcement of community guidelines across languages and regions.

- Benefits include operational scalability, reduced psychological harm for moderators, and a safer experience for users, which fosters trust and engagement.

- Limitations like context blindness and regional gaps necessitate human oversight, transparency, and continuous model training for effective implementation.

Table of contents

- Meta AI Content Moderation Platform: The Engine Behind Safer Online Spaces

- Key Takeaways

- Opening Section: The Urgency of Content Moderation

- Section 1: Understanding AI Content Moderation Platforms

- Section 2: The Shift from Reactive to Proactive Safety

- Section 3: Technical Deep Dive – Meta’s AI Moderation Technology Stack

- Section 4: Measurable Benefits Across Three Stakeholder Groups

- Section 5: Critical Limitations and Implementation Challenges

- Section 6: Essential Implementation Practices and Best Practices

- Closing Section: The Future of AI-Powered Moderation

- Frequently Asked Questions

Opening Section: The Urgency of Content Moderation

Every minute, social media platforms face a deluge of user-generated content: millions of posts, comments, images, and videos. With over 3 billion active users across Meta’s apps, human moderators cannot possibly review everything on their own. This problem of scale is why the Meta AI content moderation platform is a necessity rather than a luxury. AI-driven systems like it are the backbone of modern online safety, blending automation with human insight to protect users. In this deep dive, we’ll unravel how Meta’s platform operates, its benefits, its inherent challenges, and the best practices that make it work.

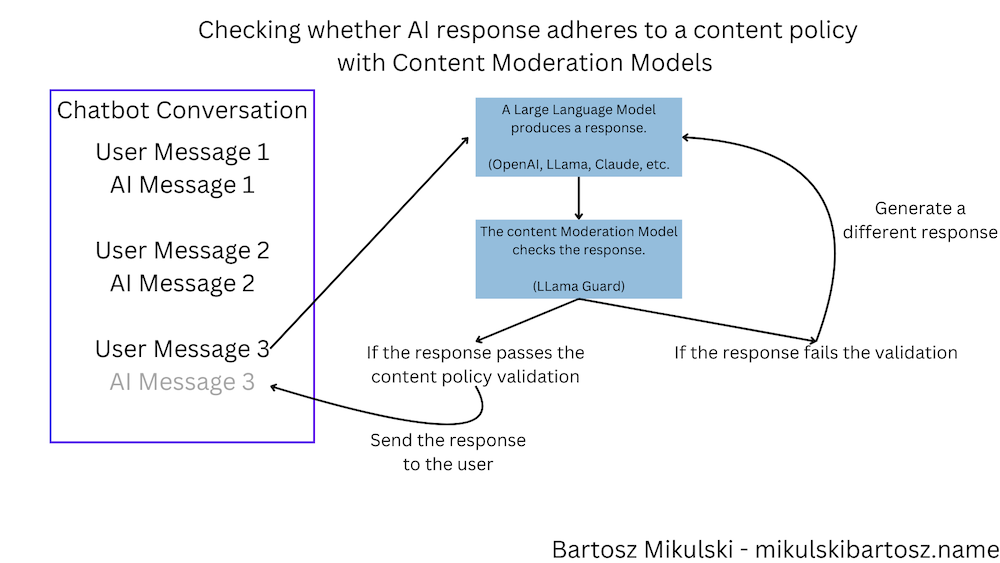

Section 1: Understanding AI Content Moderation Platforms – Core Functions and Architecture

An AI content moderation platform is an ecosystem of cooperating systems, not a single tool. It scans, analyzes, and categorizes content to check compliance with community guidelines. The Meta AI content moderation platform exemplifies this with a three-step process: detection, analysis, and action. First, it scans content in real time; second, it uses algorithms to assess factors like violation probability, severity, and virality; third, it flags or removes content based on policy. This integrated approach enables uniform enforcement of community guidelines at scale across languages and media types. As the Oversight Board notes, this architecture is key to managing the “tsunami of content” modern platforms face.
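
The three steps can be sketched in a few lines of Python. This is a minimal illustration, not Meta’s implementation: the `Assessment` fields mirror the three factors named above, while the multiplicative `priority` formula, the `act` function, and both thresholds are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """Output of the analysis step for one piece of content (hypothetical)."""
    violation_probability: float  # 0.0-1.0, e.g. from a classifier
    severity: float               # 0.0-1.0, how harmful a violation would be
    virality: float               # 0.0-1.0, predicted reach


def priority(a: Assessment) -> float:
    """Combine the three factors into one score; the product is an assumption."""
    return a.violation_probability * a.severity * a.virality


def act(a: Assessment, remove_at: float = 0.9, flag_at: float = 0.5) -> str:
    """Action step: remove near-certain violations, flag likely ones."""
    if a.violation_probability >= remove_at:
        return "remove"
    if a.violation_probability >= flag_at:
        return "flag"
    return "allow"


if __name__ == "__main__":
    queue = [
        Assessment(0.60, 0.9, 0.8),  # likely violation, severe, spreading fast
        Assessment(0.55, 0.2, 0.1),  # likely violation, mild, little reach
    ]
    # Flagged items are handled in order of priority, highest first.
    for item in sorted(queue, key=priority, reverse=True):
        print(act(item), round(priority(item), 3))
```

Ranking flagged items by a combined score is one way to make sure severe, fast-spreading content is looked at before mild content with little reach.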

Section 2: The Shift from Reactive to Proactive Safety – How Real-Time Filtering Works

Gone are the days of waiting for user reports. Today, automated safety tools enable proactive moderation by continuously monitoring for harmful patterns. Real-time filtering identifies violations like hate speech, graphic violence, spam, and misinformation as they emerge. Consider live-streamed incidents or coordinated disinformation campaigns, where speed is critical. By catching content before it goes viral, platforms limit psychological harm to users and reduce their own legal risk. As Meta’s research shows, this shift reduces the “visibility time” of harmful material from hours to seconds.
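
To make the reactive/proactive difference concrete, here is a small sketch in which every post is scored as it arrives, before anyone can see it, rather than after a user report. The `toy_score` function is a hypothetical stand-in for a trained classifier, and the 0.8 threshold is an arbitrary assumption.

```python
from typing import Callable, Iterable, Iterator, Tuple


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a classifier: 1.0 for a known spam phrase."""
    return 1.0 if "buy followers now" in text.lower() else 0.0


def screen(
    posts: Iterable[str],
    score: Callable[[str], float],
    threshold: float = 0.8,
) -> Iterator[Tuple[str, bool]]:
    """Yield (post, visible) for each post as it arrives in the stream."""
    for post in posts:
        # The decision is made at submission time, so a held-back post
        # never accumulates any "visibility time".
        yield post, score(post) < threshold


if __name__ == "__main__":
    for post, visible in screen(["Happy birthday!", "BUY FOLLOWERS NOW!!!"], toy_score):
        print("published" if visible else "held back", "|", post)
```

Because `screen` is a generator, it works on an unbounded stream and never needs the full batch of posts in memory.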

Section 3: Technical Deep Dive – Meta’s AI Moderation Technology Stack

The Meta AI content moderation platform relies on a multi-layered tech stack to power its capabilities. Here’s a breakdown:

- Natural Language Processing (NLP): This analyzes text for policy violations, understanding nuance and context, as detailed in Meta’s blog. It helps distinguish between harmful speech and benign conversations.

- Computer Vision and Image Recognition: It scans images and videos for graphic content, nudity, or violent imagery at scale, also covered in the same source.

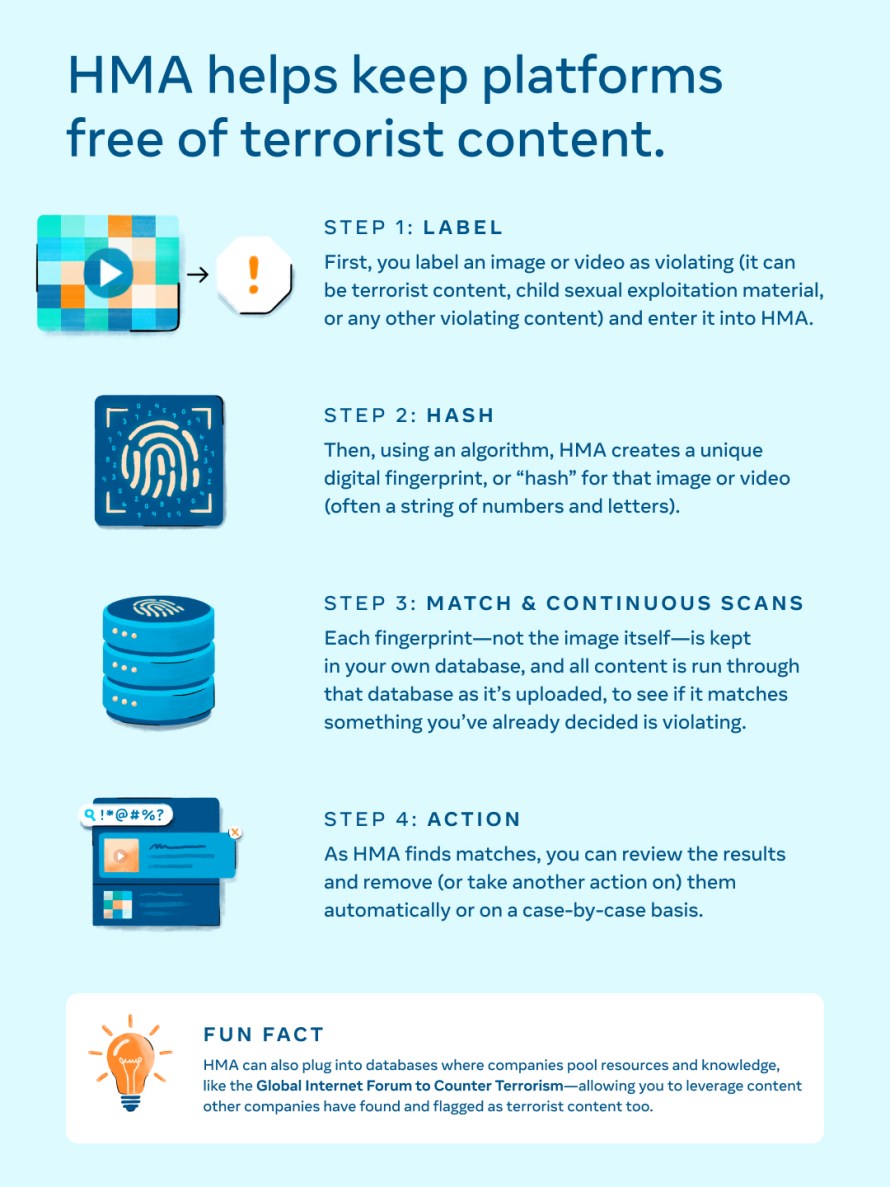

- Media Matching Service Banks: These databases store hashes of previously reviewed content, flagging duplicates to speed up enforcement, as explained by the Oversight Board. They enable learning from past decisions.

- Hasher-Matcher-Actioner (HMA): An open-source tool launched by Meta that identifies and removes copies of violating media across networks, making real-time filtering more efficient.

Together, these technologies form a robust system for enforcing community guidelines with both speed and accuracy.
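
The hash-bank idea behind the last two items can be illustrated with a toy hash, match, action loop. This is a sketch under stated assumptions, not HMA’s actual API: `MediaBank`, `fingerprint`, and `action` are hypothetical names, and SHA-256 is used only because it ships with Python. A cryptographic hash matches byte-identical copies only; production systems typically rely on perceptual hashes so that resized or re-encoded copies still match.

```python
import hashlib


def fingerprint(media: bytes) -> str:
    """Hash step: reduce a media file to a fixed-size fingerprint."""
    return hashlib.sha256(media).hexdigest()


class MediaBank:
    """Stores fingerprints of previously reviewed media with the decision made."""

    def __init__(self):
        self._decisions = {}

    def add(self, media: bytes, decision: str) -> None:
        self._decisions[fingerprint(media)] = decision

    def match(self, media: bytes):
        """Match step: return the earlier decision, or None if unseen."""
        return self._decisions.get(fingerprint(media))


def action(media: bytes, bank: MediaBank) -> str:
    """Action step: reuse a past decision, else hand off for fresh analysis."""
    decision = bank.match(media)
    return decision if decision is not None else "send_to_classifier"


if __name__ == "__main__":
    bank = MediaBank()
    bank.add(b"bytes of a previously removed image", "remove")
    print(action(b"bytes of a previously removed image", bank))
    print(action(b"bytes of a new image", bank))
```

Only fingerprints are stored, so the bank can recognize a repeat upload without keeping the violating media itself.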

Section 4: Measurable Benefits Across Three Stakeholder Groups

The Meta AI content moderation platform delivers concrete value to different audiences, creating a ripple effect of safety and efficiency.

For Platform Operations

Scalability: AI can process billions of content pieces daily, supporting platform growth without proportional increases in human moderators, as Meta notes.

Consistency: Automated systems apply rules uniformly, reducing arbitrary enforcement and bias, per the Oversight Board.

Speed: Real-time detection limits the spread of harmful content, so platforms become safer almost as soon as a violation appears.

For Human Content Moderators

Reduced Psychological Harm: By handling routine violations, automated safety tools shield moderators from constant exposure to traumatic content, improving their well-being.

Enhanced Decision-Making: AI flags complex cases for human review, allowing moderators to focus on nuanced judgments, as Meta’s blog highlights.

For Online Communities

Safer Environments: Rapid removal of hate speech and violent content cultivates respectful interactions and a safer space for users.

Improved User Experience: Fewer harmful encounters boost engagement and trust, making platforms more enjoyable and reliable, per Meta’s insights.

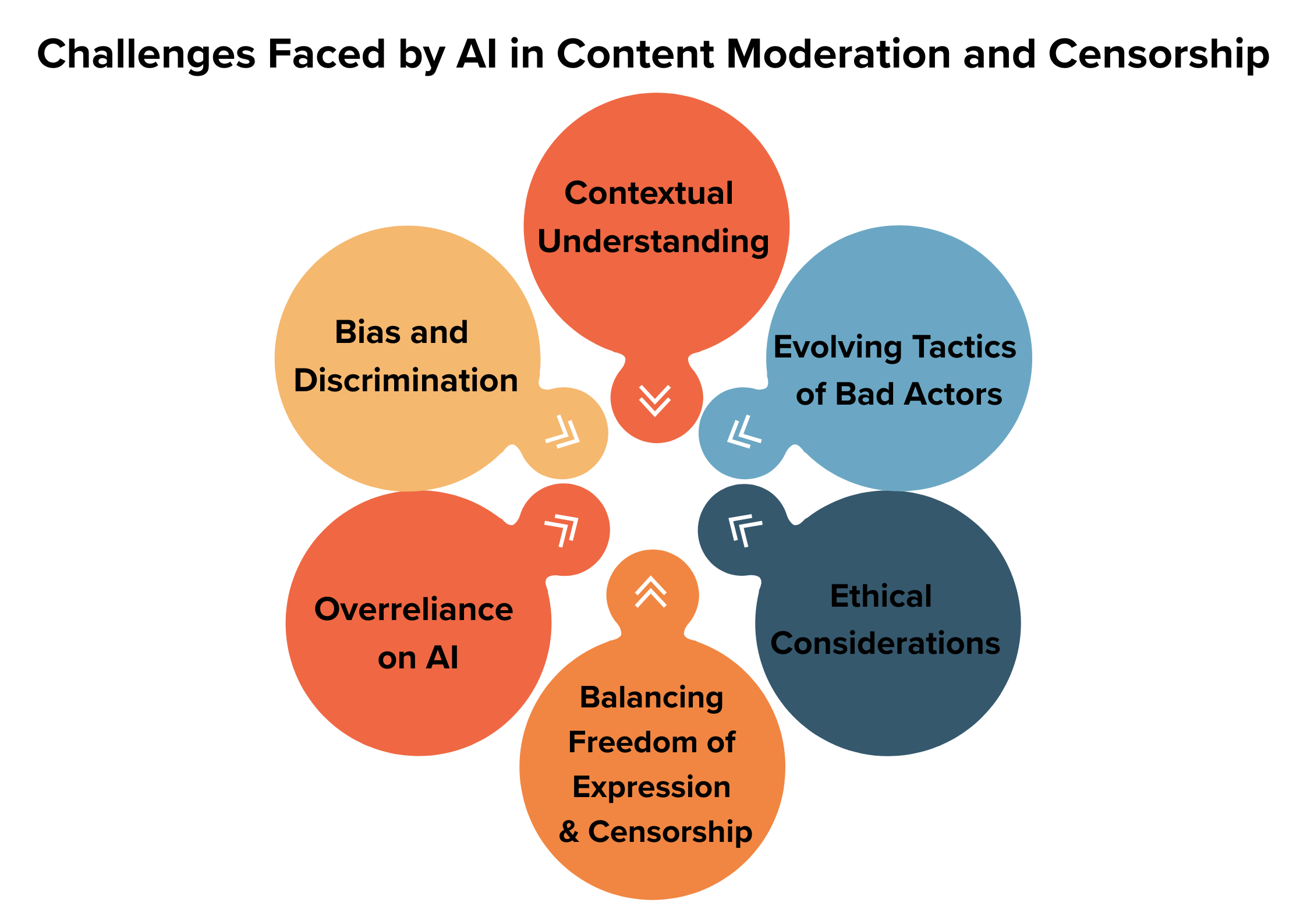

Section 5: Critical Limitations and Implementation Challenges – Honest Assessment of AI Moderation

Despite these advances, automated safety tools and real-time filtering are not flawless. Key limitations include:

- Context Blindness: AI often misses satire, coded language, or cultural references, leading to false positives or negatives, as Meta acknowledges. For example, a post discussing breast cancer awareness might be incorrectly flagged.

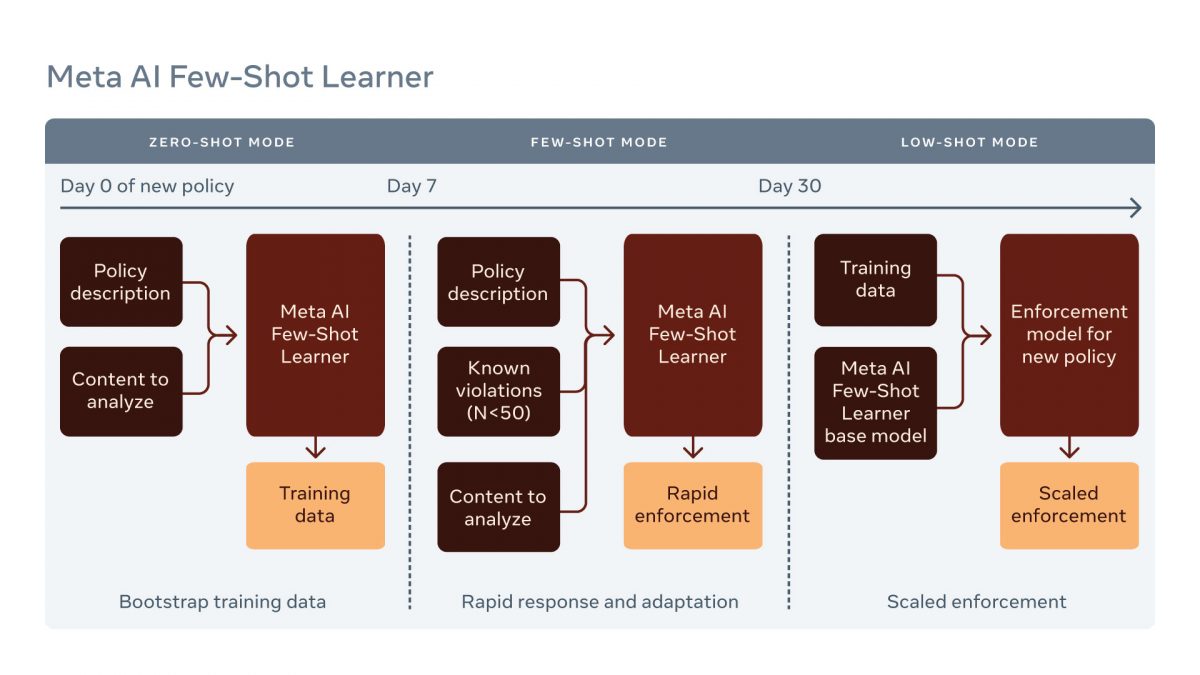

- Emerging Threats: New forms of harmful content, like deepfakes or evolving slang, challenge detection systems, requiring continuous updates, per the same source.

- Regional and Linguistic Gaps: AI resources are skewed toward English and Western markets, affecting equitable community guideline enforcement globally, as the Oversight Board reports. This can leave non-English speakers vulnerable.
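
The context-blindness problem is easy to reproduce with a toy keyword filter. The sketch below is purely illustrative: the term lists and both functions are hypothetical, and real classifiers are far more sophisticated, but the failure mode is the same in kind.

```python
import re

# Hypothetical term lists, chosen only to reproduce the example in the text.
FLAGGED_TERMS = {"breast"}
HEALTH_CONTEXT = {"cancer", "screening", "awareness", "mammogram"}


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def naive_flag(text: str) -> bool:
    """Flags on keywords alone, with no notion of context."""
    return bool(tokens(text) & FLAGGED_TERMS)


def context_aware_flag(text: str) -> bool:
    """Same keywords, but suppressed when health-related words are present."""
    words = tokens(text)
    return bool(words & FLAGGED_TERMS) and not (words & HEALTH_CONTEXT)


if __name__ == "__main__":
    post = "October is breast cancer awareness month"
    print(naive_flag(post))          # the keyword filter flags the post
    print(context_aware_flag(post))  # the context rule lets it through
```

The context rule removes this false positive, but a fixed allowlist can be gamed by adding a health word to violating content, which is one reason ambiguous cases still need human review.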

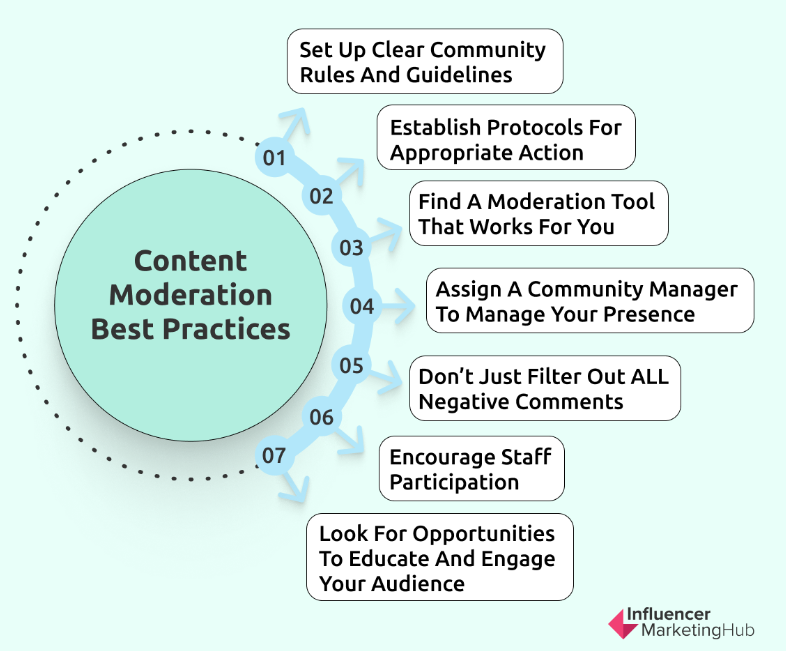

Section 6: Essential Implementation Practices and Best Practices

To get the most out of an AI moderation system like Meta’s, platforms must pair it with complementary practices.

Human Oversight as Non-Negotiable

AI cannot replace human judgment for ambiguous cases. A case study from the Oversight Board shows how Meta’s systems mistakenly removed breast cancer awareness posts. After the Board’s recommendations, Meta improved its contextual analysis, resulting in 2,500 additional pieces of content being correctly sent for human review in a month. The case shows how AI and human moderators work best together.
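
One common way to combine automation with human judgment is to act automatically only when the model is confident and send everything in between to a reviewer. The sketch below assumes a single violation score per item; the `route` function and both thresholds are hypothetical and are not taken from Meta’s systems.

```python
def route(score: float, low: float = 0.3, high: float = 0.9) -> str:
    """Route one item based on the model's violation score (0.0-1.0)."""
    if score >= high:
        return "auto_remove"   # confident violation
    if score >= low:
        return "human_review"  # ambiguous: let a person decide
    return "auto_allow"        # confident non-violation


def review_load(scores, low: float, high: float) -> int:
    """Count how many items a given band sends to human reviewers."""
    return sum(route(s, low, high) == "human_review" for s in scores)


if __name__ == "__main__":
    scores = [0.05, 0.4, 0.55, 0.7, 0.97]
    print(review_load(scores, low=0.5, high=0.6))  # narrow band
    print(review_load(scores, low=0.3, high=0.9))  # wider band
```

Widening the band trades moderator workload for fewer automated mistakes: more borderline items reach a human instead of being removed or allowed outright.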

Transparency with Users

Building trust requires clear communication. Meta now notifies users when automated systems flag their content and lets them edit the post or appeal, per the Oversight Board. This respects user agency while maintaining safety.

Continuous Model Training and Calibration

Harmful content evolves, so automated safety tools need ongoing refinement. During the Israel-Gaza conflict, aggressive automation removed legitimate content, a failure documented by the Oversight Board that highlights the need for calibration. Regular updates help models adapt to new threats without over-enforcing.
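
Calibration can be as simple as re-deriving the removal threshold from freshly labeled data. The sketch below picks the lowest threshold whose precision on a labeled validation set meets a target. The data, the target, and the function names are all illustrative assumptions, not a description of Meta’s process.

```python
def precision_at(threshold: float, scored):
    """Precision among items flagged at this threshold; None if nothing is flagged."""
    flagged = [is_violation for score, is_violation in scored if score >= threshold]
    if not flagged:
        return None
    return sum(flagged) / len(flagged)


def calibrate(scored, target_precision: float):
    """Return the lowest observed score that meets the precision target, or None."""
    for threshold in sorted({score for score, _ in scored}):
        p = precision_at(threshold, scored)
        if p is not None and p >= target_precision:
            return threshold
    return None


if __name__ == "__main__":
    # (model score, reviewer-confirmed violation?) pairs from a validation set
    validation = [
        (0.2, False), (0.4, False), (0.6, True),
        (0.7, False), (0.8, True), (0.9, True),
    ]
    print(calibrate(validation, target_precision=0.75))
    print(calibrate(validation, target_precision=0.9))
```

A stricter precision target pushes the threshold up, so fewer legitimate posts are removed at the cost of catching fewer violations automatically. Re-running this on recent labels is one way to keep a model from drifting into over-enforcement.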

Closing Section: The Future of AI-Powered Moderation and Call to Action

AI-powered content moderation platforms like Meta’s are indispensable for enforcing community guidelines across global communities. Tools such as real-time filtering and media matching set new standards for online safety. However, as the Oversight Board emphasizes, success hinges on balancing automation with human insight, transparency, and relentless improvement. The future lies in responsible implementation, where technology enhances safety without compromising fairness or trust.

Frequently Asked Questions

What is the Meta AI content moderation platform?

The Meta AI content moderation platform is a comprehensive system that uses artificial intelligence, including NLP and computer vision, to automatically scan, analyze, and enforce community guidelines on content across Meta’s apps, aiming to create safer digital spaces.

How does real-time AI filtering work in content moderation?

Real-time AI filtering continuously monitors content as it’s posted, using algorithms to detect violations like hate speech or violence within moments. It proactively flags or removes harmful material before it spreads, reducing exposure and harm.

What are the main limitations of AI content moderation?

Key limitations include context blindness (e.g., misunderstanding satire), gaps in non-English languages, and difficulty with emerging threats. These challenges require human oversight and continuous model training to ensure accurate enforcement.

How does Meta ensure transparency in automated moderation decisions?

Meta notifies users when content is flagged by AI systems, providing options to appeal or edit posts. This approach, recommended by oversight bodies, increases accountability and trust in automated processes.

Why is human oversight still necessary in AI content moderation?

Human oversight is crucial for handling nuanced cases where AI struggles, such as cultural context or ambiguous speech. It ensures fairness, reduces errors, and complements AI’s scalability with human judgment.