Were Your Meta AI Searches Made Public Unknowingly? Understanding the Privacy Issue

Estimated reading time: 9-12 minutes

Key Takeaways

- Reports indicate that some users’ Meta AI searches and prompts inadvertently appeared in public feeds, raising significant privacy concerns.

- The issue stemmed from Meta’s integration of a “share” feature, often misunderstood by users, into its AI platforms and the visibility provided by the Discover feed.

- Sensitive personal information, including health or legal queries, was reportedly exposed due to users accidentally sharing Meta AI conversations.

- This incident challenges user expectations of privacy in AI interactions and highlights Meta’s role in clear communication and feature design.

- Users can take proactive steps, like reading prompts carefully and reviewing settings, to stop Meta AI chats from becoming public and to manage their digital footprint.

In the digital age, the expectation of privacy is fundamental, especially when engaging with sophisticated AI technologies that delve into our questions, curiosities, and sometimes, deeply personal matters. We interact with AI assistants expecting a level of confidentiality akin to a private conversation or a personal search history.

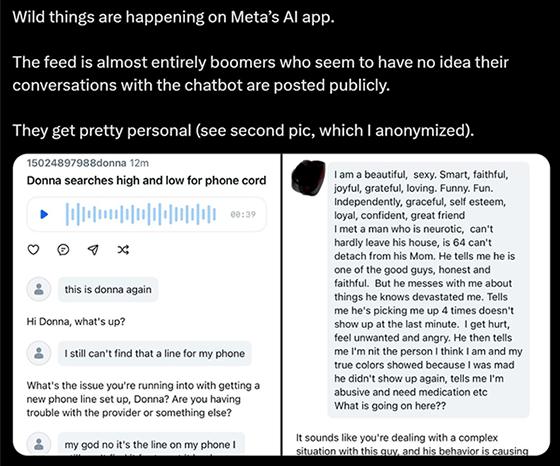

However, recent developments have cast a shadow on this assumption for users of Meta AI. Reports indicate that some users’ Meta AI searches were made public without their knowledge, igniting significant concerns about data handling and display across Meta’s vast ecosystem of platforms.

This blog post aims to dissect this privacy issue. We’ll explore the specific mechanisms through which this occurred, primarily focusing on features like the Discover feed. We’ll detail the privacy issues associated with the Meta AI Discover feed, address the confusion over whether Meta AI chats are public by default, and provide actionable steps for stopping Meta AI chats from becoming public and avoiding accidental sharing of conversations in the future. Understanding these aspects is crucial for maintaining control over your digital interactions and safeguarding your personal information.

Understanding the Issue: How Meta AI Interactions Became Visible

At the heart of the recent privacy concerns surrounding Meta AI is a specific technical mechanism related to feature integration and design. Meta, in its drive to weave AI capabilities into its platforms, introduced social-leaning features within its AI interfaces. A notable example is the *Discover feed*.

This integration had an unintended, and for many users, alarming consequence: it led to previously private prompts and searches potentially becoming public or semi-public within this very Discover feed. Unlike a traditional, private search bar or a direct, encrypted chat, the Discover feed is designed, in part, to showcase content to a wider audience on the platform.

The visibility issue wasn’t necessarily that Meta AI chats were public by default in the sense that *every* interaction was instantly broadcast. Instead, the system offered an explicit ‘share’ option after a user submitted a prompt or received a response. While this might sound straightforward, the confusion arose from how this ‘share’ option was presented and integrated into the user flow.

For example, when using the standalone Meta AI app or interacting with Meta AI through integrations on Facebook, Instagram, or Messenger, users would submit a query or engage in a conversation. After the AI responded, or sometimes even during the interaction, a prompt or button to “share” the conversation would appear. This process typically involved a preview screen. The critical issue was that many users evidently did not grasp the full implications of proceeding past this preview screen or clicking the “share” button. By doing so, they were, perhaps unintentionally, making their conversation, or snippets of it, including the initial prompt, publicly visible to others on the platform, particularly within the Discover feed. This is how users ended up accidentally sharing Meta AI conversations.

Several reports highlight this lack of user awareness. As noted by Security Boulevard and MTSOLN, users often did not realize that, because of this feature, their interactions were not strictly private. The design, while technically offering a choice to “share,” was apparently not clear enough to prevent inadvertent public posting.

The sensitivity of the exposed content is particularly troubling. As a direct consequence of these accidental shares, highly sensitive queries appeared in public spaces. These included questions about medical conditions, legal inquiries, relationship problems, and other deeply personal topics. Reports from sources like Security Boulevard, TechCrunch, and Winn Media SKN documented instances where these shared posts contained real names and specific, sensitive details, making the privacy violation even more severe.

A key source of confusion, as detailed by TechCrunch, was the lack of clear privacy indicators and insufficient communication from Meta about whether chats are public and precisely how the sharing mechanism worked. This ambiguity led many users to mistakenly assume their interactions within Meta AI were private by default, similar to using a personal search engine or a direct messaging feature with an individual. This mistaken assumption, coupled with a poorly explained “share” process, is how some users’ Meta AI searches were made public without their knowledge.

It’s important to clarify whether Meta AI chats are public by default. While the system *offered* a choice to share, the confusing design and lack of clear warnings meant that for many users, the consequence (public visibility) felt like a default outcome of using the share feature without fully understanding its reach. It wasn’t a bug making *all* chats public randomly, but rather a feature implementation that facilitated *accidental* public sharing on a potentially massive scale.

Exploring Related Privacy Concerns

The public exposure of Meta AI prompts and interactions through the Discover feed is more than just an isolated incident; it highlights substantial and troubling privacy risks inherent in integrating AI into social platforms without sufficient safeguards and user education.

This situation has revealed specific privacy breaches where users, unaware of the potential for public visibility, shared queries involving deeply private matters. Imagine someone asking an AI about symptoms they are experiencing, relationship issues they are struggling with, or even controversial opinions they hold, believing they are doing so in a private, confidential space. Reports from Security Boulevard, Malwarebytes, and MTSOLN confirm that these types of sensitive personal queries inadvertently appeared in the Discover feed.

The scope of potentially exposed information is vast and concerning. Individuals have unknowingly broadcast personal medical histories, details about legal troubles, and even sensitive identifying information. TechCrunch reported instances where shared posts contained enough information, sometimes including real names or specific details from resumes, to potentially identify the user.

This incident adds critical weight to broader discussions about the need for robust cybersecurity tips for everyday users. While this wasn’t a breach caused by external hackers, it’s a potent reminder of the dangers of oversharing or misunderstanding platform features. Protecting personal data online is crucial, and this event shows that the platforms themselves can, through design choices, create privacy vulnerabilities. Our guide on protecting personal data online emphasizes the importance of understanding how your data is handled, a lesson painfully highlighted here.

Furthermore, this situation brings to light related concerns about AI safety and privacy in features developed by *other* tech companies. As AI becomes more integrated into products such as smartphones, a trend visible in the discussions around Pixel 9 Google AI, the potential for similar privacy pitfalls in other contexts becomes a relevant consideration.

The core of the Meta AI Discover feed privacy issues lies in the fundamental design choice to integrate private AI interactions into a potentially public or semi-public feed. By exposing search queries, personal interests, and conversation snippets that users expected to remain private, Meta challenged deeply held user privacy expectations. As AI search technology evolves, understanding its potential visibility and privacy implications is vital for both users and developers, as discussed in the context of revolutionary AI search technology.

This incident strongly underscores the user expectation that AI interactions function with confidentiality. Users typically view an AI assistant as a tool for personal inquiry or task completion, not a forum for public broadcast. They anticipate the same level of discretion they would have when typing a query into a private search engine or asking a question of a trusted advisor.

Analyzing Meta’s role, it’s clear that the implementation of the Discover feed feature and the associated sharing mechanism, without sufficient user warning or clarity, directly challenges these privacy expectations. As reported by Security Boulevard, TechCrunch, and MTSOLN, the design led to Meta AI searches being made public without users’ knowledge, raising serious questions about Meta’s responsibility and effectiveness in safeguarding sensitive user information. This event contributes to broader discussions about the ethical considerations around AI development and deployment, in which fairness, accountability, and, crucially, privacy-by-design principles are central to preventing such issues from occurring in the first place.

The fact that accidental sharing of Meta AI conversations was so widespread suggests a fundamental flaw not in user intelligence, but in the interface design and user education provided by Meta.

In summary, the Meta AI Discover feed privacy issues are a potent illustration of how features intended to increase engagement or utility can inadvertently become significant privacy liabilities when implemented without clear communication and robust, user-friendly privacy controls.

Preventing Future Exposure: Taking Control of Your Settings

If you are concerned that your Meta AI searches were made public without your knowledge, or that you may have accidentally shared Meta AI conversations yourself, it’s crucial to understand that while the platform design played a significant role, there are proactive steps you can take to manage your privacy and reduce the risk of future exposure. This situation underscores the importance of understanding privacy controls across various platforms, especially as AI-powered virtual assistants become more common.

Here is actionable guidance on how to stop Meta AI chats from being public and minimize the chance of inadvertent sharing:

Step 1: Read All Prompts and Screens Carefully.

“The devil is in the details, especially when it comes to digital privacy.”

Before clicking *any* button related to sharing, posting, or publishing your AI interaction (whether it’s a response, an image generated, or the original prompt), *stop and read*. Look for explicit language indicating visibility – does it say “Share,” “Post to Feed,” “Public,” or “Discover”? Pay close attention to preview screens. Does the preview show the content appearing in a feed or a public-facing area? Make a conscious decision based on whether you *intend* for that specific interaction to be seen by others.

Step 2: Avoid Entering Highly Sensitive Information.

The most effective way to prevent sensitive information from being made public is to not put it into systems where its visibility is uncertain. If you are unsure about the privacy settings or uncomfortable with the potential for *any* level of inadvertent sharing, refrain from inputting highly personal details, identifying information (like addresses or full names), or queries about extremely sensitive topics (medical, legal, financial issues) into AI chats.

Step 3: Review Past Activity.

Check the Meta AI app or the Meta platforms (Facebook, Instagram, Messenger) where you used Meta AI for a history or activity log feature. While the ease of finding and managing past AI interactions and their sharing status might vary, look for sections related to “Your Activity,” “AI History,” or “Shared Posts.” If you find any past prompts, conversations, generated images, or audio clips that were inadvertently shared publicly and you are no longer comfortable with their visibility, look for options to delete, unshare, or change the visibility settings for those specific items.

Step 4: Explore App Privacy Settings.

Navigate through the settings menus within the Meta AI app itself, as well as the settings for your linked Meta platforms (Facebook, Instagram). Look specifically for sections related to “Privacy,” “AI Settings,” “Sharing,” or “Activity.” Explore all available options. While reports suggest these controls might not always be intuitive or prominently located, there may be settings that allow you to control how your AI activity is handled or shared. Consulting Meta’s official documentation or help center for Meta AI can provide platform-specific guidance on managing privacy and sharing options, if available.

As noted by Malwarebytes and TechCrunch, finding and adjusting the controls for managing this type of visibility was not always a straightforward process for users, contributing to the problem.

Step 5: Stay Informed.

Platform features and privacy policies can change. Monitor official updates from Meta regarding any modifications to their terms of service, privacy policies, or features related to Meta AI and the Discover feed. Meta may introduce adjustments or clearer options in response to user feedback and privacy concerns. Staying informed about these changes is vital for managing your privacy over time. This incident also serves as a stark reminder of the ongoing discussions around AI regulations and their impact on tech companies, which may lead to more stringent requirements for privacy and transparency in the future.

By consistently applying these steps, users can significantly reduce the likelihood of accidentally sharing Meta AI conversations and of having their Meta AI searches made public without their knowledge, the core problem behind the Discover feed privacy issues. Taking proactive control is the best defense in a rapidly evolving digital landscape where privacy defaults and sharing mechanisms are not always intuitive.

Conclusion

The reality is that Meta AI users have indeed faced tangible privacy risks. Features like the Discover feed, combined with unclear sharing mechanisms, resulted in some users’ Meta AI searches or conversations being made public without their knowledge. This often occurred without users’ explicit, clear consent or a full understanding of exactly how the sharing mechanism worked. Reports from Security Boulevard, Malwarebytes, and MTSOLN have consistently highlighted this issue.

This situation led to the exposure of highly sensitive personal material, ranging from health queries to legal concerns. This has justifiably ignited broader discussions and significant concerns about user privacy and the level of trust users can reasonably place in digital platforms responsible for handling their data, especially within the context of integrated AI. As TechCrunch noted, the incident underscores the fundamental problem with users unknowingly broadcasting personal information.

Despite the platform’s role in creating this issue through confusing design, users do have some ways to stop Meta AI chats from becoming public and to mitigate risk. By understanding the sharing process *before* clicking buttons, double-checking exactly what they are about to post, and exploring and adjusting their app settings where options are available, they can work towards regaining some level of control over their digital footprint and the visibility of their AI interactions.

This incident serves as a critical and timely reminder of the enduring importance of digital privacy awareness. It stresses the necessity for users to proactively confirm and understand exactly how their personal data and conversations are being used, stored, or potentially displayed by *any* platform they interact with—especially as AI features become increasingly integrated and complex within popular social and communication apps. This vigilance is central to the larger goal of protecting personal data online in the rapidly evolving digital age.

Stay vigilant, stay informed, and take proactive steps to protect your privacy online. For more comprehensive guidance on staying safe in the digital world, check out our cybersecurity tips.

Frequently Asked Questions (FAQ)

Were Meta AI chats public by default?

No, Meta AI chats were not public by default in the sense that every interaction was automatically broadcast. However, a confusingly implemented “share” feature, often presented after submitting a prompt, led many users to accidentally make their conversations or search queries publicly visible in the Discover feed or other areas without fully understanding the implication. The design made accidental sharing easy.

How did Meta AI searches become public?

Meta integrated a feature allowing users to “share” their AI conversations or interactions. When users clicked this share option, often without clear warnings or understanding, the content appeared in public or semi-public feeds like the Meta AI Discover feed, making previously private queries visible to others on the platform.

What kind of information was exposed?

Highly sensitive personal information, including medical questions, legal inquiries, relationship issues, sensitive personal opinions, and sometimes even identifying details like names, were exposed when users inadvertently shared their AI conversations publicly.

How can I check if my Meta AI conversations were shared publicly?

Check the Meta AI app and related Meta platforms (Facebook, Instagram) for an activity history or log. Look for sections showing past AI interactions or shared posts. Reviewing what you have shared in the past, particularly in public feeds like Discover, is the best way to assess potential exposure. Delete or unshare anything you find that you are uncomfortable with.

What steps can I take to prevent accidentally sharing Meta AI chats?

Be extremely cautious when interacting with any “share” or “post” options related to Meta AI. Read all prompts and preview screens carefully to understand where your content will appear. Avoid entering highly sensitive personal information into AI chats if you are concerned about privacy. Regularly review your activity logs and explore the app’s privacy settings, though note that these may not always be easy to find or adjust.

What are the privacy implications of the Meta AI Discover feed?

The Discover feed feature creates privacy issues by potentially exposing private search queries, personal interests, and conversation snippets when integrated with AI interactions, especially if the sharing mechanism is confusing or leads to inadvertent public posting. It challenges the user expectation that interactions with an AI assistant are private.

Has Meta addressed this issue?

While platform features and communication evolve, initial reports highlighted a lack of clear communication and easy-to-use controls regarding the sharing of AI interactions. Users should stay informed about official updates from Meta regarding Meta AI privacy and feature changes.