NVIDIA Blackwell Server GPU Specs: Revolutionizing AI Data Centers

Estimated reading time: 12 minutes

Key Takeaways

- NVIDIA Blackwell server GPUs bring major spec gains, including 96 GB of GDDR7 memory at 1.6 TB/s on the RTX PRO 6000 Blackwell, up to 192 GB of HBM3e on B100/B200, and FP4 precision support, reshaping enterprise AI hardware.

- These GPUs offer 3-4x performance uplifts over the Hopper generation, making them well suited to scaling trillion-parameter models and raising enterprise GPU performance.

- Architectural innovations such as the dual-die design, fifth-generation Tensor Cores, and enhanced NVLink enable efficient scaling across AI training clusters.

- Rack-scale systems such as the GB300 NVL72 provide 20 TB of total GPU memory and up to 576 TB/s of aggregate GPU memory bandwidth, enabling massive distributed training and inference.

- Blackwell reduces total cost of ownership through power efficiency, passive cooling, and higher density, and its workload flexibility distinguishes it from cloud NPU accelerators.

Table of Contents

- NVIDIA Blackwell Server GPU Specs: Revolutionizing AI Data Centers

- Key Takeaways

- What Makes NVIDIA Blackwell Server GPUs a Game-Changer for Modern Data Centers

- Technical Deep Dive: Understanding NVIDIA Blackwell Server GPU Specifications

- The Architecture Behind the Specs

- Core GPU Memory and Bandwidth Specifications

- Tensor Core Performance and Precision Innovation

- NVLink and Interconnect Specifications

- Power and Thermal Specifications

- Multi-Instance GPU (MIG) Support

- From Specs to System: AI Training Cluster Hardware Architecture

- Building Enterprise-Scale Training Clusters with Blackwell

- Recommended Server Configurations for AI Workloads

- Cluster Topology and Interconnect Strategy

- Maximum Scalability and GB200 NVL72 Configuration

- Real-World Performance Impact: Enterprise GPU Performance Metrics

- Training Performance and Efficiency Gains

- Inference Performance and Multimodal AI

- Total Cost of Ownership (TCO) Analysis

- Comparative Analysis: Blackwell vs Hopper

- Positioning Within the Broader Ecosystem: Cloud and NPU Acceleration Strategies

- Understanding the Competitive and Complementary Landscape

- Blackwell GPU vs Cloud NPU Acceleration: Use Case Decision Framework

- Blackwell Availability on Major Cloud Platforms

- Strategic Implications and Future Outlook

- How Blackwell Redefines Enterprise AI Hardware Standards

- Key Considerations for Enterprise Procurement Decisions

- Looking Ahead: The Role of Blackwell in the AI Hardware Roadmap

- Taking Action: Next Steps for Your Enterprise

- Frequently Asked Questions

What Makes NVIDIA Blackwell Server GPUs a Game-Changer for Modern Data Centers

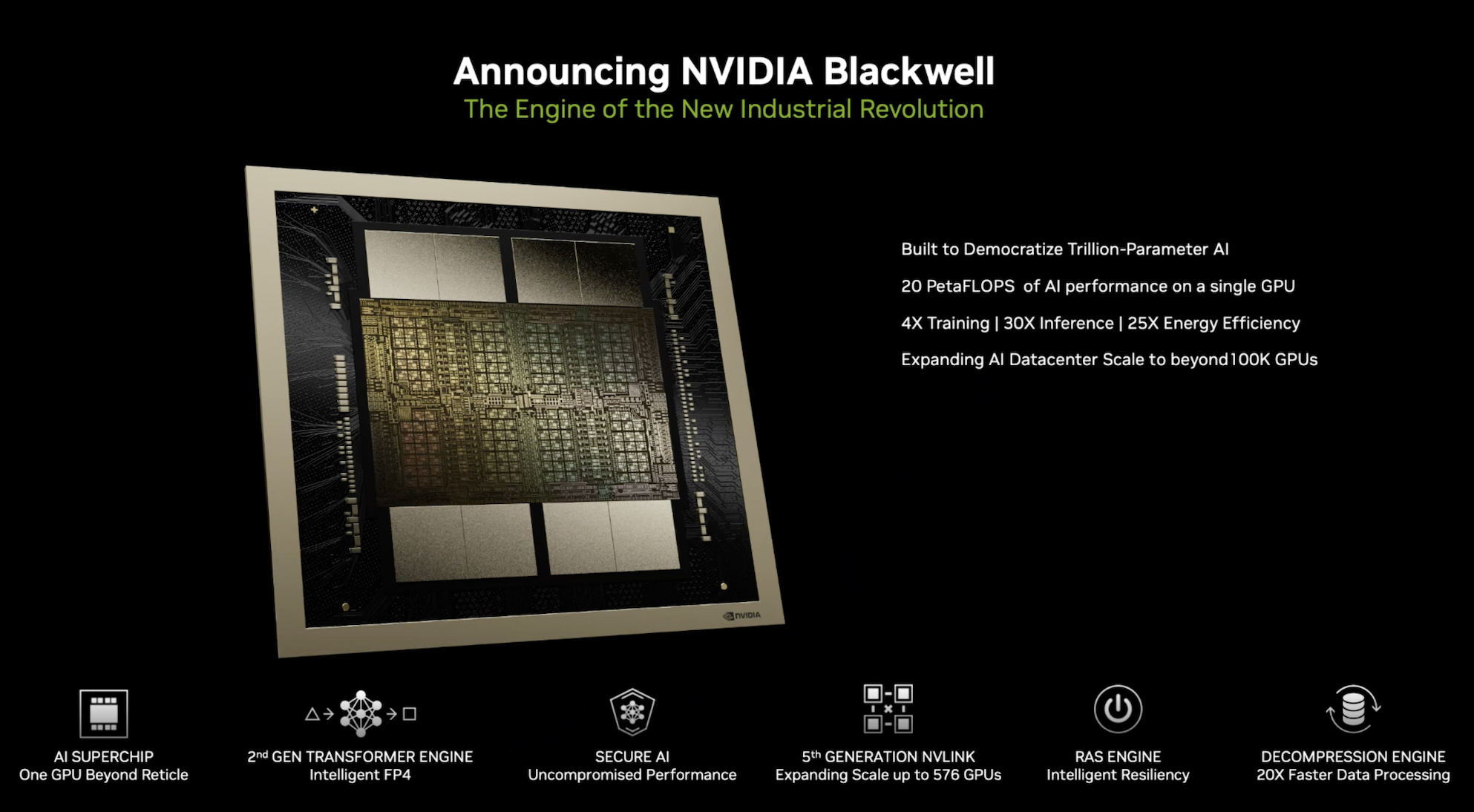

NVIDIA Blackwell server GPUs are transforming enterprise data center infrastructure. Headline specs include 96 GB of GDDR7 memory at 1.6 TB/s on the RTX PRO 6000 Blackwell, up to 192 GB of HBM3e on B100/B200, and FP4 precision support. For IT professionals, data center managers, and AI researchers, this guide explains how these specifications translate into real-world impact on AI workloads.

Why is Blackwell a pivotal development? It represents a generational shift, delivering 3-4x performance uplifts over Hopper-generation GPUs in both training and inference. Enterprises scaling to trillion-parameter models need hardware that balances memory capacity, bandwidth, and compute power, and Blackwell addresses all three.

Technical Deep Dive: Understanding NVIDIA Blackwell Server GPU Specifications

The Architecture Behind the Specs

Blackwell’s performance stems from three foundational architectural innovations: a dual-die design (on B100/B200), fifth-generation Tensor Cores, and enhanced NVLink for data centers. Tensor Cores are specialized compute units for AI and matrix operations, while NVLink provides high-bandwidth, direct GPU-to-GPU links. Together, these elements account for the specifications below.

Core GPU Memory and Bandwidth Specifications

• RTX PRO 6000 Blackwell: Features 96 GB GDDR7 ECC memory with up to 1.597 TB/s bandwidth, enabling larger models in memory and faster data movement for training efficiency. Source: Lenovo Press.

• B100/B200 variants: Offer up to 192 GB HBM3e memory with up to 8 TB/s bandwidth, providing higher capacity and speed for demanding workloads. Source: Hyperstack.

• GB300 NVL72 system (Blackwell Ultra): Provides 20 TB of total GPU memory across 72 GPUs, with up to 576 TB/s of aggregate GPU memory bandwidth, enabling massive distributed training. Source: NVIDIA GB300 NVL72.

More memory lets larger models and batches fit on each GPU with less offloading; higher bandwidth speeds up memory-bound work such as LLM token generation, which shortens model iterations. These specs are central to AI training cluster hardware.
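A rough way to see why bandwidth matters: at batch size 1, generating each LLM token requires streaming every weight from memory once, so memory bandwidth caps decode speed. The sketch below assumes a hypothetical 70B-parameter model stored in FP8 (1 byte per parameter) and ignores KV-cache traffic, compute limits, and batching, so treat the results as idealized ceilings, not benchmarks.

```python
def max_decode_tokens_per_second(bandwidth_tb_s: float,
                                 params_billion: float,
                                 bytes_per_param: float) -> float:
    """Idealized batch-1 decode ceiling: bandwidth / bytes read per token.

    Assumes every weight is read once per generated token and ignores
    KV-cache reads, kernel overheads, and compute limits.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weight_bytes


# Bandwidth figures from the spec list; the 70B FP8 model is hypothetical.
GPUS = {"RTX PRO 6000 Blackwell": 1.597, "B200": 8.0}

for name, bandwidth in GPUS.items():
    ceiling = max_decode_tokens_per_second(bandwidth, params_billion=70,
                                           bytes_per_param=1.0)
    print(f"{name}: ~{ceiling:.0f} tokens/s upper bound")
```

The roughly 5x gap between the two ceilings mirrors the bandwidth ratio, which is the point: for memory-bound decoding, bandwidth rather than FLOPS sets the limit.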

Tensor Core Performance and Precision Innovation

Tensor Cores accelerate AI workloads. FP4 and FP6 precision shrink memory footprint and increase throughput, typically with minimal accuracy loss when models are carefully quantized. Key specs:

- RTX PRO 6000 Blackwell: Up to 5x the prior generation's LLM inference performance using FP4; peak FP4 throughput of 4 PFLOPS.

- B100/B200: FP4 at approximately 20 PFLOPS peak, with FP64 at 40-45 TFLOPS for scientific compute.

- GB300 NVL72 (Blackwell Ultra): 1.5x more AI FLOPS than base Blackwell, plus 2x attention-layer acceleration for transformer models.

Fifth-generation Tensor Cores drive the inference gains above, while the RTX PRO 6000 Blackwell's fourth-generation RT Cores double ray-triangle intersection rates for rendering and physical AI. Sources: Lenovo Press, Hyperstack, Nexgencloud.
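The memory savings from lower precision are simple arithmetic: weight storage equals parameter count times bytes per parameter (2 for FP16, 1 for FP8, 0.75 for FP6, 0.5 for FP4). This sketch checks whether a hypothetical 120B-parameter model's weights fit on a 96 GB or 192 GB GPU. The 80% headroom factor (the rest reserved for KV cache and activations) is an assumption, and the calculation ignores the per-block scale factors that real FP4/FP6 formats add.

```python
# Bytes per parameter for each precision (FP6 = 6 bits = 0.75 bytes).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP6": 0.75, "FP4": 0.5}


def weight_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in GB (1e9 params x bytes/param / 1e9 bytes per GB)."""
    return params_billion * BYTES_PER_PARAM[precision]


def weights_fit(params_billion: float, precision: str,
                gpu_memory_gb: float, headroom: float = 0.8) -> bool:
    """True if weights fit in the assumed share of GPU memory.

    headroom is a hypothetical fraction reserved for weights; the rest is
    left for KV cache and activations. Scale-factor overhead is ignored.
    """
    return weight_gb(params_billion, precision) <= gpu_memory_gb * headroom


for gpu, memory in [("96 GB GPU", 96), ("192 GB GPU", 192)]:
    fitting = [p for p in BYTES_PER_PARAM if weights_fit(120, p, memory)]
    print(f"{gpu}: 120B model fits at {', '.join(fitting)}")
```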

NVLink and Interconnect Specifications

NVLink is NVIDIA's high-speed GPU-to-GPU interconnect; PCIe cards such as the RTX PRO 6000 Blackwell connect over PCIe instead. Details:

- RTX PRO 6000 Blackwell: PCIe Gen5 x16 host interface, doubling CPU-to-GPU transfer bandwidth compared with PCIe Gen4.

- B100/B200: 18 fifth-generation NVLink links per GPU at 100 GB/s each, for 1.8 TB/s of total GPU-to-GPU bandwidth, enabling seamless scaling.

- GB300 NVL72: Fifth-generation NVLink at 50 GB/s per direction (100 GB/s bidirectional) per link; 37 TB of fast memory (20 TB GPU plus 17 TB CPU) accessible system-wide.

Lower communication overhead lets clusters scale with smaller efficiency losses as GPUs are added. Sources: NVIDIA GB300 NVL72, Nexgencloud, NVIDIA Blackwell Architecture.
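To put the interconnect numbers side by side, this sketch converts them to GB/s. It assumes NVLink 5 provides 18 links per GPU at 100 GB/s bidirectional each, and computes PCIe throughput from the standard x16 transfer rates (16 GT/s for Gen4, 32 GT/s for Gen5) with 128b/130b encoding. Protocol overheads beyond line encoding are ignored, so real PCIe throughput is somewhat lower.

```python
NVLINK_LINKS_PER_GPU = 18
NVLINK_GB_S_PER_LINK_BIDIR = 100.0  # 50 GB/s in each direction


def pcie_gb_s_per_direction(gt_per_s: float, lanes: int = 16) -> float:
    """Raw PCIe payload rate per direction with 128b/130b line encoding."""
    return gt_per_s * lanes * (128 / 130) / 8  # bits -> bytes


nvlink_bidir = NVLINK_LINKS_PER_GPU * NVLINK_GB_S_PER_LINK_BIDIR
gen4 = pcie_gb_s_per_direction(16)  # PCIe Gen4 x16
gen5 = pcie_gb_s_per_direction(32)  # PCIe Gen5 x16

print(f"NVLink 5 per GPU:    {nvlink_bidir:.0f} GB/s bidirectional")
print(f"PCIe Gen5 x16:       {2 * gen5:.0f} GB/s bidirectional")
print(f"Gen5 vs Gen4:        {gen5 / gen4:.1f}x")
print(f"NVLink vs PCIe Gen5: {nvlink_bidir / (2 * gen5):.1f}x")
```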

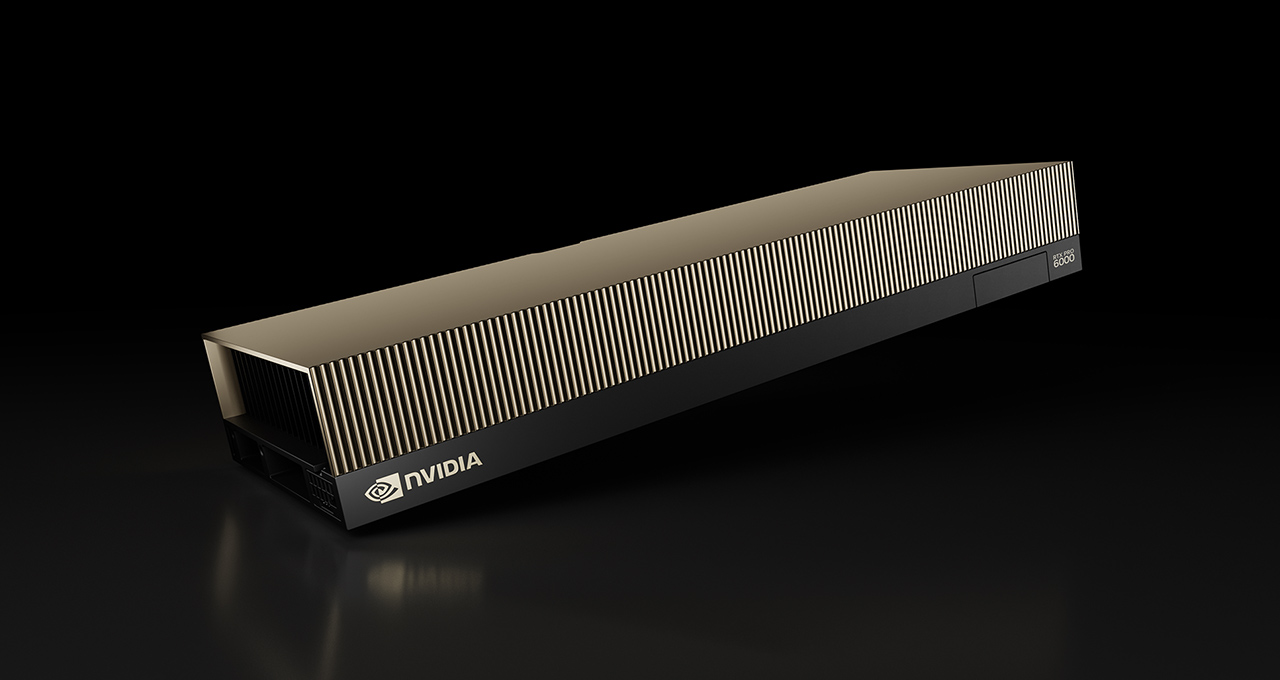

Power and Thermal Specifications

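RTX PRO 6000 Blackwell has a maximum board power of 600 W and supports passive cooling, relying on server chassis airflow rather than onboard fans, which reduces data center operational complexity. FP4 further improves power efficiency; NVIDIA's headline figure of up to 25x lower cost and energy for LLM inference versus H100 refers to rack-scale GB200 NVL72 systems. Higher performance per watt lowers cooling cost per unit of work, which is critical for large-scale deployments. Source: Lenovo Press.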
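Board power translates directly into energy cost. The sketch below estimates annual GPU energy cost for a server with eight RTX PRO 6000 Blackwell cards at the 600 W maximum. Utilization, PUE (power usage effectiveness, the facility overhead multiplier), and electricity price are placeholder assumptions, and the estimate covers GPU board power only, not CPUs, NICs, or storage.

```python
HOURS_PER_YEAR = 365 * 24


def annual_gpu_energy_cost(board_power_w: float, gpu_count: int,
                           utilization: float, pue: float,
                           usd_per_kwh: float) -> float:
    """Yearly energy cost in USD, treating board power as the draw while busy."""
    it_kwh = board_power_w * gpu_count * utilization * HOURS_PER_YEAR / 1000
    facility_kwh = it_kwh * pue  # add cooling and power-distribution overhead
    return facility_kwh * usd_per_kwh


# Placeholder inputs: 70% utilization, PUE 1.3, $0.10/kWh.
cost = annual_gpu_energy_cost(600, 8, utilization=0.7, pue=1.3, usd_per_kwh=0.10)
print(f"~${cost:,.0f} per server per year")
```

Because this is an upper-bound style estimate at maximum board power, better performance per watt shows up as lower cost per token or per training run rather than as a lower line item here.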

Multi-Instance GPU (MIG) Support

RTX PRO 6000 Blackwell supports MIG for up to 4 isolated GPU instances per physical GPU, enabling resource sharing across diverse AI applications with performance predictability. This is vital for multi-tenant cloud environments. Source: NVIDIA RTX PRO 6000 Blackwell.
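A simple capacity-planning sketch for MIG: it assumes, hypothetically, that the 96 GB card is divided into four equal 24 GB slices, and assigns each tenant enough whole slices for its memory needs. Real MIG profiles and placement rules are defined by the NVIDIA driver and may not allow every combination, so treat this as a first-pass check; the tenant names and sizes are made up.

```python
import math

SLICE_GB = 24          # assumption: 96 GB split evenly across 4 instances
SLICES_PER_GPU = 4     # up to 4 MIG instances per RTX PRO 6000 Blackwell


def plan_mig_slices(tenants: dict[str, float]) -> dict[str, int]:
    """Map each tenant to a whole number of slices; fail if oversubscribed."""
    plan = {name: math.ceil(gb / SLICE_GB) for name, gb in tenants.items()}
    needed = sum(plan.values())
    if needed > SLICES_PER_GPU:
        raise ValueError(f"needs {needed} slices, GPU has {SLICES_PER_GPU}")
    return plan


# Hypothetical tenants with memory requirements in GB.
print(plan_mig_slices({"chat-inference": 40, "embeddings": 10, "notebook": 20}))
```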

From Specs to System: AI Training Cluster Hardware Architecture

Building Enterprise-Scale Training Clusters with Blackwell

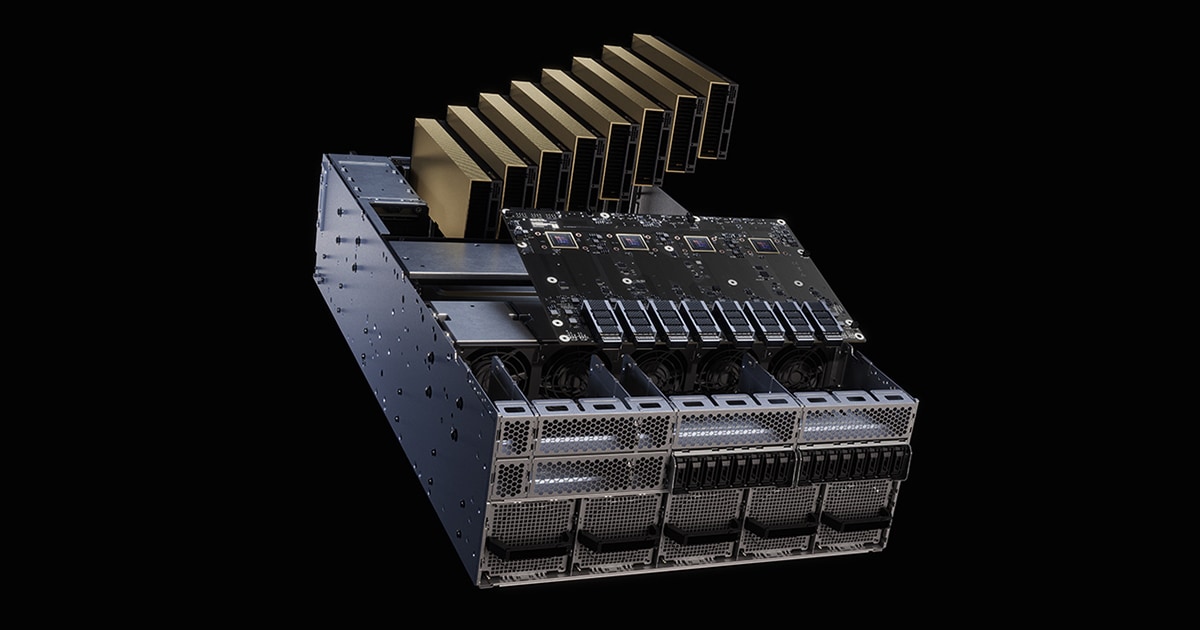

Blackwell’s individual spec advantages compound at scale. A single NVL72 rack links 72 GPUs with 130 TB/s of aggregate NVLink bandwidth, and NVLink Switch can extend one NVLink domain to up to 576 GPUs, enabling trillion-parameter model training.
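The rack-level figure follows from per-GPU NVLink bandwidth: 72 GPUs x 1.8 TB/s is about 130 TB/s. The sketch also shows that a 576-GPU NVLink domain corresponds to eight NVL72-sized groups; it is plain arithmetic on the figures quoted in this article, not a topology specification.

```python
GPUS_PER_NVL72 = 72
NVLINK_TB_S_PER_GPU = 1.8
MAX_NVLINK_DOMAIN_GPUS = 576

rack_nvlink_tb_s = GPUS_PER_NVL72 * NVLINK_TB_S_PER_GPU
groups_of_72 = MAX_NVLINK_DOMAIN_GPUS // GPUS_PER_NVL72

print(f"Aggregate NVLink bandwidth per NVL72: {rack_nvlink_tb_s:.1f} TB/s")
print(f"NVL72-sized groups in a 576-GPU domain: {groups_of_72}")
```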

Recommended Server Configurations for AI Workloads

Standard building block: 8x RTX PRO 6000 Blackwell per server, with ConnectX-8 SuperNICs for GPU-to-GPU networking and PCIe Gen6 switches for OVX integration. This reference architecture reduces deployment risk. Sources: Lenovo Press, NVIDIA RTX PRO Server.
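Per-server totals for this building block follow from the per-GPU specs quoted earlier (96 GB, 1.597 TB/s, 600 W). The `GpuSpec` class and `server_totals` helper are hypothetical names for this sketch. Aggregate bandwidth here is the sum of each GPU's local memory bandwidth, not a shared pool, and the power figure covers GPUs only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: float
    bandwidth_tb_s: float
    max_power_w: float


RTX_PRO_6000 = GpuSpec("RTX PRO 6000 Blackwell", 96, 1.597, 600)


def server_totals(gpu: GpuSpec, count: int = 8) -> dict[str, float]:
    """Sum per-GPU specs for a server with `count` identical GPUs."""
    return {
        "gpu_memory_gb": gpu.memory_gb * count,
        "local_bandwidth_tb_s": gpu.bandwidth_tb_s * count,
        "gpu_power_kw": gpu.max_power_w * count / 1000,
    }


print(server_totals(RTX_PRO_6000))
```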

Cluster Topology and Interconnect Strategy

NVLink Switch extends model parallelism across servers, and NVIDIA cites 4x bandwidth efficiency gains over the prior generation. Efficient cross-server communication is critical for scaling training jobs, because each parallel training step requires GPUs to exchange gradients or activations. Sources: Nexgencloud, NVIDIA Blackwell Architecture.
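The impact of link bandwidth can be estimated with the standard ring all-reduce cost model: each of N GPUs sends (and receives) 2(N-1)/N times the message size S, so with per-direction bandwidth B the transfer takes about 2(N-1)/N x S / B. The sketch compares a hypothetical 10 GB gradient exchange across 8 GPUs at 900 GB/s per direction (NVLink 5) and about 63 GB/s (PCIe Gen5 x16). It ignores latency and in-network reduction such as NVLink SHARP, so the results are rough lower bounds on time.

```python
def ring_allreduce_seconds(message_gb: float, n_gpus: int,
                           bandwidth_gb_s: float) -> float:
    """Bandwidth-only ring all-reduce time (no latency, no in-network reduction)."""
    traffic_gb = 2 * (n_gpus - 1) / n_gpus * message_gb  # per-GPU send volume
    return traffic_gb / bandwidth_gb_s


# Hypothetical 10 GB of gradients across 8 GPUs.
for link, bw in [("NVLink 5 (900 GB/s per direction)", 900.0),
                 ("PCIe Gen5 x16 (~63 GB/s per direction)", 63.0)]:
    ms = ring_allreduce_seconds(10.0, 8, bw) * 1000
    print(f"{link}: ~{ms:.0f} ms per all-reduce")
```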

Maximum Scalability and GB200 NVL72 Configuration

The GB300 NVL72 system, the Blackwell Ultra successor to GB200 NVL72, integrates 72 Blackwell Ultra GPUs and 36 Grace CPUs in a single rack, with 20 TB of GPU memory and 17 TB of CPU memory. This enables end-to-end AI workflows in one system, reducing data movement and simplifying management. NVIDIA also cites support for demanding generative workloads such as 5-second 720p video generation. Source: NVIDIA GB300 NVL72.
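A quick capacity check using the rack figures above (20 TB GPU memory, 17 TB CPU memory, 72 GPUs, decimal units). The 16 bytes per parameter for training is a common rule of thumb for mixed-precision training with the Adam optimizer (FP16 weights and gradients plus FP32 master weights and two optimizer moments); it excludes activations, so real requirements are higher.

```python
GPU_MEMORY_TB = 20.0
CPU_MEMORY_TB = 17.0
GPUS_PER_RACK = 72

fast_memory_tb = GPU_MEMORY_TB + CPU_MEMORY_TB
per_gpu_gb = GPU_MEMORY_TB * 1000 / GPUS_PER_RACK


def model_state_tb(params_trillion: float, bytes_per_param: float) -> float:
    """Model state size in TB (1e12 params x bytes/param / 1e12 bytes per TB)."""
    return params_trillion * bytes_per_param


print(f"Fast memory: {fast_memory_tb:.0f} TB; ~{per_gpu_gb:.0f} GB per GPU")
for label, bpp in [("FP4 inference weights", 0.5),
                   ("mixed-precision Adam training state", 16.0)]:
    size = model_state_tb(1.0, bpp)
    print(f"1T params, {label}: {size:.1f} TB, fits in GPU memory: {size <= GPU_MEMORY_TB}")
```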

Real-World Performance Impact: Enterprise GPU Performance Metrics

Training Performance and Efficiency Gains

Blackwell offers a 3-4x performance uplift over Hopper for next-generation LLMs, driven by higher peak FP4 throughput and 8 TB/s of memory bandwidth. This translates to faster time to model deployment. Source: Hyperstack.

Inference Performance and Multimodal AI

NVIDIA cites up to 30x faster inference for multitrillion-parameter models on GB200 NVL72 configurations compared with H100. The RTX PRO 6000 Blackwell adds ninth-generation NVENC and NVDEC engines that support 4:2:2 chroma subsampling for efficient video processing, enabling multimodal AI applications. Sources: Lenovo Press, Hyperstack.

Total Cost of Ownership (TCO) Analysis

TCO advantages include lower operational cost from passive cooling, higher memory density (96 GB per GPU), and improved power efficiency. PCIe Gen5 doubles CPU-to-GPU transfer bandwidth over Gen4, reducing I/O bottlenecks. Upfront cost may be similar to the prior generation, but three-year TCO is expected to be significantly lower. Source: Lenovo Press.
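The TCO argument hinges on cost per unit of work rather than sticker price. This sketch compares two servers over three years; every input (prices, power, throughput ratio, electricity rate, PUE) is a placeholder assumption for illustration, not a quote or measured result.

```python
HOURS_3Y = 3 * 365 * 24


def three_year_tco(capex_usd: float, avg_power_kw: float,
                   usd_per_kwh: float, pue: float) -> float:
    """Purchase price plus three years of facility energy (no staffing or space)."""
    energy_usd = avg_power_kw * HOURS_3Y * pue * usd_per_kwh
    return capex_usd + energy_usd


# Placeholder inputs: similar capex and power, 3x throughput for the newer server.
older = three_year_tco(250_000, 5.0, usd_per_kwh=0.10, pue=1.3)
newer = three_year_tco(280_000, 4.8, usd_per_kwh=0.10, pue=1.3)

older_per_unit = older / 1.0   # baseline throughput
newer_per_unit = newer / 3.0   # assumed 3x work per unit time

print(f"Cost per unit of work: older ${older_per_unit:,.0f}, newer ${newer_per_unit:,.0f}")
```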

Comparative Analysis: Blackwell vs Hopper

Blackwell leads Hopper in memory bandwidth, AI FLOPS, and efficiency. Enterprises training trillion-parameter models should prioritize Blackwell, while teams running smaller models may find Hopper more cost-effective. Source: Hyperstack.
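To make the comparison concrete, this sketch computes per-GPU ratios: B200 figures come from this article (192 GB, 8 TB/s), and H100 SXM figures are NVIDIA's published 80 GB of HBM3 at 3.35 TB/s. It compares memory capacity and bandwidth only; actual speedups depend on the workload.

```python
SPECS = {
    "H100 SXM": {"memory_gb": 80, "bandwidth_tb_s": 3.35},
    "B200": {"memory_gb": 192, "bandwidth_tb_s": 8.0},
}


def ratio(metric: str, new: str = "B200", old: str = "H100 SXM") -> float:
    """How many times larger the newer GPU's metric is."""
    return SPECS[new][metric] / SPECS[old][metric]


for metric in ("memory_gb", "bandwidth_tb_s"):
    print(f"{metric}: {ratio(metric):.2f}x")
```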

Positioning Within the Broader Ecosystem: Cloud and NPU Acceleration Strategies

Understanding the Competitive and Complementary Landscape

Enterprises face choices among GPUs, cloud NPUs (e.g., Google TPUs, AWS Trainium), and CPUs. GPUs offer programmable flexibility for diverse workloads, while NPUs are specialized for narrower sets of operations. Blackwell’s flexibility enables training, inference, simulation, and rendering on the same hardware. Source: Lenovo Press.

Blackwell GPU vs Cloud NPU Acceleration: Use Case Decision Framework

Scenarios Optimal for Blackwell Clusters:

- Custom training of proprietary trillion-parameter models.

- Ray-tracing and physical AI workloads.

- Multi-app workflows requiring MIG isolation.

- On-premise security and data residency requirements. Sources: Lenovo Press, PNY.

Scenarios Preferable for Cloud NPU Acceleration:

- Standardized inference for bursty workloads.

- Single-purpose AI tasks like LLM inference.

- Minimal operational complexity requirement. Source: Hyperstack.
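The framework above can be encoded as a simple rule-of-thumb function. The `Workload` fields and tie-breaking rules are hypothetical simplifications of the listed scenarios: data residency is treated as a hard constraint, otherwise the side with more matching criteria wins, and a tie suggests piloting both.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Criteria favoring Blackwell clusters
    custom_large_model_training: bool = False
    ray_tracing_or_physical_ai: bool = False
    needs_mig_isolation: bool = False
    requires_on_prem_data: bool = False
    # Criteria favoring cloud NPU acceleration
    bursty_standard_inference: bool = False
    single_purpose_inference: bool = False
    minimal_ops_capacity: bool = False


def recommend(w: Workload) -> str:
    """Hypothetical scoring of the decision framework; not a vendor tool."""
    if w.requires_on_prem_data:
        return "Blackwell cluster"  # residency rules out public cloud NPUs
    gpu_score = sum([w.custom_large_model_training,
                     w.ray_tracing_or_physical_ai, w.needs_mig_isolation])
    npu_score = sum([w.bursty_standard_inference,
                     w.single_purpose_inference, w.minimal_ops_capacity])
    if gpu_score > npu_score:
        return "Blackwell cluster"
    if npu_score > gpu_score:
        return "Cloud NPU"
    return "Pilot both"


print(recommend(Workload(bursty_standard_inference=True, minimal_ops_capacity=True)))
```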

Blackwell Availability on Major Cloud Platforms

Blackwell-powered instances are expected on AWS, Google Cloud, Azure, and OCI, allowing enterprises to evaluate the platform before committing to on-premise deployment. RTX PRO Servers with up to eight GPUs also support Omniverse farms for metaverse use cases. Sources: Lenovo Press, NVIDIA RTX PRO Server.

Strategic Implications and Future Outlook

How Blackwell Redefines Enterprise AI Hardware Standards

Blackwell redefines benchmarks for AI training cluster hardware by combining memory capacity, bandwidth, compute density, and interconnect efficiency. It sets a new baseline for performance expectations. Sources: NVIDIA GB300 NVL72, NVIDIA Blackwell Architecture.

Key Considerations for Enterprise Procurement Decisions

Prioritize Blackwell when building new data centers, upgrading from Hopper, or scaling AI workloads. Enterprises also benefit from the mature CUDA software ecosystem and broad pre-trained model support. Pilot on cloud platforms before committing capital expenditure. Source: NVIDIA GB300 NVL72.
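A cloud pilot also yields real utilization data for a buy-versus-rent decision. In this minimal break-even sketch, owning wins once the GPU-hours you actually use would cost more to rent than the system costs to own. The $300,000 three-year ownership cost and $6 per GPU-hour cloud rate are placeholder assumptions, not quoted prices.

```python
HOURS_3Y = 3 * 365 * 24


def breakeven_utilization(onprem_3y_cost_usd: float, gpu_count: int,
                          cloud_usd_per_gpu_hour: float) -> float:
    """Fraction of available GPU-hours at which renting costs as much as owning."""
    breakeven_gpu_hours = onprem_3y_cost_usd / cloud_usd_per_gpu_hour
    available_gpu_hours = gpu_count * HOURS_3Y
    return breakeven_gpu_hours / available_gpu_hours


# Placeholder inputs for an 8-GPU server.
u = breakeven_utilization(300_000, 8, cloud_usd_per_gpu_hour=6.0)
print(f"Owning is cheaper above ~{u:.0%} sustained utilization")
```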

Looking Ahead: The Role of Blackwell in the AI Hardware Roadmap

Blackwell is likely to remain foundational for the next 2-3 years, with future generations such as Rubin pushing performance further. Investing today positions enterprises on a proven technology path. As AI scales, Blackwell-class hardware is likely to become the minimum for competitiveness. Sources: NVIDIA GB300 NVL72, NVIDIA Blackwell Architecture.

Taking Action: Next Steps for Your Enterprise

To leverage NVIDIA Blackwell server GPU specs, IT professionals should review specs against workload requirements, data center managers should request pilots from NVIDIA partners, and procurement teams should begin TCO analysis with cloud providers. Delaying evaluation risks falling behind in the rapidly evolving AI landscape.

Frequently Asked Questions

What are the key NVIDIA Blackwell server GPU specs for AI data centers?

Key specs include 96 GB GDDR7 memory with 1.6 TB/s bandwidth for the RTX PRO 6000 Blackwell, up to 192 GB HBM3e memory for B100/B200, and support for FP4 precision, delivering 3-4x performance uplifts over Hopper.

How does Blackwell improve enterprise GPU performance?

Blackwell enhances performance through fifth-generation Tensor Cores, enhanced NVLink, and higher memory bandwidth, resulting in faster training and inference for trillion-parameter models.

What is the role of Blackwell in AI training cluster hardware?

Blackwell enables scalable rack-scale systems such as the GB300 NVL72, with 20 TB of GPU memory, supporting massive distributed training and reducing data movement across servers.

How does Blackwell compare to cloud NPU acceleration?

Blackwell offers flexibility for diverse workloads, including training and simulation, while cloud NPUs are specialized for specific tasks like inference. Enterprises with custom models or on-premise requirements benefit from Blackwell.

What are the total cost of ownership advantages of Blackwell?

Blackwell reduces TCO through passive cooling, higher density, and improved efficiency, leading to lower power and cooling costs over time.