“`html

Navigating the Labyrinth of AI API Errors

Key Takeaways

- AI models can refuse to respond for various reasons, even with content filters disabled, due to internal safety heuristics, ambiguous prompts, or rate limits.

- Resolving **Azure OpenAI model refusal errors** often involves refining prompts for clarity and context.

- **Troubleshooting Perplexity AI API errors** typically requires verifying API keys, request parameters, and network connectivity.

- To **fix GPT-4o function calling not working**, ensure precise function schemas, matching arguments, and clear function descriptions.

- **Common Gemini Pro tool errors** can stem from incorrect tool definitions, input validation issues, or the model misunderstanding capabilities.

- Understanding the **LangChain `BadRequestError: 400` response** involves checking for malformed requests to underlying AI models or invalid parameters passed to LangChain components.

- Proactive error handling through logging, iterative testing, and staying updated on documentation is crucial for building resilient AI applications.

Table of Contents

- Navigating the Labyrinth of AI API Errors

- Key Takeaways

- Demystifying AI Model Refusals – A Deep Dive into Azure OpenAI

- Navigating the Nuances of Perplexity AI API Errors

- Mastering GPT-4o Function Calling: When It Goes Wrong

- Troubleshooting Common Gemini Pro Tool Errors

- Decoding LangChain’s `BadRequestError: 400` Response

- General Best Practices for Proactive AI API Error Handling

AI models are now integrated into a wide range of applications, and with that integration comes a labyrinth of AI API errors. These unexpected hurdles can be frustrating, but understanding their root causes and learning to navigate them is essential for developers. This guide aims to demystify common AI API errors, offering practical strategies to **resolve Azure OpenAI model refusal errors**, **troubleshoot Perplexity AI API errors**, **fix GPT-4o function calling not working**, handle **common Gemini Pro tool errors**, and **understand the LangChain `BadRequestError: 400` response**.

Demystifying AI Model Refusals – A Deep Dive into Azure OpenAI

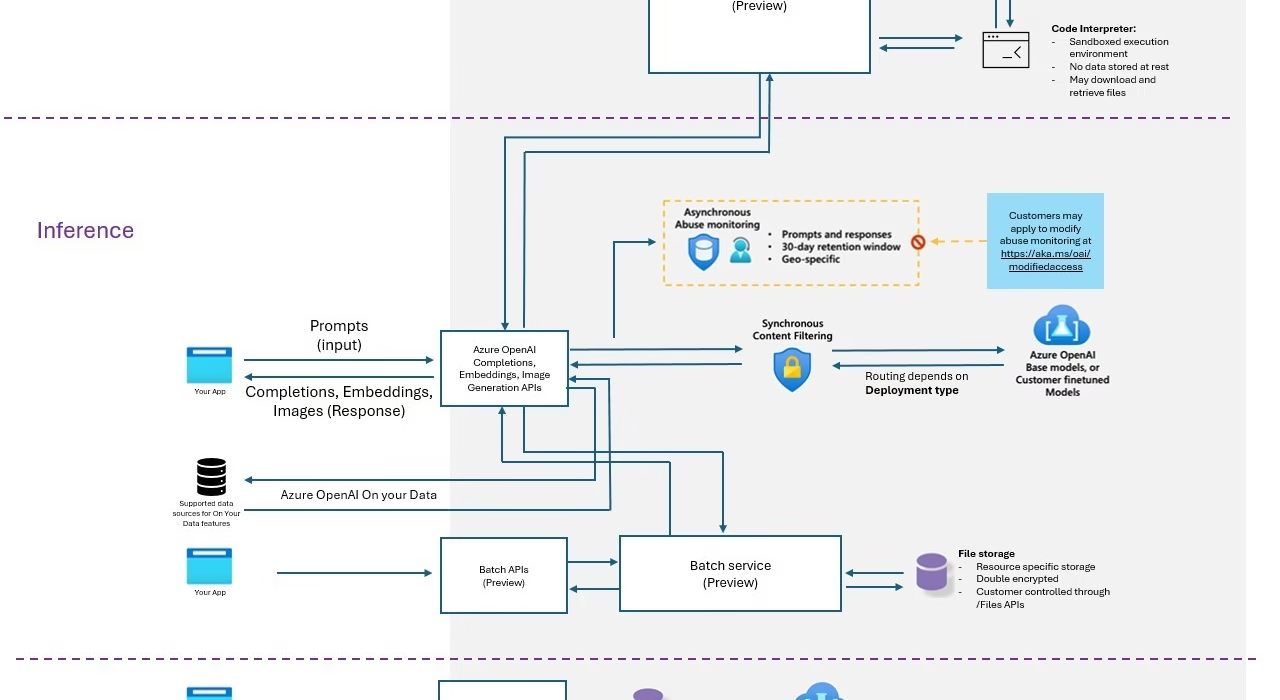

At its core, an AI model refusal error occurs when an AI model declines to respond to a prompt or request, often with a generic message like, “I cannot fulfill this request.” This can be particularly perplexing when working with robust platforms like Azure OpenAI, especially when explicit content filters are seemingly disabled.

Several factors can contribute to **Azure OpenAI model refusal errors**:

- Internal Content Safety Guardrails: Even when external content filtering is turned off, AI models have built-in safety behavior designed to prevent the generation of harmful or inappropriate content. These internal guardrails can sometimes be overzealous, blocking prompts that are short, ambiguous, or that inadvertently brush against sensitive topics. This is especially true for non-English inputs or those containing specialized jargon. [Source]

- Prompt Engineering Pitfalls: The way a prompt is phrased significantly influences the model’s response. Prompts that are vague, lack sufficient context, or contain phrasing that triggers safety policies can lead to a refusal, because the model may misinterpret the user’s intent. [Source]

- Rate Limiting and Quotas: Exceeding your allocated usage limits causes requests to be rejected with HTTP 429 (Too Many Requests). Strictly speaking, this is the service rejecting the call rather than the model refusing the prompt, but in an application that swallows errors it can look like a refusal or an incomplete response. [Source]

- Model Limitations and Biases: AI models, despite their sophistication, are not infallible. They have inherent limitations and can exhibit biases present in their training data. These constraints may cause them to refuse certain types of requests, particularly those that fall outside their trained scope. [Source]

To address these issues and **resolve Azure OpenAI model refusal errors**, consider the following tactics:

- Refine Your Prompts: Add clarity and context to your prompts. Avoid overly simplistic or terse inputs. The goal is to provide enough information for the model to accurately understand your intent. [Source]

- Iterative Testing: Experiment with different phrasing and structures for your prompts. By changing one element at a time, you can more easily pinpoint what is triggering the refusal.

- Consult Documentation: Regularly review the official documentation provided by Azure and OpenAI. This is your best resource for understanding content policies, best practices, and any updates to model behavior. [Source], [Source]

- Report Persistent Issues: If you encounter persistent refusals that you believe are unwarranted, consider reporting them to the model provider. Include detailed information about the prompt and the circumstances; this feedback is invaluable for improving future model performance. [Source]
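Before refining a prompt, it helps to confirm which kind of “refusal” you are actually seeing. The following is a minimal sketch, not an official client: it assumes a chat-completions-style JSON body with `choices`, `finish_reason`, and an optional `message.refusal` field, and the function name `classify_completion` is made up for this example. Check the field names against the API version you call.

```python
from typing import Any


def classify_completion(status_code: int, body: dict[str, Any]) -> str:
    """Return a coarse label for a chat-completion-style HTTP response.

    Field names (choices, finish_reason, message.refusal) are assumptions
    based on the public chat completions response format.
    """
    if status_code == 429:
        return "rate_limited"        # quota or throughput limit, not a refusal
    if status_code >= 400:
        return "request_error"       # inspect the error body for the cause
    choices = body.get("choices") or []
    if not choices:
        return "empty"
    choice = choices[0]
    if choice.get("finish_reason") == "content_filter":
        return "content_filtered"    # output was stopped by a filter
    message = choice.get("message") or {}
    if message.get("refusal"):
        return "model_refusal"       # the model itself declined
    if not (message.get("content") or message.get("tool_calls")):
        return "empty"
    return "ok"
```

Logging this label next to each prompt makes it easier to tell a rate limit from a filter hit from a genuine model refusal when you test prompt variations.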

Navigating the Nuances of Perplexity AI API Errors

Moving beyond Azure, let’s address common issues encountered when you **troubleshoot Perplexity AI API errors**. These errors often relate to how you interact with the API itself.

Key culprits behind Perplexity AI API errors include:

- API Key Authentication Failures: The most frequent cause of immediate denial is an incorrect, expired, or improperly formatted API key. This typically manifests as a 401 Unauthorized error, signaling that your request lacks proper credentials.

- Incorrect Request Parameters: A malformed request payload, typos in parameter names, or parameters in an unexpected format will lead to a 400 Bad Request error. The API cannot understand or process such requests.

- Network and Connectivity Problems: Sometimes the issue isn’t with your request but with the connection to the API. Wrong endpoint URLs, DNS resolution issues, or firewalls blocking access can prevent your requests from reaching the Perplexity AI servers.

- Overlooked Response Codes and Messages: Many problems persist simply because the details in the API response are ignored. Log and carefully read every error code and accompanying message; they often contain specific clues that point directly to the problem. [Source]

Here are recommended steps for effective troubleshooting:

- Verify API Keys: Double-check that your API key is valid, has the necessary permissions, and is correctly placed in the request headers, usually as an `Authorization` header with a `Bearer` token.

- Cross-Reference Documentation: Meticulously compare your API endpoint URLs and parameter names against the official Perplexity AI API documentation. Even a minor typo can cause a request to fail. [Source]

- Review Request Schema: Ensure your request adheres strictly to the structure and data formats specified in Perplexity AI’s API documentation. Pay attention to JSON formatting, data types, and required fields.
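The checks above can be sketched with the standard library alone. This example builds a request without sending it, so you can inspect the headers and payload first. The endpoint URL and the `sonar` model name are assumptions to confirm against the Perplexity API documentation, and `build_request` and `explain_status` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed endpoint; confirm it in the official Perplexity API docs.
API_URL = "https://api.perplexity.ai/chat/completions"

STATUS_HINTS = {
    400: "Bad Request: check parameter names, types, and JSON structure.",
    401: "Unauthorized: the API key is missing, expired, or malformed.",
    429: "Too Many Requests: slow down or check your quota.",
}


def build_request(api_key: str, prompt: str, model: str = "sonar") -> urllib.request.Request:
    """Build (but do not send) a chat request with a Bearer token header."""
    if not api_key or api_key != api_key.strip():
        raise ValueError("API key is empty or has stray whitespace")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def explain_status(code: int) -> str:
    """Map an HTTP status code to a first troubleshooting hint."""
    return STATUS_HINTS.get(code, f"HTTP {code}: see the response body for details.")
```

Passing the built request to `urllib.request.urlopen` would send it; catching `urllib.error.HTTPError` and feeding its `code` to `explain_status` gives a quick first diagnosis.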

Mastering GPT-4o Function Calling: When It Goes Wrong

Function calling is a powerful capability that allows models like GPT-4o to generate structured output that can be used to invoke external functions, thereby extending their utility beyond mere text generation. However, getting this to work smoothly can sometimes be challenging. Let’s explore how to **fix GPT-4o function calling not working**.

Several common pitfalls can disrupt GPT-4o’s function calling:

- Incorrect Function Schema Definition: The JSON schema you provide to define your functions must be precise. GPT-4o relies on this schema to understand the function’s name, its parameters, their types, and which ones are required. Any deviation from the expected structure or data types will lead to errors. [Source]

- Mismatched Arguments: Even with a perfect schema, the model might generate arguments that don’t match the function’s definition. This could involve incorrect data types (e.g., passing a string where an integer is expected), a missing required argument, or an argument that the function doesn’t accept.

- Ambiguous Function Descriptions: The descriptive text you provide for each function within the schema is crucial. If the description is vague or doesn’t accurately convey the function’s purpose and how it relates to likely user intents, the model may struggle to map the user’s request to the correct function or to generate appropriate arguments.

- Function Calling Not Enabled or Properly Configured: The model only attempts function calls when function definitions are actually sent with the request and tool use is not disabled. In the OpenAI-style chat API, for example, that means passing the `tools` parameter and not setting `tool_choice` to `"none"`; some platforms or deployments may require additional settings. If this configuration is missed, the model won’t even attempt to use functions.
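Several of these pitfalls can be caught before any request is sent. Below is a rough sketch of a linter for a single tool definition in the OpenAI-style `{"type": "function", "function": {...}}` shape. The helper `lint_tool`, the sample `weather_tool`, and the 20-character description threshold are all illustrative assumptions, not part of any SDK.

```python
from typing import Any

JSON_TYPES = {"string", "integer", "number", "boolean", "object", "array", "null"}


def lint_tool(tool: dict[str, Any]) -> list[str]:
    """Return a list of problems found in one tool definition (empty if none)."""
    problems = []
    if tool.get("type") != "function":
        problems.append('top-level "type" should be "function"')
    fn = tool.get("function") or {}
    if not fn.get("name"):
        problems.append("function.name is missing")
    # Arbitrary threshold: very short descriptions rarely guide the model well.
    if len(fn.get("description") or "") < 20:
        problems.append("function.description is missing or too short")
    params = fn.get("parameters") or {}
    if params.get("type") != "object":
        problems.append('parameters.type should be "object"')
    props = params.get("properties") or {}
    for name, spec in props.items():
        if spec.get("type") not in JSON_TYPES:
            problems.append(f"parameter {name!r} has unknown type {spec.get('type')!r}")
    for name in params.get("required", []):
        if name not in props:
            problems.append(f"required parameter {name!r} is not in properties")
    return problems


# Hypothetical tool used only to exercise the linter.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city, in the requested unit.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}
```

Running `lint_tool` over every entry in your `tools` list at startup surfaces typos such as `"int"` instead of `"integer"` long before the model is involved.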

When faced with these issues, try these debugging strategies:

- Validate Function Schemas Rigorously: Carefully review your JSON schemas for syntax errors. Ensure that parameter types (string, integer, boolean, object, array) are correctly specified and that all required parameters are indeed marked as such.

- Inspect API Logs: Examine the detailed logs of your API calls. Look for the specific function call attempts made by the model. This is the best way to see exactly which arguments the model generated and intended to pass to your functions.

- Isolate the Problem: Test with a very simple, well-defined function that you know should work. If this basic function call succeeds, the problem likely lies with a more complex function or its schema. If even the simple function fails, the issue might be with the overall function calling configuration or how you are passing the function definitions to the model.
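When inspecting what the model actually generated, a small checker can compare the arguments against the schema before your function runs. This is a shallow sketch, assuming the arguments arrive as a JSON string (as they do in OpenAI-style tool calls) and covering only top-level types and required fields; `check_arguments` is a hypothetical helper, and a full JSON Schema validator would be more thorough.

```python
import json
from typing import Any

# Maps JSON Schema type names to Python types for a shallow check.
PY_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(raw_arguments: str, parameters: dict[str, Any]) -> list[str]:
    """Compare model-generated arguments (a JSON string) with a parameter schema."""
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc.msg}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    errors = []
    props = parameters.get("properties", {})
    for name in parameters.get("required", []):
        if name not in args:
            errors.append(f"missing required argument {name!r}")
    for name, value in args.items():
        if name not in props:
            errors.append(f"unexpected argument {name!r}")
            continue
        type_name = props[name].get("type")
        expected = PY_TYPES.get(type_name)
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        bool_misuse = isinstance(value, bool) and type_name != "boolean"
        if expected and (bool_misuse or not isinstance(value, expected)):
            errors.append(f"argument {name!r} should be {type_name}")
    return errors
```

An empty list means the call is safe to dispatch; otherwise the messages can be logged or sent back to the model so it can correct the call.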

Troubleshooting Common Gemini Pro Tool Errors

Gemini Pro, like GPT-4o, supports “tools” which function similarly to function calling. Errors in this area can arise from how these tools are defined and utilized. Let’s look at **common Gemini Pro tool errors** and their resolutions.

Potential error scenarios and their remedies include:

- Tool Definition Errors: Mistakes in how you define a tool for Gemini Pro (its name, its description, or, critically, the schema for its input parameters) can prevent the model from using it effectively. If the model doesn’t understand what the tool is for or what information it needs, it won’t be able to invoke it correctly. [Source]

- Input Validation Issues: When Gemini Pro decides to use a tool, it generates arguments for it. If these arguments don’t match the expected format, are missing required fields, or have incorrect data types as defined in the tool’s schema, your application will encounter errors when trying to execute the tool. [Source]

- Model Misunderstanding Tool Capabilities: Sometimes, the model might not accurately grasp the full scope of what a tool can achieve. This can lead to scenarios where the model fails to use a tool when it’s appropriate, or conversely, attempts to use a tool for a task it’s not designed for.

Here are the corresponding solutions:

- Validate Tool Schemas: Ensure your tool definitions strictly adhere to the Gemini API’s requirements for tool schemas. This includes correct naming, descriptive text, and accurate specification of all parameters, their types, and whether they are mandatory. [Source]

- Implement Robust Input Validation: Within the code that executes your tools, add validation checks for the arguments received from the model. This allows you to gracefully handle incorrect arguments, log the issue, and provide feedback to the model or user.

- Provide Clear Tool Descriptions: Just like with GPT-4o, clear, concise, and explicit descriptions of a tool’s purpose and its parameters within the prompt or tool definition are essential for guiding Gemini Pro effectively. Aim to make it unambiguous what the tool does and how to use it. [Source]
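One way to implement the validation step is a dispatcher that never lets a bad tool call crash the application and instead returns an error payload that can be fed back to the model. The sketch below assumes a function call represented as a plain dict with `name` and `args` keys, which mirrors how Gemini function calls are commonly described; `get_stock_price`, `REGISTRY`, and `run_tool` are hypothetical names, and the returned price is a placeholder.

```python
from typing import Any, Callable


def get_stock_price(ticker: str) -> dict[str, Any]:
    """Hypothetical tool; a real one would query a data source."""
    if not isinstance(ticker, str) or not ticker.isalpha():
        raise ValueError("ticker must be a string of letters, e.g. 'GOOG'")
    return {"ticker": ticker.upper(), "price": 123.45}  # placeholder value


REGISTRY: dict[str, Callable[..., dict[str, Any]]] = {
    "get_stock_price": get_stock_price,
}


def run_tool(call: dict[str, Any]) -> dict[str, Any]:
    """Execute one function call and always return a response payload."""
    name = call.get("name")
    args = call.get("args") or {}
    tool = REGISTRY.get(name)
    if tool is None:
        return {"name": name, "response": {"error": f"unknown tool {name!r}"}}
    try:
        return {"name": name, "response": {"result": tool(**args)}}
    except (TypeError, ValueError) as exc:
        # TypeError covers missing or unexpected keyword arguments.
        return {"name": name, "response": {"error": str(exc)}}
```

Returning the error text as the tool’s response, rather than raising, gives the model a chance to retry with corrected arguments on the next turn.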

Decoding LangChain’s `BadRequestError: 400` Response

LangChain is a powerful framework for developing applications powered by language models. When working with LangChain, a `BadRequestError: 400` is a common signal that something is wrong with the request being sent to the underlying AI model. Let’s look at how to **understand the LangChain `BadRequestError: 400` response**.

This error typically indicates a malformed or invalid request. Common causes include:

- Malformed Requests to Underlying AI Models: LangChain acts as an abstraction layer. A 400 error surfaced through LangChain usually means that the request it constructed for the target AI model (like Azure OpenAI, GPT-4o, or Gemini Pro) violates that model’s API specification; the exception itself is typically raised by the provider’s SDK and passed up through LangChain. Causes include incorrect JSON formatting, invalid parameter values passed to the model, or improperly structured prompts that the model’s API rejects. [Source]

- Invalid Parameters Passed to LangChain Components: The error might also stem from LangChain’s own configuration, such as improperly defined tool schemas within LangChain, unsupported model parameters, or incorrect endpoint settings provided to LangChain’s wrappers for specific models. Note that an incorrect API key normally produces a 401 rather than a 400. [Source]

- Dependency Version Conflicts: In complex projects, incompatible versions of LangChain itself or its various dependencies can sometimes lead to unexpected errors in how requests are constructed or formatted, resulting in a 400 response.

To resolve these `BadRequestError: 400` issues within LangChain, follow these practical steps:

- Validate Schemas and Data Rigorously: Pay close attention to the schemas and data being passed through LangChain to the AI model. Verify that parameter names, data types, and required fields align with the documentation of the specific model API you are interacting with. [Source]

- Check and Update Dependencies: Ensure that LangChain and all its related libraries (e.g., `openai`, `langchain-community`, `langchain-core`) are on mutually compatible versions, preferably the latest. Consult LangChain’s documentation for recommended dependency versions.

- Review LangChain Logs: LangChain can emit detailed debug output. Examine it to understand the exact request that LangChain sent to the AI provider, then compare that request structure against successful examples or the official API documentation to identify discrepancies.
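When the 400 does occur, the exception object usually carries more detail than the one-line message. The sketch below pulls out whatever diagnostic fields are present without importing any particular SDK. It assumes the exception may expose `status_code` and `body` attributes, as provider SDK errors commonly do, and `describe_api_error` is a hypothetical helper name.

```python
import json
import logging
from typing import Any

logger = logging.getLogger("llm.errors")


def describe_api_error(exc: Exception) -> dict[str, Any]:
    """Extract whatever diagnostic fields an SDK exception carries."""
    details = {
        "type": type(exc).__name__,
        # These attributes are assumptions; missing ones simply become None.
        "status_code": getattr(exc, "status_code", None),
        "body": getattr(exc, "body", None),
        "message": str(exc),
    }
    logger.error("LLM request failed: %s", json.dumps(details, default=str))
    return details


# Typical use around a chain call (the chain object here is hypothetical):
# try:
#     chain.invoke({"question": "..."})
# except Exception as exc:
#     describe_api_error(exc)
```

The `body` field, when present, often names the offending parameter, which is usually the fastest route to the mismatch. Recent LangChain releases also expose a global debug switch (`set_debug(True)` in `langchain_core.globals`) that prints intermediate inputs and outputs; confirm the import path for the version you have installed.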

General Best Practices for Proactive AI API Error Handling

Beyond specific error types, adopting a proactive stance is key to building resilient AI applications. This involves anticipating potential issues and implementing strategies to mitigate them before they cause significant disruption.

Emphasize these best practices:

- Robust Logging and Monitoring: Implement comprehensive logging for every API request and response. Capture status codes, error messages, request payloads, and response bodies. This detailed audit trail is invaluable for diagnosing issues quickly and efficiently. [Source]

- Iterative Prompt Engineering and Testing: Develop your prompts incrementally. Test each prompt in isolation to identify potential refusal triggers or unexpected behaviors before integrating it into a larger application workflow. This systematic approach helps pinpoint problematic prompts early. [Source]

- Stay Updated on Documentation: The AI landscape evolves rapidly. Regularly review the official documentation for all AI models and frameworks you use. API specifications, best practices, and model behaviors can change, and staying current is essential. [Source]

- Implement Graceful Error Handling: Design your applications to handle errors gracefully. This includes implementing retry mechanisms for transient network issues, defining fallback strategies when an AI call fails, and providing clear, user-friendly error messages to the end-user rather than exposing raw API error details.
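The retry and fallback ideas can be sketched in a few lines. This is one possible shape, assuming exponential backoff with a little jitter; `TransientAPIError` is a hypothetical marker exception that your own code would raise for timeouts, 429s, or 5xx responses, and the default attempt count and delay are arbitrary.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientAPIError(Exception):
    """Hypothetical marker for retryable failures (timeouts, 429, 5xx)."""


def call_with_retries(
    fn: Callable[[], T],
    fallback: T,
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient failures with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                break  # out of attempts; fall through to the fallback
            # Delays grow as base_delay * 1, 2, 4, ... plus up to 10% jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    return fallback
```

Only errors explicitly marked as transient are retried; a 400 or 401 propagates immediately, since repeating a malformed or unauthorized request will not help. The `sleep` parameter is injectable so the behavior can be tested without real waiting.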

Conclusion: Building Resilient AI Applications

Encountering errors such as **model refusal errors, function call issues, or generic API errors** is not a sign of failure but an inherent part of working with cutting-edge AI technologies. By adopting a systematic, detective-like approach to debugging, armed with the knowledge and strategies outlined in this guide, developers can effectively navigate these challenges. Successfully overcoming these hurdles leads to the development of more robust, reliable, and powerful AI-driven applications. Continuous learning, meticulous testing, and providing feedback to model providers are key to advancing the AI ecosystem as a whole.

Frequently Asked Questions

- Q: What is the most common reason for an Azure OpenAI model refusal?

  A: While it can vary, vague or ambiguous prompts lacking sufficient context are frequent culprits. Even with content filters off, internal safety guardrails can misinterpret certain inputs.

- Q: How can I quickly check if my Perplexity AI API key is working?

  A: The quickest way is to make a simple, valid API call using your key in the correct header. If you get a 401 Unauthorized error, the key is likely the issue.

- Q: My GPT-4o function call isn’t working. What’s the first thing I should check?

  A: Start by meticulously validating the JSON schema for your function. Ensure all parameter types and requirements are precisely defined as per the API’s specifications.

- Q: Gemini Pro is not using my defined tool. What might be wrong?

  A: The model might not understand the tool’s purpose. Ensure the tool’s description is clear, concise, and accurately explains what it does and how it can be used. Also, verify the input schema is correctly defined.

- Q: I’m getting a `BadRequestError: 400` in LangChain. What’s the best way to debug this?

  A: Review the detailed logs provided by LangChain to inspect the exact request being sent to the AI model. Compare this structure against the model’s API documentation to find any mismatches or malformed data.

- Q: Are AI API errors always my fault?

  A: Not necessarily. While prompt engineering and configuration are often the cause, sometimes models have inherent limitations, or there might be transient issues with the API service itself. Understanding the possible causes helps in accurate diagnosis.

“`