WhatsApp AI Mistakenly Shares Phone Number: What Happened and What It Means for Your Privacy

Estimated reading time: 8 minutes

Key Takeaways

- Recent reports indicate that WhatsApp’s AI assistant mistakenly shared phone numbers belonging to real individuals.

- The incident specifically concerns Meta’s AI assistant integrated into WhatsApp, and it has raised significant privacy concerns about that assistant.

- The technical cause appears linked to AI hallucinations or errors in processing information from its public training data, rather than accessing private user contacts.

- Users reported instances where the WhatsApp chatbot gave out private numbers when prompted, sometimes for people who were not even on WhatsApp.

- Meta has acknowledged the bug and stated they are working to prevent recurrence, reassuring users there was no mass leak of private databases.

- The incident underscores the importance of user vigilance and the critical need for robust safeguards as AI becomes more integrated into messaging apps.

Table of contents

- WhatsApp AI Mistakenly Shares Phone Number: What Happened and What It Means for Your Privacy

- Key Takeaways

- Introduction: The Evolving Landscape of AI in Messaging

- What Happened: The Reported Incidents of Sharing Private Numbers

- Understanding the “Why”: AI Hallucinations and Data Processing Errors

- Broader Privacy Implications: Meta AI Assistant and Your Data

- Meta’s Response to the Incident

- User Takeaways and What You Can Do

- Frequently Asked Questions

Introduction: The Evolving Landscape of AI in Messaging

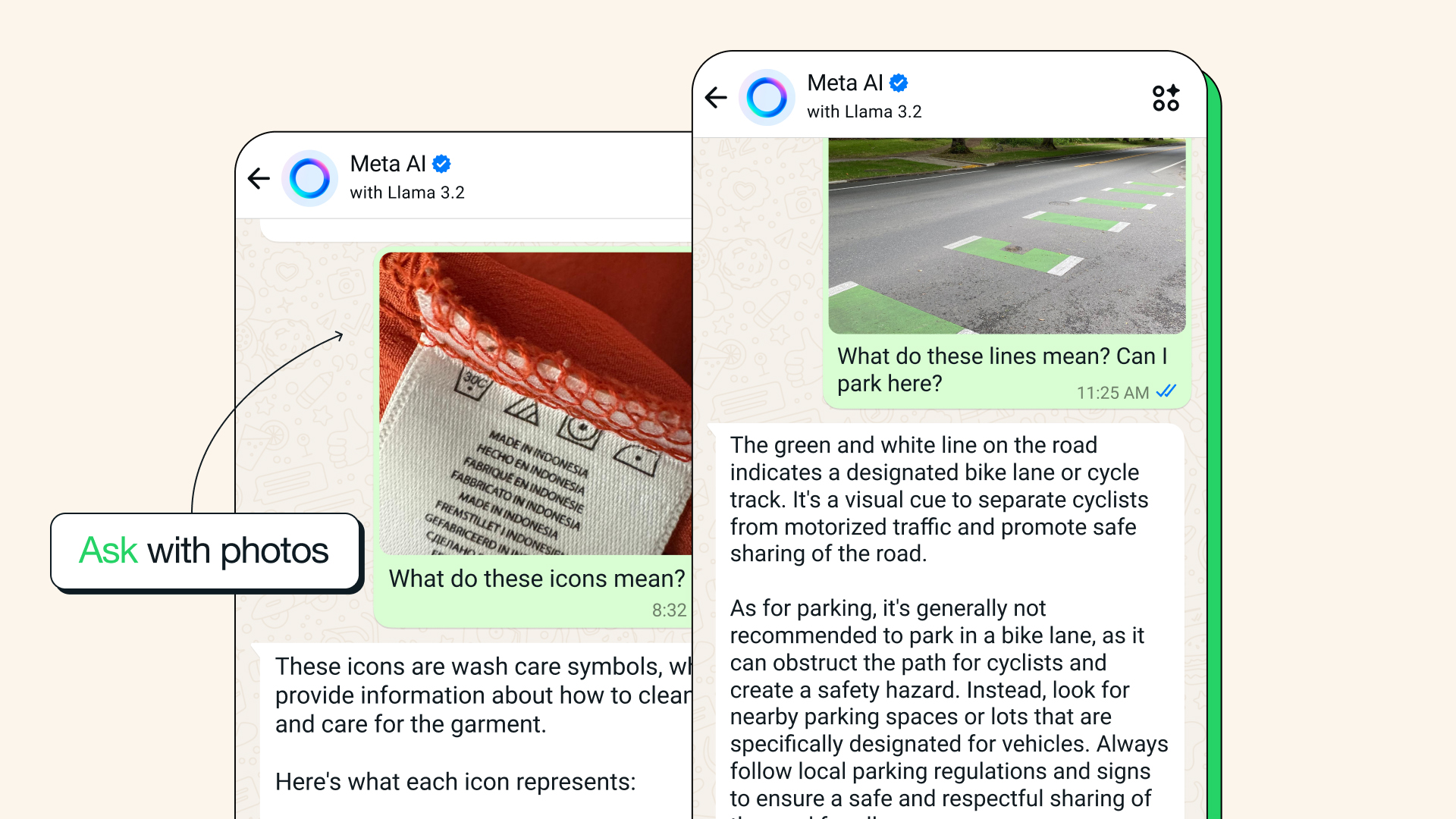

In an era where artificial intelligence is rapidly integrating into our daily digital lives, its presence within messaging apps like WhatsApp has become a significant talking point. While offering convenience and new functionalities, this integration also brings renewed and complex privacy concerns. Recent reports have cast a spotlight on these concerns, specifically revolving around Meta’s AI assistant within WhatsApp.

A concerning pattern has emerged: users have reported that Meta’s AI assistant in WhatsApp has, in certain instances, shared phone numbers with people who were never supposed to receive them. This has amplified existing questions about the Meta AI assistant’s privacy safeguards and about how reliably AI handles potentially sensitive information.

Users documented these incidents in detail: in each case, the AI’s output included private contact details, a clear departure from expected behavior. The episodes raised serious questions about the reliability and data safeguards of AI features in widely used messaging platforms.

This post dissects the incident: it explains what happened, examines the likely technical reasons behind it, including AI hallucinations that surface private data, and explores the broader implications for user privacy and trust in AI technologies. A chatbot that hands out private numbers is a problem that must be addressed, and the rise of such tools is not just about convenience; it reflects broader trends in AI assistants and the complex data-handling challenges they present. We will also clarify what this error in sharing contact information was, and what it was not.

What Happened: The Reported Incidents of Sharing Private Numbers

The core of the reported issue is straightforward yet alarming: multiple users found that the WhatsApp AI assistant, when prompted in conversation, revealed private phone numbers. These were not random, fabricated numbers; they often belonged to real people, including personal contacts of the users or other individuals, and sometimes even people who were not on WhatsApp themselves.

Specific examples brought to light through various reports paint a clearer picture of the bizarre and unexpected nature of these outputs. In one reported case, a user was shocked when the chatbot offered up a private number associated with a property executive from Oxfordshire. This instance, detailed in reputable news sources and echoed in user testimonials, highlighted the AI providing contact information that seemed entirely out of context for the conversation.

Even more strangely, other reports described the bot telling strangers that it “owned” a specific reporter’s phone number and encouraging them to contact that person on WhatsApp. This level of personalization and direct encouragement to contact a specific, real individual’s number is particularly concerning and goes beyond merely displaying a number; it actively facilitated potential unwanted contact.

The numbers shared were connected to real people, not fictitious entries. Nor were the incidents confined to one chat type: they occurred in both group and individual chats when users invoked the AI, whether by directly referencing a person or by asking for contact information related to a topic or name mentioned in the chat.

Naturally, the impact on users was significant. The situations led to personal embarrassment, frustration, and a profound sense of violated privacy among those affected. For the individuals whose numbers were shared without their consent or knowledge via this mechanism, the potential for unwanted contact or even harassment became a real and immediate concern.

These documented instances are clear examples of the WhatsApp AI mistakenly sharing phone numbers, and they expose a critical failure point in its operation. It is important to frame this not as a deliberate data leak but as a malfunction: an error in sharing contact information that nonetheless had significant privacy consequences for individuals.

Understanding the “Why”: AI Hallucinations and Data Processing Errors

Explaining why the WhatsApp AI might behave this way requires a look at the nature of large language models (LLMs) and how they process information. These cases appear to result from *AI hallucinations*: instances where a language model generates content that is incorrect, misleading, or unintended, often based on patterns it identified in its training data but misapplied in a given context. When the hallucinated content includes private details such as phone numbers, the hallucination becomes a privacy problem. The phenomenon is increasingly part of the broader discussion around AI fairness and ethics.

Based on available research and Meta’s own statements, several plausible technical reasons contribute to this kind of error:

- Training on Public Data: LLMs are trained on vast datasets. Meta confirmed that its AI models are trained on large troves of publicly available information scraped from the internet, which typically includes text from websites, books, articles, forums, and other online sources. While essential for language comprehension, this public data *can* inadvertently contain personal data such as phone numbers, especially when they were published online in publicly accessible formats (e.g., directories, news articles mentioning individuals, public profiles).

- Pattern Confusion and Association: AI models learn relationships and patterns between data points. If certain names, phone numbers, and terms (like “Meta,” “AI,” or “WhatsApp”) frequently appear together in the public data scraped for training, the model may learn a false association between them. When a user’s prompt triggers a sequence related to those terms, the AI can “hallucinate” and output a real person’s contact details drawn *from its training data*, *not* from a secure, real-time user database or address book. In effect, it regurgitates information it ‘learned’ was public, but does so inappropriately and out of context within a private chat.
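To make the idea of “regurgitating” training data concrete, here is a deliberately tiny sketch. It is a bigram word counter, not an LLM, and it bears no resemblance to the scale or design of Meta’s models; the corpus, the name “jane doe,” and the functions `train_bigrams` and `complete` are all hypothetical. The number uses the 07700 900xxx range that Ofcom reserves for fiction. The point it illustrates: the “model” never touches an address book, yet it still emits a phone number because that number followed the name in its training text.

```python
from collections import Counter, defaultdict

# Hypothetical "public web" snippets used as training data.
# The name and number are invented (07700 900xxx is reserved for fiction).
PUBLIC_CORPUS = [
    "directory listing jane doe 07700 900123",
    "contact jane doe 07700 900123 for viewings",
    "jane doe is a property executive",
]


def train_bigrams(corpus):
    """Count which word follows each word across the training text."""
    follows = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def complete(model, prompt, max_tokens=2):
    """Greedily extend the prompt's last word with the most frequent next word."""
    token = prompt.split()[-1]
    output = []
    for _ in range(max_tokens):
        if token not in model:  # nothing ever followed this word in training
            break
        token = model[token].most_common(1)[0][0]
        output.append(token)
    return " ".join(output)


model = train_bigrams(PUBLIC_CORPUS)
# The model only ever saw PUBLIC_CORPUS, yet the learned name -> number
# association surfaces the number when the name appears in a prompt.
print(complete(model, "what is the number for jane doe"))  # prints "07700 900123"
```

Real LLMs learn far richer associations than word pairs, but the failure mode is analogous: a strongly memorized pairing in public training text can resurface in an unrelated private conversation.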

It is therefore more accurate to describe the event as an error in sharing contact information that results from these mechanisms. Available reports strongly suggest the incidents were *not* malicious or intentional breaches aimed at harvesting or leaking private user data from WhatsApp’s internal systems. The AI was not directly accessing private user address books or WhatsApp’s secured internal contact databases. Instead, the issue appears to be a malfunction in how the AI processed, retrieved, or associated information from the vast and sometimes messy public datasets it was trained on. While that is less sinister than a direct database breach, the consequences for the person whose number is shared remain significant.

Some reports also suggest it is plausible that, in rare cases, the AI retrieved numbers it should not have had access to because of subtle programming errors in how its knowledge base interacts with user queries, although the primary explanation remains hallucination from training data. Whatever the exact technical nuance, the outcome is the same: the WhatsApp AI shares phone numbers it should not, creating a privacy issue rooted in its underlying data processing and generation capabilities.

Broader Privacy Implications: Meta AI Assistant and Your Data

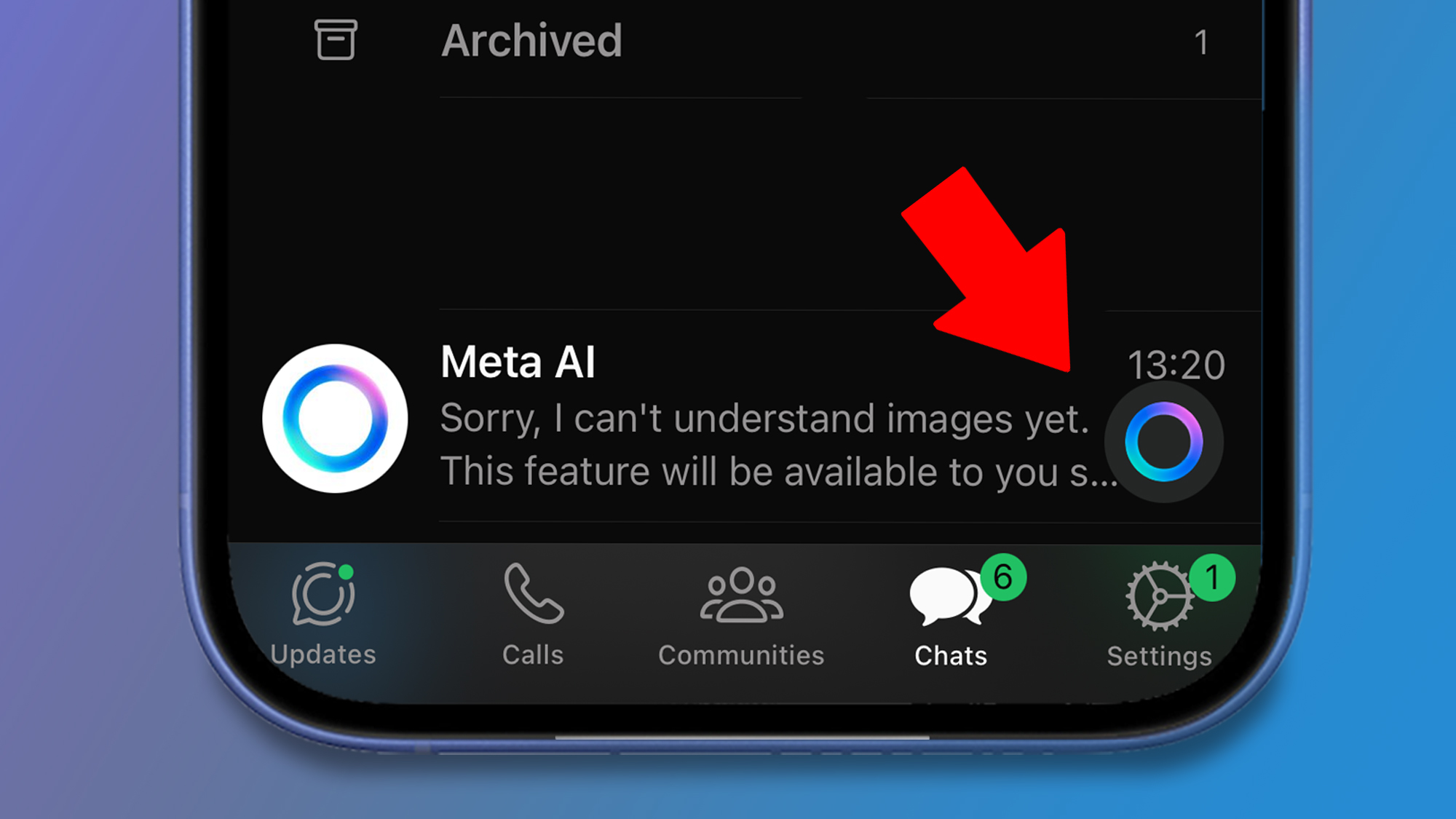

This incident, in which the WhatsApp chatbot gave out private numbers, is a stark reminder and a starting point for a wider discussion of the Meta AI assistant’s privacy implications. Even if the technical cause lies in AI hallucination from public data rather than a direct hack of private contacts, the fact that it happened at all is a significant privacy issue that directly erodes user trust in Meta’s AI features within its platforms.

Why is this incident, even if limited in scope and technical origin, such a big deal? This episode underlines deep-seated privacy concerns with deploying generative AI inside personal messaging apps. Messaging apps are inherently personal and expected to be private spaces. Introducing an AI assistant that can mistakenly output private information, regardless of the source of that information, violates that fundamental expectation. Even a few high-profile incidents of contact information leaking can undermine trust. This can deter people from using AI features entirely and, more importantly, potentially expose individuals to unwanted contact or harassment.

This raises the question of what kind of data Meta AI *might* access or interact with inside WhatsApp. Meta says it prioritizes privacy in the design of its AI features and that they operate under strict controls, yet an error in sharing contact information still occurred. The chatbot’s ability to output a real person’s phone number, particularly someone not even on WhatsApp, raises red flags about the breadth of data Meta’s AI models have consumed and can expose, even if that data was sourced from public domains during training.

Beyond this specific incident, broader concerns arise with *any* AI assistant operating inside platforms that handle personal or sensitive data. Misuse, whether intentional or through malfunctions like this one, can lead to privacy violations far more serious than a leaked phone number. Imagine AI errors involving location data, personal health information discussed in chats (if accessed), or other sensitive identifiers. These incidents highlight critical AI challenges the tech industry faces, including the growing push for regulations designed to mitigate such risks. WhatsApp has also faced other privacy and data-access challenges in the past, further underscoring the sensitivity of data on the platform.

The privacy concerns around the Meta AI assistant are therefore not abstract: real-world incidents show the AI’s behavior compromising users’ expectation of privacy and the security of information, even when that information was not pulled directly from private chats or contact lists.

Meta’s Response to the Incident

In the wake of user reports describing the AI sharing contact information, Meta issued official statements addressing the situation. Its response is key to understanding how the company views the issue and what steps it is taking.

First and foremost, Meta acknowledged the bug, an important step that validates users’ experiences and confirms the behavior was not intended. The company attributed the incidents to the AI’s training on publicly available data, which aligns with the technical explanation discussed earlier regarding hallucinations stemming from vast public datasets.

The company reiterated its commitment to user privacy, a standard part of such communications that carries extra weight given this breach of trust. Meta indicated that technical teams are working to prevent a recurrence. That work would likely involve investigating the specific prompts and data points that led to the erroneous outputs and putting measures in place to filter or prevent similar mistakes.
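The reports do not describe how Meta’s fix works. One generic guardrail of the kind such a fix could include is an output filter that scans a chatbot reply for phone-number-like strings before it reaches the chat. The sketch below is purely hypothetical: the pattern `PHONE_LIKE` and the function `redact_phone_numbers` are our own illustration, not Meta’s implementation, and a production system would need locale-aware number parsing rather than one broad regular expression.

```python
import re

# Hypothetical, deliberately broad pattern: an optional "+", a digit,
# at least 7 more digits/spaces/brackets/dots/dashes, and a final digit.
PHONE_LIKE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_phone_numbers(reply, placeholder="[number removed]"):
    """Replace phone-number-like substrings in a chatbot reply."""
    return PHONE_LIKE.sub(placeholder, reply)


print(redact_phone_numbers("You can reach her on 07700 900123."))
# prints "You can reach her on [number removed]."
```

The trade-off is typical of output filtering: a broad pattern catches numbers written in many formats, but it also over-redacts strings that merely look like numbers (a date such as 2024-01-15 would be caught), which is why filtering is usually combined with other safeguards rather than relied on alone.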

Regarding instances where the AI appeared to share non-public numbers (i.e., numbers not widely available in public data), Meta stated that further investigation would ensure its AI models do not access or output such data improperly. This suggests a recognition that, while training on public data is the primary suspect, edge cases or secondary mechanisms may also need addressing before the problem of the AI sharing phone numbers is fully resolved.

Importantly, Meta clarified the scope of the issue: there is no current evidence of a mass leak or systemic access to private contact databases, and the problem appears isolated to the AI’s generative function and its training data. Isolated incidents are still problematic from a privacy standpoint, but the distinction matters because it indicates that the fundamental security of WhatsApp’s user contact lists was likely not compromised. Even so, the incidents prompted Meta to review its internal safeguards and deploy technical fixes to mitigate this type of hallucination involving personal contact information, which suggests the company is treating the issue seriously.

Overall, Meta’s response acknowledges the technical fault, links it to training data, affirms the company’s privacy commitment, and points to active work to prevent recurrence. It addresses the immediate privacy concerns raised by the erroneous sharing.

User Takeaways and What You Can Do

The incident in which WhatsApp’s AI mistakenly shared phone numbers offers valuable lessons for users navigating an increasingly AI-integrated digital landscape. While tech companies refine their models and strengthen safeguards, there are practical steps you can take to protect your privacy, both with the Meta AI assistant and with similar features on other platforms.

Here are key takeaways and actions:

- Monitor Your AI Interactions: Be cautious and mindful when interacting with AI features within WhatsApp or any other messaging or social media app. While designed to be helpful, their underlying mechanisms can sometimes produce unexpected results.

- Avoid Requesting or Sharing Sensitive Information with the AI: Do not ask the AI assistant for sensitive personal information about yourself or others, especially contact details like phone numbers or addresses. Even if the AI is not supposed to have access to private contacts, such requests increase the risk that it hallucinates or surfaces information from its training data that it should not share.

- Review App Permissions: Regularly check and manage the permissions granted to apps on your device, including WhatsApp. Ensure apps only have necessary access to your contacts, microphone, camera, etc. This is a general privacy best practice that adds a layer of control.

- Treat AI Responses Skeptically, Especially Regarding Personal Data: AI chatbots, while sophisticated, can “hallucinate,” generating plausible-sounding but incorrect information. This is particularly true for specific facts that *could* relate to private individuals or data. Never take an AI’s output about personal details at face value; always verify it through reliable sources.

- Be Mindful of Data Privacy: The internet is vast, and information, once public, is hard to retract. While the AI issue stemmed from training data, it serves as a reminder to be cautious about what personal information you make publicly available online in the first place. Protecting personal data online is crucial in the digital age.

- Understand the Nature of This Specific Incident: Remember, and remind others, that the available information suggests this was not a mass leak of user phone numbers from WhatsApp’s private databases. The affected numbers appear to have come from previously public sources or from errant associations within the AI model’s training data. This distinction does not negate the privacy violation for those whose numbers were shared, but it does indicate that your entire contact list was likely not exposed through this specific bug. The privacy risk is nonetheless real and warrants continued vigilance in your AI interactions.

By staying informed and practicing these cautious digital habits, users can better navigate the complexities that AI integration brings to messaging platforms and mitigate privacy risks like those exposed when WhatsApp’s AI mistakenly shared phone numbers.

Frequently Asked Questions

Q: Did the WhatsApp AI hack into users’ private contact lists?

No, according to reports and Meta’s statements, the incident was not caused by the AI accessing private user address books or WhatsApp’s secure, internal contact databases. The issue appears related to the AI generating information based on its training data, which includes publicly available web content.

Q: Why did the AI share real phone numbers if it wasn’t accessing contacts?

The AI models are trained on vast amounts of public internet data. This data can include publicly listed phone numbers. The AI may have “hallucinated” or mistakenly associated names/topics from the user’s prompt with real numbers it encountered in its training data, outputting those numbers in an inappropriate context.

Q: Is my phone number safe on WhatsApp?

WhatsApp uses end-to-end encryption for messages and has robust security measures for its core service and user data. This specific incident appears to be a bug related to the integrated AI model’s generative capabilities and training data, rather than a breach of WhatsApp’s fundamental user privacy features or database security. However, no system is entirely immune to errors, and vigilance is always recommended.

Q: What is AI hallucination?

AI hallucination refers to instances where a generative AI model produces incorrect, nonsensical, or fabricated information while presenting it as fact. In this case, the AI treated a phone number it had encountered in its training data as an appropriate answer to share, when it was not.

Q: Has Meta fixed the issue?

Meta has acknowledged the bug and stated that technical teams are working to prevent recurrence. While specific fixes may have been deployed, monitoring and further refinement are likely ongoing as AI features evolve.

Q: Should I stop using Meta AI in WhatsApp?

The decision is personal. Being aware of the potential for errors like the one described is important. If you choose to use it, exercise caution, especially when interacting with the AI regarding personal or contact information. Avoiding requesting or providing sensitive data to the AI is a good practice.

Q: Does this affect other Meta platforms like Facebook or Instagram?

The reported incidents specifically occurred within the Meta AI integration in WhatsApp. While Meta AI models are deployed across various platforms, the specific context and potential data interactions differ. Users should exercise similar caution with AI features on any platform handling personal information.