AI Regulation 2026: The Emerging Framework and Global Updates

Estimated reading time: 8 minutes

Key Takeaways

- The AI Regulation 2026 framework is coalescing from transatlantic legislative efforts, not from a single global law.

- Key Europe-US AI law updates are driving harmonization, with the EU phasing in the AI Act and the US pursuing federal preemption of state laws.

- Technical pillars such as a proposed Content Authenticity Act and deepfake transparency standards are essential for transparency and trust.

- Organizations should act now: audit their AI systems, monitor regulatory developments, and integrate compliance into product development and cybersecurity.

- Practical tools like the NIST AI Risk Management Framework bridge regulatory requirements with operational implementation.

Table of contents

- AI Regulation 2026: The Emerging Framework and Global Updates

- Key Takeaways

- Defining the AI Regulation 2026 Framework

- The Transatlantic Landscape: Europe US AI Laws Update

- The Content Authenticity Act: A Technical Pillar for Transparency

- Deepfake Transparency Standards: Combating Synthetic Media

- Synthesis: Integrating Elements into the 2026 Framework

- Actionable Implications for Professionals

- Frequently Asked Questions

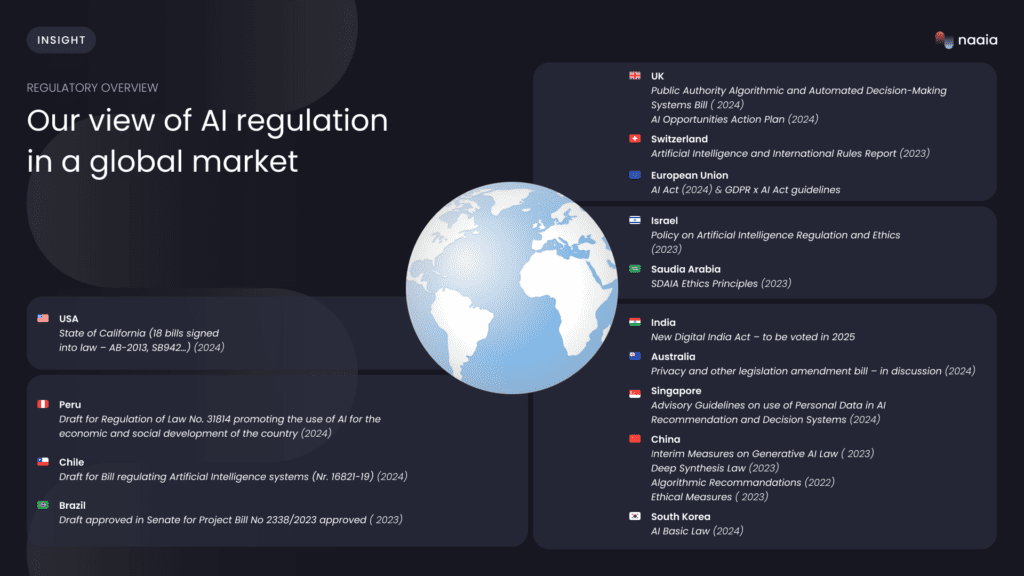

As of January 2026, the global AI governance landscape is at a pivotal moment. The AI Regulation 2026 framework is emerging as a critical benchmark, shaped by intense legislative activity rather than by a single, unified global standard. While no cohesive global “AI Regulation 2026 Framework” exists yet, current developments, driven by key Europe-US AI law updates, point decisively toward harmonized standards this year. Are you prepared for the regulatory landscape as it solidifies in 2026?

For policymakers, industry leaders, and compliance teams, understanding these evolving regulations is urgent amid rapid AI advancements. The window to shape strategy and build compliant systems is now.

Defining the AI Regulation 2026 Framework

The AI Regulation 2026 framework refers to the projected set of standards and principles emerging from 2025-2026 legislative actions across major economies. Its goals are harmonization, risk management, and fostering innovation within clear guardrails. The “framework” matters as a target timeline: it shapes current efforts such as the EU’s phased implementation and US federal initiatives, making it the point at which transatlantic policies are converging.

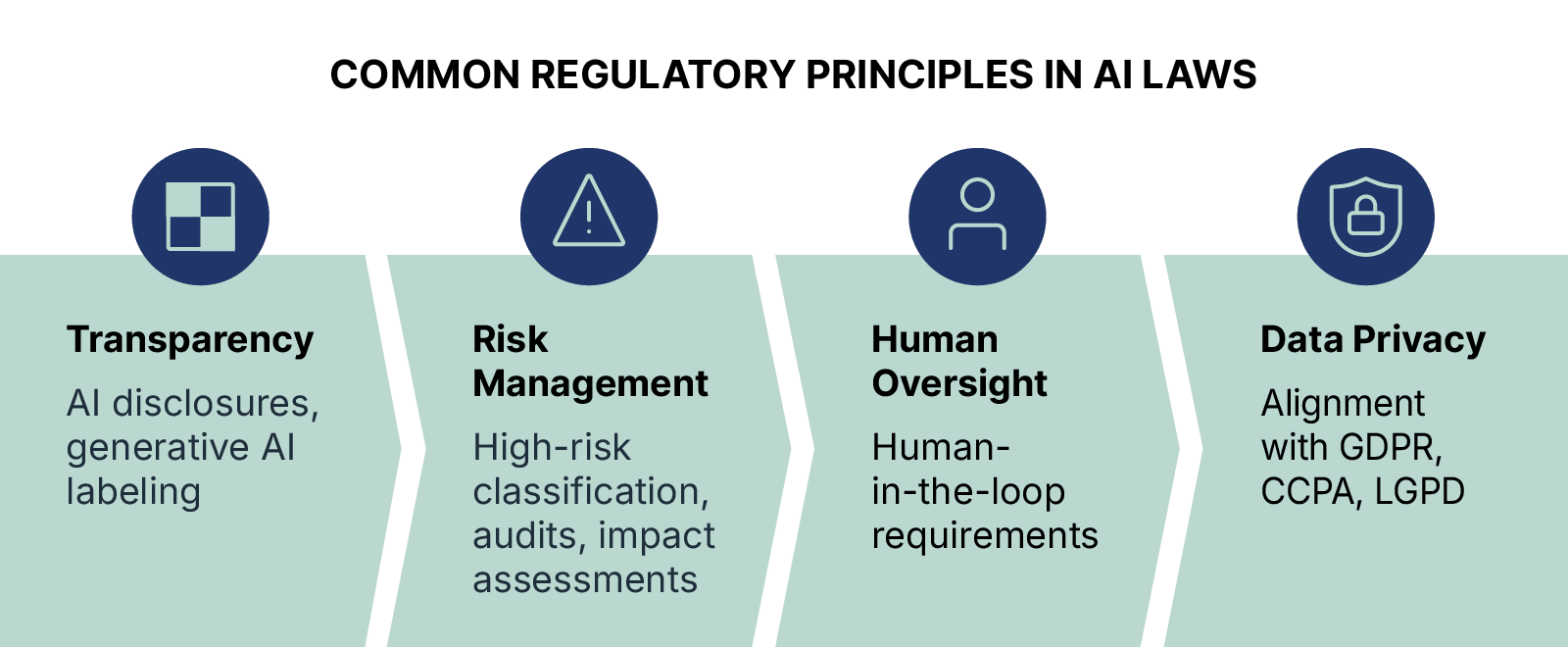

In practice, these goals translate into:

- Risk-based approaches to categorize AI systems (e.g., tiered obligations by use case, plus transparency duties for general-purpose AI (GPAI) models).

- Robust data governance, including published summaries of training data.

- Innovation-friendly compliance mechanisms.
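To make the risk-based idea concrete, here is a minimal Python sketch of how a team might triage an internal AI inventory into tiers. It assumes simplified tier names loosely modeled on the EU AI Act (prohibited, high-risk, limited-risk with transparency duties, minimal-risk) and a hypothetical, heavily abridged set of use-case labels. `AISystem`, `classify`, and `gpai_duties` are illustrative names, not part of any official tool, and real scoping requires legal review of the regulation itself.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """Simplified tiers loosely modeled on the EU AI Act (illustrative only)."""
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk (transparency duties)"
    MINIMAL = "minimal-risk"


# Hypothetical, heavily abridged use-case labels; real scoping needs legal review.
PROHIBITED_USES = {"social_scoring", "subliminal_manipulation"}
HIGH_RISK_USES = {"hiring", "credit_scoring", "critical_infrastructure"}
TRANSPARENCY_USES = {"chatbot", "synthetic_media"}


@dataclass
class AISystem:
    name: str
    use_case: str
    # True if the organization supplies the underlying general-purpose model.
    provides_gpai_model: bool = False


def classify(system: AISystem) -> RiskTier:
    """Assign a tier from the intended use, checking the strictest tier first."""
    if system.use_case in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if system.use_case in HIGH_RISK_USES:
        return RiskTier.HIGH
    if system.use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def gpai_duties(system: AISystem) -> list[str]:
    """GPAI provider duties apply on top of, not instead of, the use-case tier."""
    if not system.provides_gpai_model:
        return []
    return ["publish training-data summary", "maintain technical documentation"]


if __name__ == "__main__":
    bot = AISystem("support-bot", "chatbot", provides_gpai_model=True)
    print(bot.name, classify(bot).value, gpai_duties(bot))
```

GPAI duties are modeled as a separate check because they attach to the provider of the general-purpose model, independently of the tier its downstream use case falls into.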

While not formally codified as one law, the framework is actively shaped by initiatives such as the EU AI Act’s tiered regulatory approach, whose obligations are being phased in. Obligations for general-purpose AI (GPAI) models have applied since August 2025, requiring providers to publish detailed summaries of their training data (source: Baker Donelson).
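As a sketch of the bookkeeping behind such a summary, the snippet below aggregates a hypothetical internal training-data inventory by source category. The record fields (`name`, `source_type`, `tokens`) and the category labels are assumptions for illustration. This is not the European Commission’s official summary template, only one way to compute the totals a team would need before filling one in.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DatasetRecord:
    name: str
    source_type: str  # hypothetical categories, e.g. "web_crawl", "licensed", "public_dataset"
    tokens: int       # size measure; tokens are an assumption, bytes or documents work too


def summarize(records: list[DatasetRecord]) -> dict[str, dict]:
    """Group an internal training-data inventory by source type with size shares."""
    groups = defaultdict(lambda: {"datasets": [], "tokens": 0})
    for rec in records:
        group = groups[rec.source_type]
        group["datasets"].append(rec.name)
        group["tokens"] += rec.tokens
    total = sum(g["tokens"] for g in groups.values())
    for group in groups.values():
        # Share of the total corpus, rounded for a human-readable summary.
        group["share"] = round(group["tokens"] / total, 3) if total else 0.0
    return dict(groups)


if __name__ == "__main__":
    inventory = [
        DatasetRecord("crawl-2024", "web_crawl", 600),
        DatasetRecord("news-licence", "licensed", 300),
        DatasetRecord("crawl-2025", "web_crawl", 100),
    ]
    for source, info in summarize(inventory).items():
        print(source, info)
```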

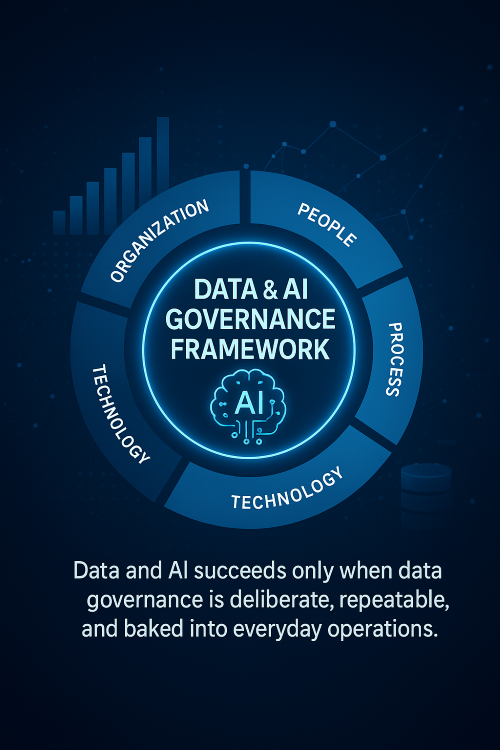

Organizations are preparing with established frameworks such as the NIST AI Risk Management Framework, mapping requirements across security, legal, and governance functions (source: Wiz Academy). This practical mapping is a key step toward the AI Regulation 2026 framework.
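A requirements register is one simple way to do this mapping. The sketch below tags each obligation with one of the four core functions of NIST AI RMF 1.0 (GOVERN, MAP, MEASURE, MANAGE) and an owning team, then reports gaps. The requirement texts, function assignments, and team names are hypothetical examples, not an official crosswalk between the AI Act and the RMF.

```python
from dataclasses import dataclass
from typing import Optional

# The four core functions defined in NIST AI RMF 1.0.
RMF_FUNCTIONS = ("GOVERN", "MAP", "MEASURE", "MANAGE")


@dataclass
class Requirement:
    source: str                  # e.g. "EU AI Act (GPAI)"; labels are illustrative
    text: str
    rmf_function: str            # expected to be one of RMF_FUNCTIONS
    owner: Optional[str] = None  # e.g. "security", "legal", "governance"


def find_gaps(register: list[Requirement]) -> dict[str, list[str]]:
    """Report unowned requirements, unknown function tags, and RMF functions with nothing mapped."""
    covered = {r.rmf_function for r in register}
    return {
        "unowned": [r.text for r in register if not r.owner],
        "unknown_function": [r.text for r in register if r.rmf_function not in RMF_FUNCTIONS],
        "uncovered_functions": [f for f in RMF_FUNCTIONS if f not in covered],
    }


if __name__ == "__main__":
    register = [
        Requirement("EU AI Act (GPAI)", "Publish training-data summary", "GOVERN", "legal"),
        Requirement("EU AI Act (GPAI)", "Maintain technical documentation", "MAP", "governance"),
        Requirement("Internal policy", "Red-team models before release", "MEASURE"),
    ]
    print(find_gaps(register))
```

Keeping gaps as plain lists makes the register easy to feed into whatever ticketing or GRC tool a team already uses.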

The Transatlantic Landscape: Europe US AI Laws Update

The path to 2026 is paved by significant Europe-US AI law updates. In the EU, the focus remains on the AI Act’s tiered regulatory approach, with key obligations phasing in from August 2025. The European Commission’s November 2025 “digital omnibus” legislative proposals, which aim to ease certain compliance obligations, are also key (sources: Baker Donelson; Morgan Lewis).

In the US, the evolving patchwork of state AI laws is giving way to federalization. A December 2025 White House executive order established a national policy framework for AI intended to preempt conflicting state laws. It creates an AI Litigation Task Force to challenge inconsistent state regulations and includes restrictions on federal funding for states with “onerous AI laws” (sources: White House; HK Law). Together, these Europe-US updates are creating a new baseline for global operations.

Convergences (e.g., a shared emphasis on transparency and risk management) and divergences (the EU’s comprehensive legislation vs. US executive-driven federal preemption) form the building blocks of the AI Regulation 2026 framework. The table below summarizes these dynamics:

| Aspect | EU Approach | US Approach |
|---|---|---|
| Core Mechanism | Comprehensive legislation (AI Act) | Executive order & federal preemption |
| Timeline | Phased implementation from Aug 2025 | Policy framework effective Dec 2025 |
| Key Focus | Risk-based tiers, GPAI transparency | National coherence, innovation pace |
| Enforcement | Member-state authorities, EU Commission | Federal agencies, Litigation Task Force |

As one regulatory expert put it: “The transatlantic dialogue is setting the de facto standards for AI governance worldwide, making 2026 a critical inflection point.”

The Content Authenticity Act: A Technical Pillar for Transparency

Digital provenance efforts are critical, although a formal “Content Authenticity Act” remains proposed legislation in current sources, so it is best framed as part of broader transparency mandates. Conceptually, such an act would mandate transparency for AI-generated content through mechanisms like:

- Content credentials following the C2PA (Coalition for Content Provenance and Authenticity) standard: cryptographically signed metadata chains that record creation, edits, and AI involvement.

- Watermarking: imperceptible signals embedded in the content that detection tools can read to verify provenance.
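The “metadata chain” idea behind content credentials can be illustrated with a small hash-chained log. Each entry records an action, whether AI was involved, a hash of the asset after that action, and the hash of the previous entry, so altering any earlier entry breaks verification. This is a simplified sketch of the concept only, not the C2PA manifest format, which uses embedded containers and certificate-based signatures; the HMAC key and the helper names are hypothetical stand-ins.

```python
import hashlib
import hmac
import json

# Hypothetical demo key: real C2PA manifests are signed with certificate-based
# credentials, not a shared HMAC secret.
SIGNING_KEY = b"demo-key"
FIELDS = ("action", "actor", "ai_generated", "asset_sha256", "prev")


def _digest(body: dict) -> str:
    """Hash an entry's fields deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def _sign(entry_hash: str) -> str:
    return hmac.new(SIGNING_KEY, entry_hash.encode(), hashlib.sha256).hexdigest()


def append_action(chain: list[dict], action: str, actor: str,
                  ai_generated: bool, asset: bytes) -> list[dict]:
    """Return a new chain with one entry recording the action and the resulting asset hash."""
    body = {
        "action": action,
        "actor": actor,
        "ai_generated": ai_generated,
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "prev": chain[-1]["hash"] if chain else None,
    }
    entry_hash = _digest(body)
    return chain + [dict(body, hash=entry_hash, sig=_sign(entry_hash))]


def verify(chain: list[dict], asset: bytes) -> bool:
    """Check links, hashes, and signatures, and that the last entry matches the asset."""
    if not chain:
        return False
    prev = None
    for entry in chain:
        body = {k: entry[k] for k in FIELDS}
        if body["prev"] != prev or entry["hash"] != _digest(body):
            return False
        if not hmac.compare_digest(entry["sig"], _sign(entry["hash"])):
            return False
        prev = entry["hash"]
    return chain[-1]["asset_sha256"] == hashlib.sha256(asset).hexdigest()


def involves_ai(chain: list[dict]) -> bool:
    """True if any recorded step was AI-generated (the fact a label would disclose)."""
    return any(entry["ai_generated"] for entry in chain)


if __name__ == "__main__":
    chain = append_action([], "created", "image-model", True, b"v1")
    chain = append_action(chain, "cropped", "editor", False, b"v2")
    print(verify(chain, b"v2"), involves_ai(chain))
```

Binding the final entry to a hash of the asset is what lets a verifier notice edits made outside the recorded chain.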

This is vital for trust in media (e.g., preventing misinformation), creative industries (protecting IP), and information ecosystems (platform liability for unlabeled deepfakes).

In policy terms, it is a technical answer to gaps in GPAI transparency under the EU AI Act rules now in effect (source: Baker Donelson), with global ripple effects via NIST frameworks (source: Wiz Academy). A Content Authenticity Act would thus be a foundational element of the broader framework.

Deepfake Transparency Standards: Combating Synthetic Media

Deepfake transparency standards, a subset of these authenticity efforts, are regulatory and technical requirements for disclosing AI-generated synthetic media. They encompass mandatory labels, platform detection obligations, and creator liability.

Responses include:

- Mandatory disclosure (e.g., visible watermarks or metadata on deepfakes).

- Detection technology (AI classifiers scanning for anomalies like unnatural eye movements).

- Platform duties (e.g., scanning uploads, removing unlabeled content).
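The platform duties above can be combined into a simple triage rule. In this sketch, an upload is labeled when provenance data or the creator’s own disclosure marks it as AI-generated, and held for human review when only a deepfake classifier is suspicious. The `Upload` fields, the 0.8 threshold, and the decision names are assumptions; real thresholds would depend on how a given classifier is calibrated.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    PUBLISH_WITH_LABEL = "publish with AI label"
    HOLD_FOR_REVIEW = "hold for human review"


@dataclass
class Upload:
    provenance_says_ai: bool    # e.g. read from content credentials, if present
    creator_disclosed_ai: bool  # creator declared the content AI-generated
    detector_score: float       # 0.0-1.0 from some deepfake classifier (hypothetical)


REVIEW_THRESHOLD = 0.8  # hypothetical; depends on classifier calibration


def moderate(upload: Upload) -> Decision:
    """Label disclosed or provenance-marked AI content; escalate classifier-only suspicion."""
    if upload.provenance_says_ai or upload.creator_disclosed_ai:
        return Decision.PUBLISH_WITH_LABEL
    if upload.detector_score >= REVIEW_THRESHOLD:
        return Decision.HOLD_FOR_REVIEW
    return Decision.PUBLISH


if __name__ == "__main__":
    print(moderate(Upload(False, False, 0.93)).value)
```

Routing classifier-only hits to human review rather than automatic removal is a deliberate choice: detectors produce false positives, and wrongly removing authentic media carries its own costs.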

These standards link directly to the mechanisms envisioned for a Content Authenticity Act, such as C2PA credentials for deepfake provenance.

The implications are significant: potential fines for non-compliance and a crucial role in protecting elections and media integrity. These standards fit into the AI Regulation 2026 framework as EU GPAI training-data summaries and US federal policies pave the way (sources: Baker Donelson; White House).

Synthesis: Integrating Elements into the 2026 Framework

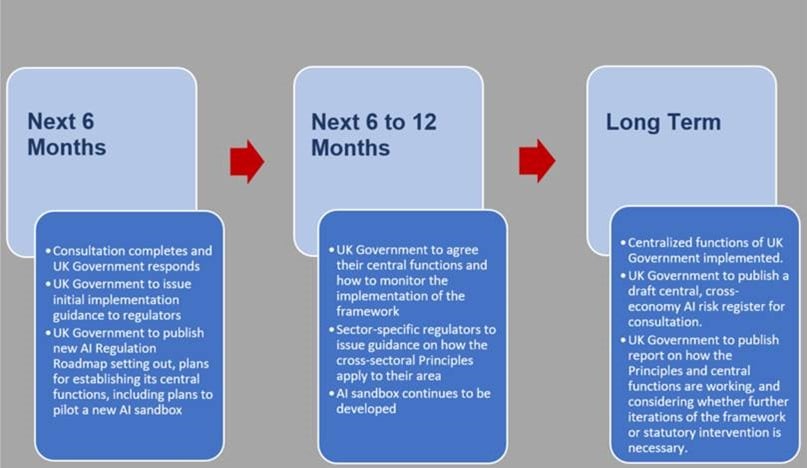

A proposed Content Authenticity Act and deepfake transparency standards are foundational for transparency within the broader AI Regulation 2026 framework. EU AI Act implementation (phased from 2025), the US federal executive order (December 2025), and the “digital omnibus” proposals trace a trajectory from disparate laws toward cohesive harmonization in 2026. The UK is also shaping its own distinct path, as explored in our analysis of AI regulations in the UK.

The NIST AI Risk Management Framework serves as a practical bridge for mapping these requirements (source: Wiz Academy), highlighting convergences like risk tiers and transparency.

Actionable Implications for Professionals

- For Compliance Teams: Conduct AI audits that map systems to EU AI Act GPAI rules and US federal preemption (sources: Baker Donelson; HK Law). Proactively address AI fairness and ethics to reduce bias.

- For Government Affairs: Monitor the White House AI Litigation Task Force and EU Commission proposals closely.

- For Product Development: Embed deepfake transparency standards using C2PA content credentials and watermarking, the mechanisms envisioned for a Content Authenticity Act. Emphasize proactive ethical AI design, such as training data transparency summaries. For broader context, see our guide on how AI is transforming businesses.

- For Cybersecurity: Integrate regulatory mandates into a robust defense strategy. The evolving threat landscape, detailed in our cybersecurity threats analysis, makes this essential. Next-generation practices are outlined in our look at AI cyber defense.

Monitoring resources: the EU AI Act portal, the White House AI policy site, and NIST AI RMF updates. Stay informed on the latest Europe-US AI law updates and the trajectory of the AI Regulation 2026 framework.

Frequently Asked Questions

What is the AI Regulation 2026 Framework?

It’s the projected set of standards emerging from 2025-2026 legislative actions across major economies, aiming for harmonization, risk management, and innovation within guardrails. It’s not a single law but a convergence point influenced by EU and US updates.

How are Europe and US updating their AI laws?

The EU is implementing the AI Act with phased rules from August 2025, focusing on risk-based tiers and GPAI transparency. The US is federalizing policy via a December 2025 executive order, preempting state laws and establishing a national framework.

What is the Content Authenticity Act?

It refers to proposed legislation that would mandate transparency for AI-generated content, using mechanisms such as content credentials and watermarking to prove origin and edits. It addresses gaps in GPAI transparency under regulations like the EU AI Act.

Why are Deepfake Transparency Standards important?

They combat synthetic media by requiring disclosure, detection, and platform duties, crucial for preventing misinformation, protecting IP, and ensuring media integrity, especially in elections.

What should organizations do to prepare?

Conduct AI audits, monitor regulatory updates, integrate transparency standards into product development, and strengthen cybersecurity. Using frameworks like NIST AI RMF can help map requirements effectively.